Meeting Summarization

Meeting summarization is the process of creating a concise summary of a meeting based on audio or video recordings.

Papers and Code

ContextEvolve: Multi-Agent Context Compression for Systems Code Optimization

Feb 01, 2026

Large language models are transforming systems research by automating the discovery of performance-critical algorithms for computer systems. Despite plausible codes generated by LLMs, producing solutions that meet the stringent correctness and performance requirements of systems demands iterative optimization. Test-time reinforcement learning offers high search efficiency but requires parameter updates infeasible under API-only access, while existing training-free evolutionary methods suffer from inefficient context utilization and undirected search. We introduce ContextEvolve, a multi-agent framework that achieves RL-level search efficiency under strict parameter-blind constraints by decomposing optimization context into three orthogonal dimensions: a Summarizer Agent condenses semantic state via code-to-language abstraction, a Navigator Agent distills optimization direction from trajectory analysis, and a Sampler Agent curates experience distribution through prioritized exemplar retrieval. This orchestration forms a functional isomorphism with RL (mapping to state representation, policy gradient, and experience replay), enabling principled optimization in a textual latent space. On the ADRS benchmark, ContextEvolve outperforms state-of-the-art baselines by 33.3% while reducing token consumption by 29.0%. Codes for our work are released at https://anonymous.4open.science/r/ContextEvolve-ACC

Can LLMs Track Their Output Length? A Dynamic Feedback Mechanism for Precise Length Regulation

Jan 07, 2026

Precisely controlling the length of generated text is a common requirement in real-world applications. However, despite significant advancements in following human instructions, Large Language Models (LLMs) still struggle with this task. In this work, we demonstrate that LLMs often fail to accurately measure their response lengths, leading to poor adherence to length constraints. To address this issue, we propose a novel length regulation approach that incorporates dynamic length feedback during generation, enabling adaptive adjustments to meet target lengths. Experiments on summarization and biography tasks show our training-free approach significantly improves precision in achieving target token, word, or sentence counts without compromising quality. Additionally, we demonstrate that further supervised fine-tuning allows our method to generalize effectively to broader text-generation tasks.

Gavel: Agent Meets Checklist for Evaluating LLMs on Long-Context Legal Summarization

Jan 07, 2026

Large language models (LLMs) now support contexts of up to 1M tokens, but their effectiveness on complex long-context tasks remains unclear. In this paper, we study multi-document legal case summarization, where a single case often spans many documents totaling 100K-500K tokens. We introduce Gavel-Ref, a reference-based evaluation framework with multi-value checklist evaluation over 26 items, as well as residual fact and writing-style evaluations. Using Gavel-Ref, we go beyond the single aggregate scores reported in prior work and systematically evaluate 12 frontier LLMs on 100 legal cases ranging from 32K to 512K tokens, primarily from 2025. Our results show that even the strongest model, Gemini 2.5 Pro, achieves only around 50 of $S_{\text{Gavel-Ref}}$, highlighting the difficulty of the task. Models perform well on simple checklist items (e.g., filing date) but struggle on multi-value or rare ones such as settlements and monitor reports. As LLMs continue to improve and may surpass human-written summaries -- making human references less reliable -- we develop Gavel-Agent, an efficient and autonomous agent scaffold that equips LLMs with six tools to navigate and extract checklists directly from case documents. With Qwen3, Gavel-Agent reduces token usage by 36% while resulting in only a 7% drop in $S_{\text{checklist}}$ compared to end-to-end extraction with GPT-4.1.

AI-Driven Channel State Information (CSI) Extrapolation for 6G: Current Situations, Challenges and Future Research

Jan 01, 2026

CSI extrapolation is an effective method for acquiring channel state information (CSI), essential for optimizing performance of sixth-generation (6G) communication systems. Traditional channel estimation methods face scalability challenges due to the surging overhead in emerging high-mobility, extremely large-scale multiple-input multiple-output (EL-MIMO), and multi-band systems. CSI extrapolation techniques mitigate these challenges by using partial CSI to infer complete CSI, significantly reducing overhead. Despite growing interest, a comprehensive review of state-of-the-art (SOTA) CSI extrapolation techniques is lacking. This paper addresses this gap by comprehensively reviewing the current status, challenges, and future directions of CSI extrapolation for the first time. Firstly, we analyze the performance metrics specific to CSI extrapolation in 6G, including extrapolation accuracy, adaption to dynamic scenarios and algorithm costs. We then review both model-driven and artificial intelligence (AI)-driven approaches for time, frequency, antenna, and multi-domain CSI extrapolation. Key insights and takeaways from these methods are summarized. Given the promise of AI-driven methods in meeting performance requirements, we also examine the open-source channel datasets and simulators that could be used to train high-performance AI-driven CSI extrapolation models. Finally, we discuss the critical challenges of the existing research and propose perspective research opportunities.

Corpus of Cross-lingual Dialogues with Minutes and Detection of Misunderstandings

Dec 23, 2025

Speech processing and translation technology have the potential to facilitate meetings of individuals who do not share any common language. To evaluate automatic systems for such a task, a versatile and realistic evaluation corpus is needed. Therefore, we create and present a corpus of cross-lingual dialogues between individuals without a common language who were facilitated by automatic simultaneous speech translation. The corpus consists of 5 hours of speech recordings with ASR and gold transcripts in 12 original languages and automatic and corrected translations into English. For the purposes of research into cross-lingual summarization, our corpus also includes written summaries (minutes) of the meetings. Moreover, we propose automatic detection of misunderstandings. For an overview of this task and its complexity, we attempt to quantify misunderstandings in cross-lingual meetings. We annotate misunderstandings manually and also test the ability of current large language models to detect them automatically. The results show that the Gemini model is able to identify text spans with misunderstandings with recall of 77% and precision of 47%.

* 12 pages, 2 figures, 6 tables, published as a conference paper in Text, Speech, and Dialogue 28th International Conference, TSD 2025, Erlangen, Germany, August 25-28, 2025, Proceedings, Part II. This version published here on arXiv.org is before review comments and seedings of the TSD conference staff

When F1 Fails: Granularity-Aware Evaluation for Dialogue Topic Segmentation

Dec 24, 2025

Dialogue topic segmentation supports summarization, retrieval, memory management, and conversational continuity. Despite decades of work, evaluation practice remains dominated by strict boundary matching and F1-based metrics. Modern large language model (LLM) based conversational systems increasingly rely on segmentation to manage conversation history beyond fixed context windows. In such systems, unstructured context accumulation degrades efficiency and coherence. This paper introduces an evaluation framework that reports boundary density and segment alignment diagnostics (purity and coverage) alongside window-tolerant F1 (W-F1). By separating boundary scoring from boundary selection, we evaluate segmentation quality across density regimes rather than at a single operating point. Cross-dataset evaluation shows that reported performance differences often reflect annotation granularity mismatch rather than boundary placement quality alone. We evaluate structurally distinct segmentation strategies across eight dialogue datasets spanning task-oriented, open-domain, meeting-style, and synthetic interactions. Boundary-based metrics are strongly coupled to boundary density: threshold sweeps produce larger W-F1 changes than switching between methods. These findings support viewing topic segmentation as a granularity selection problem rather than prediction of a single correct boundary set. This motivates separating boundary scoring from boundary selection for analyzing and tuning segmentation under varying annotation granularities.

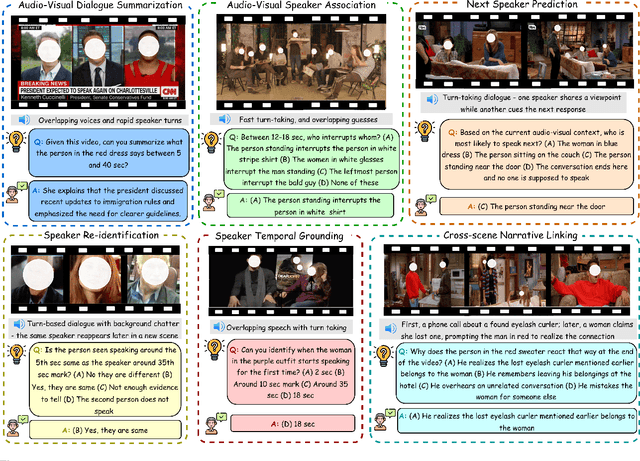

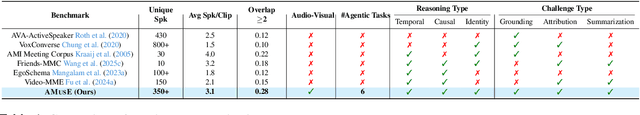

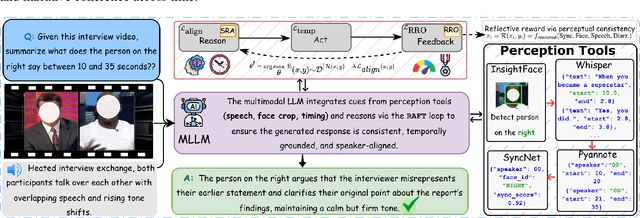
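To make the two headline quantities in the abstract above concrete, here is a minimal Python sketch of a window-tolerant boundary F1 and a boundary density measure. The abstract does not give exact definitions, so everything here is an assumption: boundaries are integer turn indices, a predicted boundary counts as correct if an unmatched reference boundary lies within `window` positions, matching is greedy and one-to-one, and density is the share of turn gaps marked as boundaries. The function names are hypothetical and not taken from the paper.

```python
from typing import Sequence


def window_tolerant_f1(pred: Sequence[int], ref: Sequence[int], window: int = 1) -> float:
    """F1 over boundaries, where a prediction is a hit if an unmatched
    reference boundary lies within `window` positions (assumed definition)."""
    preds = sorted(set(pred))
    unmatched = sorted(set(ref))
    n_ref = len(unmatched)
    hits = 0
    for p in preds:
        # Greedy one-to-one matching: pick the closest reference boundary
        # that has not already been claimed by an earlier prediction.
        candidates = [r for r in unmatched if abs(r - p) <= window]
        if candidates:
            unmatched.remove(min(candidates, key=lambda r: abs(r - p)))
            hits += 1
    if hits == 0:
        return 0.0
    precision = hits / len(preds)
    recall = hits / n_ref
    return 2 * precision * recall / (precision + recall)


def boundary_density(boundaries: Sequence[int], num_turns: int) -> float:
    """Fraction of gaps between consecutive turns that are marked as boundaries."""
    if num_turns < 2:
        return 0.0
    return len(set(boundaries)) / (num_turns - 1)


# Toy example (made-up indices, not data from the paper).
reference = [5, 12, 20]
predicted = [4, 12, 17, 25]
strict = window_tolerant_f1(predicted, reference, window=0)   # only 12 matches
tolerant = window_tolerant_f1(predicted, reference, window=1)  # 4 also matches 5
density = boundary_density(predicted, num_turns=30)
```

In this toy case the strict score is 2/7 and the tolerant score is 4/7, while the predicted density (4 of 29 gaps) exceeds the reference density (3 of 29). Reporting density next to the score shows whether a gain comes from better placement or simply from predicting more boundaries.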

AMUSE: Audio-Visual Benchmark and Alignment Framework for Agentic Multi-Speaker Understanding

Dec 18, 2025

Recent multimodal large language models (MLLMs) such as GPT-4o and Qwen3-Omni show strong perception but struggle in multi-speaker, dialogue-centric settings that demand agentic reasoning: tracking who speaks, maintaining roles, and grounding events across time. These scenarios are central to multimodal audio-video understanding, where models must jointly reason over audio and visual streams in applications such as conversational video assistants and meeting analytics. We introduce AMUSE, a benchmark designed around tasks that are inherently agentic, requiring models to decompose complex audio-visual interactions into planning, grounding, and reflection steps. It evaluates MLLMs across three modes (zero-shot, guided, and agentic) and six task families, including spatio-temporal speaker grounding and multimodal dialogue summarization. Across all modes, current models exhibit weak multi-speaker reasoning and inconsistent behavior under both non-agentic and agentic evaluation. Motivated by the inherently agentic nature of these tasks and recent advances in LLM agents, we propose RAFT, a data-efficient agentic alignment framework that integrates reward optimization with intrinsic multimodal self-evaluation as reward and selective parameter adaptation for data and parameter efficient updates. Using RAFT, we achieve up to 39.52% relative improvement in accuracy on our benchmark. Together, AMUSE and RAFT provide a practical platform for examining agentic reasoning in multimodal models and improving their capabilities.

ICX360: In-Context eXplainability 360 Toolkit

Nov 14, 2025

Large Language Models (LLMs) have become ubiquitous in everyday life and are entering higher-stakes applications ranging from summarizing meeting transcripts to answering doctors' questions. As was the case with earlier predictive models, it is crucial that we develop tools for explaining the output of LLMs, be it a summary, list, response to a question, etc. With these needs in mind, we introduce In-Context Explainability 360 (ICX360), an open-source Python toolkit for explaining LLMs with a focus on the user-provided context (or prompts in general) that are fed to the LLMs. ICX360 contains implementations for three recent tools that explain LLMs using both black-box and white-box methods (via perturbations and gradients respectively). The toolkit, available at https://github.com/IBM/ICX360, contains quick-start guidance materials as well as detailed tutorials covering use cases such as retrieval augmented generation, natural language generation, and jailbreaking.

KVSwap: Disk-aware KV Cache Offloading for Long-Context On-device Inference

Nov 14, 2025

Language models (LMs) underpin emerging mobile and embedded AI applications like meeting and video summarization and document analysis, which often require processing multiple long-context inputs. Running an LM locally on-device improves privacy, enables offline use, and reduces cost, but long-context inference quickly hits a *memory capacity wall* as the key-value (KV) cache grows linearly with context length and batch size. We present KVSwap, a software framework to break this memory wall by offloading the KV cache to non-volatile secondary storage (disk). KVSwap leverages the observation that only a small, dynamically changing subset of KV entries is critical for generation. It stores the full cache on disk, uses a compact in-memory metadata to predict which entries to preload, overlaps computation with hardware-aware disk access, and orchestrates read patterns to match storage device characteristics. Our evaluation shows that across representative LMs and storage types, KVSwap delivers higher throughput under tight memory budgets while maintaining the generation quality when compared with existing KV cache offloading schemes.
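The linear growth of the KV cache mentioned in the abstract above can be illustrated with back-of-the-envelope arithmetic. The Python sketch below uses the standard size estimate for a decoder-only transformer (one key and one value tensor per layer). The model configuration is a hypothetical example chosen for round numbers, not one evaluated in the paper, and the function name is made up for illustration.

```python
def kv_cache_bytes(
    num_layers: int,
    num_kv_heads: int,
    head_dim: int,
    context_len: int,
    batch_size: int = 1,
    bytes_per_value: int = 2,  # assumes fp16/bf16 storage
) -> int:
    """Estimate KV cache size: 2 tensors (keys and values) per layer,
    each holding num_kv_heads * head_dim values per token per sequence."""
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_value
    return per_token * context_len * batch_size


# Hypothetical configuration: 32 layers, 8 KV heads, head dimension 128.
config = dict(num_layers=32, num_kv_heads=8, head_dim=128)

per_token = kv_cache_bytes(**config, context_len=1)          # 131072 bytes = 128 KiB
at_32k = kv_cache_bytes(**config, context_len=32 * 1024)      # 4 GiB
at_64k = kv_cache_bytes(**config, context_len=64 * 1024)      # doubles with context
batched = kv_cache_bytes(**config, context_len=32 * 1024, batch_size=4)

for label, size in [("32K ctx", at_32k), ("64K ctx", at_64k), ("32K ctx, batch 4", batched)]:
    print(f"{label}: {size / 1024**3:.1f} GiB")
```

Under these assumed settings a single 32K-token sequence already needs 4 GiB for the cache alone, which is the kind of budget that is hard to meet on a mobile or embedded device and motivates moving most of the cache off main memory.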

User Perceptions of Privacy and Helpfulness in LLM Responses to Privacy-Sensitive Scenarios

Oct 23, 2025

Large language models (LLMs) have seen rapid adoption for tasks such as drafting emails, summarizing meetings, and answering health questions. In such uses, users may need to share private information (e.g., health records, contact details). To evaluate LLMs' ability to identify and redact such private information, prior work developed benchmarks (e.g., ConfAIde, PrivacyLens) with real-life scenarios. Using these benchmarks, researchers have found that LLMs sometimes fail to keep secrets private when responding to complex tasks (e.g., leaking employee salaries in meeting summaries). However, these evaluations rely on LLMs (proxy LLMs) to gauge compliance with privacy norms, overlooking real users' perceptions. Moreover, prior work primarily focused on the privacy-preservation quality of responses, without investigating nuanced differences in helpfulness. To understand how users perceive the privacy-preservation quality and helpfulness of LLM responses to privacy-sensitive scenarios, we conducted a user study with 94 participants using 90 scenarios from PrivacyLens. We found that, when evaluating identical responses to the same scenario, users showed low agreement with each other on the privacy-preservation quality and helpfulness of the LLM response. Further, we found high agreement among five proxy LLMs, while each individual LLM had low correlation with users' evaluations. These results indicate that the privacy and helpfulness of LLM responses are often specific to individuals, and proxy LLMs are poor estimates of how real users would perceive these responses in privacy-sensitive scenarios. Our results suggest the need to conduct user-centered studies on measuring LLMs' ability to help users while preserving privacy. Additionally, future research could investigate ways to improve the alignment between proxy LLMs and users for better estimation of users' perceived privacy and utility.
