Zhuowen Tu

Open-Vocabulary Panoptic Segmentation with MaskCLIP

Aug 18, 2022

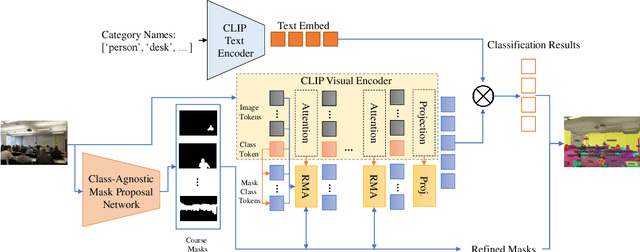

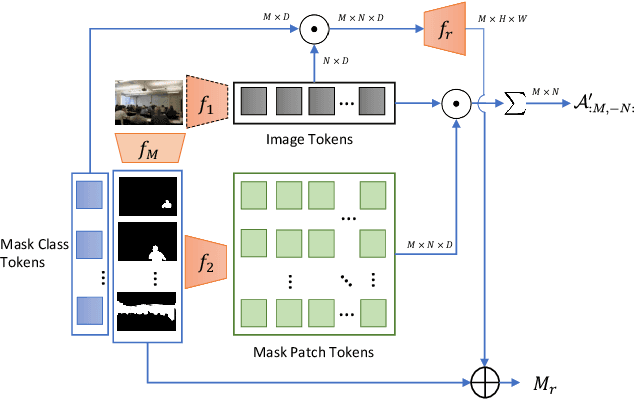

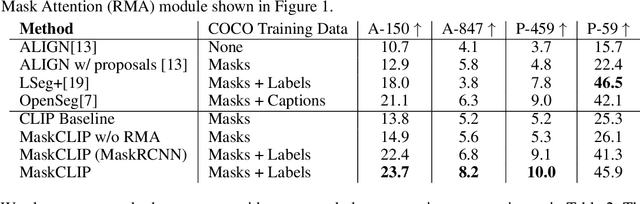

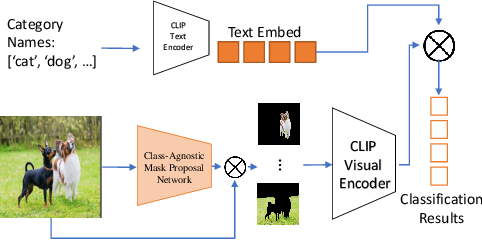

Abstract: In this paper, we tackle a new computer vision task, open-vocabulary panoptic segmentation, that aims to perform panoptic segmentation (background semantic labeling + foreground instance segmentation) for arbitrary categories of text-based descriptions. We first build a baseline method without fine-tuning or distillation to utilize the knowledge in the existing CLIP model. We then develop a new method, MaskCLIP, that is a Transformer-based approach using mask queries with the ViT-based CLIP backbone to perform semantic segmentation and object instance segmentation. Here we design a Relative Mask Attention (RMA) module to account for segmentations as additional tokens to the ViT CLIP model. MaskCLIP learns to efficiently and effectively utilize pre-trained dense/local CLIP features by avoiding the time-consuming operation to crop image patches and compute features from an external CLIP image model. We obtain encouraging results for open-vocabulary panoptic segmentation and state-of-the-art results for open-vocabulary semantic segmentation on ADE20K and PASCAL datasets. We show qualitative illustrations for MaskCLIP with custom categories.

Semi-supervised Vision Transformers at Scale

Aug 11, 2022

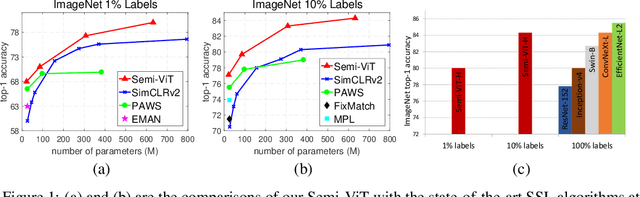

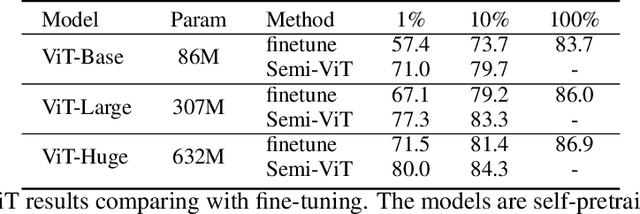

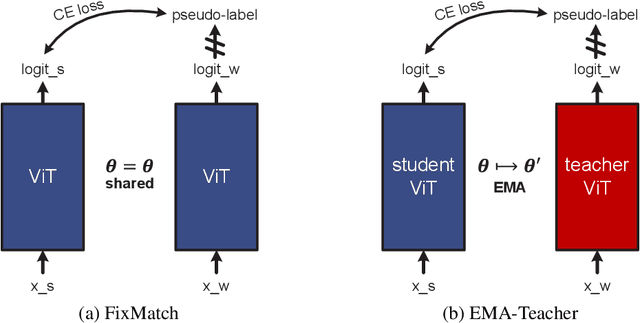

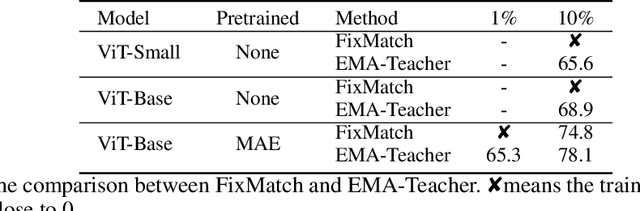

Abstract: We study semi-supervised learning (SSL) for vision transformers (ViT), an under-explored topic despite the wide adoption of the ViT architectures to different tasks. To tackle this problem, we propose a new SSL pipeline, consisting of first un/self-supervised pre-training, followed by supervised fine-tuning, and finally semi-supervised fine-tuning. At the semi-supervised fine-tuning stage, we adopt an exponential moving average (EMA)-Teacher framework instead of the popular FixMatch, since the former is more stable and delivers higher accuracy for semi-supervised vision transformers. In addition, we propose a probabilistic pseudo mixup mechanism to interpolate unlabeled samples and their pseudo labels for improved regularization, which is important for training ViTs with weak inductive bias. Our proposed method, dubbed Semi-ViT, achieves comparable or better performance than the CNN counterparts in the semi-supervised classification setting. Semi-ViT also enjoys the scalability benefits of ViTs that can be readily scaled up to large-size models with increasing accuracies. For example, Semi-ViT-Huge achieves an impressive 80% top-1 accuracy on ImageNet using only 1% labels, which is comparable with Inception-v4 using 100% ImageNet labels.
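
The EMA-Teacher update and the mixup of unlabeled samples with their pseudo labels can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the Semi-ViT implementation: the function names, the flat-list weight representation, the momentum value, and the fixed mixing coefficient `lam` are all hypothetical, and the probabilistic part of the mechanism is not specified in the abstract, so it is left out.

```python
def ema_update(teacher, student, momentum=0.999):
    """Move each teacher weight toward the matching student weight.

    `teacher` and `student` are flat lists of floats (an assumption made
    for illustration; real models hold tensors).
    """
    return [momentum * t + (1.0 - momentum) * s for t, s in zip(teacher, student)]


def mixup(x_a, x_b, y_a, y_b, lam):
    """Interpolate two unlabeled samples and their pseudo labels.

    `lam` is a hypothetical fixed mixing weight in [0, 1].
    """
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y_a, y_b)]
    return x, y
```

With a high momentum the teacher changes slowly, which is one plausible reason such a teacher gives steadier pseudo labels than the student itself.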

The Geometry of Multilingual Language Model Representations

May 22, 2022

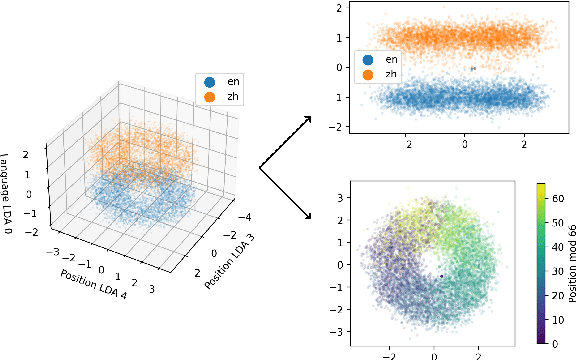

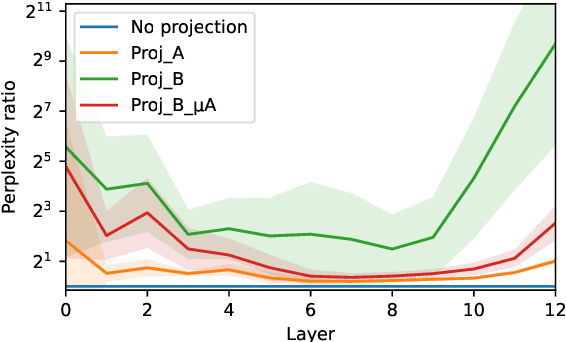

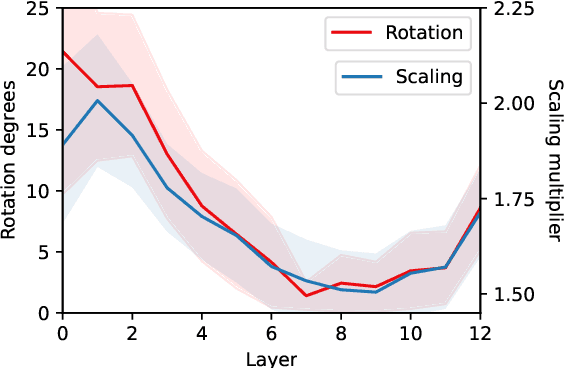

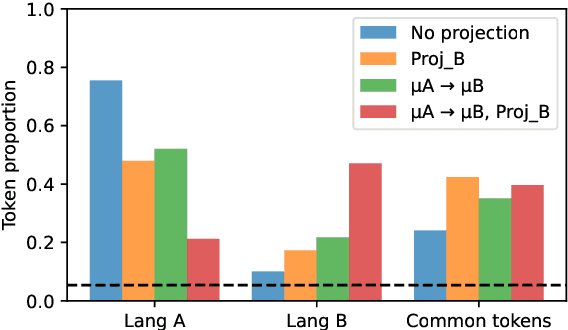

Abstract: We assess how multilingual language models maintain a shared multilingual representation space while still encoding language-sensitive information in each language. Using XLM-R as a case study, we show that languages occupy similar linear subspaces after mean-centering, evaluated based on causal effects on language modeling performance and direct comparisons between subspaces for 88 languages. The subspace means differ along language-sensitive axes that are relatively stable throughout middle layers, and these axes encode information such as token vocabularies. Shifting representations by language means is sufficient to induce token predictions in different languages. However, we also identify stable language-neutral axes that encode information such as token positions and part-of-speech. We visualize representations projected onto language-sensitive and language-neutral axes, identifying language family and part-of-speech clusters, along with spirals, toruses, and curves representing token position information. These results demonstrate that multilingual language models encode information along orthogonal language-sensitive and language-neutral axes, allowing the models to extract a variety of features for downstream tasks and cross-lingual transfer learning.
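
Shifting a representation by language means amounts to subtracting the source-language mean and adding the target-language mean. The sketch below illustrates that arithmetic on toy 2-D vectors; the function names and the data are made up for illustration and do not come from XLM-R.

```python
def mean_vector(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / n for i in range(dim)]


def shift_language(vector, source_mean, target_mean):
    """Mean-center in the source language, then re-center on the target."""
    return [x - s + t for x, s, t in zip(vector, source_mean, target_mean)]


# Toy hidden states for two hypothetical languages.
lang_a = [[1.0, 0.0], [3.0, 2.0]]
lang_b = [[5.0, 4.0], [7.0, 6.0]]

mean_a = mean_vector(lang_a)
mean_b = mean_vector(lang_b)
shifted = shift_language(lang_a[0], mean_a, mean_b)
```

In this toy setup the two languages differ only by their means, so the shifted vector lands exactly on the corresponding vector of the second language.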

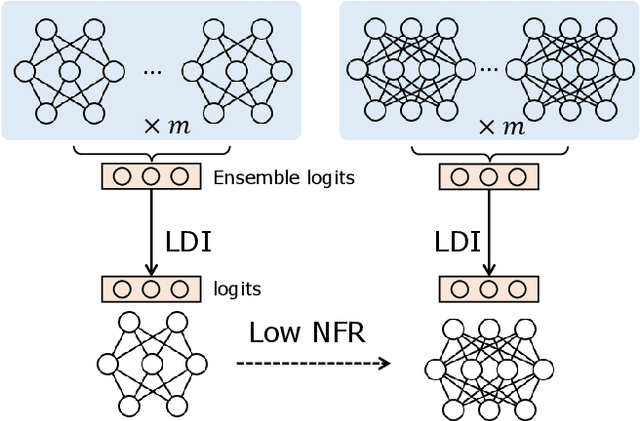

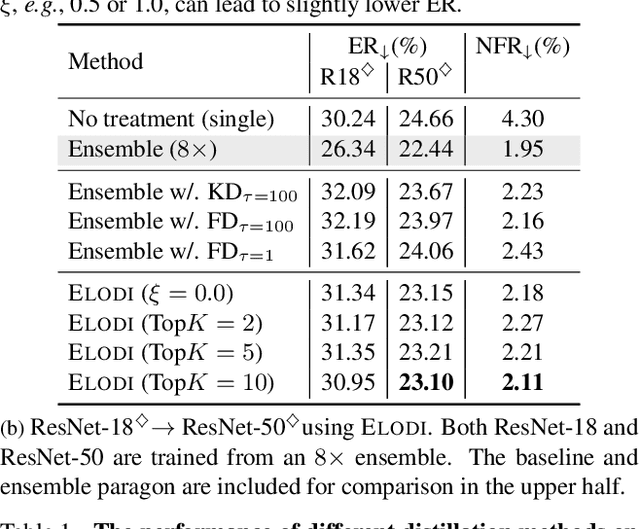

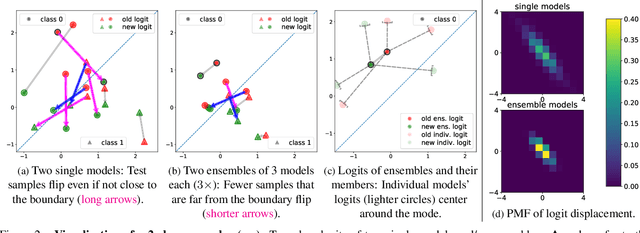

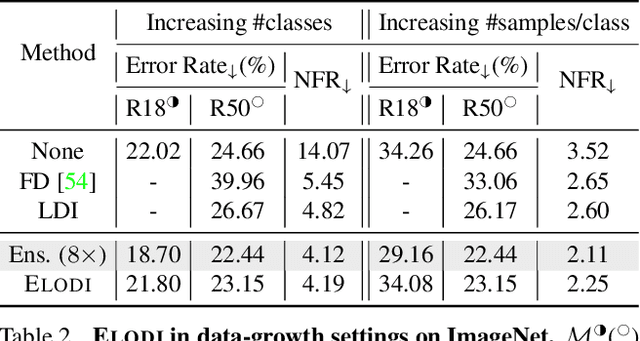

ELODI: Ensemble Logit Difference Inhibition for Positive-Congruent Training

May 13, 2022

Abstract: Negative flips are errors introduced in a classification system when a legacy model is replaced with a new one. Existing methods to reduce the negative flip rate (NFR) either do so at the expense of overall accuracy using model distillation, or use ensembles, which multiply inference cost prohibitively. We present a method to train a classification system that achieves paragon performance in both error rate and NFR, at the inference cost of a single model. Our method introduces a generalized distillation objective, Logit Difference Inhibition (LDI), that penalizes changes in the logits between the new and old model, without forcing them to coincide as in ordinary distillation. LDI affords the model flexibility to reduce error rate along with NFR. The method uses a homogeneous ensemble as the reference model for LDI, hence the name Ensemble LDI, or ELODI. The reference model can then be substituted with a single model at inference time. The method leverages the observation that negative flips are typically not close to the decision boundary, but often exhibit large deviations in the distance among their logits, which are reduced by ELODI.
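
To make the negative flip rate concrete, the sketch below counts samples that the old model classified correctly and the new model gets wrong, divided by the total number of samples. The function names and the toy predictions are illustrative assumptions; this measures NFR only and does not implement the LDI objective.

```python
def negative_flip_rate(old_preds, new_preds, labels):
    """Fraction of samples that were right under the old model and are wrong under the new one."""
    flips = sum(
        1
        for old, new, label in zip(old_preds, new_preds, labels)
        if old == label and new != label
    )
    return flips / len(labels)


def error_rate(preds, labels):
    """Fraction of misclassified samples."""
    return sum(1 for p, y in zip(preds, labels) if p != y) / len(labels)


# Toy example: both models make exactly one error, but on different samples.
labels = [0, 1, 2, 3]
old_preds = [0, 1, 0, 3]
new_preds = [0, 2, 2, 3]

nfr = negative_flip_rate(old_preds, new_preds, labels)
```

Here the two models have the same error rate, yet one in four samples regresses, which is the situation the abstract describes as harmful when replacing a legacy model.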

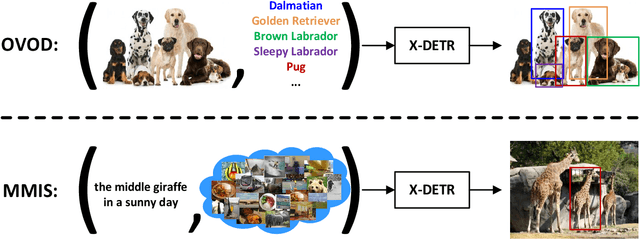

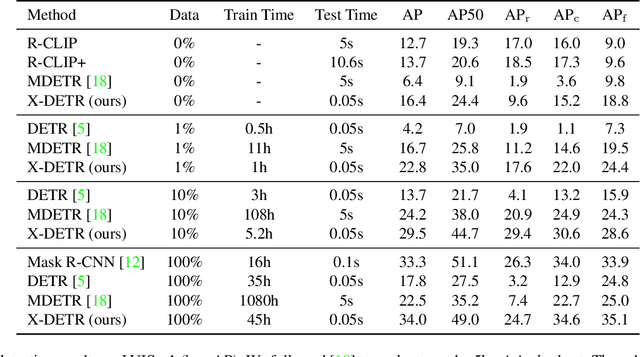

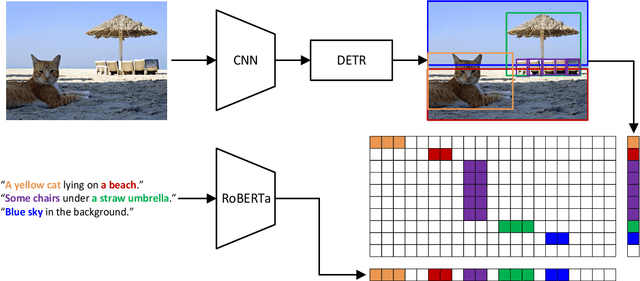

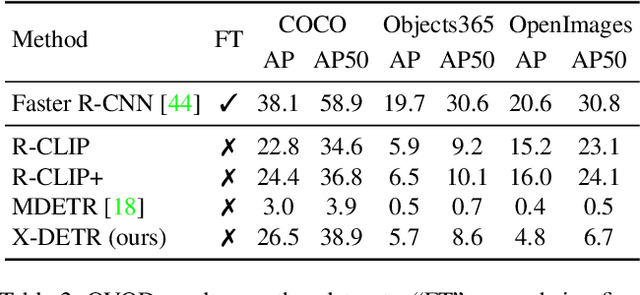

X-DETR: A Versatile Architecture for Instance-wise Vision-Language Tasks

Apr 12, 2022

Abstract: In this paper, we study the challenging instance-wise vision-language tasks, where the free-form language is required to align with the objects instead of the whole image. To address these tasks, we propose X-DETR, whose architecture has three major components: an object detector, a language encoder, and vision-language alignment. The vision and language streams are independent until the end and they are aligned using an efficient dot-product operation. The whole network is trained end-to-end, such that the detector is optimized for the vision-language tasks instead of being an off-the-shelf component. To overcome the limited size of paired object-language annotations, we leverage other weak types of supervision to expand the knowledge coverage. This simple yet effective architecture of X-DETR shows good accuracy and fast speeds for multiple instance-wise vision-language tasks, e.g., 16.4 AP on LVIS detection of 1.2K categories at ~20 frames per second without using any LVIS annotation during training.
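
The dot-product alignment between the two independent streams can be pictured as a score matrix with one row per detected object and one column per text query. The following is a rough sketch with made-up embeddings and hypothetical function names, not the X-DETR code.

```python
def alignment_scores(object_embeddings, text_embeddings):
    """Dot product of every object embedding with every text embedding."""
    return [
        [sum(o * t for o, t in zip(obj, txt)) for txt in text_embeddings]
        for obj in object_embeddings
    ]


def best_match(scores):
    """Index of the highest-scoring text query for each object."""
    return [max(range(len(row)), key=row.__getitem__) for row in scores]


# Toy embeddings: two objects, two text queries.
objects = [[1.0, 0.0], [0.0, 2.0]]
texts = [[1.0, 0.0], [0.0, 1.0]]

scores = alignment_scores(objects, texts)
matches = best_match(scores)
```

Because the streams only meet in this final product, text embeddings for a fixed vocabulary could in principle be computed once and reused across images, which is consistent with the speed the abstract reports.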

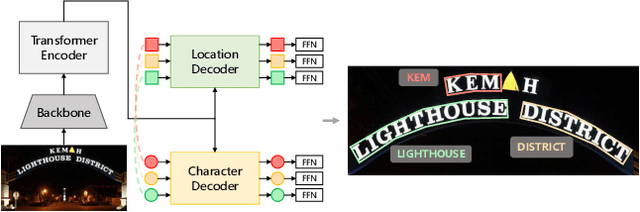

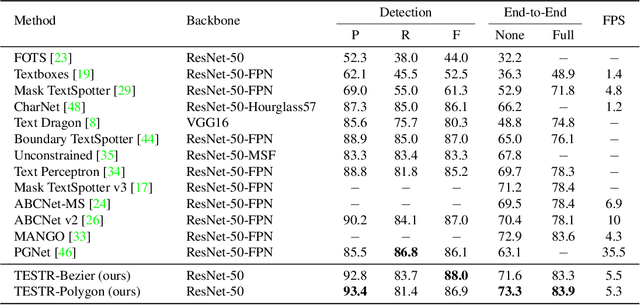

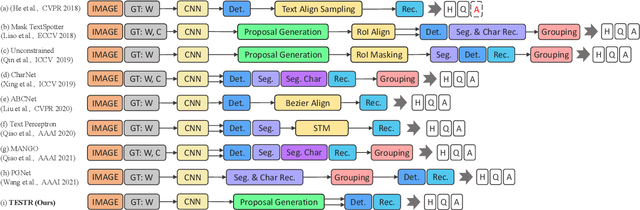

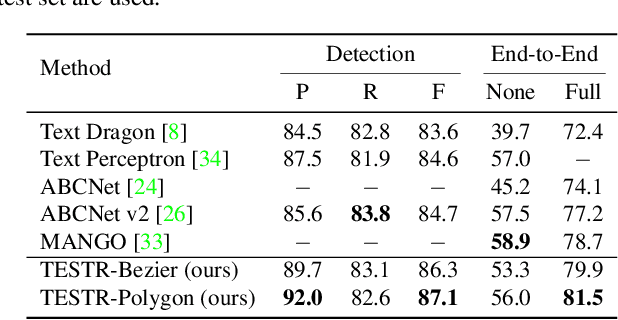

Text Spotting Transformers

Apr 05, 2022

Abstract: In this paper, we present TExt Spotting TRansformers (TESTR), a generic end-to-end text spotting framework using Transformers for text detection and recognition in the wild. TESTR builds upon a single encoder and dual decoders for the joint text-box control point regression and character recognition. Unlike most existing literature, our method is free from Region-of-Interest operations and heuristics-driven post-processing procedures; TESTR is particularly effective when dealing with curved text-boxes where special care is needed for the adaptation of the traditional bounding-box representations. We show our canonical representation of control points suitable for text instances in both Bezier curve and polygon annotations. In addition, we design a bounding-box guided polygon detection (box-to-polygon) process. Experiments on curved and arbitrarily shaped datasets demonstrate state-of-the-art performance of the proposed TESTR algorithm.
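
Control points in a Bezier annotation can be turned into polygon-style boundary points by sampling the curve. The sketch below assumes a cubic curve with four 2-D control points; the degree, the function names, and the sample coordinates are assumptions for illustration and are not taken from TESTR.

```python
def cubic_bezier(p0, p1, p2, p3, t):
    """Evaluate a cubic Bezier curve at parameter t in [0, 1]."""
    u = 1.0 - t
    coeffs = (u ** 3, 3 * u * u * t, 3 * u * t * t, t ** 3)
    points = (p0, p1, p2, p3)
    return tuple(sum(c * p[axis] for c, p in zip(coeffs, points)) for axis in range(2))


def sample_curve(control_points, num_points):
    """Sample evenly spaced parameter values along one curved text edge."""
    p0, p1, p2, p3 = control_points
    return [
        cubic_bezier(p0, p1, p2, p3, k / (num_points - 1))
        for k in range(num_points)
    ]


# Hypothetical top edge of a curved text instance.
top_edge = [(0.0, 0.0), (1.0, 2.0), (3.0, 2.0), (4.0, 0.0)]
polygon_points = sample_curve(top_edge, 3)
```

Sampling the top and bottom edges this way and concatenating the results would give a closed polygon, which is one way the two annotation styles can share a single control-point representation.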

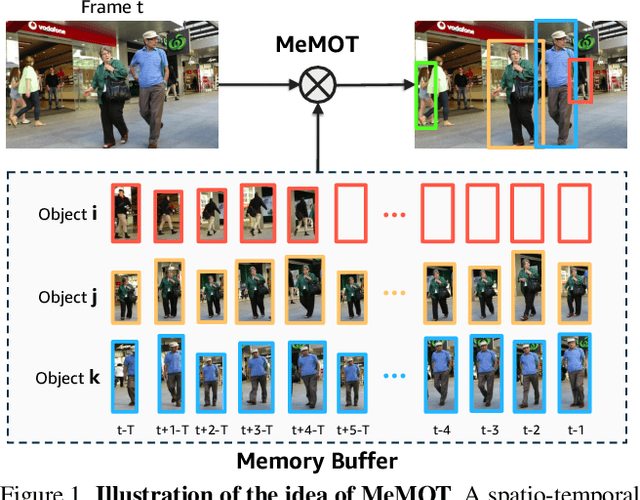

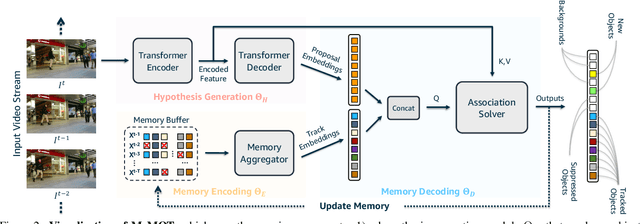

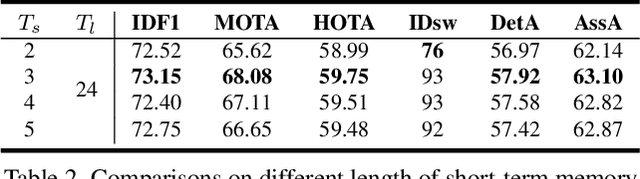

MeMOT: Multi-Object Tracking with Memory

Mar 31, 2022

Abstract: We propose an online tracking algorithm that performs the object detection and data association under a common framework, capable of linking objects after a long time span. This is realized by preserving a large spatio-temporal memory to store the identity embeddings of the tracked objects, and by adaptively referencing and aggregating useful information from the memory as needed. Our model, called MeMOT, consists of three main modules that are all Transformer-based: 1) Hypothesis Generation that produces object proposals in the current video frame; 2) Memory Encoding that extracts the core information from the memory for each tracked object; and 3) Memory Decoding that solves the object detection and data association tasks simultaneously for multi-object tracking. When evaluated on widely adopted MOT benchmark datasets, MeMOT achieves very competitive performance.

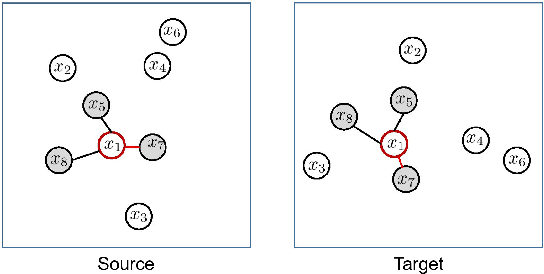

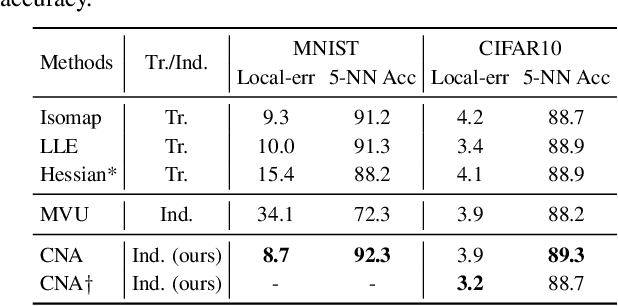

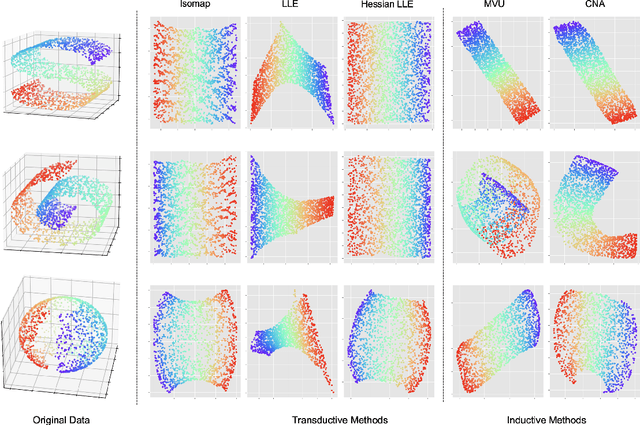

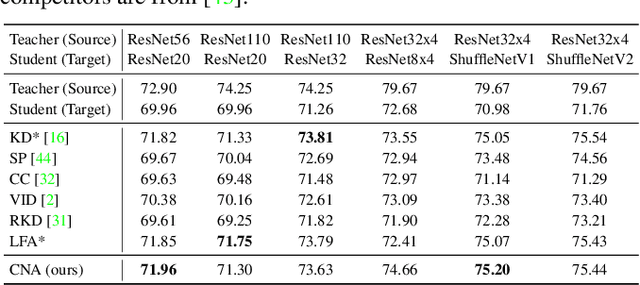

Contrastive Neighborhood Alignment

Jan 06, 2022

Abstract: We present Contrastive Neighborhood Alignment (CNA), a manifold learning approach to maintain the topology of learned features whereby data points that are mapped to nearby representations by the source (teacher) model are also mapped to neighbors by the target (student) model. The target model aims to mimic the local structure of the source representation space using a contrastive loss. CNA is an unsupervised learning algorithm that does not require ground-truth labels for the individual samples. CNA is illustrated in three scenarios: manifold learning, where the model maintains the local topology of the original data in a dimension-reduced space; model distillation, where a small student model is trained to mimic a larger teacher; and legacy model update, where an older model is replaced by a more powerful one. Experiments show that CNA is able to capture the manifold in a high-dimensional space and improves performance compared to the competing methods in their domains.

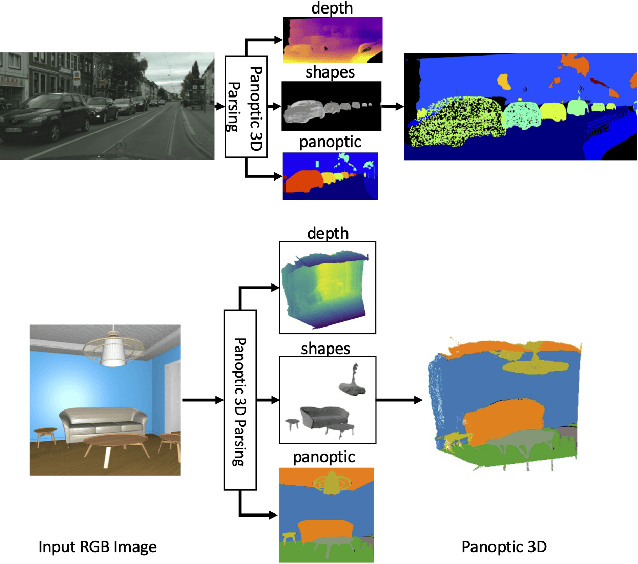

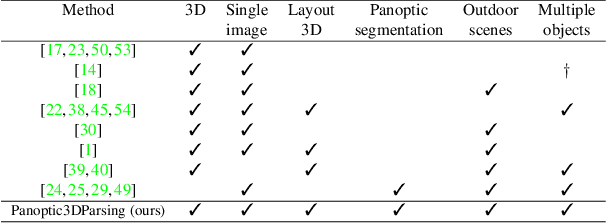

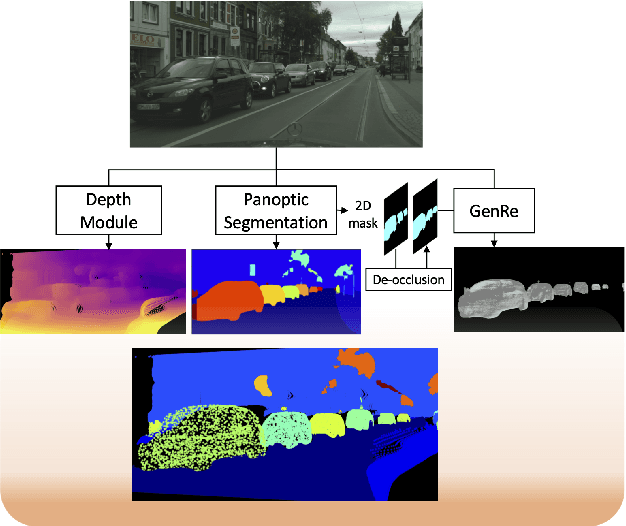

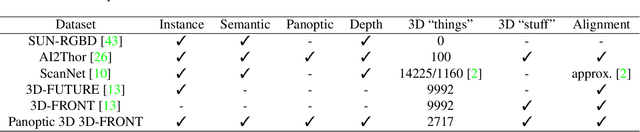

Towards Panoptic 3D Parsing for Single Image in the Wild

Nov 29, 2021

Abstract: Performing single image holistic understanding and 3D reconstruction is a central task in computer vision. This paper presents an integrated system that performs dense scene labeling, object detection, instance segmentation, depth estimation, 3D shape reconstruction, and 3D layout estimation for indoor and outdoor scenes from a single RGB image. We name our system panoptic 3D parsing (Panoptic3D) in which panoptic segmentation ("stuff" segmentation and "things" detection/segmentation) with 3D reconstruction is performed. We design a stage-wise system, Panoptic3D (stage-wise), where a complete set of annotations is absent. Additionally, we present an end-to-end pipeline, Panoptic3D (end-to-end), trained on a synthetic dataset with a full set of annotations. We show results on both indoor (3D-FRONT) and outdoor (COCO and Cityscapes) scenes. Our proposed panoptic 3D parsing framework points to a promising direction in computer vision. Panoptic3D can be applied to a variety of applications, including autonomous driving, mapping, robotics, design, computer graphics, human-computer interaction, and augmented reality.

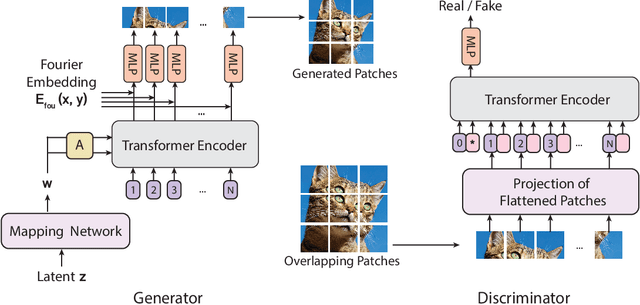

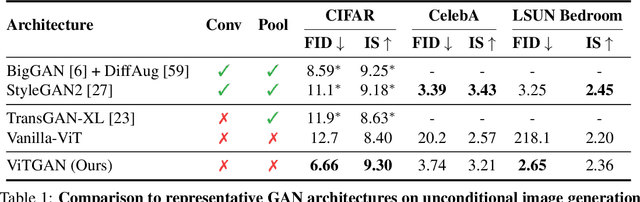

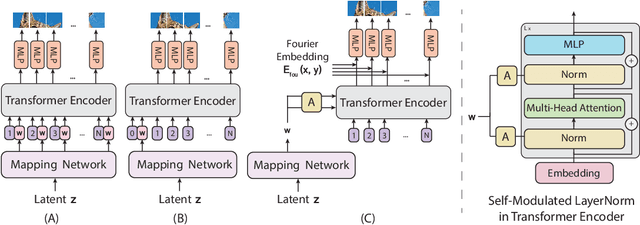

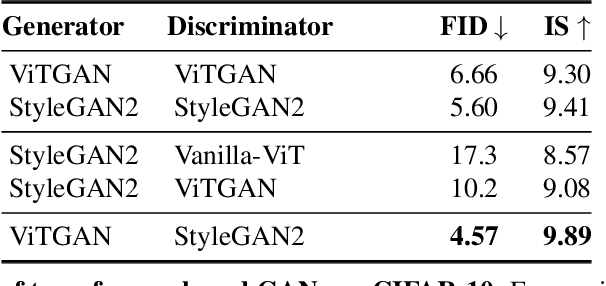

ViTGAN: Training GANs with Vision Transformers

Jul 09, 2021

Abstract: Recently, Vision Transformers (ViTs) have shown competitive performance on image recognition while requiring fewer vision-specific inductive biases. In this paper, we investigate whether this observation can be extended to image generation. To this end, we integrate the ViT architecture into generative adversarial networks (GANs). We observe that existing regularization methods for GANs interact poorly with self-attention, causing serious instability during training. To resolve this issue, we introduce novel regularization techniques for training GANs with ViTs. Empirically, our approach, named ViTGAN, achieves comparable performance to state-of-the-art CNN-based StyleGAN2 on CIFAR-10, CelebA, and LSUN bedroom datasets.
