Zezhou Sun

UNCLE-Grasp: Uncertainty-Aware Grasping of Leaf-Occluded Strawberries

Jan 20, 2026

Abstract:Robotic strawberry harvesting is challenging under partial occlusion, where leaves induce significant geometric uncertainty and make grasp decisions based on a single deterministic shape estimate unreliable. From a single partial observation, multiple incompatible 3D completions may be plausible, causing grasps that appear feasible on one completion to fail on another. We propose an uncertainty-aware grasping pipeline for partially occluded strawberries that explicitly models completion uncertainty arising from both occlusion and learned shape reconstruction. Our approach uses point cloud completion with Monte Carlo dropout to sample multiple shape hypotheses, generates candidate grasps for each completion, and evaluates grasp feasibility using physically grounded force-closure-based metrics. Rather than selecting a grasp based on a single estimate, we aggregate feasibility across completions and apply a conservative lower confidence bound (LCB) criterion to decide whether a grasp should be attempted or safely abstained. We evaluate the proposed method in simulation and on a physical robot across increasing levels of synthetic and real leaf occlusion. Results show that uncertainty-aware decision making enables reliable abstention from high-risk grasp attempts under severe occlusion while maintaining robust grasp execution when geometric confidence is sufficient, outperforming deterministic baselines in both simulated and physical robot experiments.
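The lower confidence bound decision described in this abstract can be illustrated with a minimal sketch. It assumes the LCB takes the common form of mean minus `k` times the sample standard deviation of per-completion feasibility scores; the abstract does not state the exact formula, and the function name `lcb_decision`, the parameters `k` and `threshold`, and the example scores are all hypothetical.

```python
from statistics import mean, stdev

def lcb_decision(scores, k=1.0, threshold=0.5):
    """Score one candidate grasp across several sampled shape completions.

    scores: feasibility values in [0, 1], one per completion (hypothetical data).
    Returns (lcb, attempt), where attempt is False when the grasp should be
    abstained from. The form mean - k * std is an assumption, not the paper's
    stated criterion.
    """
    if len(scores) < 2:
        raise ValueError("need at least two completions to estimate spread")
    lcb = mean(scores) - k * stdev(scores)
    return lcb, lcb >= threshold

# Completions agree: the grasp looks feasible on every hypothesis.
consistent = [0.82, 0.80, 0.85, 0.79, 0.84]
# Completions disagree: feasible on some hypotheses, infeasible on others.
ambiguous = [0.95, 0.20, 0.90, 0.15, 0.92]

for name, scores in (("consistent", consistent), ("ambiguous", ambiguous)):
    lcb, attempt = lcb_decision(scores)
    print(f"{name}: mean={mean(scores):.3f} lcb={lcb:.3f} attempt={attempt}")
```

In this toy data the ambiguous grasp has a mean score above the threshold, so a decision based on the mean alone would attempt it, while the conservative bound leads to abstention.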

Imagination at Inference: Synthesizing In-Hand Views for Robust Visuomotor Policy Inference

Sep 19, 2025

Abstract:Visual observations from different viewpoints can significantly influence the performance of visuomotor policies in robotic manipulation. Among these, egocentric (in-hand) views often provide crucial information for precise control. However, in some applications, equipping robots with dedicated in-hand cameras may pose challenges due to hardware constraints, system complexity, and cost. In this work, we propose to endow robots with imaginative perception, enabling them to 'imagine' in-hand observations from agent views at inference time. We achieve this via novel view synthesis (NVS), leveraging a fine-tuned diffusion model conditioned on the relative pose between the agent-view and in-hand-view cameras. Specifically, we apply LoRA-based fine-tuning to adapt a pretrained NVS model (ZeroNVS) to the robotic manipulation domain. We evaluate our approach on both simulation benchmarks (RoboMimic and MimicGen) and real-world experiments using a Unitree Z1 robotic arm for a strawberry picking task. Results show that synthesized in-hand views significantly enhance policy inference, effectively recovering the performance drop caused by the absence of real in-hand cameras. Our method offers a scalable and hardware-light solution for deploying robust visuomotor policies, highlighting the potential of imaginative visual reasoning in embodied agents.

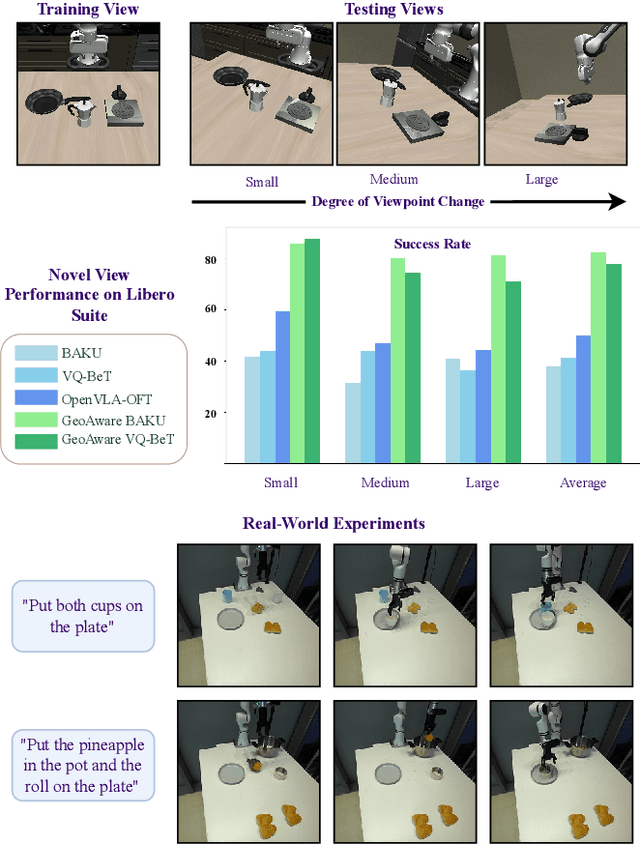

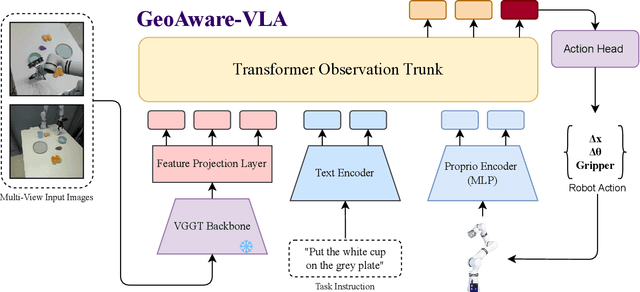

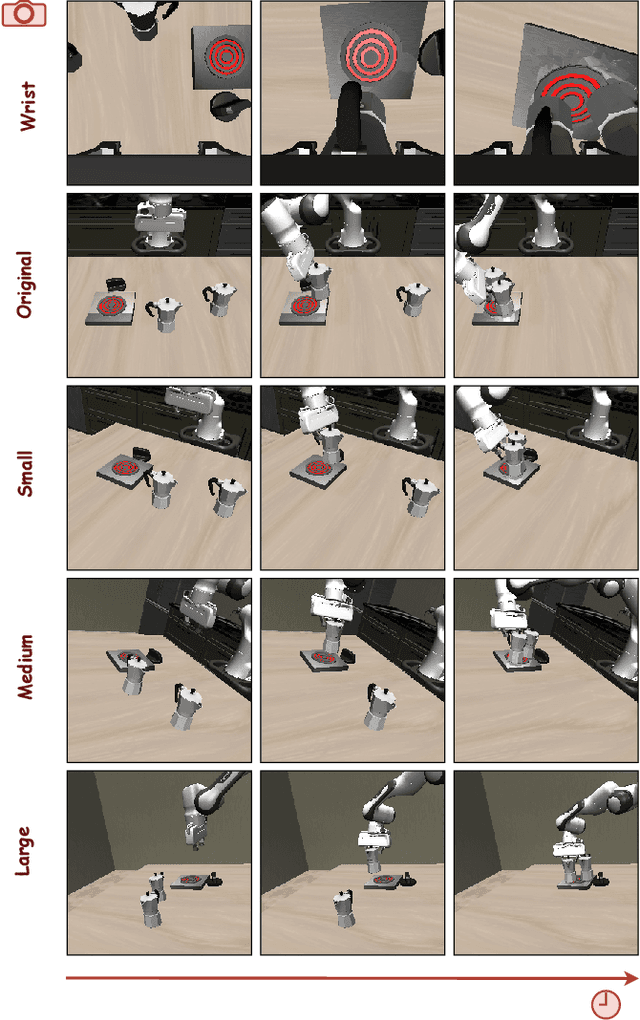

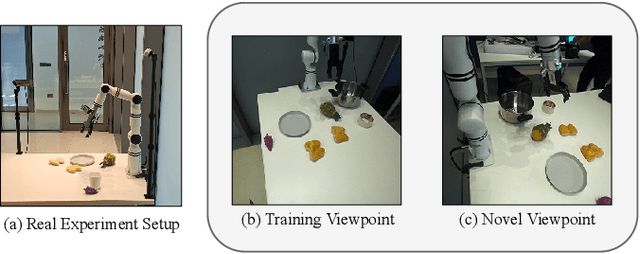

GeoAware-VLA: Implicit Geometry Aware Vision-Language-Action Model

Sep 17, 2025

Abstract:Vision-Language-Action (VLA) models often fail to generalize to novel camera viewpoints, a limitation stemming from their difficulty in inferring robust 3D geometry from 2D images. We introduce GeoAware-VLA, a simple yet effective approach that enhances viewpoint invariance by integrating strong geometric priors into the vision backbone. Instead of training a visual encoder or relying on explicit 3D data, we leverage a frozen, pretrained geometric vision model as a feature extractor. A trainable projection layer then adapts these geometrically-rich features for the policy decoder, relieving it of the burden of learning 3D consistency from scratch. Through extensive evaluations on LIBERO benchmark subsets, we show GeoAware-VLA achieves substantial improvements in zero-shot generalization to novel camera poses, boosting success rates by over 2x in simulation. Crucially, these benefits translate to the physical world; our model shows a significant performance gain on a real robot, especially when evaluated from unseen camera angles. Our approach proves effective across both continuous and discrete action spaces, highlighting that robust geometric grounding is a key component for creating more generalizable robotic agents.

A Hybrid Hinge-Beam Continuum Robot with Passive Safety Capping for Real-Time Fatigue Awareness

Sep 11, 2025

Abstract:Cable-driven continuum robots offer high flexibility and lightweight design, making them well-suited for tasks in constrained and unstructured environments. However, prolonged use can induce mechanical fatigue from plastic deformation and material degradation, compromising performance and risking structural failure. In the state of the art, fatigue estimation of continuum robots remains underexplored, limiting long-term operation. To address this, we propose a fatigue-aware continuum robot with three key innovations: (1) a Hybrid Hinge-Beam structure where TwistBeam and BendBeam decouple torsion and bending: passive revolute joints in the BendBeam mitigate stress concentration, while TwistBeam's limited torsional deformation reduces BendBeam stress magnitude, enhancing durability; (2) a Passive Stopper that safely constrains motion via mechanical constraints and employs motor torque sensing to detect corresponding limit torque, ensuring safety and enabling data collection; and (3) a real-time fatigue-awareness method that estimates stiffness from motor torque at the limit pose, enabling online fatigue estimation without additional sensors. Experiments show that the proposed design reduces fatigue accumulation by about 49% compared with a conventional design, while passive mechanical limiting combined with motor-side sensing allows accurate estimation of structural fatigue and damage. These results confirm the effectiveness of the proposed architecture for safe and reliable long-term operation.

Towards Safe Imitation Learning via Potential Field-Guided Flow Matching

Aug 12, 2025

Abstract:Deep generative models, particularly diffusion and flow matching models, have recently shown remarkable potential in learning complex policies through imitation learning. However, the safety of generated motions remains overlooked, particularly in complex environments with inherent obstacles. In this work, we address this critical gap by proposing Potential Field-Guided Flow Matching Policy (PF2MP), a novel approach that simultaneously learns task policies and extracts obstacle-related information, represented as a potential field, from the same set of successful demonstrations. During inference, PF2MP modulates the flow matching vector field via the learned potential field, enabling safe motion generation. By leveraging these complementary fields, our approach achieves improved safety without compromising task success across diverse environments, such as navigation tasks and robotic manipulation scenarios. We evaluate PF2MP in both simulation and real-world settings, demonstrating its effectiveness in task space and joint space control. Experimental results demonstrate that PF2MP enhances safety, achieving a significant reduction in collisions compared to baseline policies. This work paves the way for safer motion generation in unstructured and obstacle-rich environments.
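The idea of modulating a vector field with a potential field can be sketched in two dimensions. This is only an illustration under strong assumptions: the nominal field below is a hand-written "move toward the goal" rule standing in for a learned flow matching field, the potential is a classical analytic repulsive potential rather than one learned from demonstrations, and subtracting a scaled gradient is one possible modulation rule, not necessarily the one PF2MP uses. All function names and parameters (`influence`, `gain`) are hypothetical.

```python
import math

def nominal_velocity(x, goal):
    """Stand-in for a learned flow matching vector field: head straight to goal."""
    return (goal[0] - x[0], goal[1] - x[1])

def repulsive_gradient(x, obstacle, influence=0.5):
    """Gradient of U(d) = 0.5 * (1/d - 1/influence)^2 for d < influence, else zero.

    d is the Euclidean distance from x to the obstacle centre.
    """
    dx, dy = x[0] - obstacle[0], x[1] - obstacle[1]
    d = math.hypot(dx, dy)
    if d >= influence or d == 0.0:
        return (0.0, 0.0)
    # dU/dd = -(1/d - 1/influence) / d^2, and dd/dx = (dx, dy) / d.
    scale = -(1.0 / d - 1.0 / influence) / (d ** 3)
    return (scale * dx, scale * dy)

def guided_velocity(x, goal, obstacle, gain=0.05):
    """Nominal velocity minus a scaled potential gradient (assumed modulation rule)."""
    v = nominal_velocity(x, goal)
    g = repulsive_gradient(x, obstacle)
    return (v[0] - gain * g[0], v[1] - gain * g[1])

goal, obstacle = (2.0, 0.0), (1.0, 0.0)
near, far = (0.8, 0.1), (0.0, 0.0)
print("near obstacle:", nominal_velocity(near, goal), "->", guided_velocity(near, goal, obstacle))
print("far away:     ", nominal_velocity(far, goal), "->", guided_velocity(far, goal, obstacle))
```

Outside the obstacle's influence radius the guided velocity equals the nominal one, so the task behaviour is unchanged there; inside it, the velocity gains a component pointing away from the obstacle.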

Can Large Vision Language Models Read Maps Like a Human?

Mar 18, 2025

Abstract:In this paper, we introduce MapBench, the first dataset specifically designed for human-readable, pixel-based map-based outdoor navigation, curated from complex path finding scenarios. MapBench comprises over 1600 pixel space map path finding problems from 100 diverse maps. In MapBench, LVLMs generate language-based navigation instructions given a map image and a query with beginning and end landmarks. For each map, MapBench provides Map Space Scene Graph (MSSG) as an indexing data structure to convert between natural language and evaluate LVLM-generated results. We demonstrate that MapBench significantly challenges state-of-the-art LVLMs under both zero-shot prompting and a Chain-of-Thought (CoT) augmented reasoning framework that decomposes map navigation into sequential cognitive processes. Our evaluation of both open-source and closed-source LVLMs underscores the substantial difficulty posed by MapBench, revealing critical limitations in their spatial reasoning and structured decision-making capabilities. We release all the code and dataset at https://github.com/taco-group/MapBench.

Active Loop Closure for OSM-guided Robotic Mapping in Large-Scale Urban Environments

Jul 24, 2024

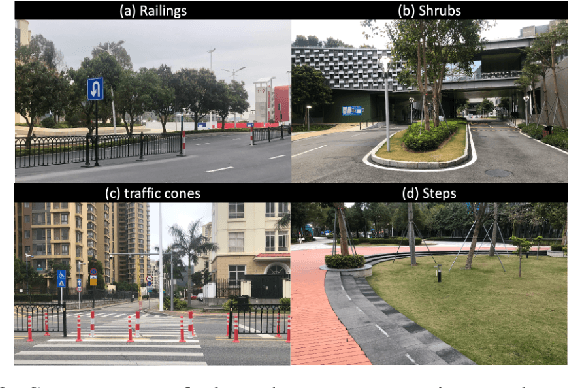

Abstract:The autonomous mapping of large-scale urban scenes presents significant challenges for autonomous robots. To mitigate the challenges, global planning, such as utilizing prior GPS trajectories from OpenStreetMap (OSM), is often used to guide the autonomous navigation of robots for mapping. However, due to factors like complex terrain, unexpected body movement, and sensor noise, the uncertainty of the robot's pose estimates inevitably increases over time, ultimately leading to the failure of robotic mapping. To address this issue, we propose a novel active loop closure procedure, enabling the robot to actively re-plan the previously planned GPS trajectory. The method can guide the robot to re-visit the previous places where the loop-closure detection can be performed to trigger the back-end optimization, effectively reducing errors and uncertainties in pose estimation. The proposed active loop closure mechanism is implemented and embedded into a real-time OSM-guided robot mapping framework. Empirical results on several large-scale outdoor scenarios demonstrate its effectiveness and promising performance.

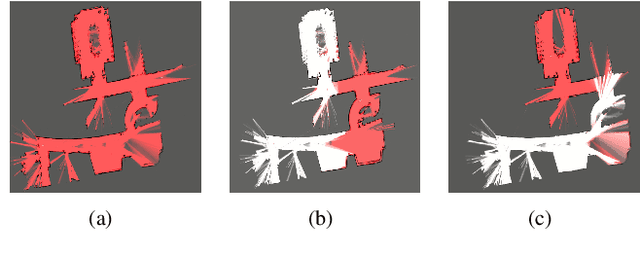

Graph Gain: A Concave-Hull Based Volumetric Gain for Robotic Exploration

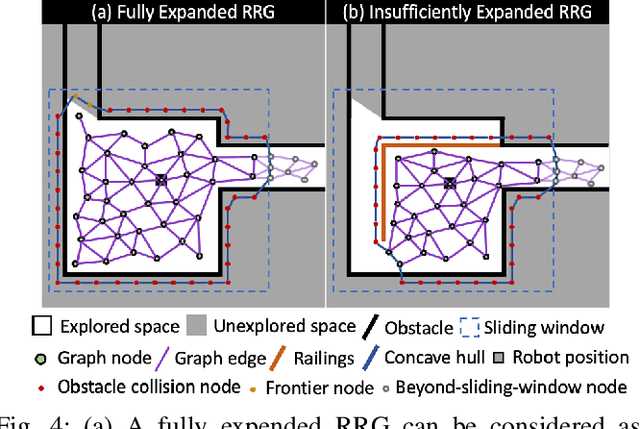

Apr 22, 2022

Abstract:The existing volumetric gain for robotic exploration is calculated in the 3D occupancy map, while the sampling-based exploration method is extended in the reachable (free) space. The inconsistency between them makes the existing calculation of volumetric gain inappropriate for a complete exploration of the environment. To address this issue, we propose a concave-hull based volumetric gain in a sampling-based exploration framework. The concave hull is constructed based on the viewpoints generated by Rapidly-exploring Random Tree (RRT) and the nodes that fail to expand. All space outside this concave hull is considered unknown. The volumetric gain is calculated based on the viewpoint configuration rather than using the occupancy map. With the new volumetric gain, robots can avoid inefficient or even erroneous exploration behavior caused by the shortcomings of existing volumetric gain calculation methods. Our exploration method is evaluated against the existing state-of-the-art RRT-based method in a benchmark environment. In the evaluated environment, the average running time of our method is about 38.4% of that of the existing state-of-the-art method, and our method is more robust.

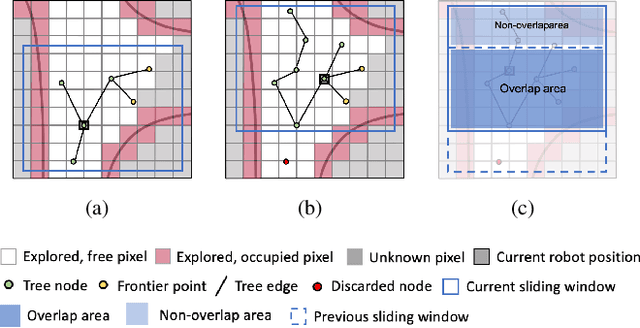

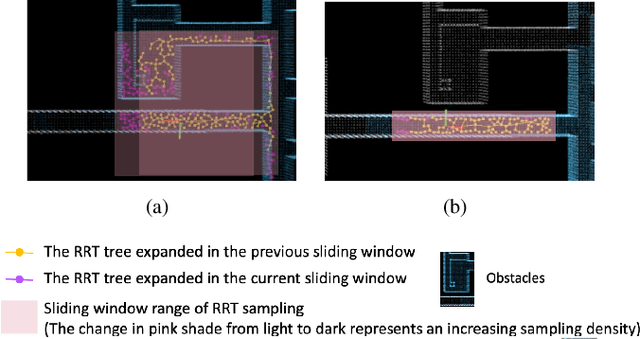

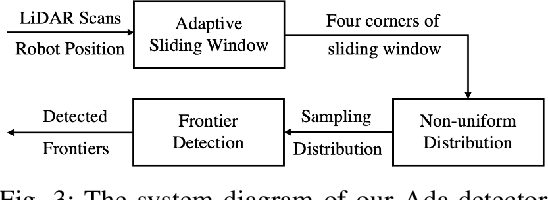

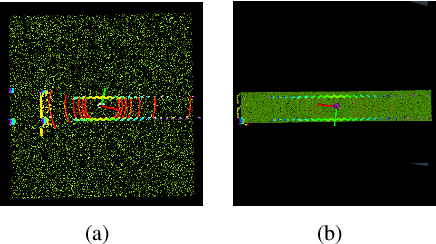

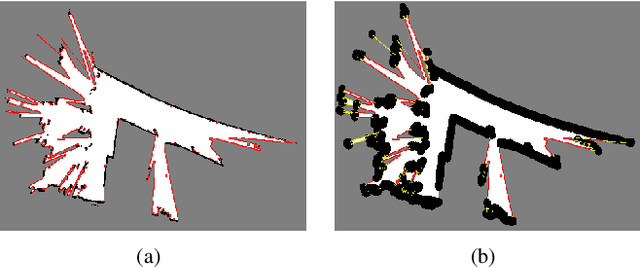

Ada-Detector: Adaptive Frontier Detector for Rapid Exploration

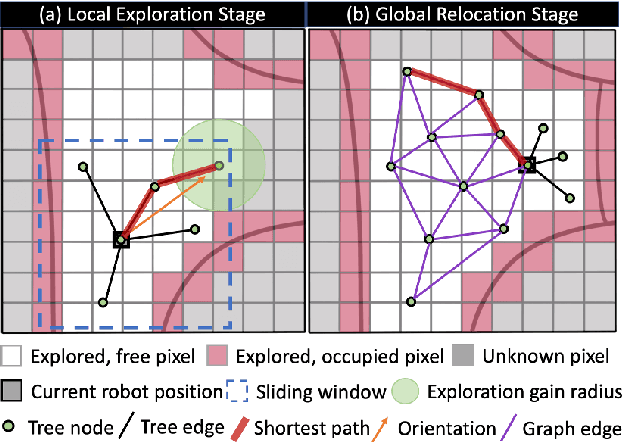

Apr 13, 2022

Abstract:In this paper, we propose an efficient frontier detector method based on adaptive Rapidly-exploring Random Tree (RRT) for autonomous robot exploration. Robots can achieve real-time incremental frontier detection when they are exploring unknown environments. First, our detector adaptively adjusts the sampling space of RRT by sensing the surrounding environment structure. The adaptive sampling space can greatly improve the successful sampling rate of RRT (the ratio of the number of samples successfully added to the RRT tree to the number of sampling attempts) according to the environment structure and control the expansion bias of the RRT. Second, by generating non-uniform distributed samples, our method also solves the over-sampling problem of RRT in the sliding windows, where uniform random sampling causes over-sampling in the overlap area between two adjacent sliding windows. In this way, our detector is more inclined to sample in the latest explored area, which improves the efficiency of frontier detection and achieves incremental detection. We validated our method in three simulated benchmark scenarios. The experimental comparison shows that we reduce the frontier detection runtime by about 40% compared with the SOTA method, DSV Planner.

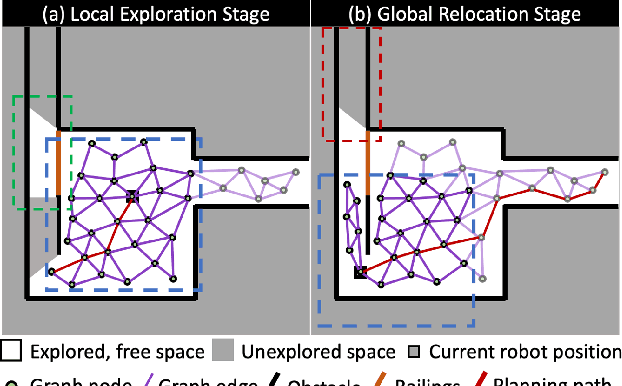

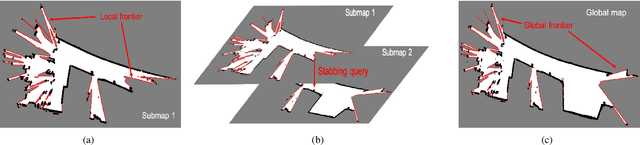

Frontier Detection and Reachability Analysis for Efficient 2D Graph-SLAM Based Active Exploration

Sep 07, 2020

Abstract:We propose an integrated approach to active exploration by exploiting the Cartographer method as the base SLAM module for submap creation and performing efficient frontier detection in the geometrically co-aligned submaps induced by graph optimization. We also carry out analysis on the reachability of frontiers and their clusters to ensure that the detected frontier can be reached by the robot. Our method is tested on a mobile robot in a real indoor scene to demonstrate the effectiveness and efficiency of our approach.
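Frontier detection followed by a reachability check can be sketched on a single small occupancy grid. This is a simplified illustration, not the method in the abstract: it assumes one 2D grid with cell values for free, occupied, and unknown space instead of Cartographer submaps, uses the textbook definition of a frontier (a free cell with an unknown 4-neighbour), and checks reachability with a breadth-first search over free cells. The names `find_frontiers`, `reachable_frontiers`, and the example grid are hypothetical.

```python
from collections import deque

FREE, OCCUPIED, UNKNOWN = 0, 1, -1
NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))

def find_frontiers(grid):
    """Return free cells that touch at least one unknown 4-neighbour."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in NEIGHBOURS:
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers

def reachable_frontiers(grid, start):
    """Keep only frontiers connected to start through free cells (BFS)."""
    rows, cols = len(grid), len(grid[0])
    seen = {start}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        for dr, dc in NEIGHBOURS:
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == FREE and (nr, nc) not in seen):
                seen.add((nr, nc))
                queue.append((nr, nc))
    return [cell for cell in find_frontiers(grid) if cell in seen]

# Column 3 is a wall, so the free cells in column 4 cannot be reached from (0, 0).
grid = [
    [0, 0, -1, 1,  0],
    [0, 1,  1, 1,  0],
    [0, 0,  0, 1, -1],
]
print(find_frontiers(grid))               # [(0, 1), (1, 4)]
print(reachable_frontiers(grid, (0, 0)))  # [(0, 1)]
```

The second frontier is detected but filtered out, because the robot starting at `(0, 0)` has no free path to it.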