Xiaonan Luo

FakeRadar: Probing Forgery Outliers to Detect Unknown Deepfake Videos

Dec 16, 2025

Abstract:In this paper, we propose FakeRadar, a novel deepfake video detection framework designed to address the challenges of cross-domain generalization in real-world scenarios. Existing detection methods typically rely on manipulation-specific cues, performing well on known forgery types but exhibiting severe limitations against emerging manipulation techniques. This poor generalization stems from their inability to adapt effectively to unseen forgery patterns. To overcome this, we leverage large-scale pretrained models (e.g., CLIP) to proactively probe the feature space, explicitly highlighting distributional gaps between real videos, known forgeries, and unseen manipulations. Specifically, FakeRadar introduces Forgery Outlier Probing, which employs dynamic subcluster modeling and cluster-conditional outlier generation to synthesize outlier samples near boundaries of estimated subclusters, simulating novel forgery artifacts beyond known manipulation types. Additionally, we design Outlier-Guided Tri-Training, which optimizes the detector to distinguish real, fake, and outlier samples using proposed outlier-driven contrastive learning and outlier-conditioned cross-entropy losses. Experiments show that FakeRadar outperforms existing methods across various benchmark datasets for deepfake video detection, particularly in cross-domain evaluations, by handling the variety of emerging manipulation techniques.

Better Datasets Start From RefineLab: Automatic Optimization for High-Quality Dataset Refinement

Nov 09, 2025

Abstract:High-quality Question-Answer (QA) datasets are foundational for reliable Large Language Model (LLM) evaluation, yet even expert-crafted datasets exhibit persistent gaps in domain coverage, misaligned difficulty distributions, and factual inconsistencies. The recent surge in generative model-powered datasets has compounded these quality challenges. In this work, we introduce RefineLab, the first LLM-driven framework that automatically refines raw QA textual data into high-quality datasets under a controllable token-budget constraint. RefineLab takes a set of target quality attributes (such as coverage and difficulty balance) as refinement objectives, and performs selective edits within a predefined token budget to ensure practicality and efficiency. In essence, RefineLab addresses a constrained optimization problem: improving the quality of QA samples as much as possible while respecting resource limitations. With a set of available refinement operations (e.g., rephrasing, distractor replacement), RefineLab takes as input the original dataset, a specified set of target quality dimensions, and a token budget, and determines which refinement operations should be applied to each QA sample. This process is guided by an assignment module that selects optimal refinement strategies to maximize overall dataset quality while adhering to the budget constraint. Experiments demonstrate that RefineLab consistently narrows divergence from expert datasets across coverage, difficulty alignment, factual fidelity, and distractor quality. RefineLab pioneers a scalable, customizable path to reproducible dataset design, with broad implications for LLM evaluation.
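
The budget-constrained assignment described in this abstract resembles a knapsack-style selection problem. The following is a minimal sketch of that idea, not RefineLab's actual assignment module: the `Candidate` type, the `gain` and `cost` estimates, and the greedy gain-per-token rule are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    sample_id: int   # index of the QA sample
    operation: str   # e.g. "rephrase" or "replace_distractor"
    gain: float      # hypothetical estimated quality gain of the edit
    cost: int        # hypothetical token cost of the edit (must be > 0)


def assign_operations(candidates, token_budget):
    """Greedy heuristic: at most one operation per sample,
    taken in order of estimated gain per token, within the budget."""
    chosen = {}
    spent = 0
    ranked = sorted(candidates, key=lambda c: c.gain / c.cost, reverse=True)
    for c in ranked:
        if c.sample_id in chosen:
            continue  # this sample already has an operation assigned
        if spent + c.cost > token_budget:
            continue  # this edit would exceed the token budget
        chosen[c.sample_id] = c.operation
        spent += c.cost
    return chosen, spent
```

A greedy ratio rule is only a heuristic for this kind of problem; an exact solver or a learned policy could replace it without changing the interface.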

VLDrive: Vision-Augmented Lightweight MLLMs for Efficient Language-grounded Autonomous Driving

Nov 09, 2025

Abstract:Recent advancements in language-grounded autonomous driving have been significantly promoted by the sophisticated cognition and reasoning capabilities of large language models (LLMs). However, current LLM-based approaches encounter critical challenges: (1) Failure analysis reveals that frequent collisions and obstructions, stemming from limitations in visual representations, remain primary obstacles to robust driving performance. (2) The substantial parameters of LLMs pose considerable deployment hurdles. To address these limitations, we introduce VLDrive, a novel approach featuring a lightweight MLLM architecture with enhanced vision components. VLDrive achieves compact visual tokens through innovative strategies, including cycle-consistent dynamic visual pruning and memory-enhanced feature aggregation. Furthermore, we propose a distance-decoupled instruction attention mechanism to improve joint visual-linguistic feature learning, particularly for long-range visual tokens. Extensive experiments conducted in the CARLA simulator demonstrate VLDrive's effectiveness. Notably, VLDrive achieves state-of-the-art driving performance while reducing parameters by 81% (from 7B to 1.3B), yielding substantial driving score improvements of 15.4%, 16.8%, and 7.6% at tiny, short, and long distances, respectively, in closed-loop evaluations. Code is available at https://github.com/ReaFly/VLDrive.

LGM-Pose: A Lightweight Global Modeling Network for Real-time Human Pose Estimation

Jun 05, 2025

Abstract:Most current lightweight top-down multi-person pose estimation methods are based on multi-branch parallel pure-CNN architectures, which often struggle to capture the global context required for detecting semantically complex keypoints and are hindered by high latency due to their intricate and redundant structures. In this article, an approximate single-branch lightweight global modeling network (LGM-Pose) is proposed to address these challenges. In the network, a lightweight MobileViM Block is designed with a proposed Lightweight Attentional Representation Module (LARM), which integrates information within and between patches using the Non-Parametric Transformation Operation (NPT-Op) to extract global information. Additionally, a novel Shuffle-Integrated Fusion Module (SFusion) is introduced to effectively integrate multi-scale information, mitigating performance degradation often observed in single-branch structures. Experimental evaluations on the COCO and MPII datasets demonstrate that our approach not only reduces the number of parameters compared to existing mainstream lightweight methods but also achieves superior performance and faster processing speeds.

AdaReasoner: Adaptive Reasoning Enables More Flexible Thinking

May 22, 2025

Abstract:LLMs often need effective configurations, like temperature and reasoning steps, to handle tasks requiring sophisticated reasoning and problem-solving, ranging from joke generation to mathematical reasoning. Existing prompting approaches usually adopt general-purpose, fixed configurations that work 'well enough' across tasks but seldom achieve task-specific optimality. To address this gap, we introduce AdaReasoner, an LLM-agnostic plugin designed for any LLM to automate adaptive reasoning configurations for tasks requiring different types of thinking. AdaReasoner is trained using a reinforcement learning (RL) framework, combining a factorized action space with a targeted exploration strategy, along with a pretrained reward model to optimize the policy model for reasoning configurations with only a few-shot guide. AdaReasoner is backed by theoretical guarantees and experiments of fast convergence and a sublinear policy gap. Across six different LLMs and a variety of reasoning tasks, it consistently outperforms standard baselines, preserves out-of-distribution robustness, and yields gains on knowledge-intensive tasks through tailored prompts.

MorphText: Deep Morphology Regularized Arbitrary-shape Scene Text Detection

Apr 26, 2024

Abstract:Bottom-up text detection methods play an important role in arbitrary-shape scene text detection, but two restrictions prevent them from achieving their full potential: 1) the accumulation of false text segment detections, which affects subsequent processing, and 2) the difficulty of building reliable connections between text segments. Targeting these two problems, we propose a novel approach, named "MorphText", to capture the regularity of texts by embedding deep morphology for arbitrary-shape text detection. Towards this end, two deep morphological modules are designed to regularize text segments and determine the linkage between them. First, a Deep Morphological Opening (DMOP) module is constructed to remove false text segment detections generated in the feature extraction process. Then, a Deep Morphological Closing (DMCL) module is proposed to allow text instances of various shapes to stretch their morphology along their most significant orientation while deriving their connections. Extensive experiments conducted on four challenging benchmark datasets (CTW1500, Total-Text, MSRA-TD500 and ICDAR2017) demonstrate that our proposed MorphText outperforms both top-down and bottom-up state-of-the-art arbitrary-shape scene text detection approaches.
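
For intuition about the names DMOP and DMCL, the classical binary morphological operations they allude to can be sketched as follows. This is not the paper's learned modules: it is a plain-Python illustration with a fixed 3x3 structuring element and zero padding at the image border, both of which are assumptions chosen for brevity.

```python
def _apply(mask, reducer):
    """Apply `reducer` (all or any) over each pixel's 3x3 neighbourhood.
    Pixels outside the image are treated as 0."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                mask[ny][nx] if 0 <= ny < h and 0 <= nx < w else 0
                for ny in range(y - 1, y + 2)
                for nx in range(x - 1, x + 2)
            ]
            out[y][x] = int(reducer(window))
    return out


def erode(mask):
    return _apply(mask, all)   # keep a pixel only if its whole window is set


def dilate(mask):
    return _apply(mask, any)   # set a pixel if any pixel in its window is set


def opening(mask):
    return dilate(erode(mask))  # removes specks smaller than the element


def closing(mask):
    return erode(dilate(mask))  # bridges gaps narrower than the element
```

Opening deletes an isolated pixel while preserving a solid 3x3 block, which parallels removing false segment detections; closing fills a one-pixel gap between two blocks, which parallels linking neighbouring text segments.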

Gland segmentation via dual encoders and boundary-enhanced attention

Jan 29, 2024

Abstract:Accurate and automated gland segmentation on pathological images can assist pathologists in diagnosing the malignancy of colorectal adenocarcinoma. However, gland segmentation remains very challenging because of the variety of gland shapes, the severe deformation of malignant glands, and overlapping adhesions between glands. To address these problems, we propose a DEA model. This model consists of two branches: the backbone encoding and decoding network and the local semantic extraction network. The backbone encoding and decoding network extracts advanced semantic features, uses the proposed feature decoder to restore feature space information, and then enhances the boundary features of the gland through boundary enhancement attention. The local semantic extraction network uses the pre-trained DeepLabv3+ as a local semantic-guided encoder to extract edge features. Experimental results on two public datasets, GlaS and CRAG, confirm that our method performs better than other gland segmentation methods.

A novel dataset and a two-stage mitosis nuclei detection method based on hybrid anchor branch

Jan 18, 2023

Abstract:Mitosis detection is one of the challenging problems in computational pathology, and mitotic count is an important index of cancer grading for pathologists. However, current counts of mitotic nuclei rely on pathologists counting mitotic nuclei in hot spots under the microscope, which is subjective and time-consuming. In this paper, we propose a two-stage cascaded network, named FoCasNet, for mitosis detection. In the first stage, a detection network named M_det is proposed to detect as many mitoses as possible. In the second stage, a classification network M_class is proposed to refine the results of the first stage. In addition, the attention mechanism, normalization method, and hybrid anchor branch classification subnet are introduced to improve the overall detection performance. Our method achieves the current highest F1-score of 0.888 on the public dataset ICPR 2012. We also evaluated our method on the GZMH dataset, released by our research team for the first time, and reached the highest F1-score of 0.563, which is also better than multiple classic detection networks widely used at present. These results confirm the effectiveness and generalization of our method. The code will be available at: https://github.com/antifen/mitosis-nuclei-detection.
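
The F1-score reported above is the standard harmonic mean of precision and recall. A small sketch of how it is computed from detection counts follows; the function name and the example counts are illustrative and do not come from the paper.

```python
def f1_score(true_positives, false_positives, false_negatives):
    """F1 = 2*TP / (2*TP + FP + FN), the harmonic mean of precision and recall.
    Returns 0.0 when there are no detections and no ground-truth objects."""
    denom = 2 * true_positives + false_positives + false_negatives
    return 2 * true_positives / denom if denom else 0.0
```

With this metric, a first stage tuned for high recall tends to add false positives, and a second-stage classifier that rejects them raises precision, which is how a two-stage cascade can improve the combined score.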

A Novel Dataset and a Deep Learning Method for Mitosis Nuclei Segmentation and Classification

Dec 27, 2022

Abstract:Mitosis nuclei count is one of the important indicators for the pathological diagnosis of breast cancer. Manual annotation requires experienced pathologists and is very time-consuming and inefficient. With the development of deep learning methods, some models with good performance have emerged, but their generalization ability should be further strengthened. In this paper, we propose a two-stage mitosis segmentation and classification method, named SCMitosis. First, segmentation with a high recall rate is achieved by the proposed depthwise separable convolution residual block and channel-spatial attention gate. Then, a classification network is cascaded to further improve the detection performance of mitosis nuclei. The proposed model is verified on the ICPR 2012 dataset, where it obtains the highest F-score, 0.8687, among current state-of-the-art algorithms. In addition, the model also achieves good performance on the GZMH dataset, which was prepared by our group and will be released for the first time with the publication of this paper. The code will be available at: https://github.com/antifen/mitosis-nuclei-segmentation.

Binary Representation via Jointly Personalized Sparse Hashing

Aug 31, 2022

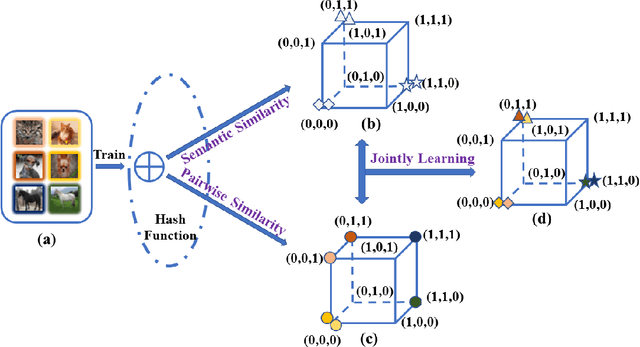

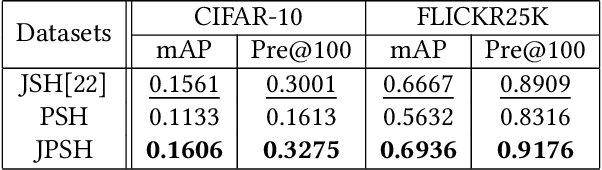

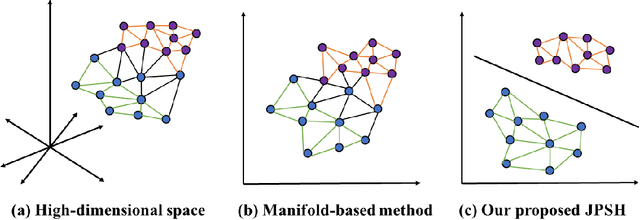

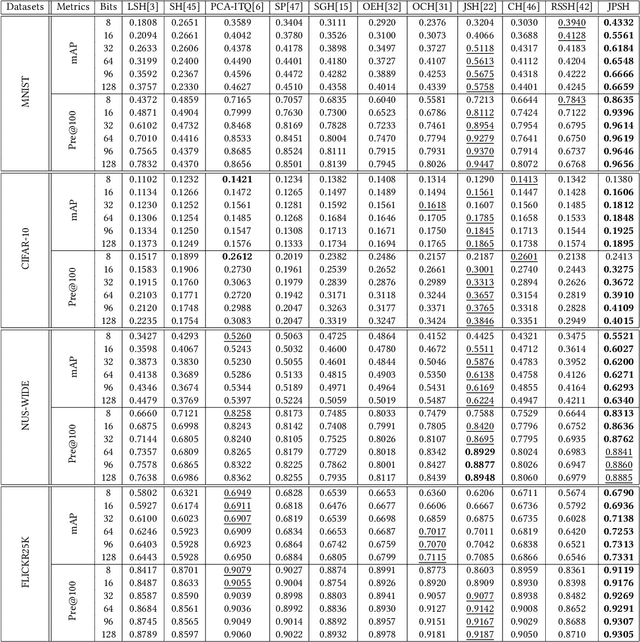

Abstract:Unsupervised hashing has attracted much attention for binary representation learning due to the requirement of economical storage and efficiency of binary codes. It aims to encode high-dimensional features in the Hamming space with similarity preservation between instances. However, most existing methods learn hash functions in manifold-based approaches. Those methods capture the local geometric structures (i.e., pairwise relationships) of data, and lack satisfactory performance in dealing with real-world scenarios that produce similar features (e.g., color and shape) with different semantic information. To address this challenge, in this work, we propose an effective unsupervised method, namely Jointly Personalized Sparse Hashing (JPSH), for binary representation learning. Specifically, we first propose a novel personalized hashing module, i.e., Personalized Sparse Hashing (PSH). Different personalized subspaces are constructed to reflect category-specific attributes for different clusters, adaptively mapping instances within the same cluster to the same Hamming space. In addition, we deploy sparse constraints for different personalized subspaces to select important features. We also draw on the strengths of the other clusters when building the PSH module, which helps avoid over-fitting. Then, to simultaneously preserve semantic and pairwise similarities in our JPSH, we incorporate the PSH and manifold-based hash learning into a seamless formulation. As such, JPSH not only distinguishes the instances from different clusters, but also preserves local neighborhood structures within the cluster. Finally, an alternating optimization algorithm is adopted to iteratively capture analytical solutions of the JPSH model. Extensive experiments on four benchmark datasets verify that the JPSH outperforms several hashing algorithms on the similarity search task.
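
To make "encoding features in the Hamming space" concrete, here is a minimal sketch of sign-based binary coding and Hamming-distance comparison. It is not JPSH: the fixed `projections` matrix stands in for a learned hash function, and all names are hypothetical.

```python
def binarize(features, projections):
    """Map a real-valued vector to an integer bit code:
    one bit per projection row, set when the dot product is non-negative."""
    code = 0
    for row in projections:
        dot = sum(f * p for f, p in zip(features, row))
        code = (code << 1) | (1 if dot >= 0 else 0)
    return code


def hamming(a, b):
    """Number of bit positions in which two codes differ."""
    return bin(a ^ b).count("1")
```

Similarity search then reduces to comparing compact integer codes with XOR and a bit count, which is the storage and speed advantage the abstract refers to; nearby feature vectors should receive codes with a small Hamming distance.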
