Wang Chen

MLOps Spanning Whole Machine Learning Life Cycle: A Survey

Apr 13, 2023

Abstract: Google AlphaGo's win has significantly motivated and accelerated machine learning (ML) research and development, leading to tremendous technical advances and wider adoption in various domains (e.g., finance, health, defense, and education). These advances have produced numerous new concepts and technologies, too many for people to keep up with and often confusing, especially for newcomers to the ML area. This paper aims to present a clear picture of the state of the art of existing ML technologies through a comprehensive survey. We organize the survey by viewing ML as an MLOps (ML Operations) process, in which the key concepts and activities are collected and elaborated with representative works and surveys. We hope that this paper can serve as a quick reference manual (a survey of surveys) for newcomers to ML (e.g., researchers and practitioners), giving them an overview of the MLOps process, a good understanding of the key technologies used in each step of the ML process, and pointers to where more details can be found.

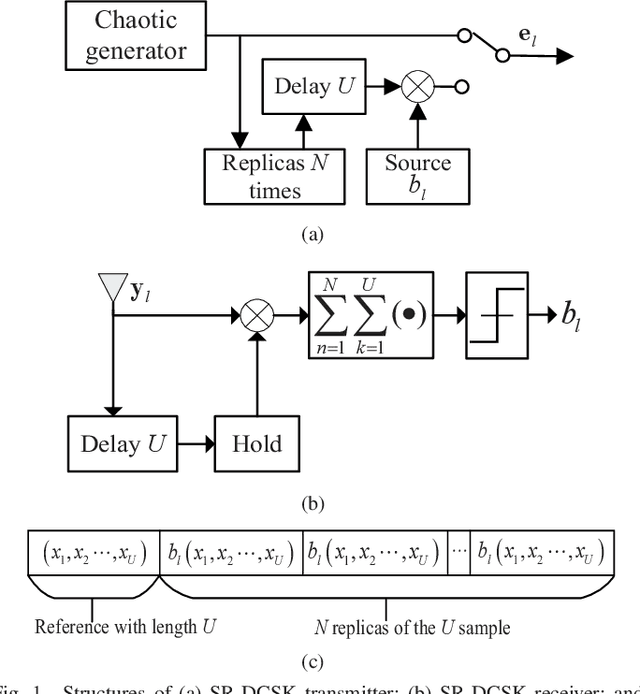

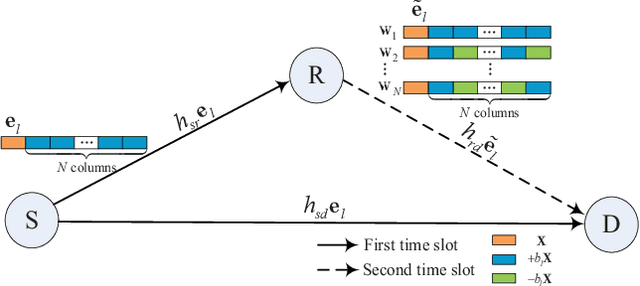

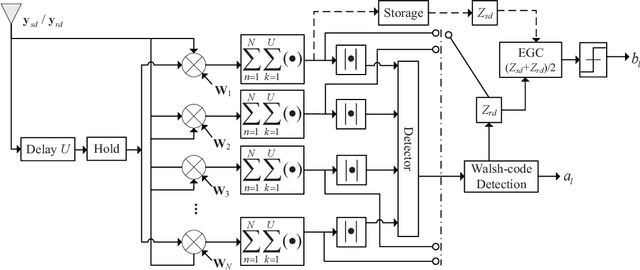

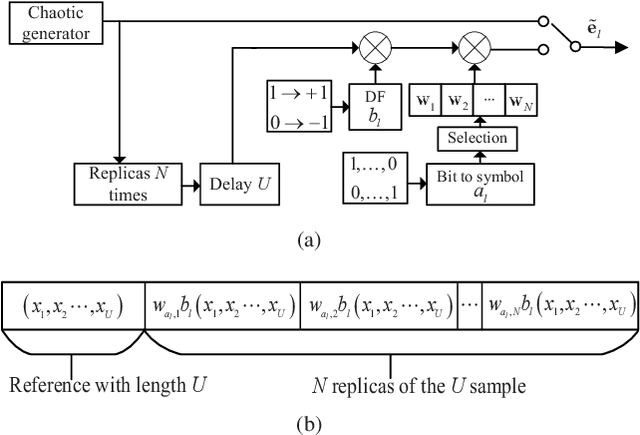

SR-DCSK Cooperative Communication System with Code Index Modulation: A New Design for 6G New Radios

Aug 27, 2022

Abstract: This paper proposes a high-throughput short reference differential chaos shift keying cooperative communication system aided by code index modulation, referred to as the CIM-SR-DCSK-CC system. In the proposed CIM-SR-DCSK-CC system, the source transmits information bits to both the relay and the destination in the first time slot, while the relay not only forwards the source information bits but also sends new information bits to the destination in the second time slot. Specifically, the relay employs an $N$-order Walsh code to carry an additional $\log_2 N$ information bits, which are superimposed onto the SR-DCSK signal carrying the decoded source information bits. The superimposed signal, carrying both the source and relay information bits, is then transmitted to the destination. Moreover, theoretical bit error rate (BER) expressions for the proposed CIM-SR-DCSK-CC system are derived over additive white Gaussian noise (AWGN) and multipath Rayleigh fading channels. Compared with the conventional DCSK-CC and SR-DCSK-CC systems, the proposed CIM-SR-DCSK-CC system significantly improves throughput without degrading BER performance. As a consequence, the proposed system is very promising for 6G-enabled low-power, high-rate communication.
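
The index-mapping idea in this abstract, where choosing one of $N$ Walsh codes conveys $\log_2 N$ bits, can be illustrated with a minimal sketch. It assumes the Walsh codes are the rows of a Sylvester-type Hadamard matrix and that the receiver picks the code with the largest correlation; the function names are hypothetical, and the chaotic SR-DCSK signal, the superposition step, and the paper's actual detector are not modeled.

```python
import numpy as np

def walsh_codes(n):
    """Return an n x n Sylvester-Hadamard matrix; its rows are orthogonal +/-1 codes."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of two")
    h = np.array([[1]])
    while h.shape[0] < n:
        # Sylvester construction: double the order at each step.
        h = np.block([[h, h], [h, -h]])
    return h

def bits_to_index(bits):
    """Interpret a list of 0/1 values (most significant bit first) as an integer."""
    idx = 0
    for b in bits:
        idx = (idx << 1) | b
    return idx

def index_to_bits(idx, width):
    """Inverse of bits_to_index for a fixed bit width."""
    return [(idx >> s) & 1 for s in range(width - 1, -1, -1)]

def select_code(bits, codes):
    """Map log2(N) bits to one of the N code rows (the 'code index')."""
    return codes[bits_to_index(bits)]

def detect_bits(received, codes):
    """Recover the bits by choosing the code with the largest correlation."""
    width = codes.shape[0].bit_length() - 1
    idx = int(np.argmax(codes @ received))
    return index_to_bits(idx, width)

codes = walsh_codes(4)             # N = 4, so each code choice carries 2 bits
tx = select_code([1, 0], codes)    # noiseless toy transmission
rx_bits = detect_bits(tx, codes)
```

Because the rows are mutually orthogonal, the correct code correlates to $N$ and every other code to zero in the noiseless case, which is why the index can be read back.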

Global Mixup: Eliminating Ambiguity with Clustering

Jun 06, 2022

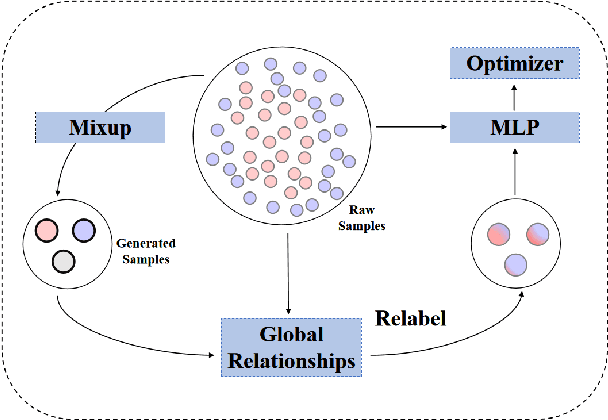

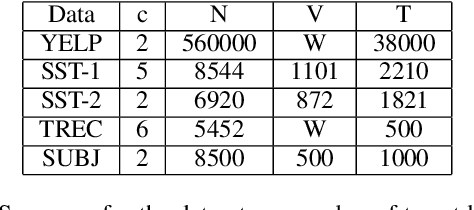

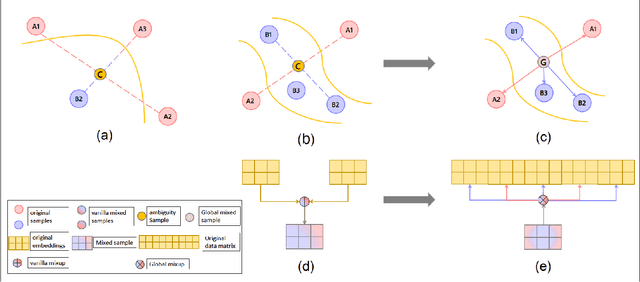

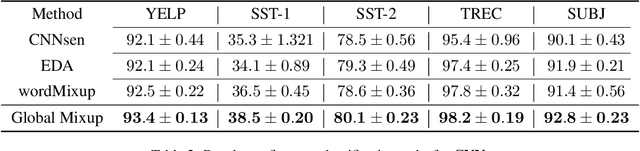

Abstract: Data augmentation with Mixup has proven to be an effective method for regularizing deep neural networks. Mixup generates virtual samples and their labels in a single step through linear interpolation. However, this one-stage generation paradigm and its reliance on linear interpolation have two defects: (1) the label of a generated sample is combined directly from the labels of the original sample pair without further judgment, which makes the labels likely to be ambiguous; (2) linear combination significantly limits the sampling space for generating samples. To tackle these problems, we propose a novel and effective augmentation method based on global clustering relationships, named Global Mixup. Specifically, we transform the previous one-stage augmentation process into a two-stage one, decoupling the generation of virtual samples from their labeling. The generated samples are then relabeled based on clustering, by calculating their global relationships. In addition, we are no longer limited to linear relationships and can generate more reliable virtual samples in a larger sampling space. Extensive experiments with CNN, LSTM, and BERT on five tasks show that Global Mixup significantly outperforms previous state-of-the-art baselines. Further experiments also demonstrate the advantage of Global Mixup in low-resource scenarios.
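
For reference, the one-stage linear interpolation that this abstract criticizes can be sketched as follows. This is vanilla Mixup under the common assumption that the mixing coefficient is drawn from a Beta(alpha, alpha) distribution; it is not Global Mixup's two-stage relabeling, and the function names are illustrative.

```python
import numpy as np

def sample_lambda(alpha, rng):
    """Draw a mixing coefficient in [0, 1] from Beta(alpha, alpha) (assumed choice)."""
    return float(rng.beta(alpha, alpha))

def mixup_pair(x_i, y_i, x_j, y_j, lam):
    """Vanilla Mixup: interpolate the inputs and the one-hot labels with the same weight."""
    x = lam * x_i + (1.0 - lam) * x_j
    y = lam * y_i + (1.0 - lam) * y_j
    return x, y

rng = np.random.default_rng(0)
lam = sample_lambda(0.4, rng)
x_a, y_a = np.array([1.0, 0.0]), np.array([1.0, 0.0])   # sample of class 0
x_b, y_b = np.array([0.0, 1.0]), np.array([0.0, 1.0])   # sample of class 1
x_mix, y_mix = mixup_pair(x_a, y_a, x_b, y_b, lam)
```

The label `y_mix` is fixed entirely by `lam` and the two source labels, regardless of where `x_mix` lands relative to other samples, which is the ambiguity the abstract points to.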

A novel multiobjective evolutionary algorithm based on decomposition and multi-reference points strategy

Nov 11, 2021

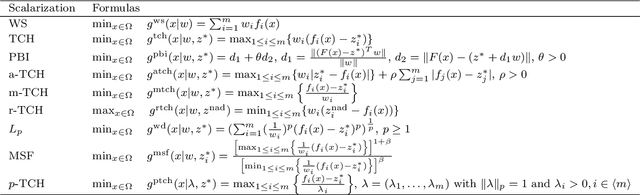

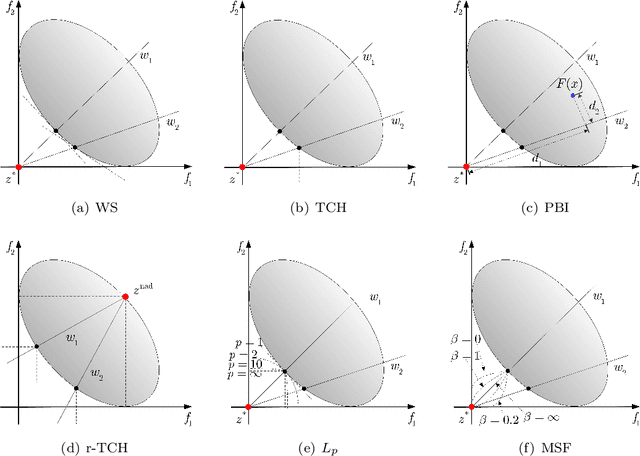

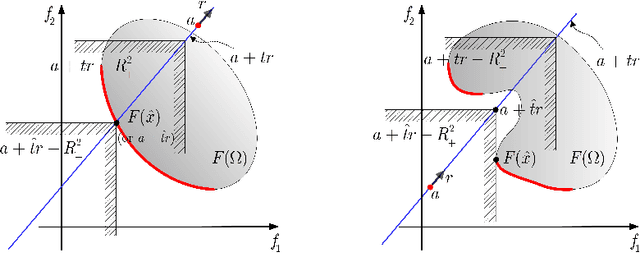

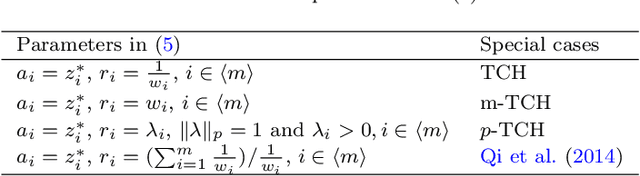

Abstract: Many real-world optimization problems, such as engineering design, can be modeled as multiobjective optimization problems (MOPs), which must be solved to obtain approximate Pareto optimal fronts. The multiobjective evolutionary algorithm based on decomposition (MOEA/D) has been regarded as a highly promising approach for solving MOPs. Recent studies have shown that MOEA/D with uniform weight vectors is well suited to MOPs with regular Pareto optimal fronts, but its performance in terms of diversity usually deteriorates when solving MOPs with irregular Pareto optimal fronts. As a result, the solution set obtained by the algorithm cannot provide decision makers with a sufficiently reasonable range of choices. To overcome this drawback efficiently, we propose an improved MOEA/D algorithm based on the well-known Pascoletti-Serafini scalarization method and a new multi-reference-points strategy. Specifically, this strategy consists of the setting and adaptation of reference points generated by equidistant partition and projection techniques. For performance assessment, the proposed algorithm is compared with four existing state-of-the-art multiobjective evolutionary algorithms on benchmark test problems with various types of Pareto optimal fronts. According to the experimental results, the proposed algorithm exhibits better diversity performance than the other compared algorithms. Finally, our algorithm is successfully applied to two real-world MOPs in engineering optimization.

Dialogue Summarization with Supporting Utterance Flow Modeling and Fact Regularization

Aug 03, 2021

Abstract: Dialogue summarization aims to generate a summary that indicates the key points of a given dialogue. In this work, we propose an end-to-end neural model for dialogue summarization with two novel modules, namely the supporting utterance flow modeling module and the fact regularization module. The supporting utterance flow modeling helps to generate a coherent summary by smoothly shifting the focus from the earlier utterances to the later ones. The fact regularization encourages the generated summary to be factually consistent with the ground-truth summary during model training, which helps to improve the factual correctness of the generated summary at inference time. Furthermore, we introduce a new benchmark dataset for dialogue summarization. Extensive experiments on both existing and newly introduced datasets demonstrate the effectiveness of our model.

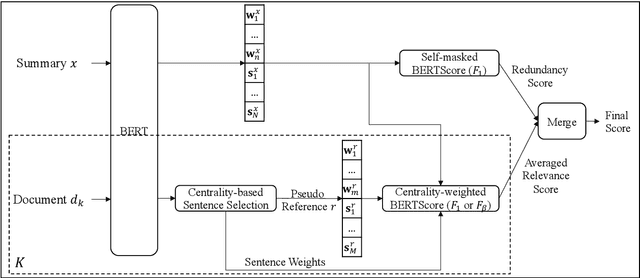

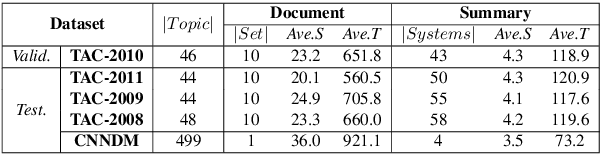

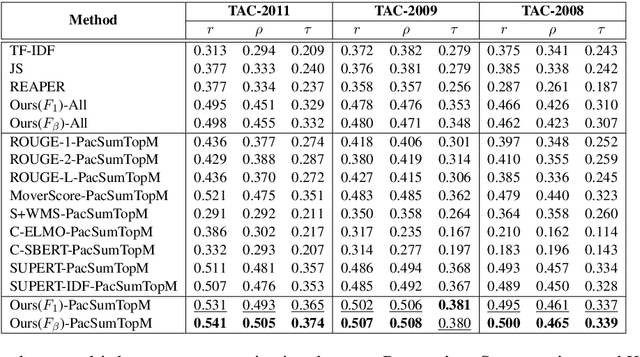

A Training-free and Reference-free Summarization Evaluation Metric via Centrality-weighted Relevance and Self-referenced Redundancy

Jun 26, 2021

Abstract: In recent years, reference-based and supervised summarization evaluation metrics have been widely explored. However, collecting human-annotated references and ratings is costly and time-consuming. To avoid these limitations, we propose a training-free and reference-free summarization evaluation metric. Our metric consists of a centrality-weighted relevance score and a self-referenced redundancy score. The relevance score is computed between the given summary and a pseudo reference built from the source document, where the pseudo reference content is weighted by sentence centrality to provide importance guidance. Besides an $F_1$-based relevance score, we also design an $F_\beta$-based variant that pays more attention to the recall score. As for the redundancy score of the summary, we compute a self-masked similarity score of the summary with itself to evaluate the redundant information in the summary. Finally, we combine the relevance and redundancy scores to produce the final evaluation score of the given summary. Extensive experiments show that our methods can significantly outperform existing methods on both multi-document and single-document summarization evaluation.
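
As a reminder of why an $F_\beta$ variant shifts attention toward recall, the standard definition is shown below, with $P$ and $R$ denoting precision and recall of the summary against the pseudo reference. This is the textbook form and is only assumed to match the paper's variant; the abstract does not give the exact formula.

$$
F_\beta = \frac{(1+\beta^2)\, P\, R}{\beta^2 P + R}
$$

Setting $\beta = 1$ recovers $F_1 = 2PR/(P+R)$, while $\beta > 1$ weights recall more heavily than precision.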

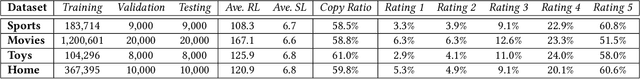

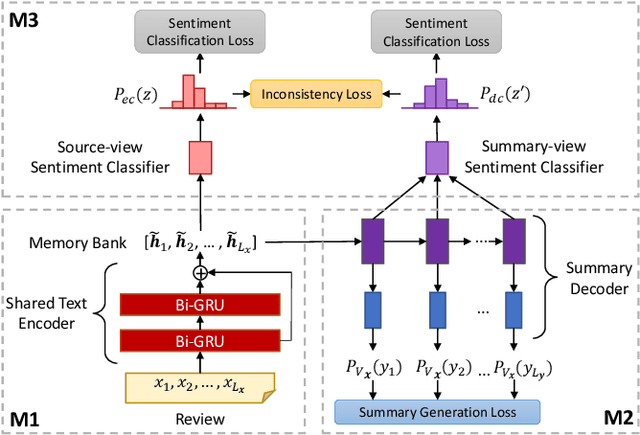

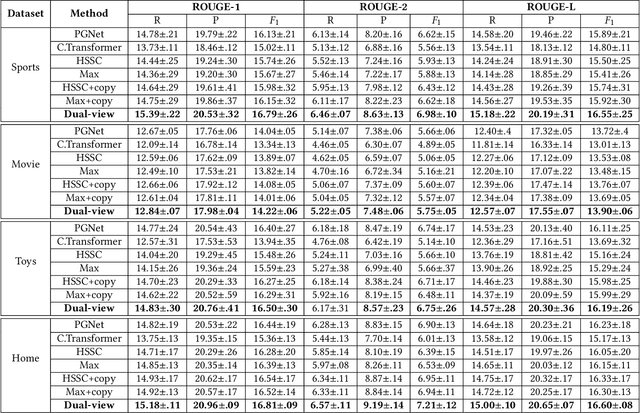

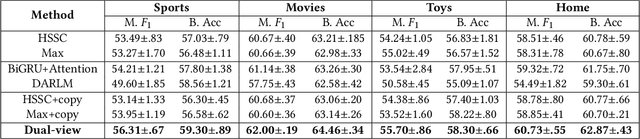

A Unified Dual-view Model for Review Summarization and Sentiment Classification with Inconsistency Loss

Jun 02, 2020

Abstract: Acquiring accurate summaries and sentiment from user reviews is an essential component of modern e-commerce platforms. Review summarization aims to generate a concise summary that describes the key opinions and sentiment of a review, while sentiment classification aims to predict a label indicating the sentiment attitude of a review. To effectively leverage the sentiment information shared by the review summarization and sentiment classification tasks, we propose a novel dual-view model that jointly improves the performance of both tasks. In our model, an encoder first learns a context representation for the review, then a summary decoder generates a review summary word by word. After that, a source-view sentiment classifier uses the encoded context representation to predict a sentiment label for the review, while a summary-view sentiment classifier uses the decoder hidden states to predict a sentiment label for the generated summary. During training, we introduce an inconsistency loss to penalize the disagreement between these two classifiers. It helps the decoder generate a summary whose sentiment tendency is consistent with that of the review, and also helps the two sentiment classifiers learn from each other. Experimental results on four real-world datasets from different domains demonstrate the effectiveness of our model.
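
A penalty on the disagreement between two classifiers can be sketched as a divergence between their predicted label distributions. The sketch below assumes a KL divergence from the source-view distribution to the summary-view distribution; the abstract does not state which measure is used, so this choice, the function name, and the clipping constant are all illustrative.

```python
import numpy as np

def inconsistency_loss(p_source, p_summary, eps=1e-12):
    """KL(p_source || p_summary) between two sentiment label distributions.

    Assumed form of the disagreement penalty; inputs are probability vectors.
    """
    # Clip to avoid log(0) and division by zero.
    p = np.clip(np.asarray(p_source, dtype=float), eps, 1.0)
    q = np.clip(np.asarray(p_summary, dtype=float), eps, 1.0)
    return float(np.sum(p * np.log(p / q)))

agree = inconsistency_loss([0.7, 0.2, 0.1], [0.7, 0.2, 0.1])   # identical views
disagree = inconsistency_loss([0.9, 0.1], [0.5, 0.5])          # conflicting views
```

The loss is zero when both views predict the same distribution and grows as they diverge, so adding it to the training objective pushes the two classifiers toward consistent sentiment predictions.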

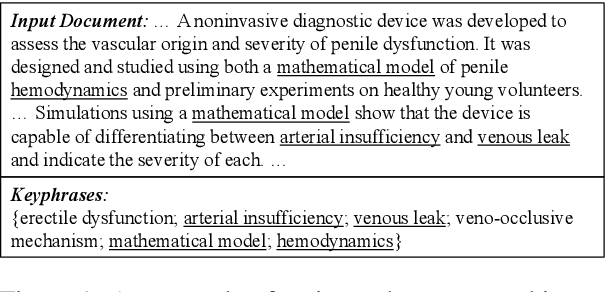

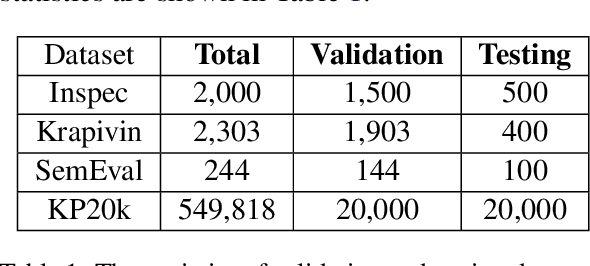

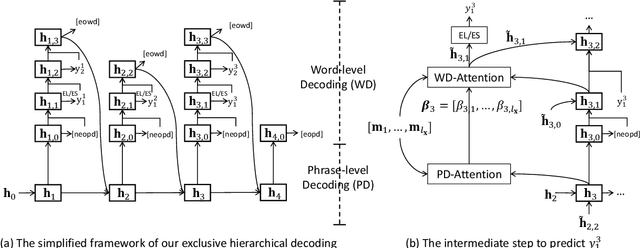

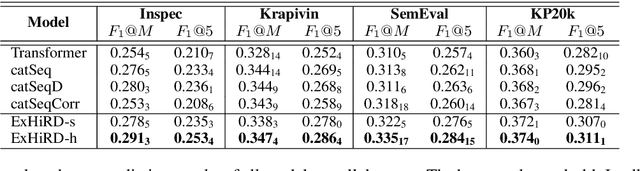

Exclusive Hierarchical Decoding for Deep Keyphrase Generation

Apr 18, 2020

Abstract: Keyphrase generation (KG) aims to summarize the main ideas of a document into a set of keyphrases. A new setting was recently introduced for this problem, in which, given a document, the model needs to predict a set of keyphrases and simultaneously determine the appropriate number of keyphrases to produce. Previous work in this setting employs a sequential decoding process to generate keyphrases. However, such a decoding method ignores the intrinsic hierarchical compositionality of the keyphrase set of a document. Moreover, previous work tends to generate duplicated keyphrases, which wastes time and computing resources. To overcome these limitations, we propose an exclusive hierarchical decoding framework that includes a hierarchical decoding process and either a soft or a hard exclusion mechanism. The hierarchical decoding process explicitly models the hierarchical compositionality of a keyphrase set. Both the soft and the hard exclusion mechanisms keep track of previously predicted keyphrases within a window size to enhance the diversity of the generated keyphrases. Extensive experiments on multiple KG benchmark datasets demonstrate the effectiveness of our method in generating fewer duplicated and more accurate keyphrases.

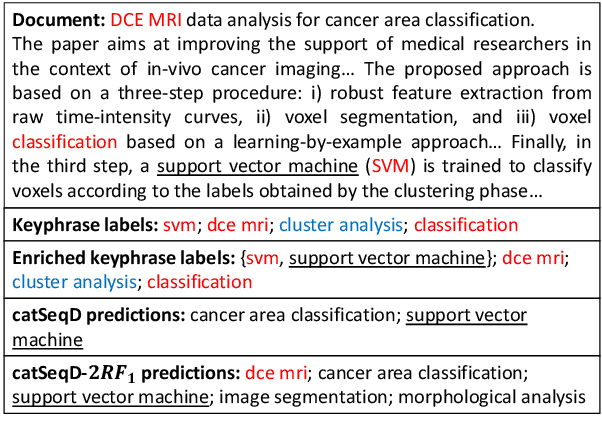

Neural Keyphrase Generation via Reinforcement Learning with Adaptive Rewards

Jun 10, 2019

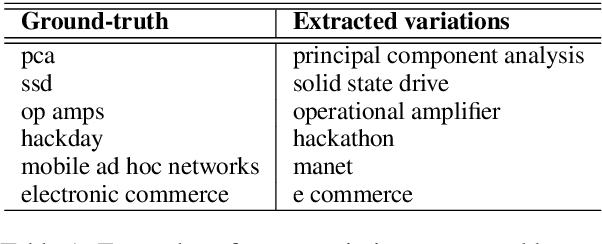

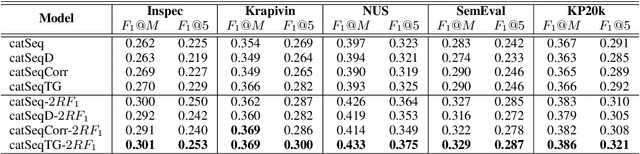

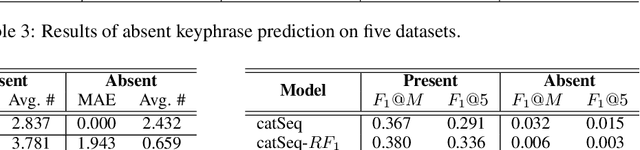

Abstract: Generating keyphrases that summarize the main points of a document is a fundamental task in natural language processing. Although existing generative models are capable of predicting multiple keyphrases for an input document as well as determining the number of keyphrases to generate, they still suffer from the problem of generating too few keyphrases. To address this problem, we propose a reinforcement learning (RL) approach for keyphrase generation, with an adaptive reward function that encourages a model to generate both sufficient and accurate keyphrases. Furthermore, we introduce a new evaluation method that incorporates name variations of the ground-truth keyphrases using the Wikipedia knowledge base. Thus, our evaluation method can more robustly evaluate the quality of predicted keyphrases. Extensive experiments on five real-world datasets of different scales demonstrate that our RL approach consistently and significantly improves the performance of the state-of-the-art generative models with both conventional and new evaluation methods.

An Integrated Approach for Keyphrase Generation via Exploring the Power of Retrieval and Extraction

Apr 06, 2019

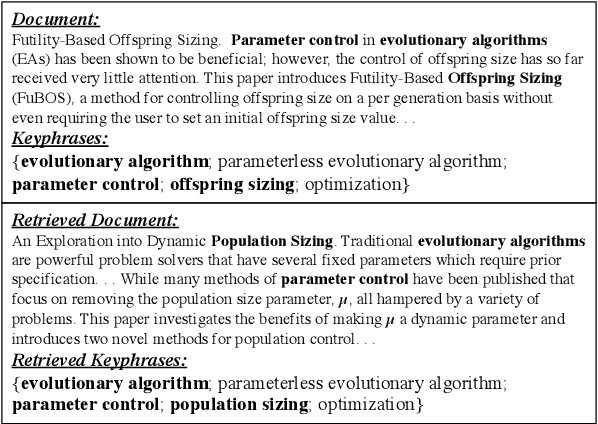

Abstract: In this paper, we present a novel integrated approach for keyphrase generation (KG). Unlike previous works, which are purely extractive or generative, we first propose a new multi-task learning framework that jointly learns an extractive model and a generative model. Besides extracting keyphrases, the output of the extractive model is also employed to rectify the copy probability distribution of the generative model, so that the generative model can better identify important content in the given document. Moreover, we retrieve documents similar to the given document from the training data and use their associated keyphrases as external knowledge for the generative model to produce more accurate keyphrases. To further exploit the power of extraction and retrieval, we propose a neural-based merging module to combine and re-rank the predicted keyphrases from the enhanced generative model, the extractive model, and the retrieved keyphrases. Experiments on five KG benchmarks demonstrate that our integrated approach outperforms the state-of-the-art methods.
