Qian Du

Superpixel-guided Discriminative Low-rank Representation of Hyperspectral Images for Classification

Aug 25, 2021

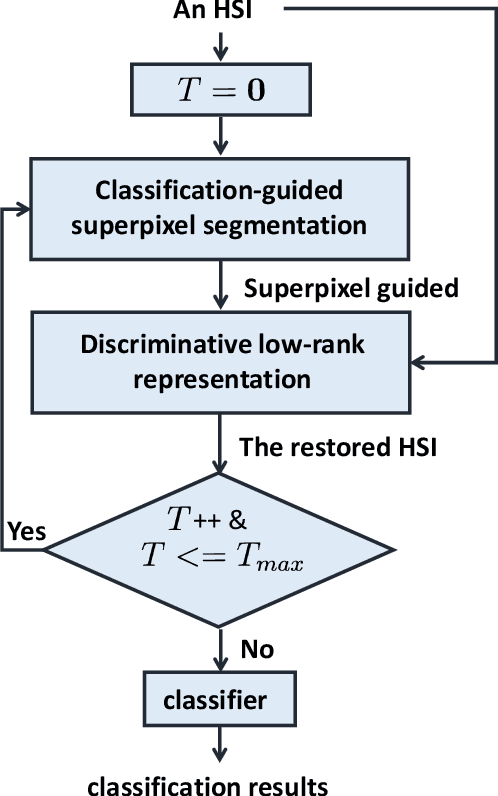

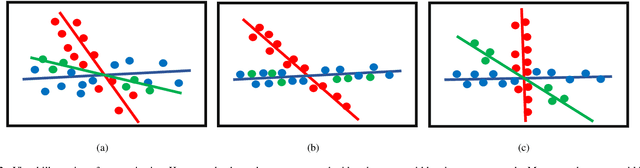

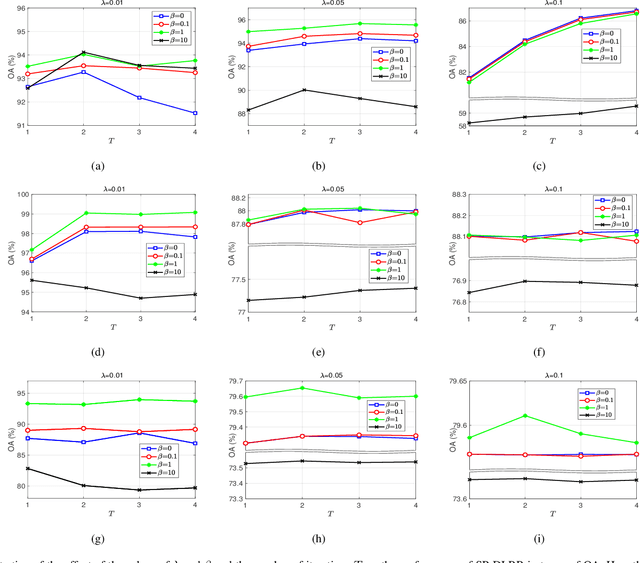

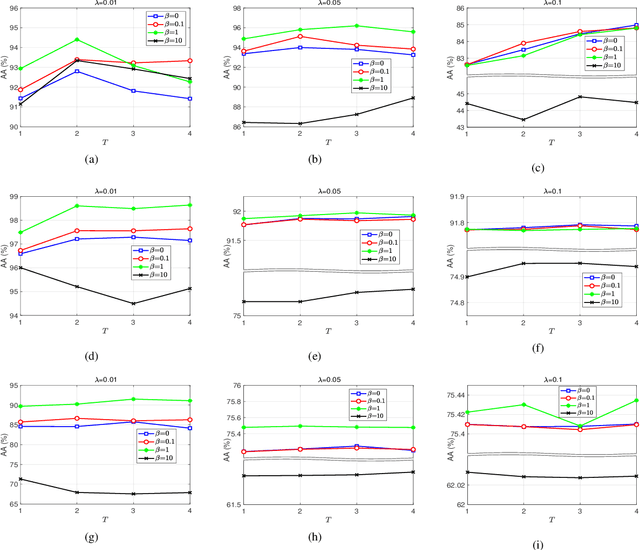

Abstract: In this paper, we propose a novel classification scheme for the remotely sensed hyperspectral image (HSI), namely SP-DLRR, by comprehensively exploring its unique characteristics, including the local spatial information and low-rankness. SP-DLRR is mainly composed of two modules, i.e., the classification-guided superpixel segmentation and the discriminative low-rank representation, which are iteratively conducted. Specifically, by utilizing the local spatial information and incorporating the predictions from a typical classifier, the first module segments pixels of an input HSI (or its restoration generated by the second module) into superpixels. According to the resulting superpixels, the pixels of the input HSI are then grouped into clusters and fed into our novel discriminative low-rank representation model with an effective numerical solution. Such a model is capable of increasing the intra-class similarity by suppressing the spectral variations locally while promoting the inter-class discriminability globally, leading to a restored HSI with more discriminative pixels. Experimental results on three benchmark datasets demonstrate the significant superiority of SP-DLRR over state-of-the-art methods, especially for the case with an extremely limited number of training pixels.

Hyperspectral and Multispectral Classification for Coastal Wetland Using Depthwise Feature Interaction Network

Jul 12, 2021

Abstract: The monitoring of coastal wetlands is of great importance to the protection of marine and terrestrial ecosystems. However, due to the complex environment, severe vegetation mixture, and difficulty of access, it is impossible to accurately classify coastal wetlands and identify their species with traditional classifiers. Despite the integration of multisource remote sensing data for performance enhancement, there are still challenges in acquiring and exploiting the complementary merits of multisource data. In this paper, the Depthwise Feature Interaction Network (DFINet) is proposed for wetland classification. A depthwise cross attention module is designed to extract self-correlation and cross-correlation from multisource feature pairs. In this way, meaningful complementary information is emphasized for classification. DFINet is optimized by coordinating consistency loss, discrimination loss, and classification loss. Accordingly, DFINet reaches the standard solution-space under the regularity of loss functions, while the spatial consistency and feature discrimination are preserved. Comprehensive experimental results on two hyperspectral and multispectral wetland datasets demonstrate that the proposed DFINet outperforms other competitive methods in terms of overall accuracy.

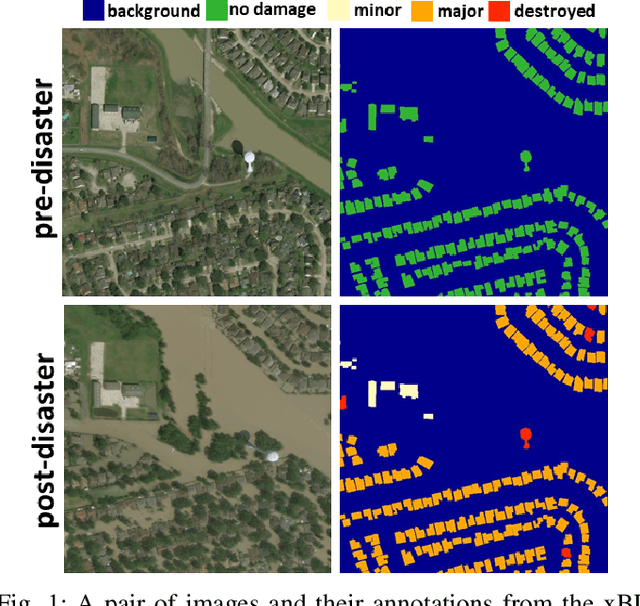

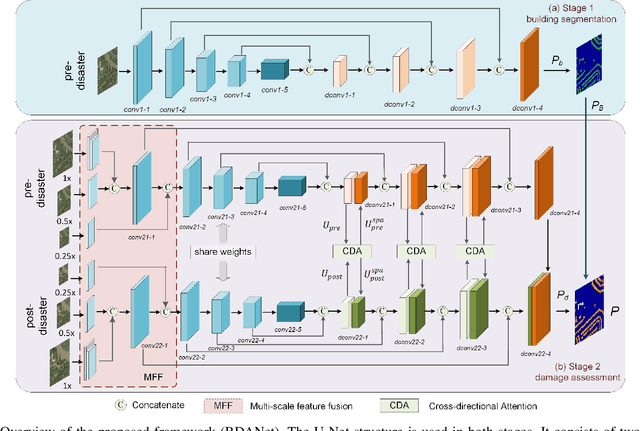

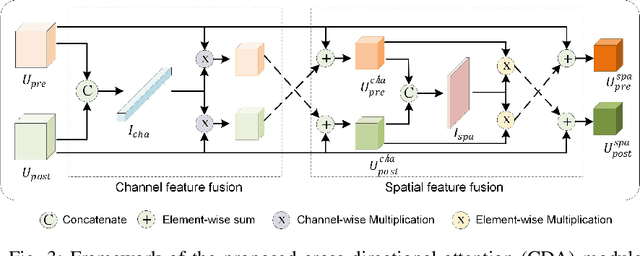

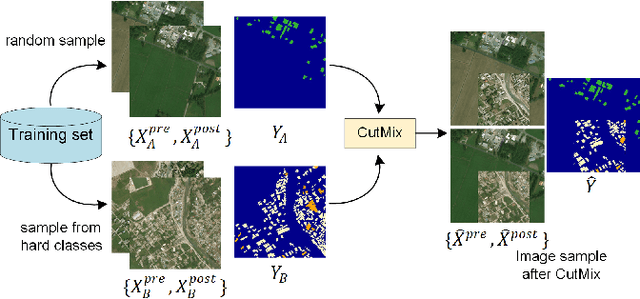

BDANet: Multiscale Convolutional Neural Network with Cross-directional Attention for Building Damage Assessment from Satellite Images

May 16, 2021

Abstract: Fast and effective responses are required when a natural disaster (e.g., an earthquake or hurricane) strikes. Building damage assessment from satellite imagery is critical before relief efforts are deployed. With a pair of pre- and post-disaster satellite images, building damage assessment aims at predicting the extent of damage to buildings. With their powerful feature representation ability, deep neural networks have been successfully applied to building damage assessment. Most existing works simply concatenate pre- and post-disaster images as the input of a deep neural network without considering their correlations. In this paper, we propose a novel two-stage convolutional neural network for Building Damage Assessment, called BDANet. In the first stage, a U-Net is used to extract the locations of buildings. Then the network weights from the first stage are shared in the second stage for building damage assessment. In the second stage, a two-branch multi-scale U-Net is employed as the backbone, where pre- and post-disaster images are fed into the network separately. A cross-directional attention module is proposed to explore the correlations between pre- and post-disaster images. Moreover, CutMix data augmentation is exploited to tackle the challenge of difficult classes. The proposed method achieves state-of-the-art performance on a large-scale dataset, xBD. The code is available at https://github.com/ShaneShen/BDANet-Building-Damage-Assessment.
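The CutMix augmentation mentioned in this abstract can be illustrated with a minimal NumPy sketch. This is a generic version of the idea (paste a random rectangle from one sample into another, together with the matching label region), not the BDANet implementation; the function name `cutmix_pair`, the `max_fraction` parameter, and the box-sampling rule are assumptions made for illustration only.

```python
import numpy as np

def cutmix_pair(image_a, mask_a, image_b, mask_b, rng, max_fraction=0.5):
    """Paste a random rectangle of sample B (image and label mask) into sample A.

    Hypothetical helper: images are (H, W) or (H, W, C) arrays, masks are (H, W).
    """
    h, w = mask_a.shape
    # Sample a box whose sides are between 1 pixel and max_fraction of each side.
    box_h = int(rng.integers(1, max(2, int(h * max_fraction) + 1)))
    box_w = int(rng.integers(1, max(2, int(w * max_fraction) + 1)))
    top = int(rng.integers(0, h - box_h + 1))
    left = int(rng.integers(0, w - box_w + 1))

    mixed_image = image_a.copy()
    mixed_mask = mask_a.copy()
    # Copy the same region from B for both the pixels and the labels.
    mixed_image[top:top + box_h, left:left + box_w] = image_b[top:top + box_h, left:left + box_w]
    mixed_mask[top:top + box_h, left:left + box_w] = mask_b[top:top + box_h, left:left + box_w]
    return mixed_image, mixed_mask, (top, left, box_h, box_w)
```

For a dense prediction task such as damage mapping, the label mask is pasted along with the pixels so that every pixel keeps its own label; how samples containing difficult classes are chosen for pasting is left out of this sketch.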

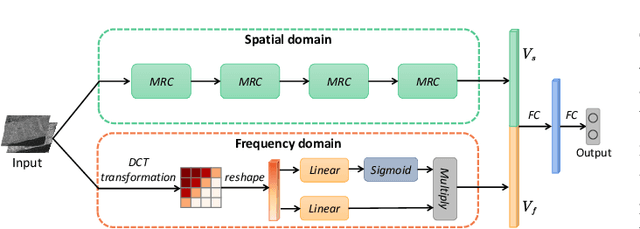

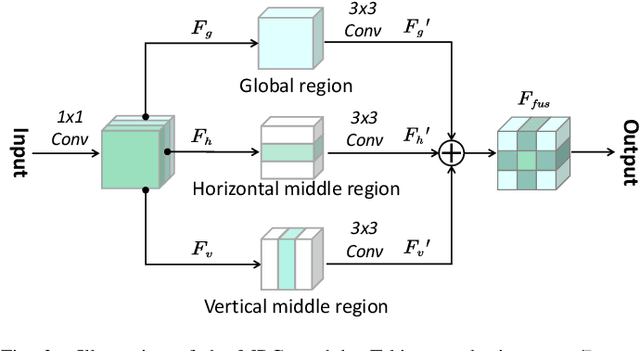

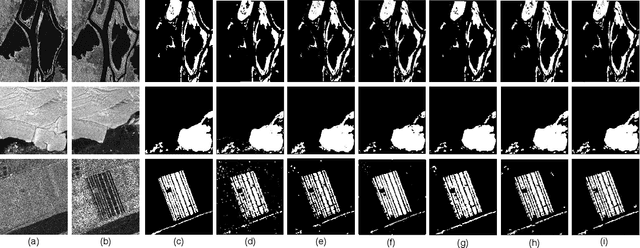

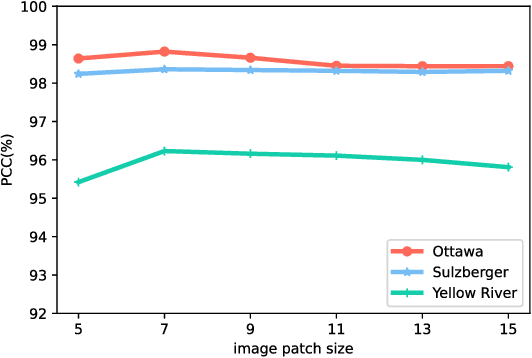

Change Detection in Synthetic Aperture Radar Images Using a Dual-Domain Network

Apr 15, 2021

Abstract: Change detection from synthetic aperture radar (SAR) imagery is a critical yet challenging task. Existing methods mainly focus on feature extraction in the spatial domain, and little attention has been paid to the frequency domain. Furthermore, in patch-wise feature analysis, some noisy features in the marginal region may be introduced. To tackle the above two challenges, we propose a Dual-Domain Network. Specifically, we take features from the discrete cosine transform (DCT) domain into consideration, and the reshaped DCT coefficients are integrated into the proposed model as the frequency domain branch. Feature representations from both the frequency and spatial domains are exploited to alleviate the speckle noise. In addition, we further propose a multi-region convolution module, which emphasizes the central region of each patch. The contextual information and central region features are modeled adaptively. The experimental results on three SAR datasets demonstrate the effectiveness of the proposed model. Our code is available at https://github.com/summitgao/SAR_CD_DDNet.
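As a rough illustration of what "reshaped DCT coefficients" of a patch could look like, the sketch below computes an orthonormal 2-D DCT-II with plain NumPy and flattens the result into a feature vector. The helper names `dct_matrix` and `patch_dct_features` are hypothetical, and the exact transform, normalization, and reshaping used in the paper are not stated in the abstract.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II basis matrix of size n x n (rows are basis vectors)."""
    k = np.arange(n)[:, None]   # frequency index
    i = np.arange(n)[None, :]   # sample index
    mat = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    mat[0, :] = np.sqrt(1.0 / n)  # DC row uses a different scale for orthonormality
    return mat

def patch_dct_features(patch):
    """Apply a separable 2-D DCT to a 2-D patch and flatten the coefficients."""
    rows, cols = patch.shape
    coeffs = dct_matrix(rows) @ patch @ dct_matrix(cols).T
    return coeffs.reshape(-1)
```

With this normalization, a constant patch maps to a single non-zero (DC) coefficient, which is one way to see that smooth regions are represented compactly in the DCT domain.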

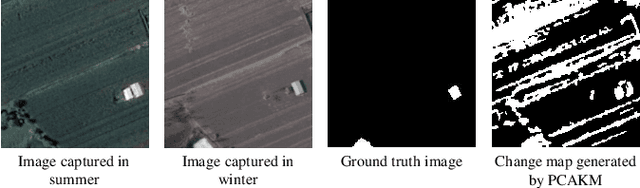

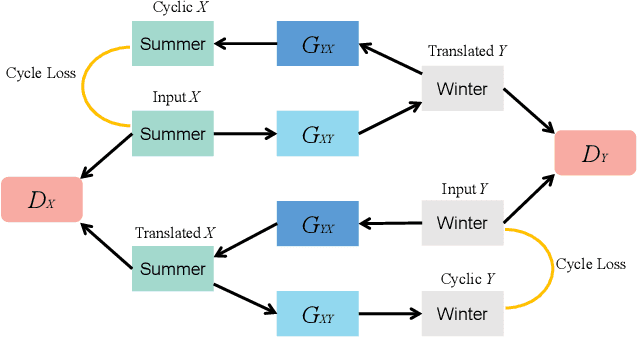

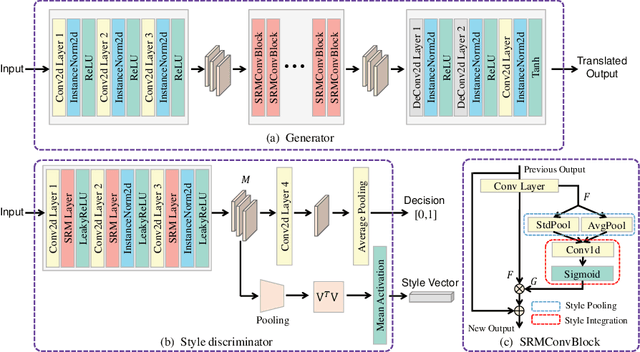

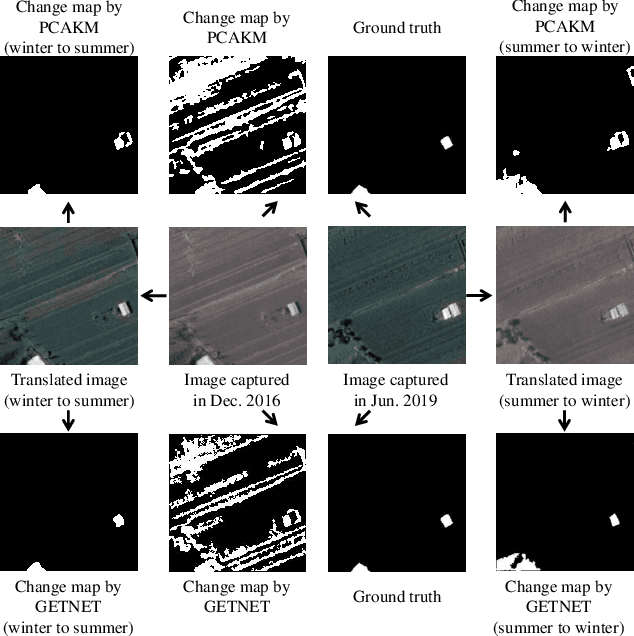

Remote Sensing Image Translation via Style-Based Recalibration Module and Improved Style Discriminator

Mar 29, 2021

Abstract: Existing remote sensing change detection methods are heavily affected by seasonal variation. Since vegetation colors differ between winter and summer, such variations tend to be falsely detected as changes. In this letter, we propose an image translation method to solve the problem. A style-based recalibration module is introduced to capture seasonal features effectively. Then, a new style discriminator is designed to improve the translation performance. The discriminator can not only produce a decision for the fake or real sample, but also return a style vector according to the channel-wise correlations. Extensive experiments are conducted on a season-varying dataset. The experimental results show that the proposed method can effectively perform image translation, thereby consistently improving the season-varying image change detection performance. Our code and data are available at https://github.com/summitgao/RSIT_SRM_ISD.

More Diverse Means Better: Multimodal Deep Learning Meets Remote Sensing Imagery Classification

Aug 12, 2020

Abstract: Classification and identification of the materials lying over or beneath the Earth's surface have long been a fundamental but challenging research topic in geoscience and remote sensing (RS) and have garnered growing attention owing to the recent advancements of deep learning techniques. Although deep networks have been successfully applied in single-modality-dominated classification tasks, their performance inevitably meets a bottleneck in complex scenes that need to be finely classified, due to the limitation of information diversity. In this work, we provide a baseline solution to the aforementioned difficulty by developing a general multimodal deep learning (MDL) framework. In particular, we also investigate a special case of multi-modality learning (MML), namely cross-modality learning (CML), which exists widely in RS image classification applications. By focusing on "what", "where", and "how" to fuse, we show different fusion strategies as well as how to train deep networks and build the network architecture. Specifically, five fusion architectures are introduced and developed, and are further unified in our MDL framework. More significantly, our framework is not limited to pixel-wise classification tasks but is also applicable to spatial information modeling with convolutional neural networks (CNNs). To validate the effectiveness and superiority of the MDL framework, extensive experiments related to the settings of MML and CML are conducted on two different multimodal RS datasets. Furthermore, the code and datasets will be available at https://github.com/danfenghong/IEEE_TGRS_MDL-RS, contributing to the RS community.

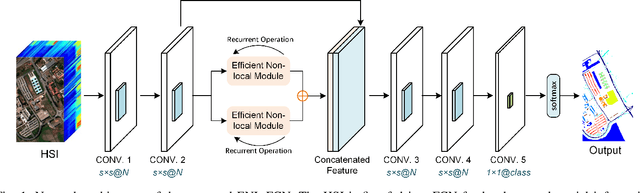

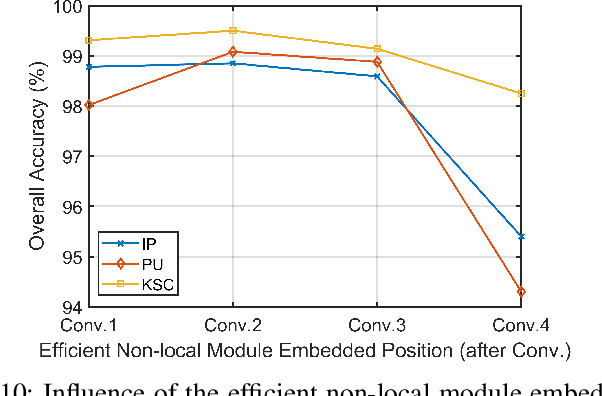

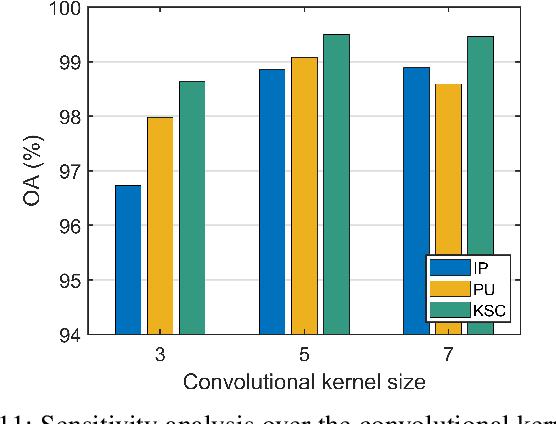

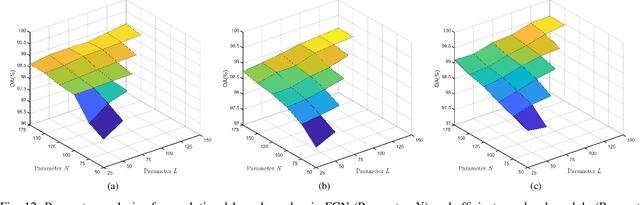

Efficient Deep Learning of Non-local Features for Hyperspectral Image Classification

Aug 02, 2020

Abstract: Deep learning based methods, such as the Convolutional Neural Network (CNN), have demonstrated their efficiency in hyperspectral image (HSI) classification. These methods can automatically learn spectral-spatial discriminative features within local patches. However, each pixel in an HSI is related not only to its nearby pixels but also to pixels far away from it. Therefore, to incorporate the long-range contextual information, a deep fully convolutional network (FCN) with an efficient non-local module, named ENL-FCN, is proposed for HSI classification. In the proposed framework, a deep FCN takes an entire HSI as input and extracts spectral-spatial information in a local receptive field. The efficient non-local module is embedded in the network as a learning unit to capture the long-range contextual information. Unlike traditional non-local neural networks, the long-range contextual information is extracted along a specially designed criss-cross path for computational efficiency. Furthermore, by using a recurrent operation, each pixel's response is aggregated from all pixels of the HSI. The benefits of our proposed ENL-FCN are threefold: 1) the long-range contextual information is incorporated effectively, 2) the efficient module can be freely embedded in a deep neural network in a plug-and-play fashion, and 3) it has far fewer learnable parameters and requires fewer computational resources. The experiments conducted on three popular HSI datasets demonstrate that the proposed method achieves state-of-the-art classification performance with lower computational cost in comparison with several leading deep neural networks for HSI.
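The criss-cross path and the recurrent operation described in this abstract can be sketched with a toy NumPy example. It is a simplified illustration, not the ENL-FCN module: there are no learned query, key, or value projections, the features themselves play all three roles, and the function name `criss_cross_attention` is an assumption. Each pixel attends only to pixels in its own row and column; applying the operation twice lets information from any pixel reach every other pixel.

```python
import numpy as np

def criss_cross_attention(feat):
    """One pass of softmax attention restricted to each pixel's row and column.

    feat: float array of shape (H, W, C). Toy version without learned projections.
    """
    h, w, c = feat.shape
    out = np.empty_like(feat)
    for i in range(h):
        for j in range(w):
            # Keys: all pixels in row i, plus column j without the pixel itself.
            column = np.delete(feat[:, j, :], i, axis=0)
            keys = np.concatenate([feat[i, :, :], column], axis=0)  # (W + H - 1, C)
            scores = keys @ feat[i, j] / np.sqrt(c)
            weights = np.exp(scores - scores.max())  # numerically stable softmax
            weights /= weights.sum()
            out[i, j] = weights @ keys
    return out

# An impulse at (0, 0) cannot reach (2, 2) in one pass, but does after two.
feat = np.zeros((4, 4, 1))
feat[0, 0, 0] = 1.0
once = criss_cross_attention(feat)
twice = criss_cross_attention(once)
```

Each pixel here compares against H + W - 1 positions rather than all H x W positions of a full non-local block, which is the source of the efficiency gain the abstract refers to.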

Vehicle Detection of Multi-source Remote Sensing Data Using Active Fine-tuning Network

Jul 16, 2020

Abstract: Vehicle detection in remote sensing images has attracted increasing interest in recent years. However, its detection ability is limited due to the lack of well-annotated samples, especially in densely crowded scenes. Furthermore, since a variety of remotely sensed data sources are available, efficient exploitation of useful information from multi-source data for better vehicle detection is challenging. To solve the above issues, a multi-source active fine-tuning vehicle detection (Ms-AFt) framework is proposed, which integrates transfer learning, segmentation, and active classification into a unified framework for auto-labeling and detection. The proposed Ms-AFt employs a fine-tuning network to first generate a vehicle training set from an unlabeled dataset. To cope with the diversity of vehicle categories, a multi-source based segmentation branch is then designed to construct additional candidate object sets. The separation of high-quality vehicles is realized by a designed attentive classification network. Finally, all three branches are combined to achieve vehicle detection. Extensive experimental results conducted on two open ISPRS benchmark datasets, namely the Vaihingen village and Potsdam city datasets, demonstrate the superiority and effectiveness of the proposed Ms-AFt for vehicle detection. In addition, the generalization ability of Ms-AFt in dense remote sensing scenes is further verified on stereo aerial imagery of a large camping site.

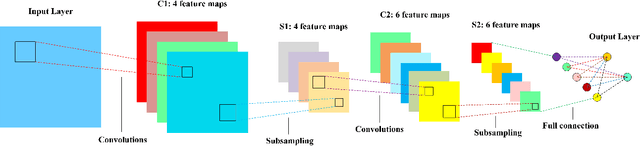

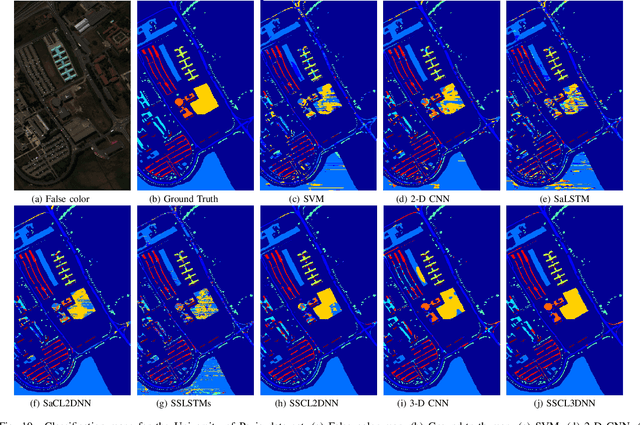

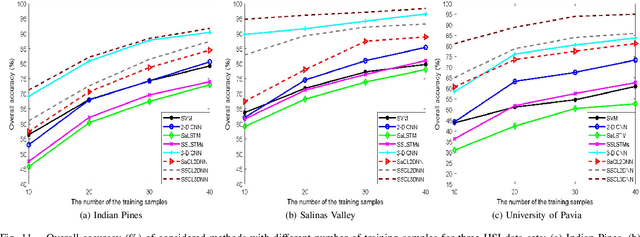

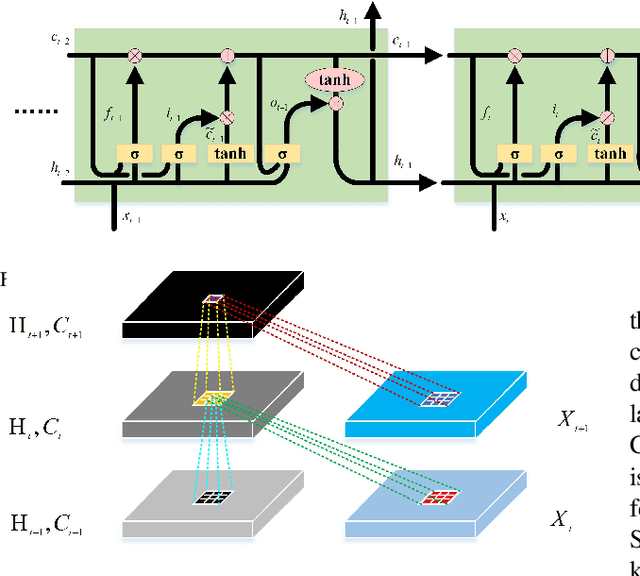

Feature Extraction and Classification Based on Spatial-Spectral ConvLSTM Neural Network for Hyperspectral Images

May 09, 2019

Abstract: In recent years, deep learning has made great advances in hyperspectral image (HSI) classification. Particularly, Long Short-Term Memory (LSTM), as a special deep learning structure, has shown great ability in modeling long-term dependencies in the time dimension of video or the spectral dimension of HSIs. However, the loss of spatial information makes it quite difficult to obtain better performance. In order to address this problem, two novel deep models are proposed to extract more discriminative spatial-spectral features by exploiting the Convolutional LSTM (ConvLSTM) for the first time. By taking the data patch in a local sliding window as the input of each memory cell band by band, the 2-D extended architecture of LSTM is considered for building the spatial-spectral ConvLSTM 2-D Neural Network (SSCL2DNN) to model long-range dependencies in the spectral domain. To take advantage of spatial and spectral information more effectively for extracting a more discriminative spatial-spectral feature representation, the spatial-spectral ConvLSTM 3-D Neural Network (SSCL3DNN) is further proposed by extending LSTM to a 3-D version. The experiments, conducted on three commonly used HSI data sets, demonstrate that the proposed deep models have certain competitive advantages and can provide better classification performance than other state-of-the-art approaches.

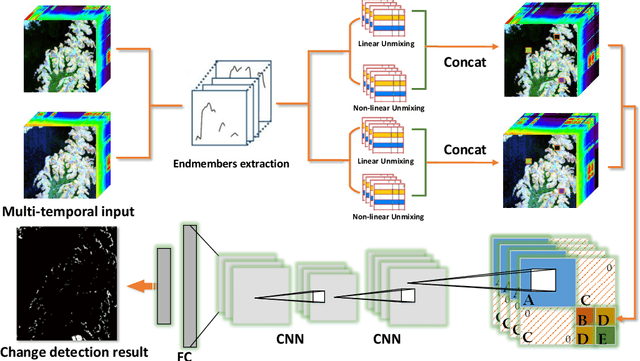

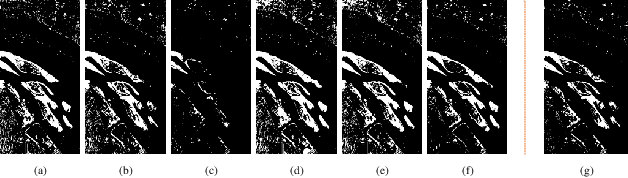

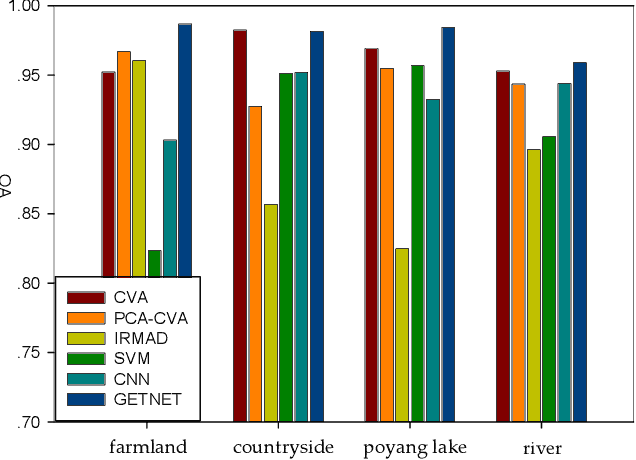

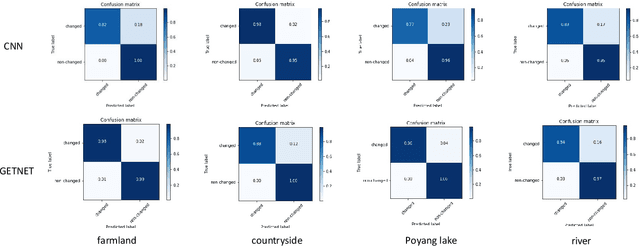

GETNET: A General End-to-end Two-dimensional CNN Framework for Hyperspectral Image Change Detection

May 05, 2019

Abstract: Change detection (CD) is an important application of remote sensing, which provides timely change information about the Earth's surface at large scale. With the emergence of hyperspectral imagery, CD technology has been greatly promoted, as hyperspectral data with high spectral resolution are capable of detecting finer changes than traditional multispectral imagery. Nevertheless, the high dimension of hyperspectral data makes it difficult to implement traditional CD algorithms. Besides, endmember abundance information at the subpixel level is often not fully utilized. In order to better handle the high-dimension problem and explore abundance information, this paper presents a General End-to-end Two-dimensional CNN (GETNET) framework for hyperspectral image change detection (HSI-CD). The main contributions of this work are threefold: 1) a mixed-affinity matrix that integrates subpixel representation is introduced to mine more cross-channel gradient features and fuse multi-source information; 2) a 2-D CNN is designed to learn the discriminative features effectively from multi-source data at a higher level and enhance the generalization ability of the proposed CD algorithm; 3) a new HSI-CD data set is designed for the objective comparison of different methods. Experimental results on real hyperspectral data sets demonstrate that the proposed method outperforms most state-of-the-art methods.
