Pieter Abbeel

UC Berkeley

Learning Predictive Representations for Deformable Objects Using Contrastive Estimation

Mar 11, 2020

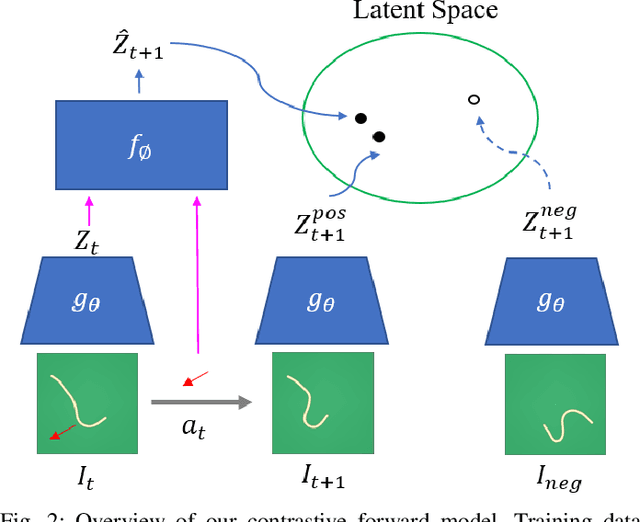

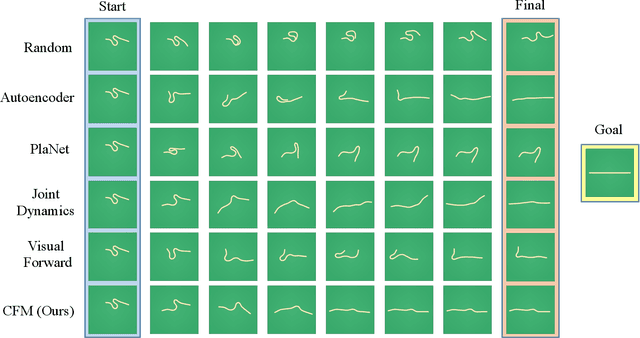

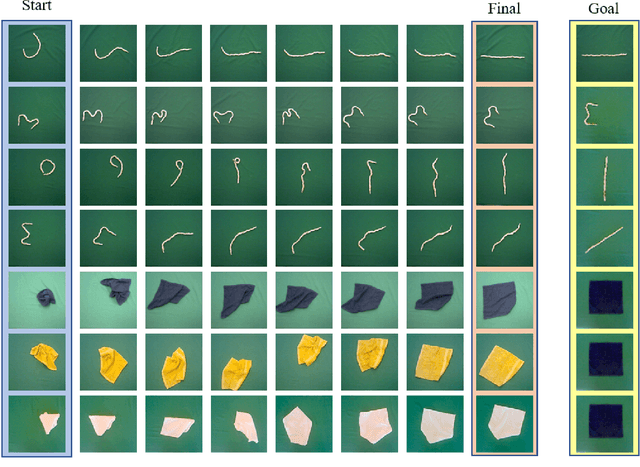

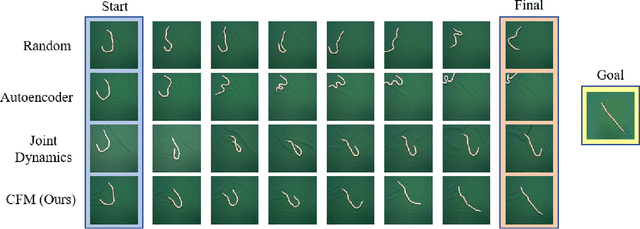

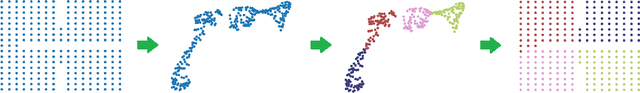

Abstract: Using visual model-based learning for deformable object manipulation is challenging due to difficulties in learning plannable visual representations along with complex dynamic models. In this work, we propose a new learning framework that jointly optimizes both the visual representation model and the dynamics model using contrastive estimation. Using simulation data collected by randomly perturbing deformable objects on a table, we learn latent dynamics models for these objects in an offline fashion. Then, using the learned models, we use simple model-based planning to solve challenging deformable object manipulation tasks such as spreading ropes and cloths. Experimentally, we show substantial improvements in performance over standard model-based learning techniques across our rope and cloth manipulation suite. Finally, we transfer our visual manipulation policies trained on data purely collected in simulation to a real PR2 robot through domain randomization.
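As a rough illustration of the kind of contrastive objective the abstract refers to, the sketch below computes an InfoNCE-style loss in plain Python. The function names, the negative squared distance used as the similarity score, and the toy latent vectors are assumptions made for this example; they are not taken from the paper's code.

```python
import math


def similarity(pred, target):
    # Assumed score: negative squared Euclidean distance between latents.
    return -sum((p - t) ** 2 for p, t in zip(pred, target))


def info_nce_loss(scores):
    # scores[i][j] scores predicted latent i against candidate j.
    # The positive for row i sits at column i; other columns are negatives.
    total = 0.0
    for i, row in enumerate(scores):
        m = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_z = m + math.log(sum(math.exp(s - m) for s in row))
        total += log_z - row[i]
    return total / len(scores)


def contrastive_loss(pred_latents, next_latents):
    # pred_latents[i] stands for a dynamics model's prediction of next_latents[i].
    scores = [[similarity(p, z) for z in next_latents] for p in pred_latents]
    return info_nce_loss(scores)
```

Minimizing a loss of this shape pushes each predicted latent toward its true successor and away from the other latents in the batch, which is one way an encoder and a dynamics model could be trained jointly.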

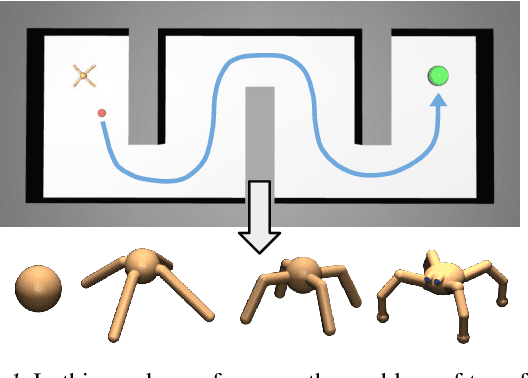

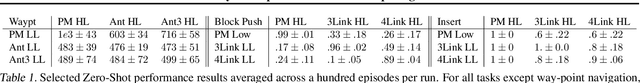

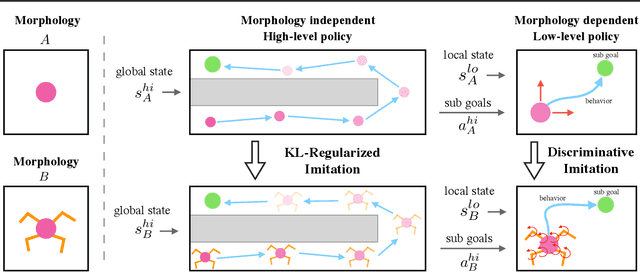

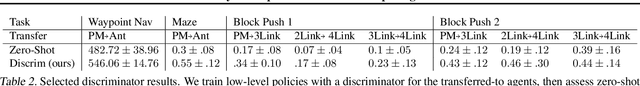

Hierarchically Decoupled Imitation for Morphological Transfer

Mar 03, 2020

Abstract: Learning long-range behaviors on complex high-dimensional agents is a fundamental problem in robot learning. For such tasks, we argue that transferring learned information from a morphologically simpler agent can massively improve the sample efficiency of a more complex one. To this end, we propose a hierarchical decoupling of policies into two parts: an independently learned low-level policy and a transferable high-level policy. To remedy poor transfer performance due to mismatch in morphologies, we contribute two key ideas. First, we show that incentivizing a complex agent's low-level to imitate a simpler agent's low-level significantly improves zero-shot high-level transfer. Second, we show that KL-regularized training of the high level stabilizes learning and prevents mode-collapse. Finally, on a suite of publicly released navigation and manipulation environments, we demonstrate the applicability of hierarchical transfer on long-range tasks across morphologies. Our code and videos can be found at https://sites.google.com/berkeley.edu/morphology-transfer.

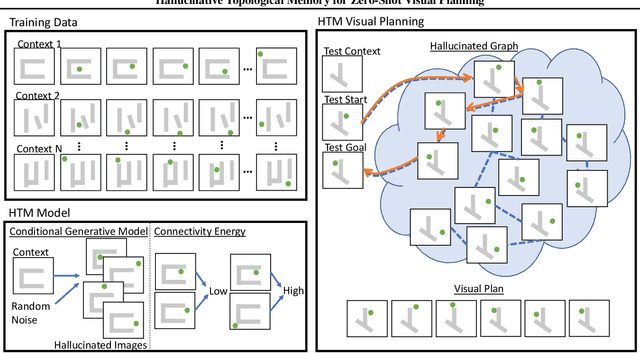

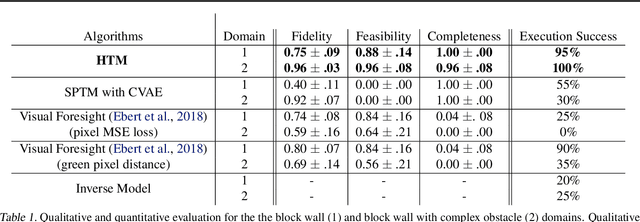

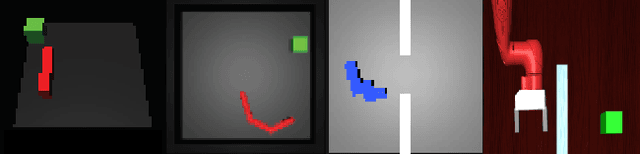

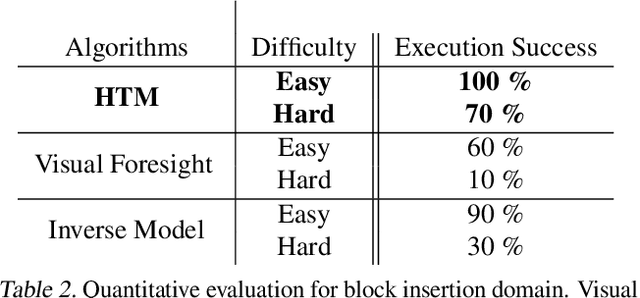

Hallucinative Topological Memory for Zero-Shot Visual Planning

Feb 27, 2020

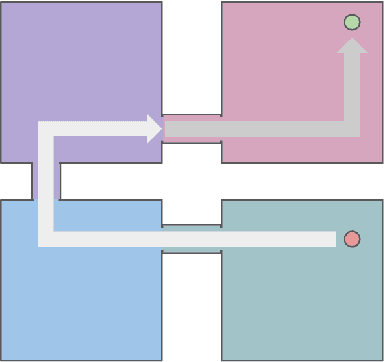

Abstract: In visual planning (VP), an agent learns to plan goal-directed behavior from observations of a dynamical system obtained offline, e.g., images obtained from self-supervised robot interaction. Most previous works on VP approached the problem by planning in a learned latent space, resulting in low-quality visual plans and difficult training algorithms. Here, instead, we propose a simple VP method that plans directly in image space and displays competitive performance. We build on the semi-parametric topological memory (SPTM) method: image samples are treated as nodes in a graph, the graph connectivity is learned from image sequence data, and planning can be performed using conventional graph search methods. We propose two modifications to SPTM. First, we train an energy-based graph connectivity function using contrastive predictive coding that admits stable training. Second, to allow zero-shot planning in new domains, we learn a conditional VAE model that generates images given a context of the domain, and use these hallucinated samples for building the connectivity graph and planning. We show that this simple approach significantly outperforms the state-of-the-art VP methods, in terms of both plan interpretability and success rate when using the plan to guide a trajectory-following controller. Interestingly, our method can pick up non-trivial visual properties of objects, such as their geometry, and account for them in the plans.
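The graph-based planning step described in this abstract can be sketched with a conventional shortest-path search. In the example below, `score(i, j)` is a hypothetical stand-in for a learned connectivity function returning a value in [0, 1]; the threshold and the edge cost `1 - score` are illustrative choices, not details from the paper.

```python
import heapq


def plan_path(num_nodes, score, start, goal, threshold=0.5):
    # Dijkstra over nodes 0..num_nodes-1 (each node stands for one image sample).
    # An edge i -> j exists when score(i, j) >= threshold; its cost is 1 - score.
    best = {start: 0.0}
    parent = {}
    frontier = [(0.0, start)]
    while frontier:
        cost, node = heapq.heappop(frontier)
        if node == goal:
            # Walk parent links back to the start to recover the plan.
            path = [node]
            while path[-1] != start:
                path.append(parent[path[-1]])
            return path[::-1]
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry
        for nxt in range(num_nodes):
            s = score(node, nxt)
            if nxt == node or s < threshold:
                continue
            new_cost = cost + (1.0 - s)
            if new_cost < best.get(nxt, float("inf")):
                best[nxt] = new_cost
                parent[nxt] = node
                heapq.heappush(frontier, (new_cost, nxt))
    return None  # goal not reachable through sufficiently confident edges


# Toy connectivity table standing in for the learned function.
table = {(0, 1): 0.9, (1, 2): 0.9, (0, 2): 0.6, (2, 3): 0.9}
plan = plan_path(4, lambda i, j: table.get((i, j), 0.0), start=0, goal=3)
# plan == [0, 1, 2, 3]: the two confident hops beat the weaker direct edge 0 -> 2.
```

The returned node sequence plays the role of the visual plan: a chain of images that a trajectory-following controller could be asked to reach one after another.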

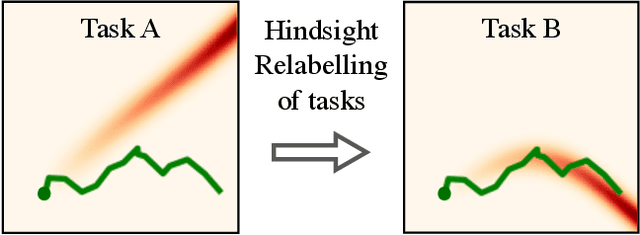

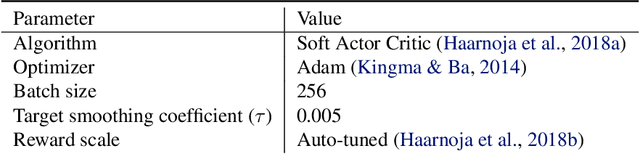

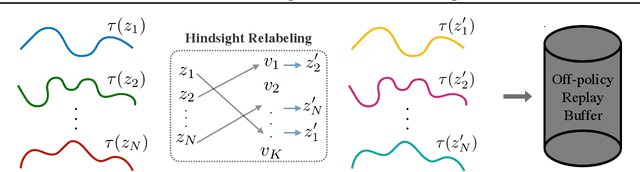

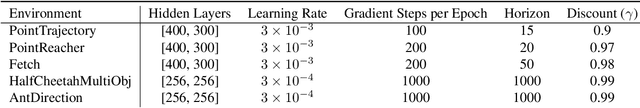

Generalized Hindsight for Reinforcement Learning

Feb 26, 2020

Abstract: One of the key reasons for the high sample complexity in reinforcement learning (RL) is the inability to transfer knowledge from one task to another. In standard multi-task RL settings, low-reward data collected while trying to solve one task provides little to no signal for solving that particular task and is hence effectively wasted. However, we argue that this data, which is uninformative for one task, is likely a rich source of information for other tasks. To leverage this insight and efficiently reuse data, we present Generalized Hindsight: an approximate inverse reinforcement learning technique for relabeling behaviors with the right tasks. Intuitively, given a behavior generated under one task, Generalized Hindsight returns a different task that the behavior is better suited for. Then, the behavior is relabeled with this new task before being used by an off-policy RL optimizer. Compared to standard relabeling techniques, Generalized Hindsight provides a substantially more efficient reuse of samples, which we empirically demonstrate on a suite of multi-task navigation and manipulation tasks. Videos and code can be accessed here: https://sites.google.com/view/generalized-hindsight.
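The relabeling idea can be illustrated with a deliberately simplified rule: among a set of candidate tasks, pick the one under which the observed behavior earns the highest return. This is a stand-in chosen for clarity, not the paper's approximate inverse RL procedure; the function names and the one-dimensional goal-reaching reward are assumptions for the example.

```python
def trajectory_return(trajectory, reward_fn, task):
    # Sum of per-step rewards for the trajectory, evaluated under `task`.
    return sum(reward_fn(state, action, task) for state, action in trajectory)


def relabel(trajectory, candidate_tasks, reward_fn):
    # Simplified relabeling rule: choose the candidate task with the highest return.
    return max(
        candidate_tasks,
        key=lambda task: trajectory_return(trajectory, reward_fn, task),
    )


def goal_reward(state, action, goal):
    # Hypothetical reward: negative distance to a goal position on a line.
    return -abs(state - goal)


# A behavior collected while aiming for goal 10 that only reached position 3.
trajectory = [(1, +1), (2, +1), (3, 0)]
new_task = relabel(trajectory, candidate_tasks=[0, 3, 10], reward_fn=goal_reward)
# new_task == 3: returns are -6, -3 and -24 for goals 0, 3 and 10 respectively.
```

The relabeled pair `(trajectory, new_task)` could then be stored in a replay buffer and consumed by an off-policy learner, which is the reuse the abstract describes.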

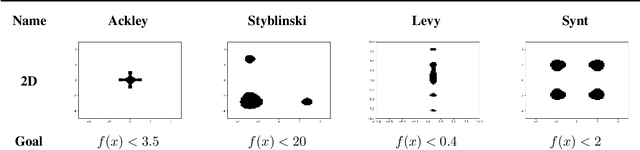

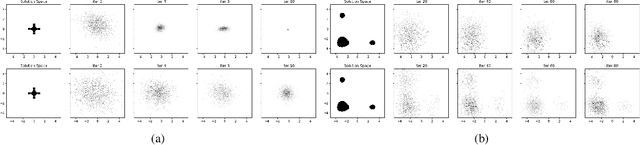

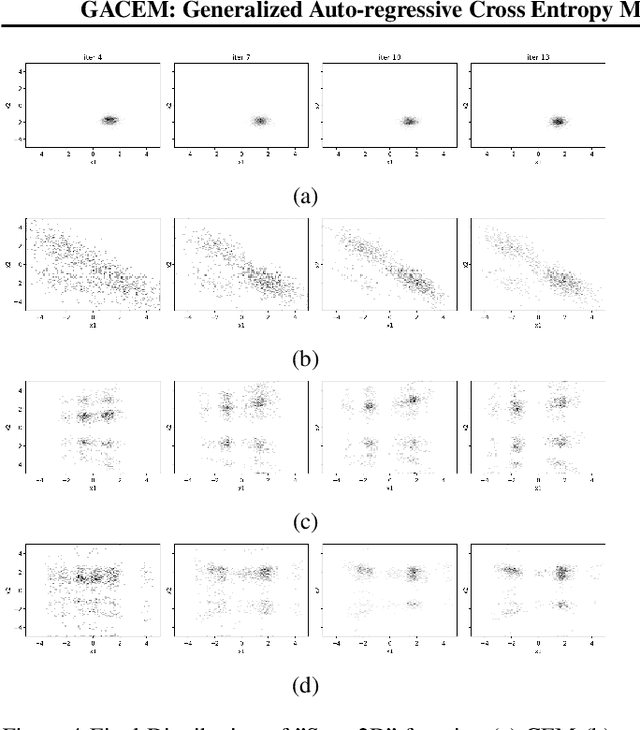

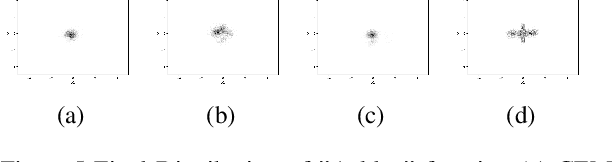

GACEM: Generalized Autoregressive Cross Entropy Method for Multi-Modal Black Box Constraint Satisfaction

Feb 17, 2020

Abstract: In this work we present a new method of black-box optimization and constraint satisfaction. Existing algorithms that have attempted to solve this problem are unable to consider multiple modes, and are not able to adapt to changes in environment dynamics. To address these issues, we developed a modified Cross-Entropy Method (CEM) that uses a masked auto-regressive neural network for modeling uniform distributions over the solution space. We train the model using maximum entropy policy gradient methods from Reinforcement Learning. Our algorithm is able to express complicated solution spaces, thus allowing it to track a variety of different solution regions. We empirically compare our algorithm with variations of CEM, including one with a Gaussian prior with fixed variance, and demonstrate better performance in terms of: number of diverse solutions, better mode discovery in multi-modal problems, and better sample efficiency in certain cases.
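For context, the textbook Cross-Entropy Method that this work modifies can be sketched in a few lines. The version below is a one-dimensional Gaussian CEM that refits both mean and standard deviation each round; it is a generic baseline written for illustration (function name, hyperparameters, and the quadratic test cost are all assumptions), not the GACEM algorithm or the exact baseline used in the paper.

```python
import random
import statistics


def cem_minimize(cost, mean, std, iterations=30, population=50,
                 elite_frac=0.2, seed=0):
    # Repeatedly sample from a Gaussian, keep the lowest-cost samples,
    # and refit the Gaussian to those elites.
    rng = random.Random(seed)
    n_elite = max(2, int(population * elite_frac))
    for _ in range(iterations):
        samples = [rng.gauss(mean, std) for _ in range(population)]
        elites = sorted(samples, key=cost)[:n_elite]
        mean = statistics.fmean(elites)
        # A small floor keeps the standard deviation strictly positive.
        std = statistics.pstdev(elites) + 1e-6
    return mean


# Minimize a simple quadratic whose optimum is at x = 3.
best_x = cem_minimize(lambda x: (x - 3.0) ** 2, mean=0.0, std=5.0)
```

Because the sampling distribution here is a single Gaussian, it can only concentrate on one region of the solution space at a time, which is the limitation the abstract attributes to existing methods on multi-modal problems.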

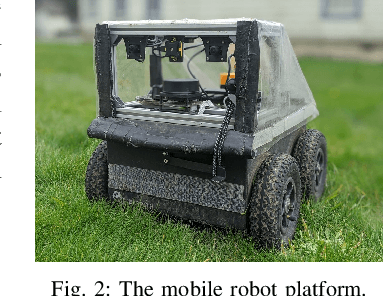

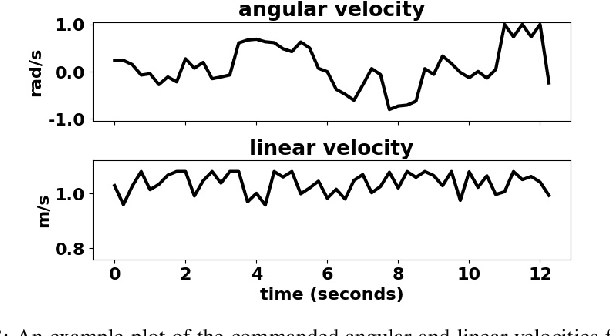

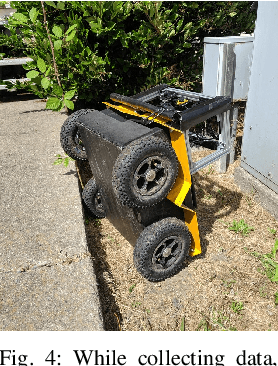

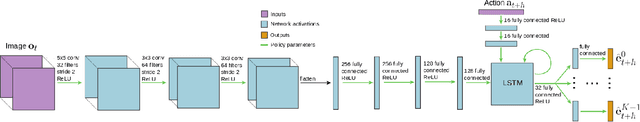

BADGR: An Autonomous Self-Supervised Learning-Based Navigation System

Feb 13, 2020

Abstract: Mobile robot navigation is typically regarded as a geometric problem, in which the robot's objective is to perceive the geometry of the environment in order to plan collision-free paths towards a desired goal. However, a purely geometric view of the world can be insufficient for many navigation problems. For example, a robot navigating based on geometry may avoid a field of tall grass because it believes it is untraversable, and will therefore fail to reach its desired goal. In this work, we investigate how to move beyond these purely geometric-based approaches using a method that learns about physical navigational affordances from experience. Our approach, which we call BADGR, is an end-to-end learning-based mobile robot navigation system that can be trained with self-supervised off-policy data gathered in real-world environments, without any simulation or human supervision. BADGR can navigate in real-world urban and off-road environments with geometrically distracting obstacles. It can also incorporate terrain preferences, generalize to novel environments, and continue to improve autonomously by gathering more data. Videos, code, and other supplemental material are available on our website https://sites.google.com/view/badgr

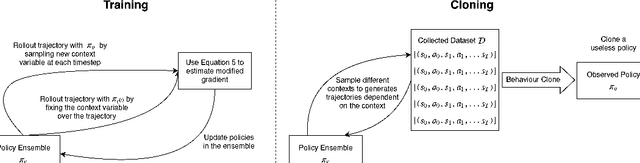

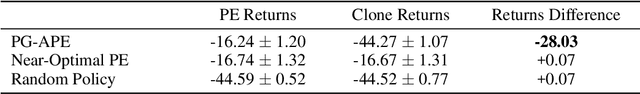

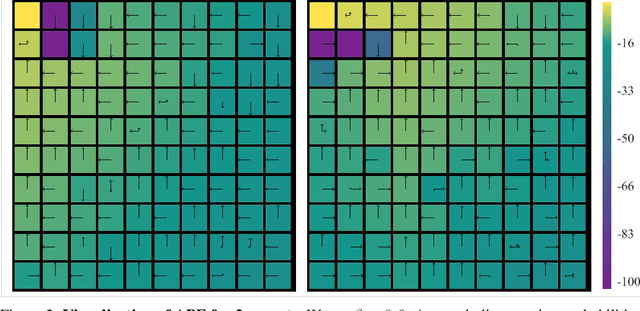

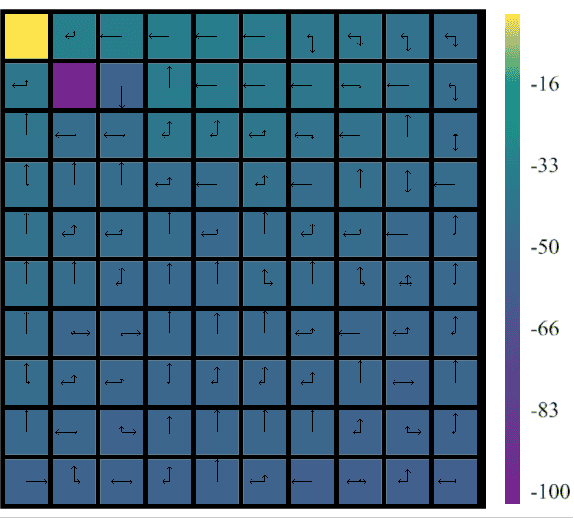

Preventing Imitation Learning with Adversarial Policy Ensembles

Jan 31, 2020

Abstract: Imitation learning can reproduce policies by observing experts, which poses a problem regarding policy privacy. Policies, such as human policies or policies on deployed robots, can all be cloned without consent from the owners. How can we protect against external observers cloning our proprietary policies? To answer this question we introduce a new reinforcement learning framework, where we train an ensemble of near-optimal policies, whose demonstrations are guaranteed to be useless for an external observer. We formulate this idea as a constrained optimization problem, where the objective is to improve proprietary policies, and at the same time deteriorate the virtual policy of an eventual external observer. We design a tractable algorithm to solve this new optimization problem by modifying the standard policy gradient algorithm. Our formulation can be interpreted through the lenses of confidentiality and adversarial behaviour, which enables a broader perspective of this work. We demonstrate the existence of "non-clonable" ensembles, providing a solution to the above optimization problem, which is calculated by our modified policy gradient algorithm. To our knowledge, this is the first work regarding the protection of policies in Reinforcement Learning.

Hierarchical Variational Imitation Learning of Control Programs

Dec 29, 2019

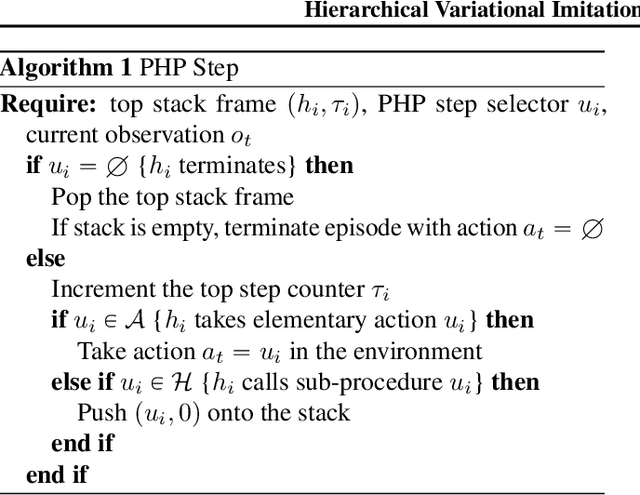

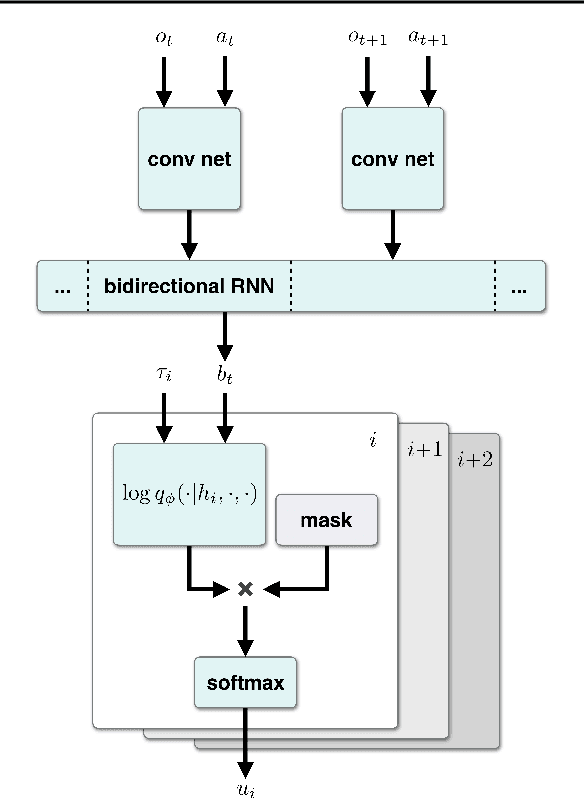

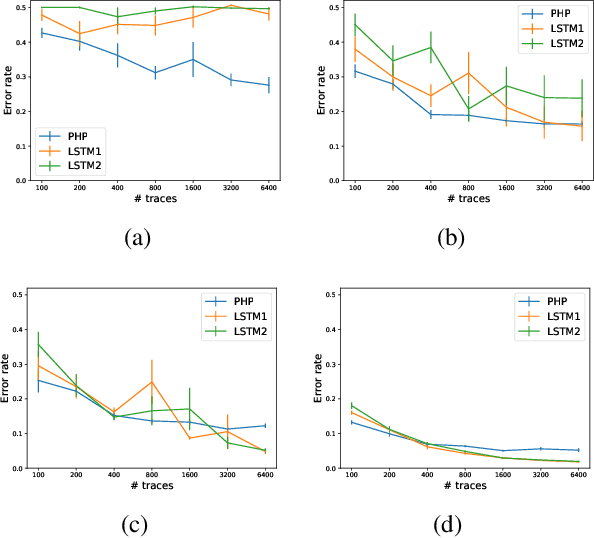

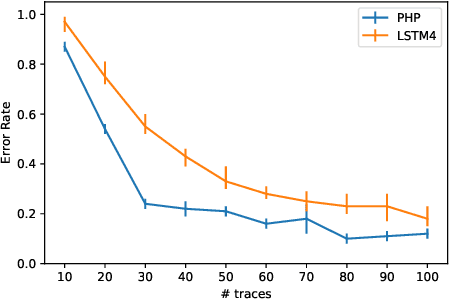

Abstract: Autonomous agents can learn by imitating teacher demonstrations of the intended behavior. Hierarchical control policies are ubiquitously useful for such learning, having the potential to break down structured tasks into simpler sub-tasks, thereby improving data efficiency and generalization. In this paper, we propose a variational inference method for imitation learning of a control policy represented by parametrized hierarchical procedures (PHP), a program-like structure in which procedures can invoke sub-procedures to perform sub-tasks. Our method discovers the hierarchical structure in a dataset of observation-action traces of teacher demonstrations, by learning an approximate posterior distribution over the latent sequence of procedure calls and terminations. Samples from this learned distribution then guide the training of the hierarchical control policy. We identify and demonstrate a novel benefit of variational inference in the context of hierarchical imitation learning: in decomposing the policy into simpler procedures, inference can leverage acausal information that is unused by other methods. Training PHP with variational inference outperforms LSTM baselines in terms of data efficiency and generalization, requiring less than half as much data to achieve a 24% error rate in executing the bubble sort algorithm, and to achieve no error in executing Karel programs.

Predictive Coding for Boosting Deep Reinforcement Learning with Sparse Rewards

Dec 21, 2019

Abstract: While recent progress in deep reinforcement learning has enabled robots to learn complex behaviors, tasks with long horizons and sparse rewards remain an ongoing challenge. In this work, we propose an effective reward shaping method through predictive coding to tackle sparse reward problems. By learning predictive representations offline and using these representations for reward shaping, we gain access to reward signals that understand the structure and dynamics of the environment. In particular, our method achieves better learning by providing reward signals that 1) understand environment dynamics, 2) emphasize the features most useful for learning, and 3) resist noise in learned representations through reward accumulation. We demonstrate the usefulness of this approach in different domains ranging from robotic manipulation to navigation, and we show that reward signals produced through predictive coding are as effective for learning as hand-crafted rewards.

AVID: Learning Multi-Stage Tasks via Pixel-Level Translation of Human Videos

Dec 10, 2019

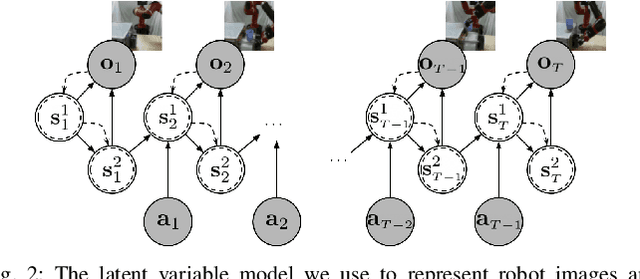

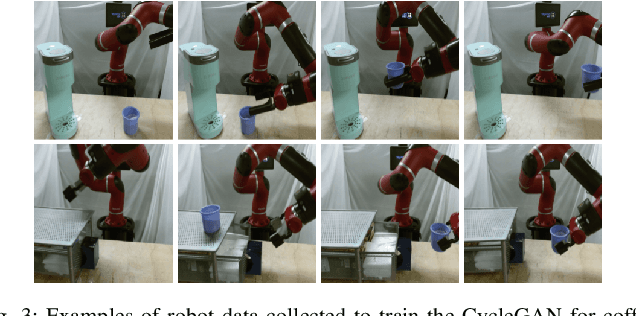

Abstract: Robotic reinforcement learning (RL) holds the promise of enabling robots to learn complex behaviors through experience. However, realizing this promise requires not only effective and scalable RL algorithms, but also mechanisms to reduce human burden in terms of defining the task and resetting the environment. In this paper, we study how these challenges can be alleviated with an automated robotic learning framework, in which multi-stage tasks are defined simply by providing videos of a human demonstrator and then learned autonomously by the robot from raw image observations. A central challenge in imitating human videos is the difference in morphology between the human and robot, which typically requires manual correspondence. We instead take an automated approach and perform pixel-level image translation via CycleGAN to convert the human demonstration into a video of a robot, which can then be used to construct a reward function for a model-based RL algorithm. The robot then learns the task one stage at a time, automatically learning how to reset each stage to retry it multiple times without human-provided resets. This makes the learning process largely automatic, from intuitive task specification via a video to automated training with minimal human intervention. We demonstrate that our approach is capable of learning complex tasks, such as operating a coffee machine, directly from raw image observations, requiring only 20 minutes to provide human demonstrations and about 180 minutes of robot interaction with the environment. A supplementary video depicting the experimental setup, learning process, and our method's final performance is available from https://sites.google.com/view/icra20avid
