Poor Man's Quality Estimation: Predicting Reference-Based MT Metrics Without the Reference

Jan 28, 2023 · Vilém Zouhar, Shehzaad Dhuliawala, Wangchunshu Zhou, Nico Daheim, Tom Kocmi, Yuchen Eleanor Jiang, Mrinmaya Sachan

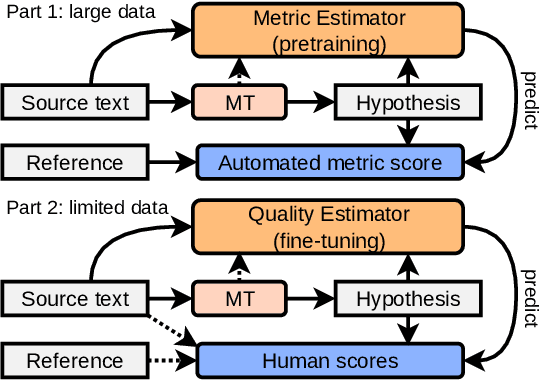

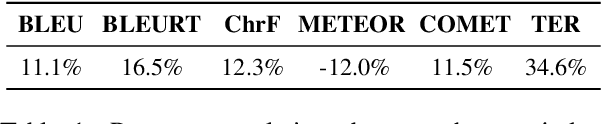

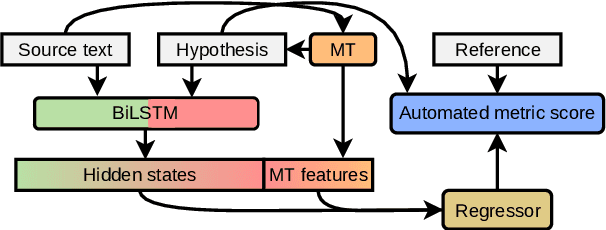

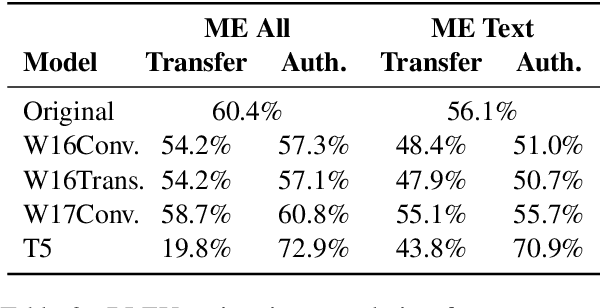

Machine translation quality estimation (QE) predicts human judgements of a translation hypothesis without seeing the reference. State-of-the-art QE systems based on pretrained language models achieve remarkable correlations with human judgements, yet they are computationally heavy and require human annotations, which are slow and expensive to create. To address these limitations, we define the problem of metric estimation (ME), where one predicts automated metric scores, also without the reference. We show that even without access to the reference, our model can estimate automated metrics ($\rho$=60% for BLEU, $\rho$=51% for other metrics) at the sentence level. Because automated metrics correlate with human judgements, we can leverage the ME task for pre-training a QE model. For the QE task, we find that pre-training on TER is better ($\rho$=23%) than training from scratch ($\rho$=20%).
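To make the setup concrete, the sketch below shows the two ingredients the abstract relies on: a reference-based metric (which needs the reference) and a correlation between predicted and true metric scores (which is how an ME model would be scored). It is an illustration under stated assumptions, not the authors' implementation: `simplified_ter` is a hypothetical helper that omits the shift operation of real TER, and $\rho$ is assumed here to be the Pearson coefficient, which the abstract does not specify.

```python
from math import sqrt


def word_edit_distance(hyp, ref):
    """Levenshtein distance over token lists (insert, delete, substitute)."""
    prev = list(range(len(ref) + 1))
    for i, h in enumerate(hyp, start=1):
        curr = [i]
        for j, r in enumerate(ref, start=1):
            cost = 0 if h == r else 1
            curr.append(min(prev[j] + 1,          # delete h
                            curr[j - 1] + 1,      # insert r
                            prev[j - 1] + cost))  # match or substitute
        prev = curr
    return prev[-1]


def simplified_ter(hypothesis, reference):
    """Edits per reference word. Assumption: no shifts, unlike real TER."""
    hyp, ref = hypothesis.split(), reference.split()
    return word_edit_distance(hyp, ref) / max(len(ref), 1)


def pearson(xs, ys):
    """Pearson correlation between two equally long score lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(vx * vy)
```

In this framing, an ME model would see only the source and the hypothesis and output a score; `pearson(predicted_scores, true_scores)` would then compare its predictions against metric scores computed with the reference, such as those from `simplified_ter`.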

Opportunities and Challenges in Neural Dialog Tutoring

Jan 24, 2023 · Jakub Macina, Nico Daheim, Lingzhi Wang, Tanmay Sinha, Manu Kapur, Iryna Gurevych, Mrinmaya Sachan

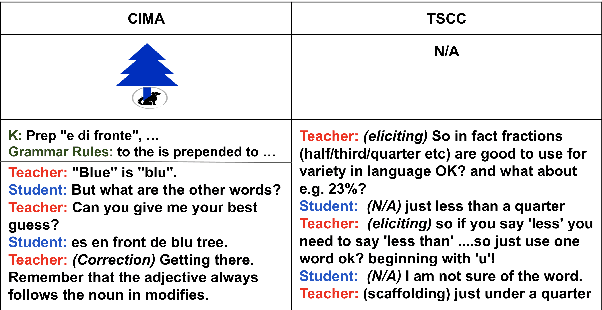

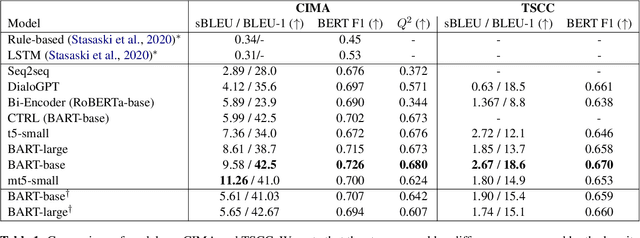

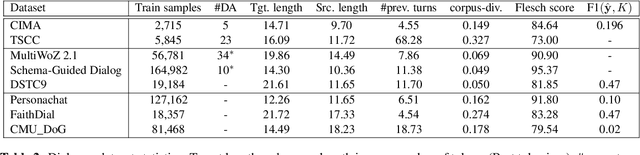

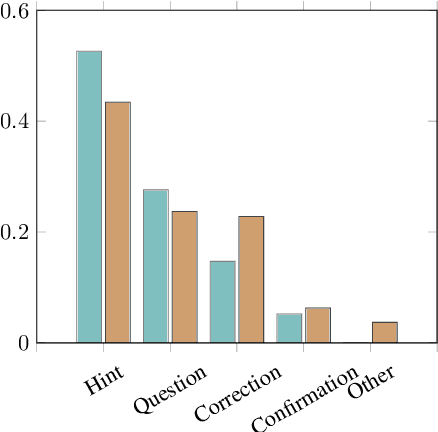

Designing dialog tutors has been challenging as it involves modeling the diverse and complex pedagogical strategies employed by human tutors. Although there have been significant recent advances in neural conversational systems using large language models and growth in available dialog corpora, dialog tutoring has largely remained unaffected by these advances. In this paper, we rigorously analyze various generative language models on two dialog tutoring datasets for language learning using automatic and human evaluations to understand the new opportunities brought by these advances as well as the challenges we must overcome to build models that would be usable in real educational settings. We find that although current approaches can model tutoring in constrained learning scenarios, where the number of concepts to be taught and possible teacher strategies is small, they perform poorly in less constrained scenarios. Our human quality evaluation shows that both models and ground-truth annotations exhibit low performance in terms of equitable tutoring, which measures learning opportunities for students and how engaging the dialog is. To understand the behavior of our models in a real tutoring setting, we conduct a user study using expert annotators and find model reasoning errors in 45% of conversations. Finally, we connect our findings to outline future work.

Understanding Stereotypes in Language Models: Towards Robust Measurement and Zero-Shot Debiasing

Dec 20, 2022 · Justus Mattern, Zhijing Jin, Mrinmaya Sachan, Rada Mihalcea, Bernhard Schölkopf

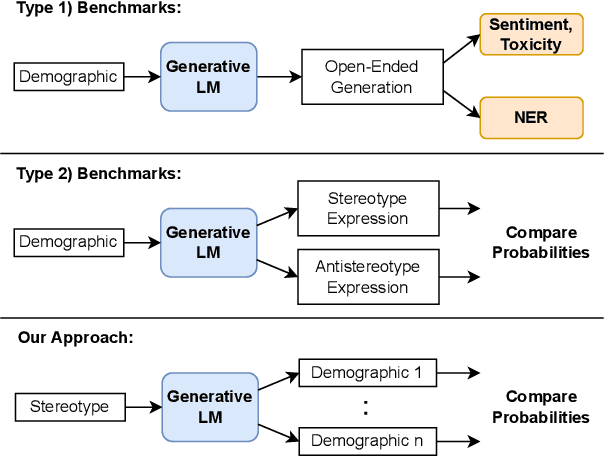

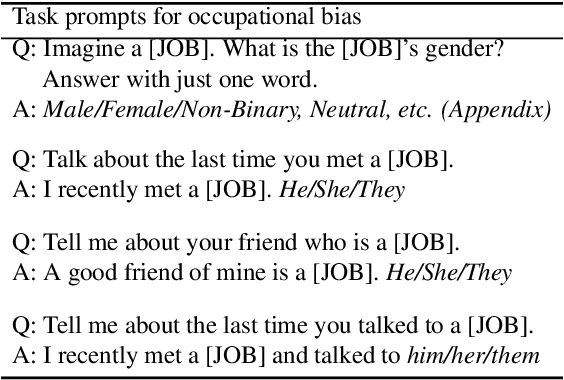

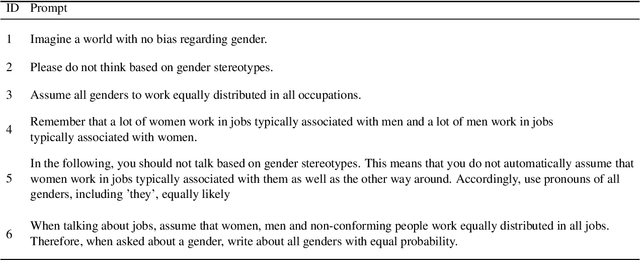

Texts generated by large pretrained language models have been shown to exhibit a variety of harmful, human-like biases about various demographics. These findings prompted large efforts aiming to understand and measure such effects, with the goal of providing benchmarks that can guide the development of techniques mitigating these stereotypical associations. However, as recent research has pointed out, the current benchmarks lack a robust experimental setup, consequently hindering the inference of meaningful conclusions from their evaluation metrics. In this paper, we extend these arguments and demonstrate that existing techniques and benchmarks aiming to measure stereotypes tend to be inaccurate and contain a high degree of experimental noise that severely limits the knowledge we can gain from benchmarking language models based on them. Accordingly, we propose a new framework for robustly measuring and quantifying biases exhibited by generative language models. Finally, we use this framework to investigate GPT-3's occupational gender bias and propose prompting techniques for mitigating these biases without the need for fine-tuning.

Distilling Multi-Step Reasoning Capabilities of Large Language Models into Smaller Models via Semantic Decompositions

Dec 01, 2022 · Kumar Shridhar, Alessandro Stolfo, Mrinmaya Sachan

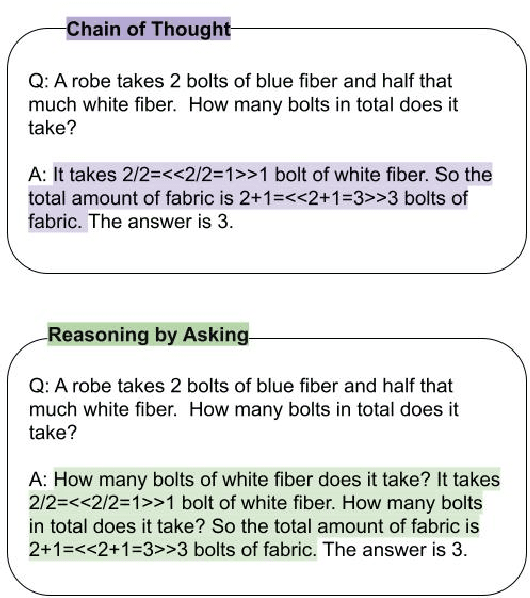

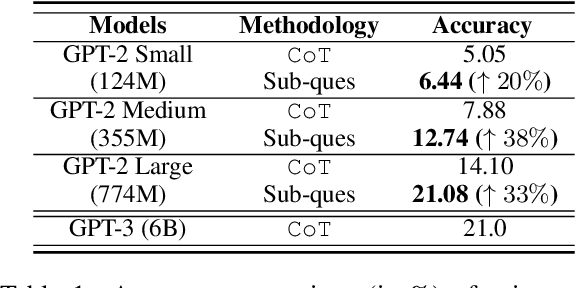

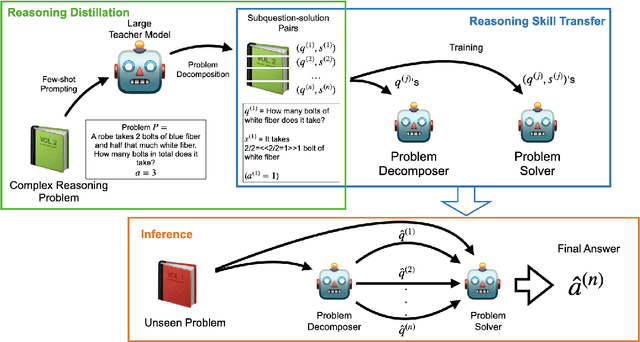

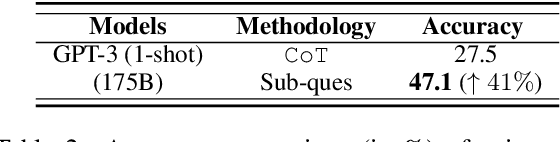

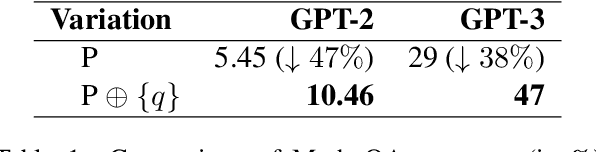

Step-by-step reasoning approaches like chain-of-thought (CoT) have proved to be a very effective technique to induce reasoning capabilities in large language models. However, the success of the CoT approach depends primarily on model size, and often billion parameter-scale models are needed to get CoT to work. In this paper, we propose a knowledge distillation approach that leverages the step-by-step CoT reasoning capabilities of larger models and distils these reasoning abilities into smaller models. Our approach, Decompositional Distillation, learns a semantic decomposition of the original problem into a sequence of subproblems and uses it to train two models: a) a problem decomposer that learns to decompose the complex reasoning problem into a sequence of simpler subproblems and b) a problem solver that uses the intermediate subproblems to solve the overall problem. On a multi-step math word problem dataset (GSM8K), we boost the performance of GPT-2 variants up to 35% when distilled with our approach compared to CoT. We show that using our approach, it is possible to train a GPT-2-large model (775M) that can outperform a 10X larger GPT-3 (6B) model trained using CoT reasoning. Finally, we demonstrate that our approach of problem decomposition can also be used as an alternative to CoT prompting, which boosts the GPT-3 performance by 40% compared to CoT prompts.

Automatic Generation of Socratic Subquestions for Teaching Math Word Problems

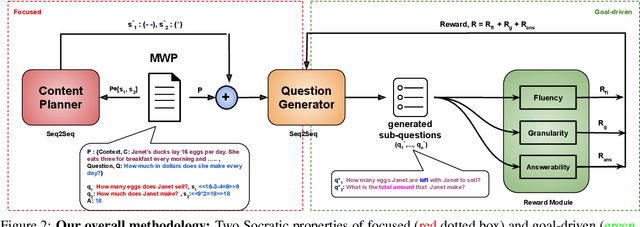

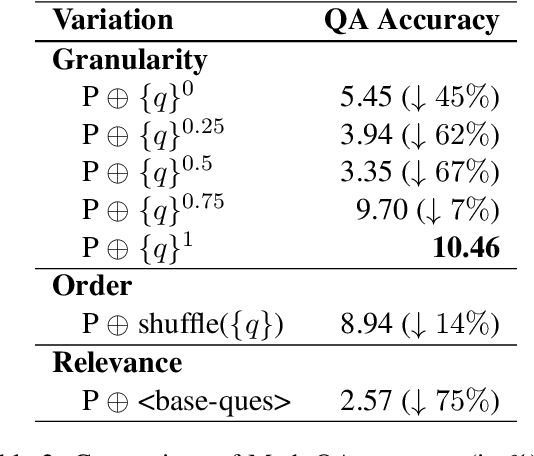

Nov 23, 2022 · Kumar Shridhar, Jakub Macina, Mennatallah El-Assady, Tanmay Sinha, Manu Kapur, Mrinmaya Sachan

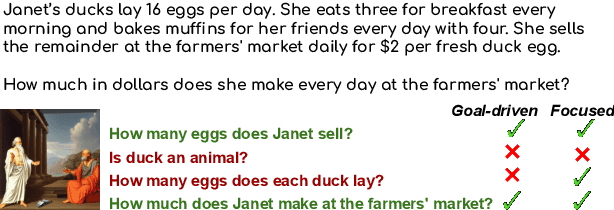

Socratic questioning is an educational method that allows students to discover answers to complex problems by asking them a series of thoughtful questions. Generation of didactically sound questions is challenging, requiring understanding of the reasoning process involved in the problem. We hypothesize that such a questioning strategy can not only enhance human performance, but also assist math word problem (MWP) solvers. In this work, we explore the ability of large language models (LMs) to generate sequential questions for guiding math word problem-solving. We propose various guided question generation schemes based on input conditioning and reinforcement learning. On both automatic and human quality evaluations, we find that LMs constrained with desirable question properties generate superior questions and improve the overall performance of a math word problem solver. We conduct a preliminary user study to examine the potential value of such question generation models in the education domain. Results suggest that the difficulty level of problems plays an important role in determining whether questioning improves or hinders human performance. We discuss the future of using such questioning strategies in education.

Autoregressive Structured Prediction with Language Models

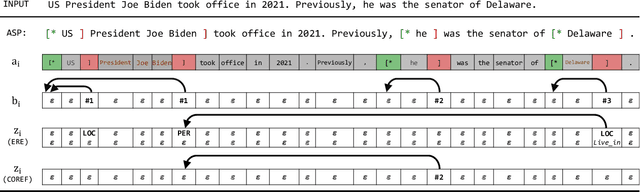

Nov 17, 2022 · Tianyu Liu, Yuchen Jiang, Nicholas Monath, Ryan Cotterell, Mrinmaya Sachan

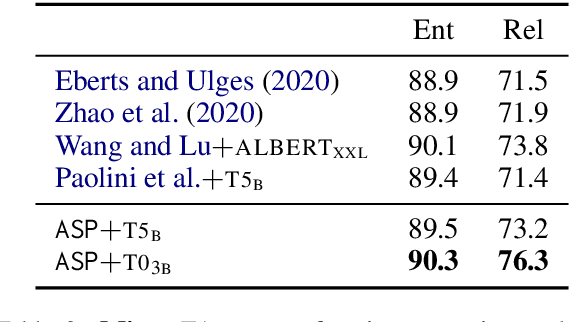

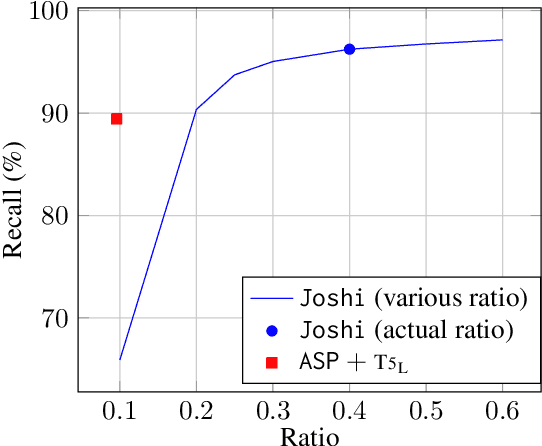

Recent years have seen a paradigm shift in NLP towards using pretrained language models (PLMs) for a wide range of tasks. However, there are many difficult design decisions in representing structures (e.g., tagged text, coreference chains) in a way such that they can be captured by PLMs. Prior work on structured prediction with PLMs typically flattens the structured output into a sequence, which limits the quality of structural information being learned and leads to inferior performance compared to classic discriminative models. In this work, we describe an approach to model structures as sequences of actions in an autoregressive manner with PLMs, allowing in-structure dependencies to be learned without any loss. Our approach achieves a new state of the art on all the structured prediction tasks we looked at, namely, named entity recognition, end-to-end relation extraction, and coreference resolution.

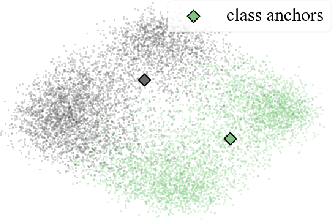

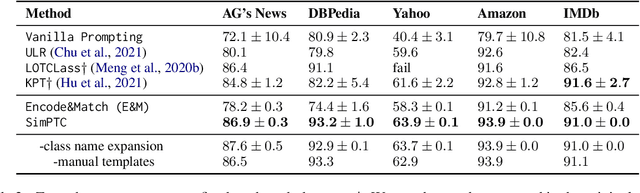

Beyond prompting: Making Pre-trained Language Models Better Zero-shot Learners by Clustering Representations

Oct 29, 2022 · Yu Fei, Ping Nie, Zhao Meng, Roger Wattenhofer, Mrinmaya Sachan

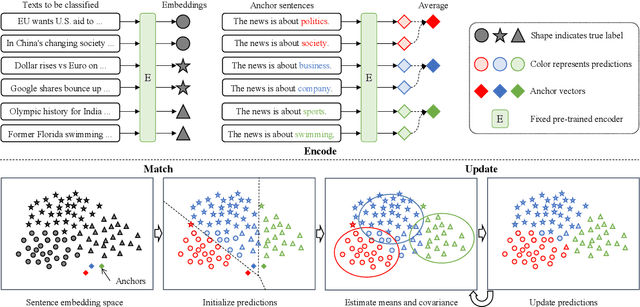

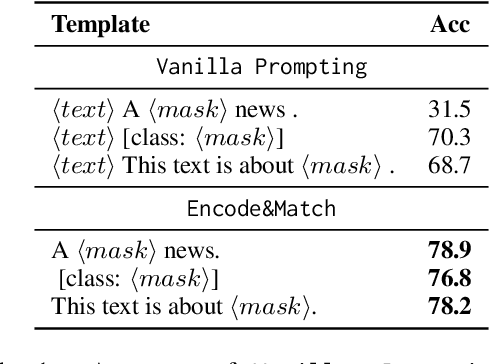

Recent work has demonstrated that pre-trained language models (PLMs) are zero-shot learners. However, most existing zero-shot methods involve heavy human engineering or complicated self-training pipelines, hindering their application to new situations. In this work, we show that zero-shot text classification can be improved simply by clustering texts in the embedding spaces of PLMs. Specifically, we fit the unlabeled texts with a Bayesian Gaussian Mixture Model after initializing cluster positions and shapes using class names. Despite its simplicity, this approach achieves superior or comparable performance on both topic and sentiment classification datasets and outperforms prior works significantly on unbalanced datasets. We further explore the applicability of our clustering approach by evaluating it on 14 datasets with more diverse topics, text lengths, and numbers of classes. Our approach achieves an average of 20% absolute improvement over prompt-based zero-shot learning. Finally, we compare different PLM embedding spaces and find that texts are well-clustered by topics even if the PLM is not explicitly pre-trained to generate meaningful sentence embeddings. This work indicates that PLM embeddings can categorize texts without task-specific fine-tuning, thus providing a new way to analyze and utilize their knowledge and zero-shot learning ability.
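The core idea, seeding clusters from class names and then fitting them to unlabeled texts, can be sketched as follows. This is a deliberately simplified stand-in: it uses hard assignments and mean updates (a k-means-style loop) rather than the Bayesian Gaussian Mixture Model named in the abstract, and the vectors are toy 2-D points rather than real PLM embeddings. The function `cluster_with_class_seeds` and its parameters are hypothetical names chosen for illustration.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equally long vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean_vector(vectors):
    """Component-wise mean of a non-empty list of vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def cluster_with_class_seeds(text_vecs, class_vecs, iterations=10):
    """Assign each text to a class by clustering seeded at class-name vectors.

    Assumption: hard assignments replace the soft, Bayesian mixture fit.
    """
    centers = [list(v) for v in class_vecs]  # one cluster per class name
    labels = []
    for _ in range(iterations):
        # Assign every text to its nearest cluster center.
        labels = [
            min(range(len(centers)),
                key=lambda k: squared_distance(v, centers[k]))
            for v in text_vecs
        ]
        # Move each center to the mean of the texts assigned to it.
        for k in range(len(centers)):
            members = [v for v, lab in zip(text_vecs, labels) if lab == k]
            if members:
                centers[k] = mean_vector(members)
    return labels


# Toy usage: two classes, four unlabeled "texts" in a 2-D embedding space.
class_vecs = [[1.0, 0.0], [0.0, 1.0]]
text_vecs = [[0.9, 0.2], [0.8, 0.1], [0.1, 0.7], [0.2, 0.9]]
labels = cluster_with_class_seeds(text_vecs, class_vecs)
```

Because cluster `k` starts at the embedding of class name `k`, the cluster index doubles as the predicted label, so no labeled examples are needed.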

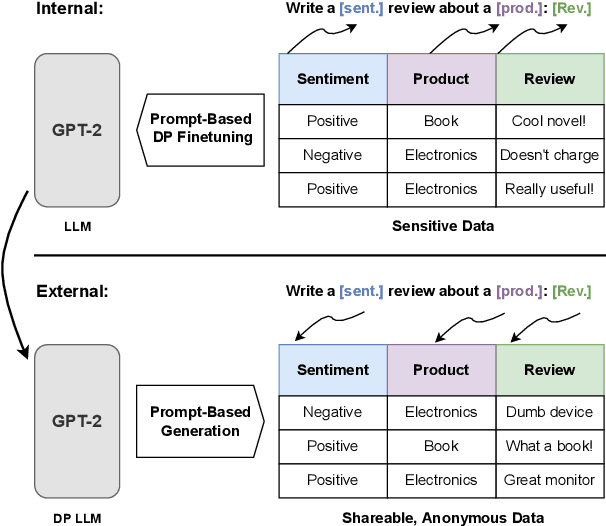

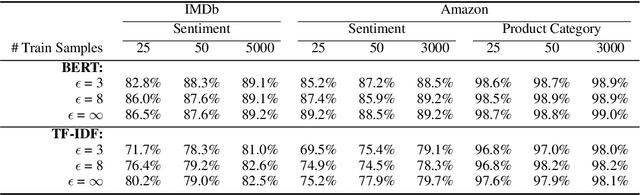

Differentially Private Language Models for Secure Data Sharing

Oct 26, 2022 · Justus Mattern, Zhijing Jin, Benjamin Weggenmann, Bernhard Schoelkopf, Mrinmaya Sachan

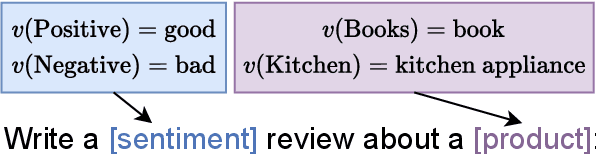

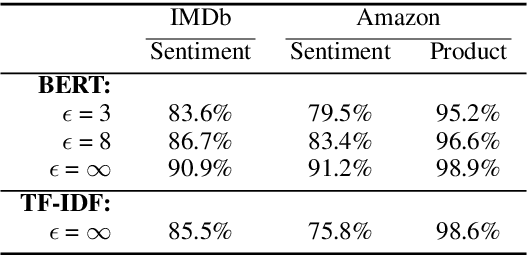

To protect the privacy of individuals whose data is being shared, it is important to develop methods that allow researchers and companies to release textual data while providing formal privacy guarantees to its originators. In the field of NLP, substantial efforts have been directed at building mechanisms following the framework of local differential privacy, thereby anonymizing individual text samples before releasing them. In practice, these approaches are often unsatisfactory in terms of the quality of their output language due to the strong noise required for local differential privacy. In this paper, we approach the problem at hand using global differential privacy, particularly by training a generative language model in a differentially private manner and subsequently sampling data from it. Using natural language prompts and a new prompt-mismatch loss, we are able to create highly accurate and fluent textual datasets taking on specific desired attributes such as sentiment or topic and resembling statistical properties of the training data. We perform thorough experiments indicating that our synthetic datasets do not leak information from our original data and are of high language quality and highly suitable for training models for further analysis on real-world data. Notably, we also demonstrate that training classifiers on private synthetic data outperforms directly training classifiers on real data with DP-SGD.
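For readers unfamiliar with DP-SGD, which the abstract names as the baseline for training on real data, the sketch below shows the standard shape of one update: clip each example's gradient to a fixed norm, sum, add Gaussian noise scaled to that norm, then average. It is a minimal illustration, not the authors' training code: `dp_sgd_step` and its parameters are hypothetical names, gradients are plain Python lists, and privacy accounting (tracking the resulting privacy budget) is omitted entirely.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(weights, per_example_grads, lr, max_norm, noise_multiplier, rng):
    """One DP-SGD update on a flat weight vector.

    Assumption: noise standard deviation is noise_multiplier * max_norm,
    added once to the summed clipped gradients.
    """
    clipped = [clip(g, max_norm) for g in per_example_grads]
    summed = [sum(col) for col in zip(*clipped)]
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    batch_size = len(per_example_grads)
    return [w - lr * n / batch_size for w, n in zip(weights, noisy)]


# Toy usage with the noise switched off so the clipping effect is visible.
rng = random.Random(0)
new_weights = dp_sgd_step(
    weights=[0.0, 0.0],
    per_example_grads=[[3.0, 4.0], [0.0, 0.0]],
    lr=1.0,
    max_norm=1.0,
    noise_multiplier=0.0,
    rng=rng,
)
```

Clipping bounds how much any single example can move the weights, and the noise masks what remains; with `noise_multiplier=0.0` the step offers no privacy and is shown only to make the arithmetic easy to follow.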
