Lorenz Wellhausen

Learning Robust Autonomous Navigation and Locomotion for Wheeled-Legged Robots

May 03, 2024

Abstract:Autonomous wheeled-legged robots have the potential to transform logistics systems, improving operational efficiency and adaptability in urban environments. Navigating urban environments, however, poses unique challenges for robots, necessitating innovative solutions for locomotion and navigation. These challenges include the need for adaptive locomotion across varied terrains and the ability to navigate efficiently around complex dynamic obstacles. This work introduces a fully integrated system comprising adaptive locomotion control, mobility-aware local navigation planning, and large-scale path planning within the city. Using model-free reinforcement learning (RL) techniques and privileged learning, we develop a versatile locomotion controller. This controller achieves efficient and robust locomotion over various rough terrains, facilitated by smooth transitions between walking and driving modes. It is tightly integrated with a learned navigation controller through a hierarchical RL framework, enabling effective navigation through challenging terrain and various obstacles at high speed. Our controllers are integrated into a large-scale urban navigation system and validated by autonomous, kilometer-scale navigation missions conducted in Zurich, Switzerland, and Seville, Spain. These missions demonstrate the system's robustness and adaptability, underscoring the importance of integrated control systems in achieving seamless navigation in complex environments. Our findings support the feasibility of wheeled-legged robots and hierarchical RL for autonomous navigation, with implications for last-mile delivery and beyond.

Learning to walk in confined spaces using 3D representation

Feb 29, 2024

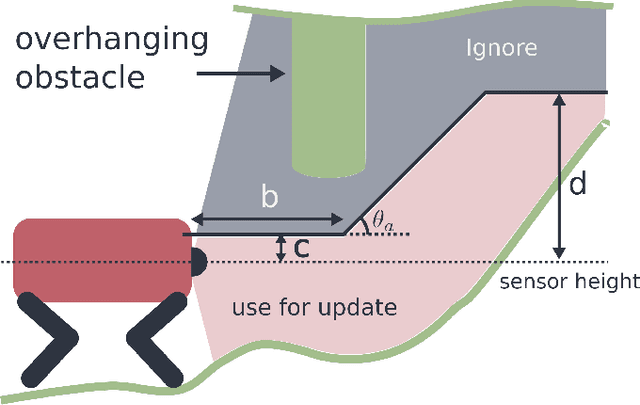

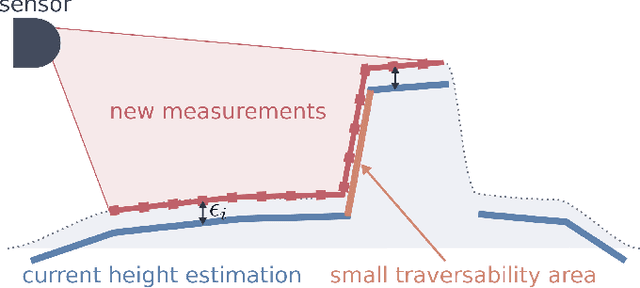

Abstract:Legged robots have the potential to traverse complex terrain and access confined spaces beyond the reach of traditional platforms thanks to their ability to carefully select footholds and flexibly adapt their body posture while walking. However, robust deployment in real-world applications is still an open challenge. In this paper, we present a method for legged locomotion control using reinforcement learning and 3D volumetric representations to enable robust and versatile locomotion in confined and unstructured environments. By employing a two-layer hierarchical policy structure, we exploit the capabilities of a highly robust low-level policy to follow 6D commands and a high-level policy to enable three-dimensional spatial awareness for navigating under overhanging obstacles. Our study includes the development of a procedural terrain generator to create diverse training environments. We present a series of experimental evaluations in both simulation and real-world settings, demonstrating the effectiveness of our approach in controlling a quadruped robot in confined, rough terrain. By achieving this, our work extends the applicability of legged robots to a broader range of scenarios.

ArtPlanner: Robust Legged Robot Navigation in the Field

Mar 02, 2023

Abstract:Due to the highly complex environment present during the DARPA Subterranean Challenge, all six funded teams relied on legged robots as part of their robotic team. Their unique locomotion skills of being able to step over obstacles require special considerations for navigation planning. In this work, we present and examine ArtPlanner, the navigation planner used by team CERBERUS during the Finals. It is based on a sampling-based method that determines valid poses with a reachability abstraction and uses learned foothold scores to restrict areas considered safe for stepping. The resulting planning graph is assigned learned motion costs by a neural network trained in simulation to minimize traversal time and limit the risk of failure. Our method achieves real-time performance with a bounded computation time. We present extensive experimental results gathered during the Finals event of the DARPA Subterranean Challenge, where this method contributed to team CERBERUS winning the competition. It powered navigation of four ANYmal quadrupeds for 90 minutes of autonomous operation without a single planning or locomotion failure.

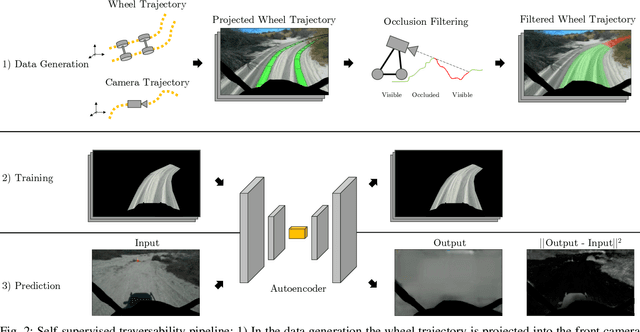

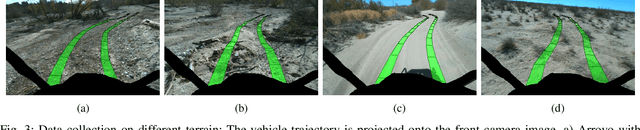

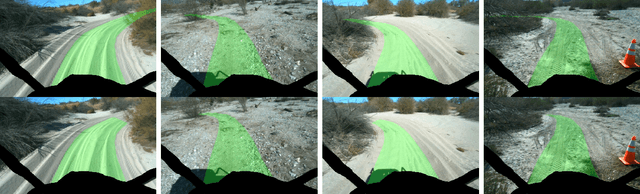

Self-Supervised Traversability Prediction by Learning to Reconstruct Safe Terrain

Aug 02, 2022

Abstract:Navigating off-road with a fast autonomous vehicle depends on a robust perception system that differentiates traversable from non-traversable terrain. Typically, this depends on a semantic understanding which is based on supervised learning from images annotated by a human expert. This requires a significant investment in human time, assumes correct expert classification, and small details can lead to misclassification. To address these challenges, we propose a method for predicting high- and low-risk terrains from only past vehicle experience in a self-supervised fashion. First, we develop a tool that projects the vehicle trajectory into the front camera image. Second, occlusions in the 3D representation of the terrain are filtered out. Third, an autoencoder trained on masked vehicle trajectory regions identifies low- and high-risk terrains based on the reconstruction error. We evaluated our approach with two models and different bottleneck sizes at two different training and testing sites using a four-wheeled off-road vehicle. Comparison with two independent test sets of semantic labels from terrain similar to the training sites demonstrates the ability to separate the ground as low-risk and the vegetation as high-risk with 81.1% and 85.1% accuracy.
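The third step of the abstract above, flagging terrain by reconstruction error, can be illustrated with a minimal sketch. It is not the authors' model: a linear autoencoder (PCA computed with an SVD) stands in for the learned autoencoder, the patch features are synthetic, and the bottleneck size, the 99th-percentile threshold, and all names are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for features of image patches the vehicle drove over:
# "safe" terrain lies close to a 2-D subspace of a 16-D feature space.
basis = rng.normal(size=(2, 16))
safe_train = rng.normal(size=(200, 2)) @ basis + 0.01 * rng.normal(size=(200, 16))

# Linear autoencoder (PCA): encode by projecting onto the top-k principal
# directions, decode by projecting back. k plays the role of the bottleneck size.
k = 2
mean = safe_train.mean(axis=0)
_, _, vt = np.linalg.svd(safe_train - mean, full_matrices=False)
components = vt[:k]

def reconstruction_error(x):
    """Mean squared reconstruction error per sample."""
    centered = x - mean
    code = centered @ components.T          # encoder
    recon = code @ components               # decoder
    return np.mean((centered - recon) ** 2, axis=-1)

# Assumed decision rule: anything reconstructed worse than 99% of the
# traversed training patches is labelled high-risk.
threshold = np.percentile(reconstruction_error(safe_train), 99)

def is_high_risk(x):
    return reconstruction_error(x) > threshold

# Patches resembling driven-over terrain vs. patches unlike anything seen.
safe_test = rng.normal(size=(50, 2)) @ basis + 0.01 * rng.normal(size=(50, 16))
unseen_test = rng.normal(size=(50, 16))

print("high-risk fraction, familiar terrain:", is_high_risk(safe_test).mean())
print("high-risk fraction, unseen terrain:  ", is_high_risk(unseen_test).mean())
```

Because the model only ever sees terrain the vehicle actually traversed, no human labels are needed; the threshold is the only quantity that has to be chosen.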

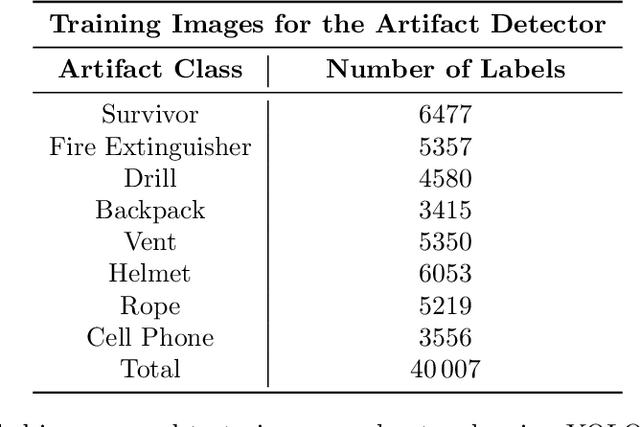

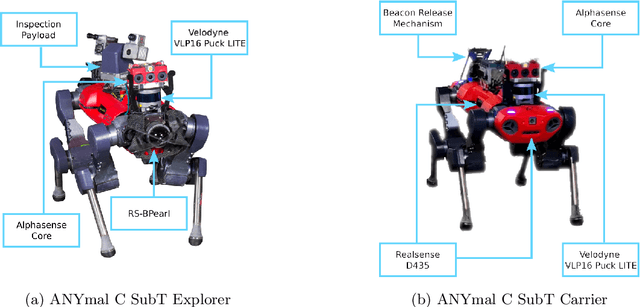

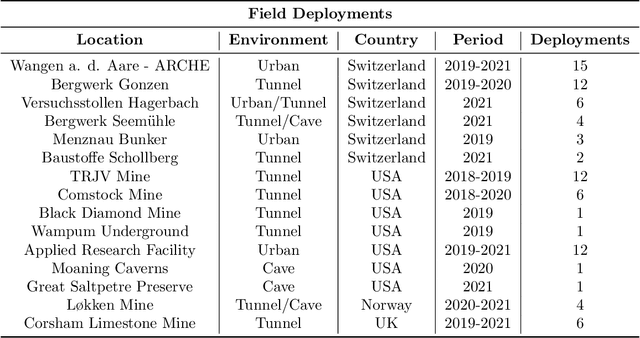

Team CERBERUS Wins the DARPA Subterranean Challenge: Technical Overview and Lessons Learned

Jul 11, 2022

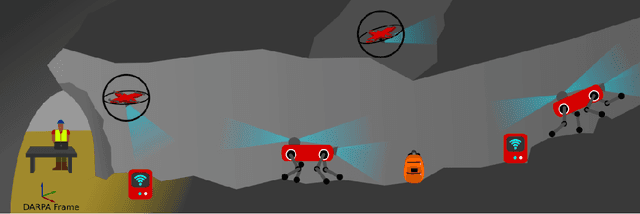

Abstract:This article presents the CERBERUS robotic system-of-systems, which won the DARPA Subterranean Challenge Final Event in 2021. The Subterranean Challenge was organized by DARPA with the vision to facilitate the novel technologies necessary to reliably explore diverse underground environments despite the grueling challenges they present for robotic autonomy. Due to their geometric complexity, degraded perceptual conditions combined with lack of GPS support, austere navigation conditions, and denied communications, subterranean settings render autonomous operations particularly demanding. In response to this challenge, we developed the CERBERUS system which exploits the synergy of legged and flying robots, coupled with robust control especially for overcoming perilous terrain, multi-modal and multi-robot perception for localization and mapping in conditions of sensor degradation, and resilient autonomy through unified exploration path planning and local motion planning that reflects robot-specific limitations. Based on its ability to explore diverse underground environments and its high-level command and control by a single human supervisor, CERBERUS demonstrated efficient exploration, reliable detection of objects of interest, and accurate mapping. In this article, we report results from both the preliminary runs and the final Prize Round of the DARPA Subterranean Challenge, and discuss highlights and challenges faced, alongside lessons learned for the benefit of the community.

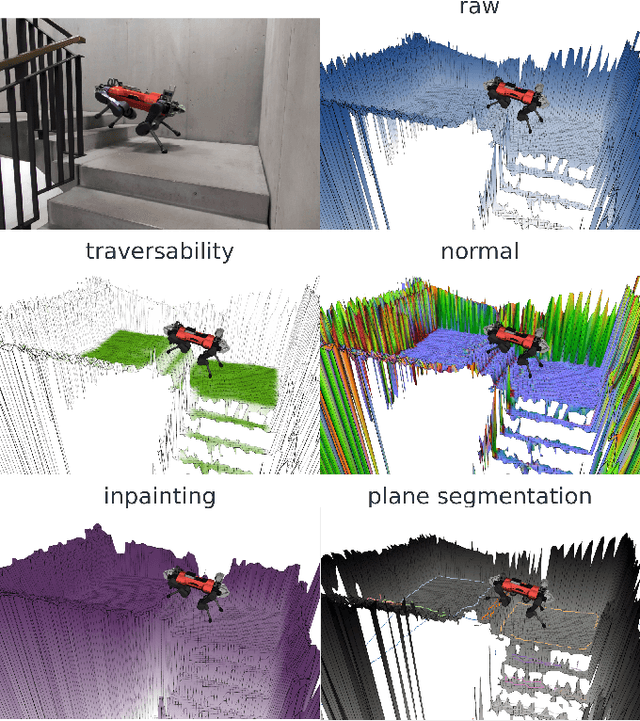

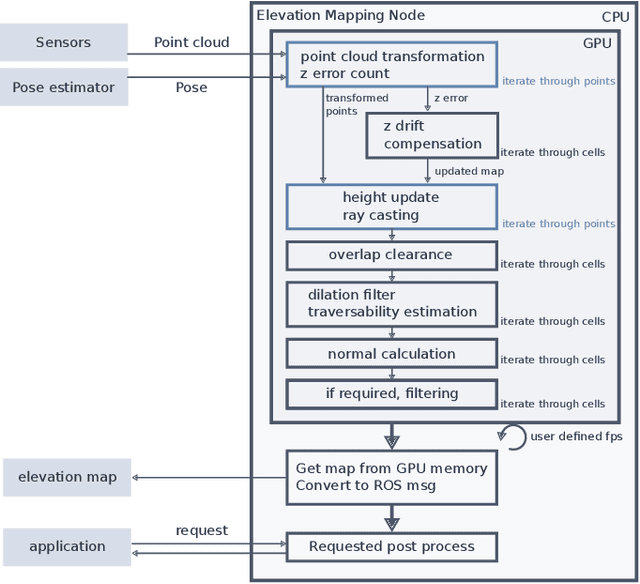

Elevation Mapping for Locomotion and Navigation using GPU

Apr 27, 2022

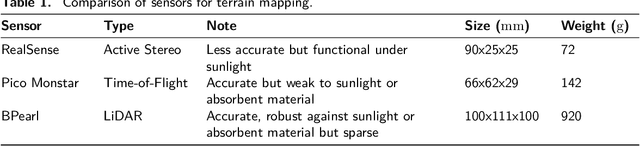

Abstract:Perceiving the surrounding environment is crucial for autonomous mobile robots. An elevation map provides a memory-efficient and simple yet powerful geometric representation for ground robots. The robots can use this information for navigation in an unknown environment or perceptive locomotion control over rough terrain. Depending on the application, various post processing steps may be incorporated, such as smoothing, inpainting or plane segmentation. In this work, we present an elevation mapping pipeline leveraging GPU for fast and efficient processing with additional features both for navigation and locomotion. We demonstrated our mapping framework through extensive hardware experiments. Our mapping software was successfully deployed for underground exploration during DARPA Subterranean Challenge and for various experiments of quadrupedal locomotion.
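As a rough illustration of the representation described above, the following sketch rasterizes a point cloud into a 2.5D height grid on the CPU with NumPy. It is not the GPU pipeline from the paper: keeping the maximum height per cell is an assumed fusion rule, and the function name, grid size, and resolution are hypothetical.

```python
import numpy as np

def build_elevation_map(points, resolution, size):
    """Rasterize an (N, 3) point cloud into a size x size height grid.

    The grid is centered on the origin; cells without any measurement are NaN.
    Keeping the highest point per cell is an assumption of this sketch.
    """
    grid = np.full((size, size), -np.inf)
    half_extent = size * resolution / 2.0

    # Convert x/y coordinates to integer cell indices.
    idx = np.floor((points[:, :2] + half_extent) / resolution).astype(int)

    # Discard points that fall outside the map.
    inside = np.all((idx >= 0) & (idx < size), axis=1)
    idx, z = idx[inside], points[inside, 2]

    # Unbuffered maximum so that several points in one cell are all considered.
    np.maximum.at(grid, (idx[:, 0], idx[:, 1]), z)

    grid[np.isinf(grid)] = np.nan
    return grid

points = np.array([
    [0.05, 0.05, 0.2],   # two points share a cell; the higher one wins
    [0.06, 0.04, 0.5],
    [-0.15, 0.05, 0.1],
    [5.0, 5.0, 1.0],     # outside the 0.4 m x 0.4 m map, ignored
])
elevation = build_elevation_map(points, resolution=0.1, size=4)
print(elevation)
```

Storing one height per cell is what keeps the map memory-efficient; post-processing such as smoothing or inpainting would then operate on this 2D array.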

Learning robust perceptive locomotion for quadrupedal robots in the wild

Jan 20, 2022

Abstract:Legged robots that can operate autonomously in remote and hazardous environments will greatly increase opportunities for exploration into under-explored areas. Exteroceptive perception is crucial for fast and energy-efficient locomotion: perceiving the terrain before making contact with it enables planning and adaptation of the gait ahead of time to maintain speed and stability. However, utilizing exteroceptive perception robustly for locomotion has remained a grand challenge in robotics. Snow, vegetation, and water visually appear as obstacles on which the robot cannot step, or are missing altogether due to high reflectance. Additionally, depth perception can degrade due to difficult lighting, dust, fog, reflective or transparent surfaces, sensor occlusion, and more. For this reason, the most robust and general solutions to legged locomotion to date rely solely on proprioception. This severely limits locomotion speed, because the robot has to physically feel out the terrain before adapting its gait accordingly. Here we present a robust and general solution to integrating exteroceptive and proprioceptive perception for legged locomotion. We leverage an attention-based recurrent encoder that integrates proprioceptive and exteroceptive input. The encoder is trained end-to-end and learns to seamlessly combine the different perception modalities without resorting to heuristics. The result is a legged locomotion controller with high robustness and speed. The controller was tested in a variety of challenging natural and urban environments over multiple seasons and completed an hour-long hike in the Alps in the time recommended for human hikers.

CERBERUS: Autonomous Legged and Aerial Robotic Exploration in the Tunnel and Urban Circuits of the DARPA Subterranean Challenge

Jan 18, 2022

Abstract:Autonomous exploration of subterranean environments constitutes a major frontier for robotic systems as underground settings present key challenges that can render robot autonomy hard to achieve. This has motivated the DARPA Subterranean Challenge, where teams of robots search for objects of interest in various underground environments. In response, the CERBERUS system-of-systems is presented as a unified strategy towards subterranean exploration using legged and flying robots. As primary robots, ANYmal quadruped systems are deployed considering their endurance and potential to traverse challenging terrain. For aerial robots, both conventional and collision-tolerant multirotors are utilized to explore spaces too narrow or otherwise unreachable by ground systems. Anticipating degraded sensing conditions, a complementary multi-modal sensor fusion approach utilizing camera, LiDAR, and inertial data for resilient robot pose estimation is proposed. Individual robot pose estimates are refined by a centralized multi-robot map optimization approach to improve the reported location accuracy of detected objects of interest in the DARPA-defined coordinate frame. Furthermore, a unified exploration path planning policy is presented to facilitate the autonomous operation of both legged and aerial robots in complex underground networks. Finally, to enable communication between the robots and the base station, CERBERUS utilizes a ground rover with a high-gain antenna and an optical fiber connection to the base station, alongside breadcrumbing of wireless nodes by our legged robots. We report results from the CERBERUS system-of-systems deployment at the DARPA Subterranean Challenge Tunnel and Urban Circuits, along with the current limitations and the lessons learned for the benefit of the community.

Deep Measurement Updates for Bayes Filters

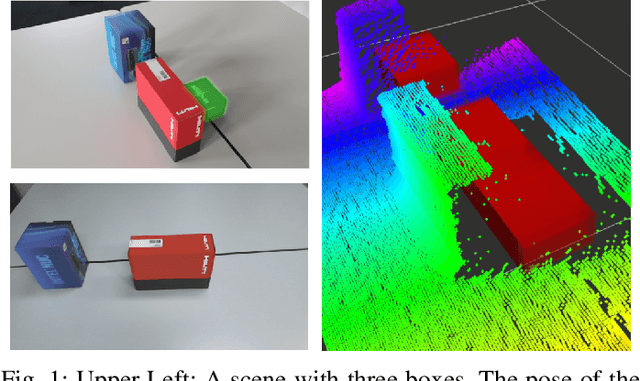

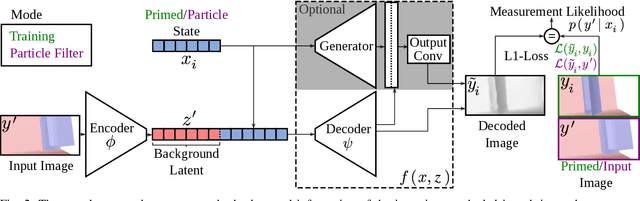

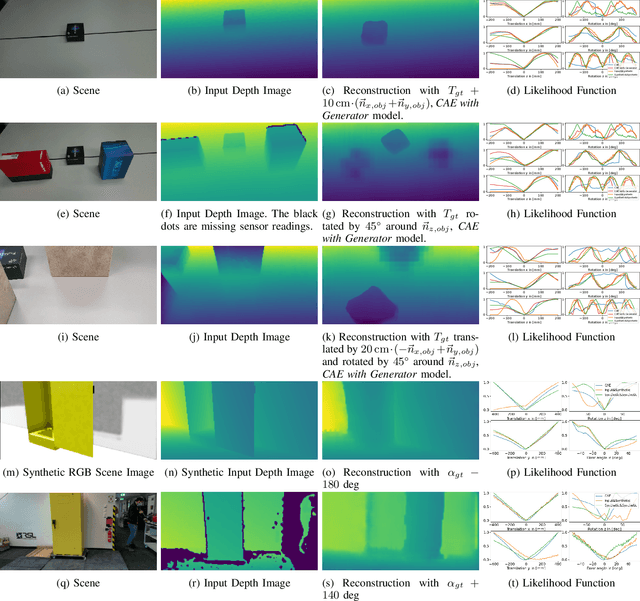

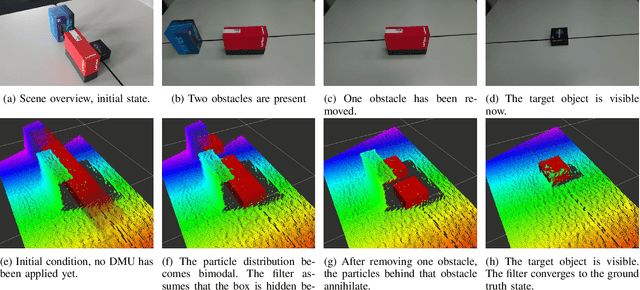

Dec 01, 2021

Abstract:Measurement update rules for Bayes filters often contain hand-crafted heuristics to compute observation probabilities for high-dimensional sensor data, like images. In this work, we propose the novel approach Deep Measurement Update (DMU) as a general update rule for a wide range of systems. DMU has a conditional encoder-decoder neural network structure to process depth images as raw inputs. Even though the network is trained only on synthetic data, the model shows good performance at evaluation time on real-world data. With our proposed training scheme, primed data training, we demonstrate how the DMU models can be trained efficiently to be sensitive to condition variables without having to rely on a stochastic information bottleneck. We validate the proposed methods in multiple scenarios of increasing complexity, beginning with the pose estimation of a single object to the joint estimation of the pose and the internal state of an articulated system. Moreover, we provide a benchmark against Articulated Signed Distance Functions (A-SDF) on the RBO dataset as a baseline comparison for articulation state estimation.
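For readers unfamiliar with the step that DMU replaces, the sketch below shows a textbook Bayes-filter measurement update over a small discrete state space. The likelihood values are hand-specified placeholders; in the paper's setting that term would come from the learned network, which is not reproduced here. The function name and the numbers are assumptions for illustration.

```python
import numpy as np

def measurement_update(prior, likelihood):
    """Bayes measurement update over a discrete state space.

    prior[i]      = p(x_i | z_1, ..., z_{t-1})
    likelihood[i] = p(z_t | x_i)
    Returns the posterior p(x_i | z_1, ..., z_t).
    """
    prior = np.asarray(prior, dtype=float)
    likelihood = np.asarray(likelihood, dtype=float)

    unnormalized = likelihood * prior
    evidence = unnormalized.sum()            # p(z_t | z_1, ..., z_{t-1})
    if evidence <= 0.0:
        raise ValueError("observation has zero probability under the prior")
    return unnormalized / evidence

# Four candidate states, no prior preference.
prior = np.array([0.25, 0.25, 0.25, 0.25])
# Placeholder observation probabilities; a hand-crafted heuristic or a
# learned model would normally supply these.
likelihood = np.array([0.1, 0.6, 0.2, 0.1])

posterior = measurement_update(prior, likelihood)
print(posterior)
```

With a uniform prior the posterior is simply the normalized likelihood, which makes it easy to see that the quality of the filter rests entirely on how the observation probabilities are computed.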

Learning a State Representation and Navigation in Cluttered and Dynamic Environments

Mar 07, 2021

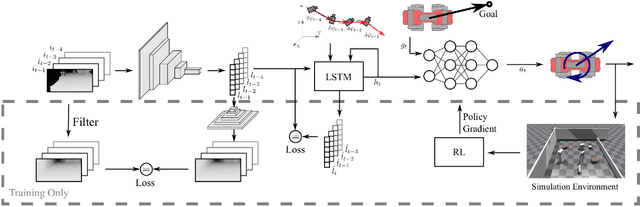

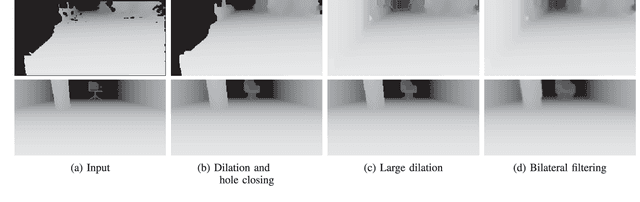

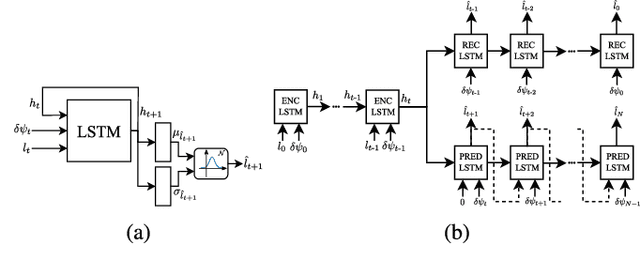

Abstract:In this work, we present a learning-based pipeline to realise local navigation with a quadrupedal robot in cluttered environments with static and dynamic obstacles. Given high-level navigation commands, the robot is able to safely locomote to a target location based on frames from a depth camera without any explicit mapping of the environment. First, the sequence of images and the current trajectory of the camera are fused to form a model of the world using state representation learning. The output of this lightweight module is then directly fed into a target-reaching and obstacle-avoiding policy trained with reinforcement learning. We show that decoupling the pipeline into these components results in a sample-efficient policy learning stage that can be fully trained in simulation in just a dozen minutes. The key part is the state representation, which not only is trained to estimate the hidden state of the world in an unsupervised fashion, but also helps bridge the reality gap, enabling successful sim-to-real transfer. In our experiments with the quadrupedal robot ANYmal in simulation and in reality, we show that our system can handle noisy depth images, avoid dynamic obstacles unseen during training, and is endowed with local spatial awareness.

* 8 pages, 8 figures, 2 tables
