Exploiting Constructive Interference for Backscatter Communication Systems

May 22, 2022 · Gu Bowen, Li Dong, Liu Ye, Xu Yongjun

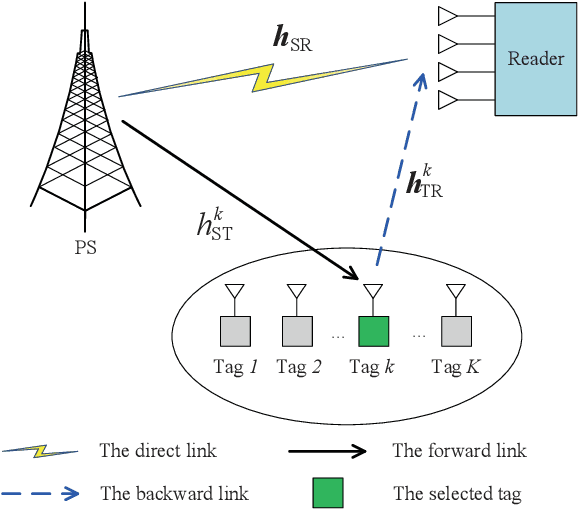

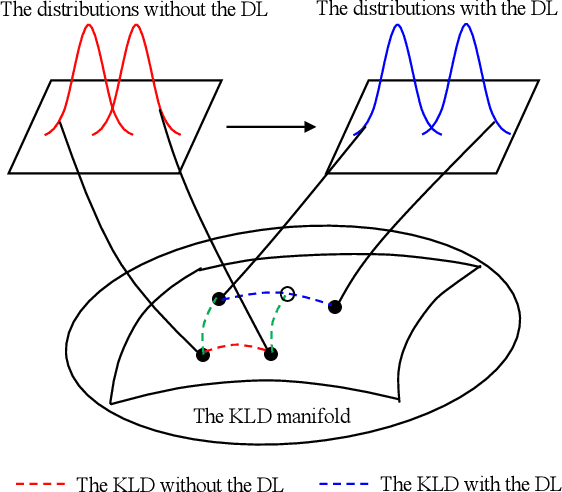

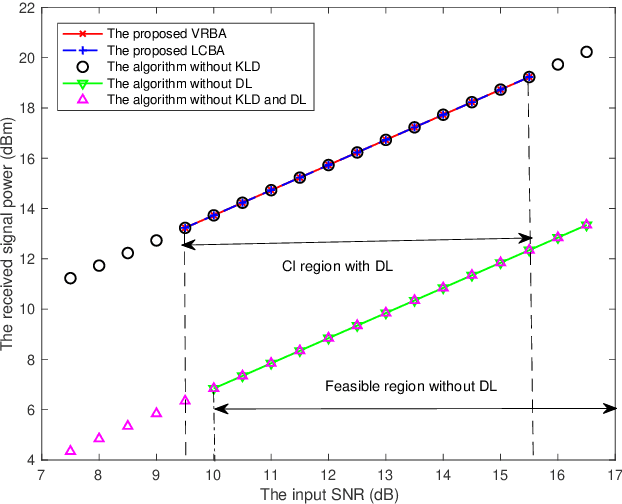

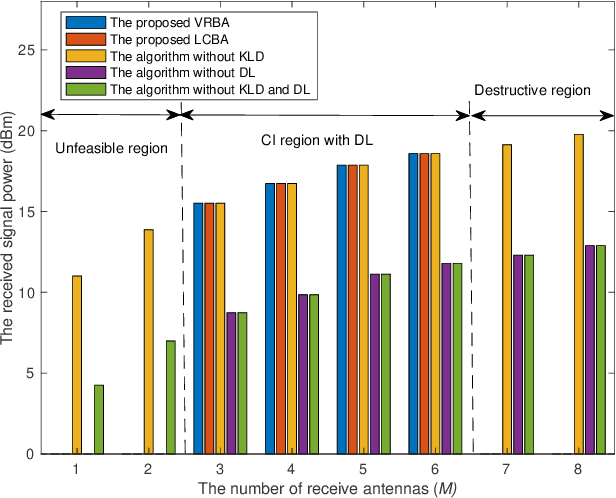

Backscatter communication (BackCom), one of the core technologies to realize zero-power communication, is expected to be a pivotal paradigm for the next generation of the Internet of Things (IoT). However, the "strong" direct link (DL) interference (DLI) is traditionally assumed to be harmful because it generally drowns out the "weak" backscattered signals, thus deteriorating the performance of BackCom. In contrast to previous efforts to eliminate the DLI, in this paper we exploit constructive interference (CI), in which the DLI contributes to the backscattered signal. To be specific, our objective is to maximize the received signal power by jointly optimizing the receive beamforming vectors and tag selection factors. The resulting problem, however, is non-convex and difficult to solve due to constraints on the Kullback-Leibler (KL) divergence. To solve this problem, we first decompose the original problem, and then propose two algorithms, via a change of variables and semi-definite programming (SDP), to solve the beamforming-design sub-problem, and a greedy algorithm to solve the tag-selection sub-problem. To gain insight into the CI, we consider a special case with a single-antenna reader to reveal the channel angle between the backscattering link (BL) and the DL at which the DLI becomes constructive. Simulation results show that the proposed algorithms always achieve a significant gain in received signal strength over traditional algorithms without the DL. The derived constructive channel angle for the BackCom system with the single-antenna reader is also confirmed by simulation results.

Prototypical Calibration for Few-shot Learning of Language Models

May 20, 2022 · Zhixiong Han, Yaru Hao, Li Dong, Furu Wei

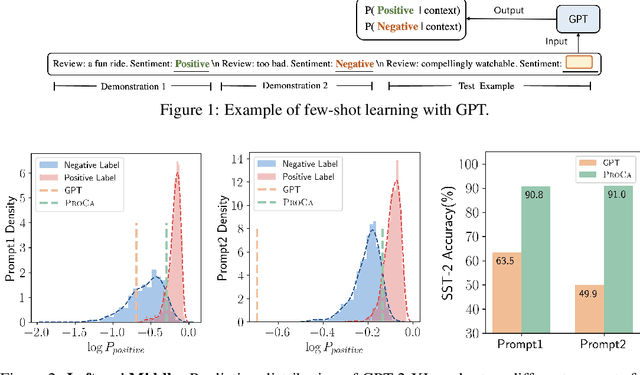

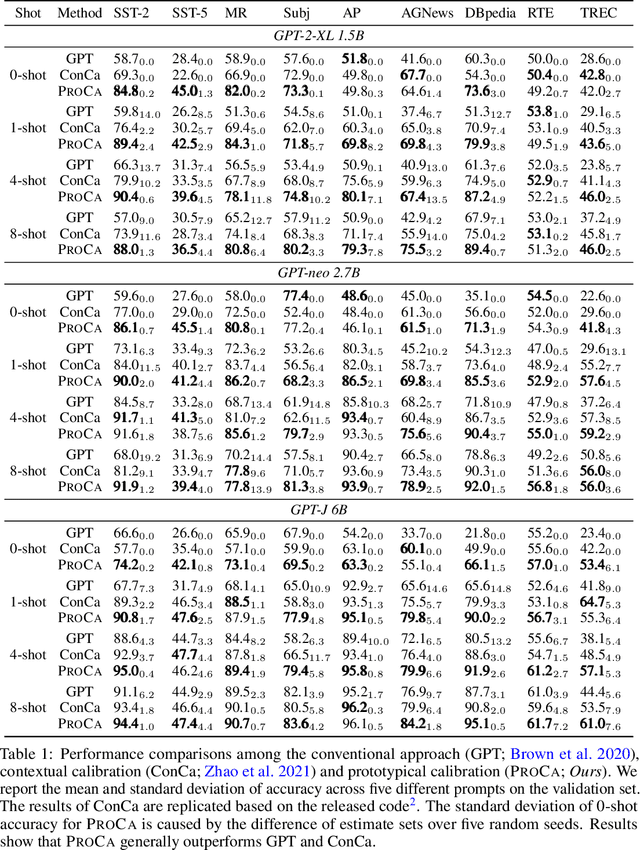

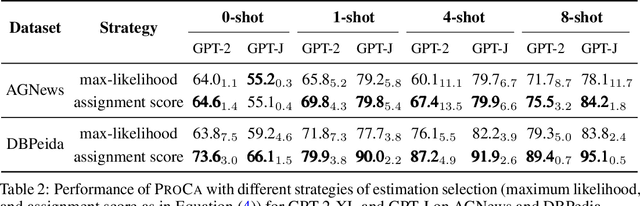

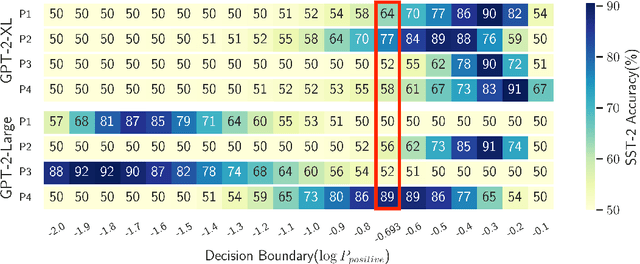

In-context learning of GPT-like models has been recognized as fragile across different hand-crafted templates and demonstration permutations. In this work, we propose prototypical calibration to adaptively learn a more robust decision boundary for zero- and few-shot classification, instead of greedy decoding. Concretely, our method first adopts a Gaussian mixture distribution to estimate the prototypical clusters for all categories. Then we assign each cluster to the corresponding label by solving a weighted bipartite matching problem. Given an example, its prediction is calibrated by the likelihood of prototypical clusters. Experimental results show that prototypical calibration yields a 15% absolute improvement on a diverse set of tasks. Extensive analysis across different scales also indicates that our method calibrates the decision boundary as expected, greatly improving the robustness of GPT to templates, permutations, and class imbalance.
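The cluster-to-label step and the likelihood-based prediction described in this abstract can be sketched roughly as follows. This is a minimal illustration and not the authors' implementation: the function names, the isotropic Gaussians, the use of cluster means as matching weights, and the brute-force search over permutations (practical only for a handful of classes) are all assumptions made for the example.

```python
from itertools import permutations
import math


def assign_clusters_to_labels(weights):
    """Return the one-to-one cluster -> label map with maximal total weight.

    weights[k][c] is a (hypothetical) affinity between cluster k and label c.
    Brute force over permutations stands in for a proper matching solver.
    """
    n = len(weights)
    best = max(
        permutations(range(n)),
        key=lambda perm: sum(weights[k][perm[k]] for k in range(n)),
    )
    return list(best)


def log_gaussian(x, mean, var):
    """Log density of an isotropic Gaussian (assumed shape of each cluster)."""
    sq_dist = sum((xi - mi) ** 2 for xi, mi in zip(x, mean))
    return -0.5 * (len(x) * math.log(2 * math.pi * var) + sq_dist / var)


def calibrated_predict(x, means, variances, mix_weights, cluster_to_label):
    """Predict the label of the most likely cluster instead of argmax(x)."""
    scores = [
        math.log(w) + log_gaussian(x, m, v)
        for m, v, w in zip(means, variances, mix_weights)
    ]
    best_cluster = max(range(len(scores)), key=scores.__getitem__)
    return cluster_to_label[best_cluster]


# Toy setting: the model is biased toward label 0, so both cluster means
# have their largest probability on label 0.
means = [[0.9, 0.1], [0.6, 0.4]]
mapping = assign_clusters_to_labels(means)
label = calibrated_predict([0.62, 0.38], means, [0.01, 0.01], [0.5, 0.5], mapping)
```

In this toy setting a plain argmax over `[0.62, 0.38]` would pick label 0, while the cluster-based rule picks the label matched to the nearer cluster.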

Visually-Augmented Language Modeling

May 20, 2022 · Weizhi Wang, Li Dong, Hao Cheng, Haoyu Song, Xiaodong Liu, Xifeng Yan, Jianfeng Gao, Furu Wei

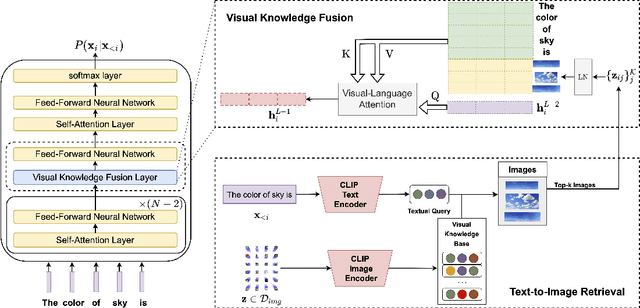

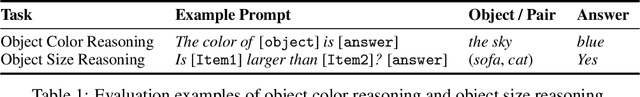

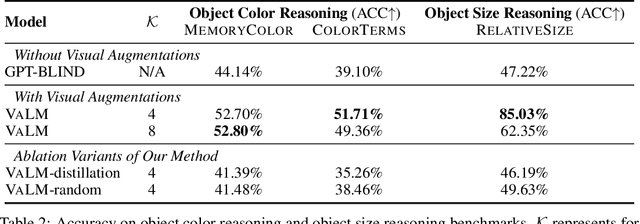

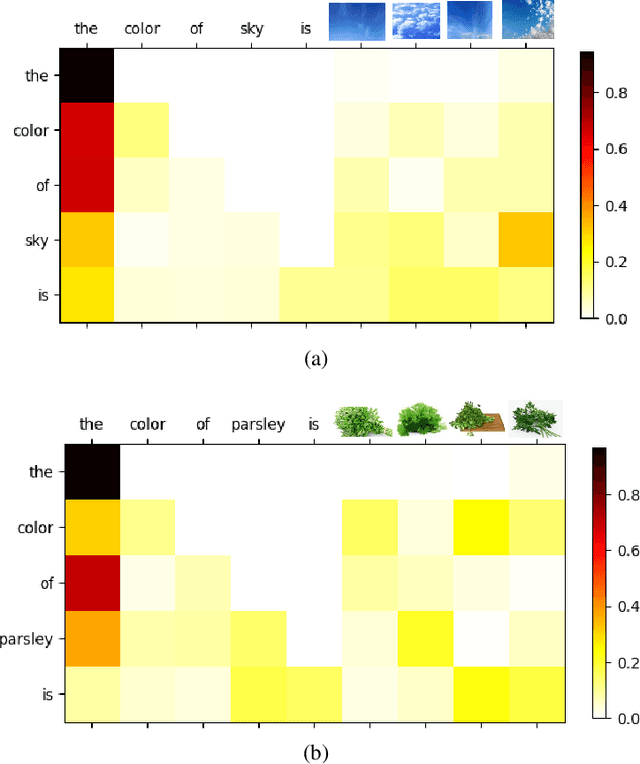

Human language is grounded on multimodal knowledge including visual knowledge like colors, sizes, and shapes. However, current large-scale pre-trained language models rely on text-only self-supervised training with massive text data, which precludes them from utilizing relevant visual information when necessary. To address this, we propose a novel pre-training framework, named VaLM, to Visually-augment text tokens with retrieved relevant images for Language Modeling. Specifically, VaLM builds on a novel text-vision alignment method via an image retrieval module to fetch corresponding images given a textual context. With the visually-augmented context, VaLM uses a visual knowledge fusion layer to enable multimodal grounded language modeling by attending to both text context and visual knowledge in images. We evaluate the proposed model on various multimodal commonsense reasoning tasks, which require visual information to excel. VaLM outperforms the text-only baseline with substantial gains of +8.66% and +37.81% accuracy on object color and size reasoning, respectively.

Transferability of Adversarial Attacks on Synthetic Speech Detection

May 16, 2022 · Jiacheng Deng, Shunyi Chen, Li Dong, Diqun Yan, Rangding Wang

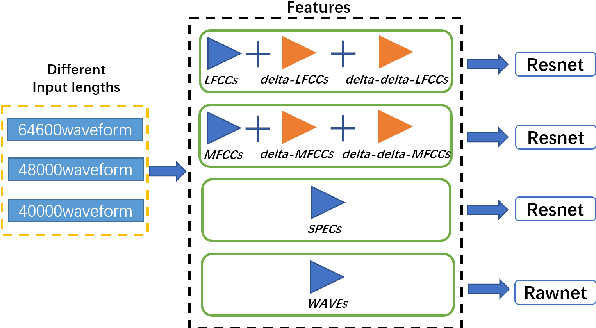

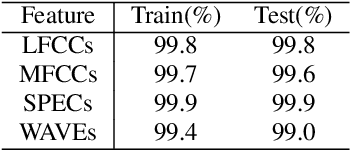

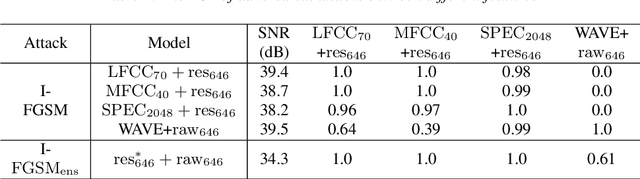

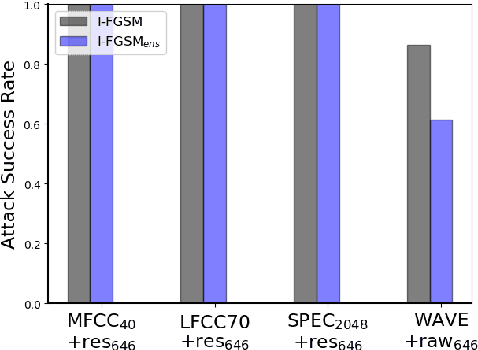

Synthetic speech detection is one of the most important research problems in audio security. Meanwhile, deep neural networks are vulnerable to adversarial attacks. Therefore, we establish a comprehensive benchmark to evaluate the transferability of adversarial attacks on the synthetic speech detection task. Specifically, we attempt to investigate: 1) The transferability of adversarial attacks between different features. 2) The influence of varying extraction hyperparameters of features on the transferability of adversarial attacks. 3) The effect of clipping or self-padding operation on the transferability of adversarial attacks. By performing these analyses, we summarise the weaknesses of synthetic speech detectors and the transferability behaviours of adversarial attacks, which provide insights for future research. More details can be found at https://gitee.com/djc_QRICK/Attack-Transferability-On-Synthetic-Detection.

Many a little Makes a Mickle: Probing Backscattering Energy Recycling for Backscatter Communications

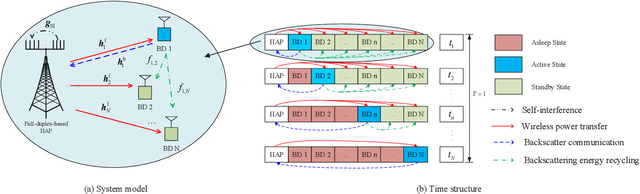

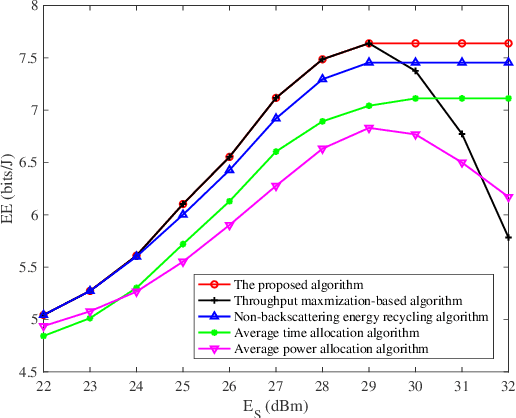

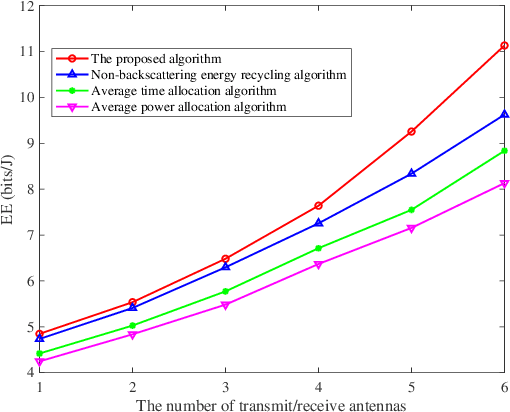

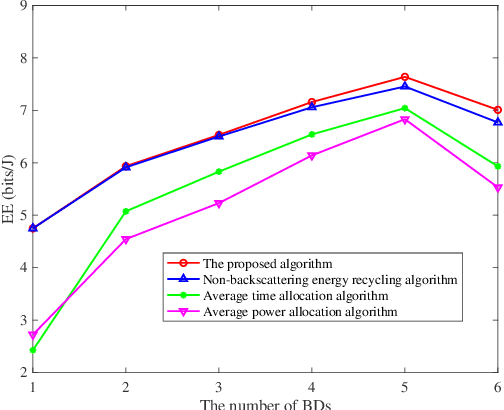

May 01, 2022 · Gu Bowen, Li Dong, Xu Yongjun, Li Chunguo, Sun Sumei

In this paper, we investigate and analyze full-duplex-based backscatter communications with multiple backscatter devices (BDs). Different from previous works where only the energy from the energy source is harvested, BDs are also allowed to harvest energy from previous BDs by recycling the backscattering energy. Our objective is to maximize the total energy efficiency (EE) of the system via joint time scheduling, beamforming design, and reflection coefficient (RC) adjustment while satisfying the constraints on the total time, the transmit energy consumption, the circuit energy consumption, and the achievable throughput for each BD, taking the causality and the non-linearity of energy harvesting into account. To deal with this intractable non-convex problem, we reformulate the problem by utilizing Dinkelbach's method. Subsequently, an alternating iterative algorithm is designed to solve it. Simulation results show that the proposed algorithm achieves a much better EE than the benchmark algorithms.
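For readers unfamiliar with Dinkelbach's method, the sketch below shows the generic idea on a toy energy-efficiency ratio: a fractional objective N(x)/D(x) is maximized by repeatedly solving the subtractive problem max N(x) - q·D(x) and updating q. The one-dimensional power grid, the `log2(1 + p)` throughput model, and the circuit power of 0.5 are illustrative assumptions and are not taken from the paper, whose actual problem also involves time scheduling, beamforming, and reflection coefficients.

```python
import math


def dinkelbach(candidates, numerator, denominator, tol=1e-9, max_iter=100):
    """Maximize numerator(x) / denominator(x) over a finite candidate set.

    Assumes denominator(x) > 0 for every candidate.
    """
    q = 0.0
    best = candidates[0]
    for _ in range(max_iter):
        # Solve the parametric (subtractive) problem for the current q.
        best = max(candidates, key=lambda x: numerator(x) - q * denominator(x))
        gap = numerator(best) - q * denominator(best)
        # Update q to the ratio achieved by the current maximizer.
        q = numerator(best) / denominator(best)
        if gap < tol:
            break  # the parametric optimum is ~0, so q is the best ratio
    return best, q


# Toy energy-efficiency example (all values are illustrative assumptions).
def throughput(p):
    return math.log2(1.0 + p)


def consumed_power(p):
    return p + 0.5  # transmit power plus a fixed circuit power


power_grid = [i / 100 for i in range(1, 501)]
best_power, best_ee = dinkelbach(power_grid, throughput, consumed_power)
```

On a finite candidate set the ratio `q` increases at every iteration, so the loop stops after a small number of passes; `best_ee` can be checked against a brute-force maximum of `throughput(p) / consumed_power(p)` over the same grid.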

On the Representation Collapse of Sparse Mixture of Experts

Apr 20, 2022 · Zewen Chi, Li Dong, Shaohan Huang, Damai Dai, Shuming Ma, Barun Patra, Saksham Singhal, Payal Bajaj, Xia Song, Furu Wei

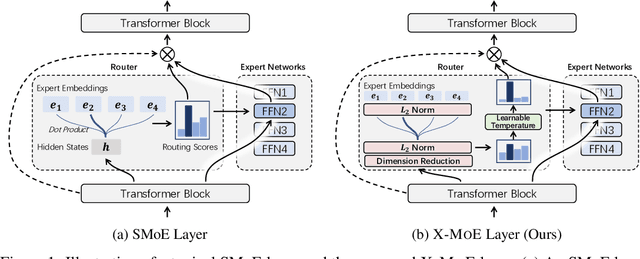

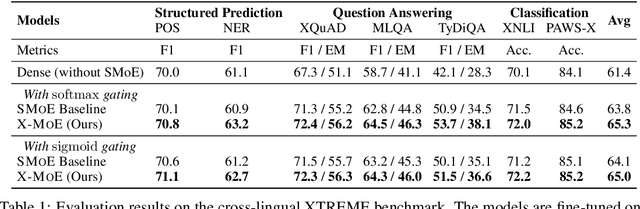

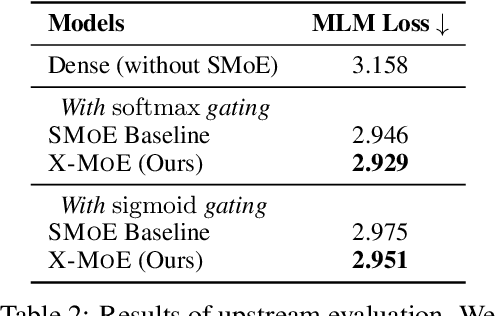

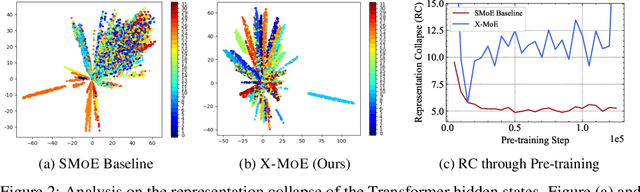

Sparse mixture of experts provides larger model capacity while requiring a constant computational overhead. It employs a routing mechanism to distribute input tokens to the best-matched experts according to their hidden representations. However, learning such a routing mechanism encourages token clustering around expert centroids, implying a trend toward representation collapse. In this work, we propose to estimate the routing scores between tokens and experts on a low-dimensional hypersphere. We conduct extensive experiments on cross-lingual language model pre-training and fine-tuning on downstream tasks. Experimental results across seven multilingual benchmarks show that our method achieves consistent gains. We also present a comprehensive analysis of the representation and routing behaviors of our models. Our method alleviates the representation collapse issue and achieves more consistent routing than the baseline mixture-of-experts methods.
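One way to read "routing scores on a low-dimensional hypersphere" is sketched below: the token representation is projected to a small dimension, both the projected token and the expert embeddings are L2-normalized, and the score is their cosine similarity divided by a temperature. The projection matrix `W`, the fixed temperature, and all function names are assumptions made for illustration; the abstract does not specify these details.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length (points on the unit hypersphere)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def project(h, W):
    """Linear projection to a low dimension; W is a list of rows."""
    return [sum(w * x for w, x in zip(row, h)) for row in W]


def routing_scores(h, W, expert_embeddings, temperature=0.1):
    """Cosine similarity between the projected token and each expert."""
    z = l2_normalize(project(h, W))
    return [
        sum(a * b for a, b in zip(z, l2_normalize(e))) / temperature
        for e in expert_embeddings
    ]


def route(h, W, expert_embeddings, temperature=0.1):
    """Index of the best-matched expert for the token representation h."""
    scores = routing_scores(h, W, expert_embeddings, temperature)
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical 4-dimensional hidden state routed in a 2-dimensional space.
W = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
experts = [[1.0, 0.0], [0.0, 1.0]]
hidden = [0.2, 3.0, 5.0, -1.0]
chosen = route(hidden, W, experts)
```

Because of the normalization, the scores depend only on direction: rescaling `hidden` leaves the routing decision unchanged, and every score is bounded in magnitude by `1 / temperature`.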

StableMoE: Stable Routing Strategy for Mixture of Experts

Apr 18, 2022 · Damai Dai, Li Dong, Shuming Ma, Bo Zheng, Zhifang Sui, Baobao Chang, Furu Wei

The Mixture-of-Experts (MoE) technique can scale up the model size of Transformers with an affordable computational overhead. We point out that existing learning-to-route MoE methods suffer from the routing fluctuation issue, i.e., the target expert of the same input may change along with training, but only one expert will be activated for the input during inference. The routing fluctuation tends to harm sample efficiency because the same input updates different experts but only one is finally used. In this paper, we propose StableMoE with two training stages to address the routing fluctuation problem. In the first training stage, we learn a balanced and cohesive routing strategy and distill it into a lightweight router decoupled from the backbone model. In the second training stage, we utilize the distilled router to determine the token-to-expert assignment and freeze it for a stable routing strategy. We validate our method on language modeling and multilingual machine translation. The results show that StableMoE outperforms existing MoE methods in terms of both convergence speed and performance.

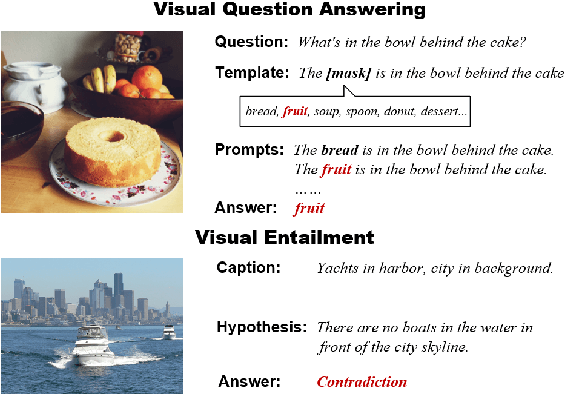

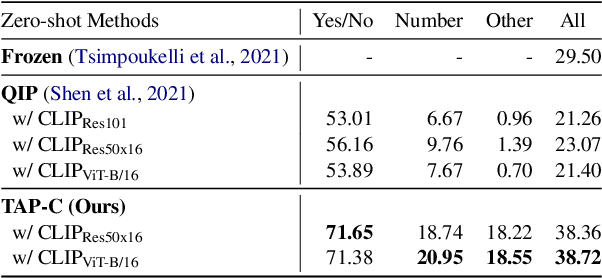

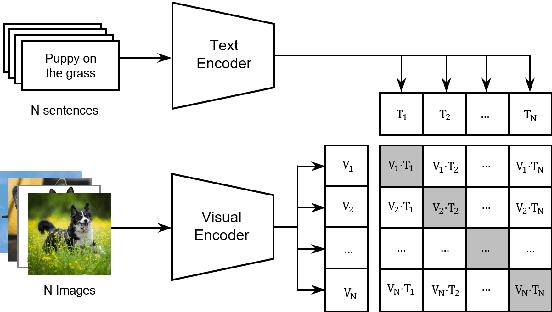

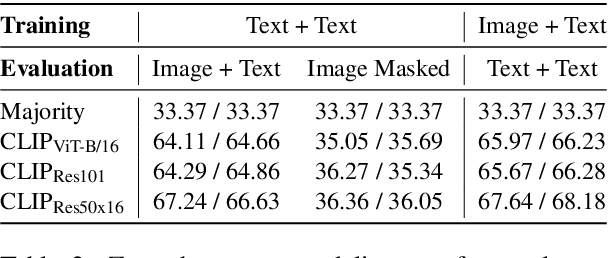

CLIP Models are Few-shot Learners: Empirical Studies on VQA and Visual Entailment

Mar 14, 2022 · Haoyu Song, Li Dong, Wei-Nan Zhang, Ting Liu, Furu Wei

CLIP has shown a remarkable zero-shot capability on a wide range of vision tasks. Previously, CLIP was only regarded as a powerful visual encoder. However, after being pre-trained by language supervision from a large number of image-caption pairs, CLIP itself should also have acquired some few-shot abilities for vision-language tasks. In this work, we empirically show that CLIP can be a strong vision-language few-shot learner by leveraging the power of language. We first evaluate CLIP's zero-shot performance on a typical visual question answering task and demonstrate a zero-shot cross-modality transfer capability of CLIP on the visual entailment task. Then we propose a parameter-efficient fine-tuning strategy to boost the few-shot performance on the VQA task. We achieve competitive zero/few-shot results on the visual question answering and visual entailment tasks without introducing any additional pre-training procedure.

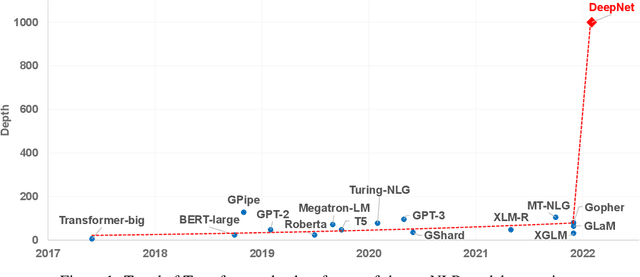

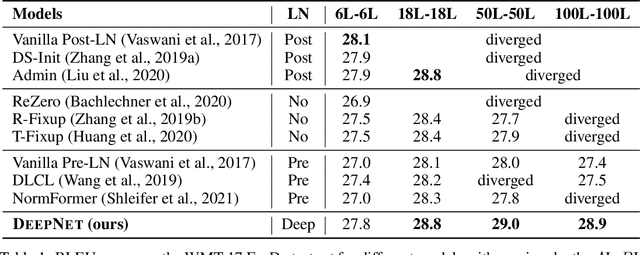

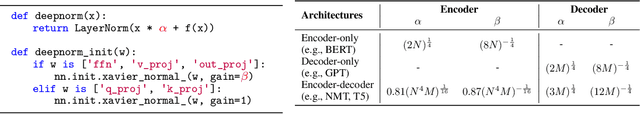

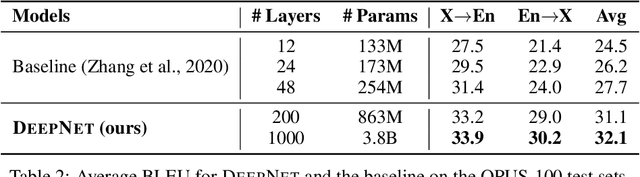

DeepNet: Scaling Transformers to 1,000 Layers

Mar 01, 2022 · Hongyu Wang, Shuming Ma, Li Dong, Shaohan Huang, Dongdong Zhang, Furu Wei

In this paper, we propose a simple yet effective method to stabilize extremely deep Transformers. Specifically, we introduce a new normalization function (DeepNorm) to modify the residual connection in the Transformer, accompanied by a theoretically derived initialization. In-depth theoretical analysis shows that model updates can be bounded in a stable way. The proposed method combines the best of two worlds, i.e., good performance of Post-LN and stable training of Pre-LN, making DeepNorm a preferred alternative. We successfully scale Transformers up to 1,000 layers (i.e., 2,500 attention and feed-forward network sublayers) without difficulty, which is one order of magnitude deeper than previous deep Transformers. Remarkably, on a multilingual benchmark with 7,482 translation directions, our 200-layer model with 3.2B parameters significantly outperforms the 48-layer state-of-the-art model with 12B parameters by 5 BLEU points, which indicates a promising scaling direction.
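The sketch below illustrates two points from this abstract under stated assumptions. First, it assumes DeepNorm has the Post-LN form with the residual branch scaled by a constant `alpha`, i.e. `LayerNorm(alpha * x + sublayer(x))`; the abstract only says the residual connection is modified, and the value of `alpha` and the accompanying initialization are left out here. Second, it reproduces the 2,500 sublayer count by assuming 500 encoder layers with two sublayers each and 500 decoder layers with three sublayers each, a split the abstract does not state.

```python
import math


def layer_norm(x, eps=1e-5):
    """Plain layer normalization without learnable gain or bias."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def post_ln_residual(x, sublayer):
    """Standard Post-LN block: LayerNorm(x + sublayer(x))."""
    return layer_norm([a + b for a, b in zip(x, sublayer(x))])


def deepnorm_residual(x, sublayer, alpha):
    """Assumed DeepNorm form: LayerNorm(alpha * x + sublayer(x))."""
    return layer_norm([alpha * a + b for a, b in zip(x, sublayer(x))])


def count_sublayers(encoder_layers, decoder_layers):
    """Encoder layer: self-attention + FFN; decoder layer adds cross-attention."""
    return 2 * encoder_layers + 3 * decoder_layers


# Hypothetical toy sublayer and input, only to exercise the functions.
def toy_sublayer(v):
    return [0.5 * t for t in v]


x = [1.0, 2.0, 3.0, 4.0]
y = deepnorm_residual(x, toy_sublayer, alpha=2.0)
total_sublayers = count_sublayers(500, 500)
```

With `alpha = 1` the assumed DeepNorm block reduces to the Post-LN block, which makes the role of the scaling constant easy to see in isolation.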

Controllable Natural Language Generation with Contrastive Prefixes

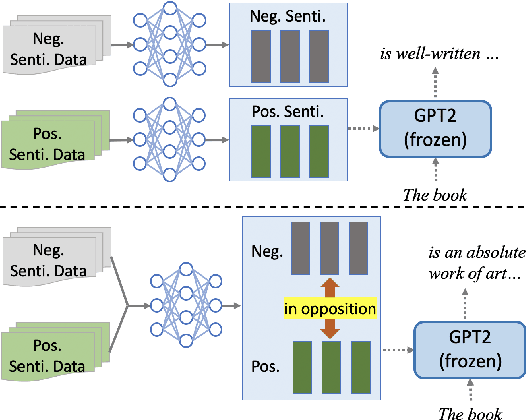

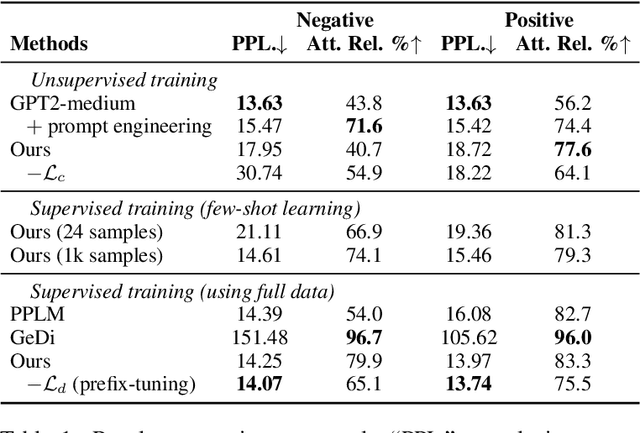

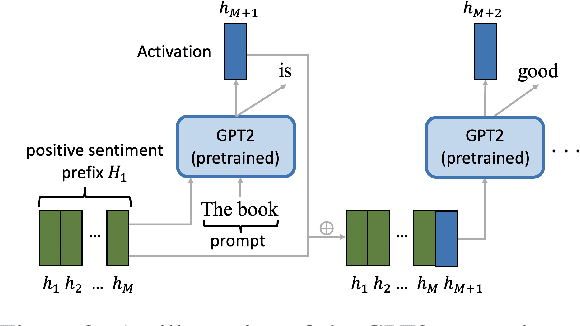

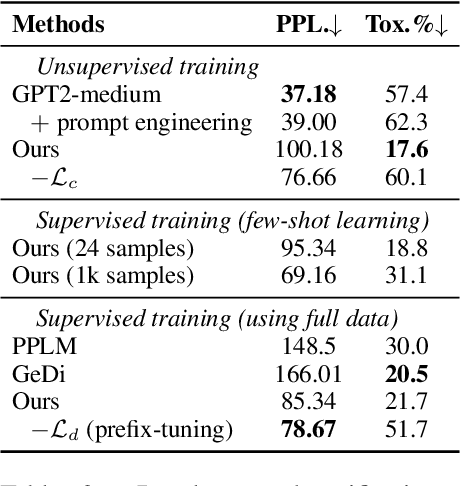

Feb 27, 2022 · Jing Qian, Li Dong, Yelong Shen, Furu Wei, Weizhu Chen

To guide the generation of large pretrained language models (LMs), previous work has focused on directly fine-tuning the language model or utilizing an attribute discriminator. In this work, we propose a novel lightweight framework for controllable GPT-2 generation, which utilizes a set of small attribute-specific vectors, called prefixes, to steer natural language generation. Different from prefix-tuning, where each prefix is trained independently, we take the relationship among prefixes into consideration and train multiple prefixes simultaneously. We propose a novel supervised method and also an unsupervised method to train the prefixes for single-aspect control, while the combination of these two methods can achieve multi-aspect control. Experimental results on both single-aspect and multi-aspect control show that our methods can guide generation towards the desired attributes while keeping high linguistic quality.
