Kui Jiang

SfMamba: Efficient Source-Free Domain Adaptation via Selective Scan Modeling

Jan 13, 2026

Abstract:Source-free domain adaptation (SFDA) tackles the critical challenge of adapting source-pretrained models to unlabeled target domains without access to source data, overcoming data privacy and storage limitations in real-world applications. However, existing SFDA approaches struggle with the trade-off between perception field and computational efficiency in domain-invariant feature learning. Recently, Mamba has offered a promising solution through its selective scan mechanism, which enables long-range dependency modeling with linear complexity. However, the Visual Mamba (i.e., VMamba) remains limited in capturing channel-wise frequency characteristics critical for domain alignment and in maintaining spatial robustness under significant domain shifts. To address these limitations, we propose a framework called SfMamba to fully explore the stable dependency in source-free model transfer. SfMamba introduces a Channel-wise Visual State-Space block that enables channel-sequence scanning for domain-invariant feature extraction. In addition, SfMamba involves a Semantic-Consistent Shuffle strategy that disrupts background patch sequences in 2D selective scan while preserving prediction consistency to mitigate error accumulation. Comprehensive evaluations across multiple benchmarks show that SfMamba achieves consistently stronger performance than existing methods while maintaining favorable parameter efficiency, offering a practical solution for SFDA. Our code is available at https://github.com/chenxi52/SfMamba.

Learning Depth from Past Selves: Self-Evolution Contrast for Robust Depth Estimation

Nov 19, 2025

Abstract:Self-supervised depth estimation has gained significant attention in autonomous driving and robotics. However, existing methods exhibit substantial performance degradation under adverse weather conditions such as rain and fog, where reduced visibility critically impairs depth prediction. To address this issue, we propose a novel self-evolution contrastive learning framework called SEC-Depth for self-supervised robust depth estimation tasks. Our approach leverages intermediate parameters generated during training to construct temporally evolving latency models. Using these, we design a self-evolution contrastive scheme to mitigate performance loss under challenging conditions. Concretely, we first design a dynamic update strategy of latency models for the depth estimation task to capture optimization states across training stages. To effectively leverage latency models, we introduce a self-evolution contrastive loss (SECL) that treats outputs from historical latency models as negative samples. This mechanism adaptively adjusts learning objectives while implicitly sensing weather degradation severity, reducing the need for manual intervention. Experiments show that our method integrates seamlessly into diverse baseline models and significantly enhances robustness in zero-shot evaluations.

Semantics and Content Matter: Towards Multi-Prior Hierarchical Mamba for Image Deraining

Nov 17, 2025

Abstract:Rain significantly degrades the performance of computer vision systems, particularly in applications like autonomous driving and video surveillance. While existing deraining methods have made considerable progress, they often struggle with fidelity of semantic and spatial details. To address these limitations, we propose the Multi-Prior Hierarchical Mamba (MPHM) network for image deraining. This novel architecture synergistically integrates macro-semantic textual priors (CLIP) for task-level semantic guidance and micro-structural visual priors (DINOv2) for scene-aware structural information. To alleviate potential conflicts between heterogeneous priors, we devise a progressive Priors Fusion Injection (PFI) that strategically injects complementary cues at different decoder levels. Meanwhile, we equip the backbone network with an elaborate Hierarchical Mamba Module (HMM) to facilitate robust feature representation, featuring a Fourier-enhanced dual-path design that concurrently addresses global context modeling and local detail recovery. Comprehensive experiments demonstrate MPHM's state-of-the-art performance, achieving a 0.57 dB PSNR gain on the Rain200H dataset while delivering superior generalization on real-world rainy scenarios.
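
For readers unfamiliar with the PSNR figures quoted in these abstracts, the following is a minimal sketch of the standard PSNR definition, not the evaluation code of any paper listed here. The function name `psnr`, the flat pixel-sequence input, and the default peak value of 255 are illustrative assumptions.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences.

    Hypothetical helper for illustration: inputs are flat sequences of pixel
    intensities, and max_value is the assumed peak intensity (255 for 8-bit images).
    """
    if len(reference) != len(restored):
        raise ValueError("inputs must have the same length")
    if not reference:
        raise ValueError("inputs must not be empty")
    # Mean squared error over all pixels.
    mse = sum((r - x) ** 2 for r, x in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Because PSNR is logarithmic in the mean squared error, a gain of 0.57 dB at a fixed peak value corresponds to the MSE shrinking by a factor of about 10^0.057 ≈ 1.14.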

ICLR: Inter-Chrominance and Luminance Interaction for Natural Color Restoration in Low-Light Image Enhancement

Nov 17, 2025

Abstract:The Low-Light Image Enhancement (LLIE) task aims to improve contrast while restoring details and textures for images captured in low-light conditions. The HVI color space has made significant progress in this task by enabling precise decoupling of chrominance and luminance. However, for the interaction between chrominance and luminance branches, the substantial distributional differences between the two branches that are prevalent in natural images limit complementary feature extraction, and luminance errors are propagated to chrominance channels through the nonlinear parameter. Furthermore, for interaction between different chrominance branches, images with large homogeneous-color regions usually exhibit weak correlation between chrominance branches due to concentrated distributions. Traditional pixel-wise losses exploit strong inter-branch correlations for co-optimization, causing gradient conflicts in weakly correlated regions. Therefore, we propose an Inter-Chrominance and Luminance Interaction (ICLR) framework including a Dual-stream Interaction Enhancement Module (DIEM) and a Covariance Correction Loss (CCL). The DIEM improves the extraction of complementary information from two dimensions, fusion and enhancement, respectively. The CCL utilizes luminance residual statistics to penalize chrominance errors and balances gradient conflicts by constraining the covariance between chrominance branches. Experimental results on multiple datasets show that the proposed ICLR framework outperforms state-of-the-art methods.

MambaOVSR: Multiscale Fusion with Global Motion Modeling for Chinese Opera Video Super-Resolution

Nov 09, 2025

Abstract:Chinese opera is celebrated for preserving classical art. However, early filming equipment limitations have degraded videos of last-century performances by renowned artists (e.g., low frame rates and resolution), hindering archival efforts. Although space-time video super-resolution (STVSR) has advanced significantly, applying it directly to opera videos remains challenging. The scarcity of datasets impedes the recovery of high-frequency details, and existing STVSR methods lack global modeling capabilities, compromising visual quality when handling opera's characteristic large motions. To address these challenges, we pioneer a large-scale Chinese Opera Video Clip (COVC) dataset and propose the Mamba-based multiscale fusion network for space-time Opera Video Super-Resolution (MambaOVSR). Specifically, MambaOVSR involves three novel components: the Global Fusion Module (GFM) for motion modeling through a multiscale alternating scanning mechanism, the Multiscale Synergistic Mamba Module (MSMM) for alignment across different sequence lengths, and the MambaVR block, which resolves feature artifacts and positional information loss during alignment. Experimental results on the COVC dataset show that MambaOVSR significantly outperforms the SOTA STVSR method by an average of 1.86 dB in terms of PSNR. The dataset and code will be publicly released.

S2ML: Spatio-Spectral Mutual Learning for Depth Completion

Nov 08, 2025

Abstract:The raw depth images captured by RGB-D cameras using Time-of-Flight (TOF) or structured light often suffer from incomplete depth values due to weak reflections, boundary shadows, and artifacts, which limit their applications in downstream vision tasks. Existing methods address this problem through depth completion in the image domain, but they overlook the physical characteristics of raw depth images. It has been observed that the presence of invalid depth areas alters the frequency distribution pattern. In this work, we propose a Spatio-Spectral Mutual Learning framework (S2ML) to harmonize the advantages of both spatial and frequency domains for depth completion. Specifically, we consider the distinct properties of amplitude and phase spectra and devise a dedicated spectral fusion module. Meanwhile, the local and global correlations between spatial-domain and frequency-domain features are calculated in a unified embedding space. The gradual mutual representation and refinement encourage the network to fully explore complementary physical characteristics and priors for more accurate depth completion. Extensive experiments demonstrate the effectiveness of our proposed S2ML method, outperforming the state-of-the-art method CFormer by 0.828 dB and 0.834 dB on the NYU-Depth V2 and SUN RGB-D datasets, respectively.

AHDMIL: Asymmetric Hierarchical Distillation Multi-Instance Learning for Fast and Accurate Whole-Slide Image Classification

Aug 07, 2025

Abstract:Although multi-instance learning (MIL) has succeeded in pathological image classification, it faces the challenge of high inference costs due to the need to process thousands of patches from each gigapixel whole slide image (WSI). To address this, we propose AHDMIL, an Asymmetric Hierarchical Distillation Multi-Instance Learning framework that enables fast and accurate classification by eliminating irrelevant patches through a two-step training process. AHDMIL comprises two key components: the Dynamic Multi-Instance Network (DMIN), which operates on high-resolution WSIs, and the Dual-Branch Lightweight Instance Pre-screening Network (DB-LIPN), which analyzes corresponding low-resolution counterparts. In the first step, self-distillation (SD), DMIN is trained for WSI classification while generating per-instance attention scores to identify irrelevant patches. These scores guide the second step, asymmetric distillation (AD), where DB-LIPN learns to predict the relevance of each low-resolution patch. The relevant patches predicted by DB-LIPN have spatial correspondence with patches in high-resolution WSIs, which are used for fine-tuning and efficient inference of DMIN. In addition, we design the first Chebyshev-polynomial-based Kolmogorov-Arnold (CKA) classifier in computational pathology, which improves classification performance through learnable activation layers. Extensive experiments on four public datasets demonstrate that AHDMIL consistently outperforms previous state-of-the-art methods in both classification performance and inference speed. For example, on the Camelyon16 dataset, it achieves a relative improvement of 5.3% in accuracy and accelerates inference by 1.2 times. Across all datasets, area under the curve (AUC), accuracy, F1 score, and Brier score show consistent gains, with average inference speedups ranging from 1.2 to 2.1 times. The code is available.

NTIRE 2025 Challenge on HR Depth from Images of Specular and Transparent Surfaces

Jun 06, 2025

Abstract:This paper reports on the NTIRE 2025 challenge on HR Depth From images of Specular and Transparent surfaces, held in conjunction with the New Trends in Image Restoration and Enhancement (NTIRE) workshop at CVPR 2025. This challenge aims to advance the research on depth estimation, specifically to address two of the main open issues in the field: high-resolution and non-Lambertian surfaces. The challenge proposes two tracks on stereo and single-image depth estimation, attracting about 177 registered participants. In the final testing stage, 4 and 4 participating teams submitted their models and fact sheets for the two tracks.

Boosting All-in-One Image Restoration via Self-Improved Privilege Learning

May 30, 2025

Abstract:Unified image restoration models for diverse and mixed degradations often suffer from unstable optimization dynamics and inter-task conflicts. This paper introduces Self-Improved Privilege Learning (SIPL), a novel paradigm that overcomes these limitations by innovatively extending the utility of privileged information (PI) beyond training into the inference stage. Unlike conventional Privilege Learning, where ground-truth-derived guidance is typically discarded after training, SIPL empowers the model to leverage its own preliminary outputs as pseudo-privileged signals for iterative self-refinement at test time. Central to SIPL is Proxy Fusion, a lightweight module incorporating a learnable Privileged Dictionary. During training, this dictionary distills essential high-frequency and structural priors from privileged feature representations. Critically, at inference, the same learned dictionary then interacts with features derived from the model's initial restoration, facilitating a self-correction loop. SIPL can be seamlessly integrated into various backbone architectures, offering substantial performance improvements with minimal computational overhead. Extensive experiments demonstrate that SIPL significantly advances the state-of-the-art on diverse all-in-one image restoration benchmarks. For instance, when integrated with the PromptIR model, SIPL achieves remarkable PSNR improvements of +4.58 dB on composite degradation tasks and +1.28 dB on diverse five-task benchmarks, underscoring its effectiveness and broad applicability. Codes are available at our project page https://github.com/Aitical/SIPL.

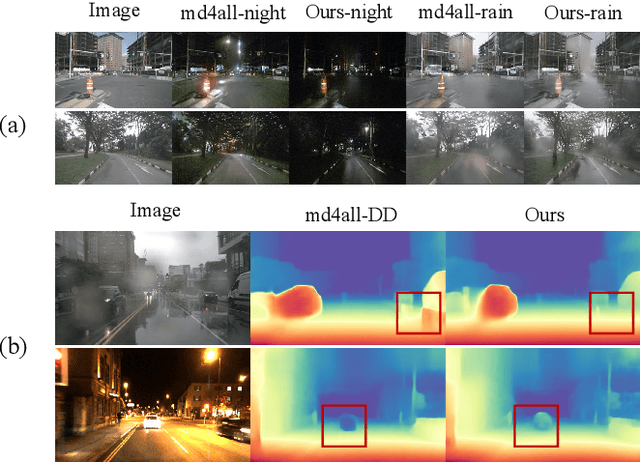

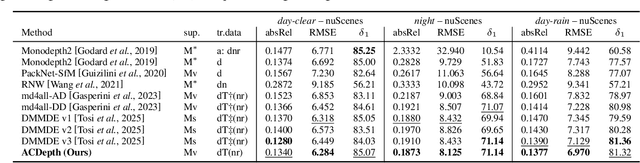

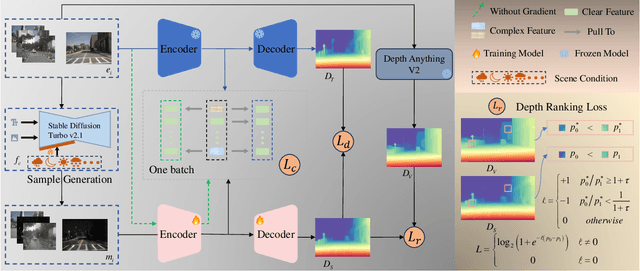

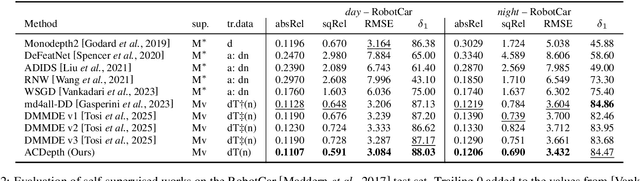

Always Clear Depth: Robust Monocular Depth Estimation under Adverse Weather

May 18, 2025

Abstract:Monocular depth estimation is critical for applications such as autonomous driving and scene reconstruction. While existing methods perform well under normal scenarios, their performance declines in adverse weather, due to challenging domain shifts and difficulties in extracting scene information. To address this issue, we present a robust monocular depth estimation method called ACDepth from the perspective of high-quality training data generation and domain adaptation. Specifically, we introduce a one-step diffusion model for generating samples that simulate adverse weather conditions, constructing a multi-tuple degradation dataset during training. To ensure the quality of the generated degradation samples, we employ LoRA adapters to fine-tune the generation weights of the diffusion model. Additionally, we integrate circular consistency loss and adversarial training to guarantee the fidelity and naturalness of the scene contents. Furthermore, we elaborate on a multi-granularity knowledge distillation strategy (MKD) that encourages the student network to absorb knowledge from both the teacher model and pretrained Depth Anything V2. This strategy guides the student model in learning degradation-agnostic scene information from various degradation inputs. In particular, we introduce an ordinal guidance distillation mechanism (OGD) that encourages the network to focus on uncertain regions through differential ranking, leading to a more precise depth estimation. Experimental results demonstrate that our ACDepth surpasses md4all-DD by 2.50% for night scenes and 2.61% for rainy scenes on the nuScenes dataset in terms of the absRel metric.
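
As background for the absRel metric mentioned above, here is a minimal sketch of the commonly used absolute relative error for depth estimation. It is not taken from the ACDepth code; the function name `abs_rel`, the flat-list inputs, and the `min_depth` validity threshold are illustrative assumptions.

```python
def abs_rel(pred, gt, min_depth=1e-3):
    """Mean absolute relative depth error: mean(|pred - gt| / gt) over valid pixels.

    Hypothetical helper for illustration: pred and gt are flat sequences of depth
    values, and pixels whose ground truth is not above min_depth are treated as
    invalid and skipped (an assumed convention, not one stated in the abstract).
    """
    if len(pred) != len(gt):
        raise ValueError("pred and gt must have the same length")
    errors = [abs(p - g) / g for p, g in zip(pred, gt) if g > min_depth]
    if not errors:
        raise ValueError("no valid ground-truth pixels")
    return sum(errors) / len(errors)
```

Lower values are better: a prediction of 9 m for a true depth of 10 m contributes a relative error of 0.1 regardless of the absolute scale of the scene.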
