Jinpeng Li

Structure Regularized Attentive Network for Automatic Femoral Head Necrosis Diagnosis and Localization

Aug 23, 2022

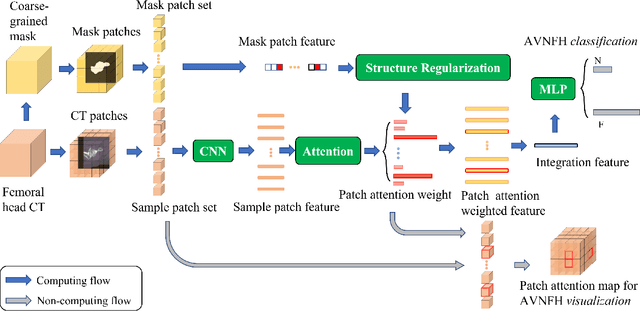

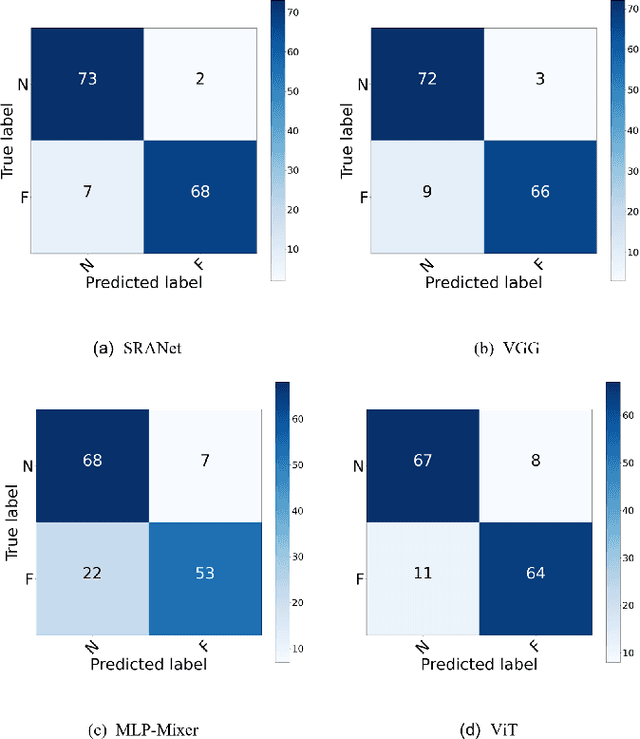

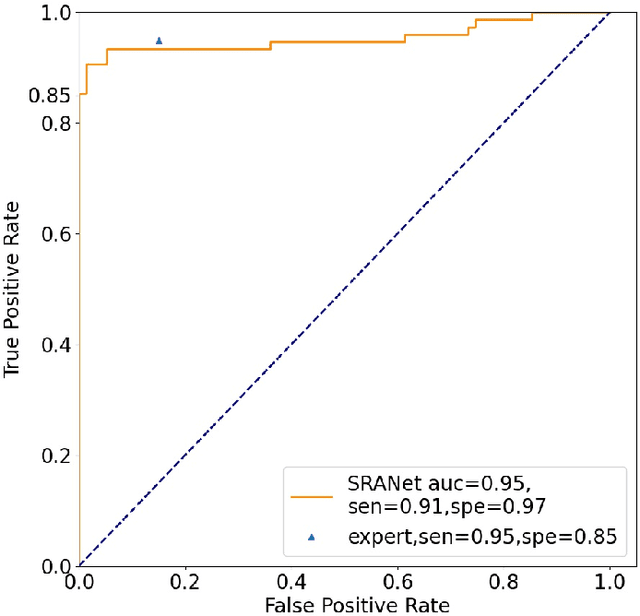

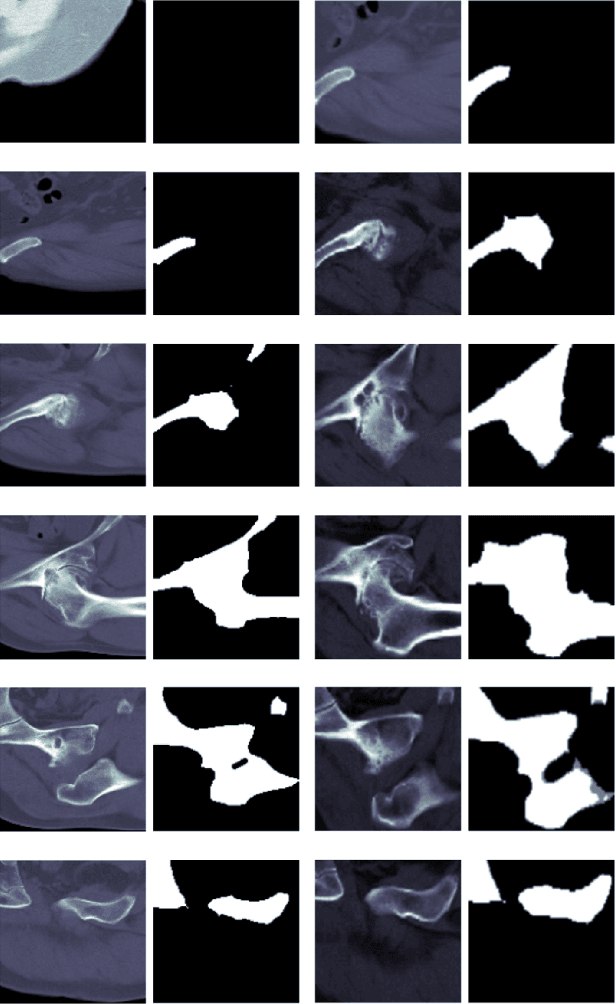

Abstract: In recent years, several works have adopted convolutional neural networks (CNNs) to diagnose avascular necrosis of the femoral head (AVNFH) from X-ray images or magnetic resonance imaging (MRI). However, because of tissue overlap, X-ray images rarely provide the fine-grained features needed for early diagnosis. MRI, on the other hand, has a long imaging time and is more expensive, making it impractical for mass screening. Computed tomography (CT) shows layer-wise tissues, is faster to image, and is less costly than MRI. However, to our knowledge, there is no work on CT-based automated diagnosis of AVNFH. In this work, we collected and labeled a large-scale dataset for AVNFH ranking. In addition, existing end-to-end CNNs yield only the classification result and provide little additional information to support doctors in diagnosis. To address this issue, we propose the structure regularized attentive network (SRANet), which highlights the necrotic regions during classification based on patch attention. SRANet extracts features in chunks of images, obtains weights via the attention mechanism to aggregate the features, and constrains them with a structural regularizer that encodes prior knowledge to improve generalization. SRANet was evaluated on our AVNFH-CT dataset. Experimental results show that SRANet is superior to CNNs for AVNFH classification. Moreover, it can localize lesions and provide more information to assist doctors in diagnosis. Our code is publicly available at https://github.com/tomas-lilingfeng/SRANet.
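The attention-based aggregation described in the abstract above can be illustrated with a minimal sketch. This is not SRANet's implementation: the function names, the dot-product scoring against a single `attention_vector`, and the toy feature values are all assumptions made for illustration, and the structural regularizer is not modeled here.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attentive_pool(patch_features, attention_vector):
    """Aggregate per-patch feature vectors with attention weights.

    Hypothetical scoring: each patch is scored by its dot product with
    `attention_vector`; a real model would learn this scoring function.
    """
    scores = [sum(f * a for f, a in zip(feat, attention_vector))
              for feat in patch_features]
    weights = softmax(scores)
    dim = len(patch_features[0])
    pooled = [sum(w * feat[d] for w, feat in zip(weights, patch_features))
              for d in range(dim)]
    return pooled, weights

# Toy example: three patches with 2-D features (made-up values).
patches = [[0.1, 0.2], [0.9, 0.8], [0.2, 0.1]]
pooled, weights = attentive_pool(patches, [2.0, 2.0])
# The index of the largest weight points at the most "attended" patch,
# which is how attention weights can double as a coarse localization cue.
top_patch = weights.index(max(weights))
```

Because the weights sum to one, they can be read as a distribution over patches, so the same quantity serves both aggregation and highlighting.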

Spiral Contrastive Learning: An Efficient 3D Representation Learning Method for Unannotated CT Lesions

Aug 23, 2022

Abstract: Computed tomography (CT) samples with pathological annotations are difficult to obtain. As a result, computer-aided diagnosis (CAD) algorithms are trained on small datasets (e.g., LIDC-IDRI with 1,018 samples), limiting their accuracy and reliability. In the past five years, several works have targeted unsupervised representations of CT lesions via two-dimensional (2D) and three-dimensional (3D) self-supervised learning (SSL) algorithms. The 2D algorithms have difficulty capturing 3D information, and existing 3D algorithms are computationally heavy. Lightweight 3D SSL remains a frontier to explore. In this paper, we propose spiral contrastive learning (SCL), which yields 3D representations in a computationally efficient manner. SCL first transforms 3D lesions to the 2D plane using an information-preserving spiral transformation, and then learns transformation-invariant features using 2D contrastive learning. For the augmentation, we consider natural image augmentations and medical image augmentations. We evaluate SCL by training a classification head upon the embedding layer. Experimental results show that SCL achieves state-of-the-art accuracy on LIDC-IDRI (89.72%), LNDb (82.09%) and TianChi (90.16%) for unsupervised representation learning. With 10% of the annotated data used for fine-tuning, the performance of SCL is comparable to that of supervised learning algorithms (85.75% vs. 85.03% on LIDC-IDRI, 78.20% vs. 73.44% on LNDb and 87.85% vs. 83.34% on TianChi, respectively). Meanwhile, SCL reduces the computational effort by 66.98% compared to other 3D SSL algorithms, demonstrating the efficiency of the proposed method in unsupervised pre-training.

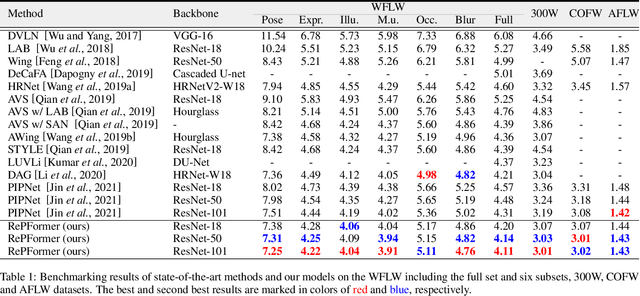

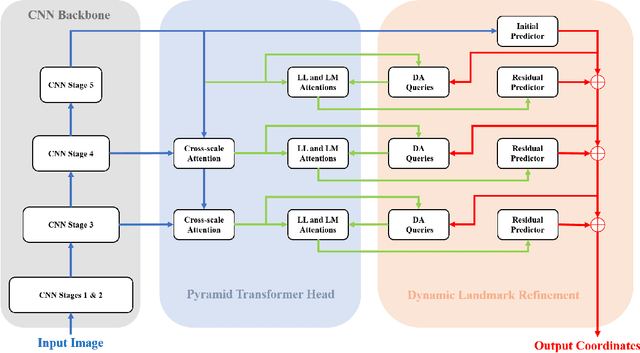

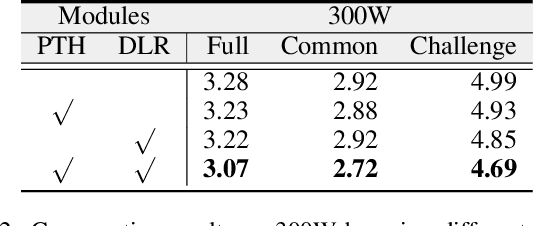

RePFormer: Refinement Pyramid Transformer for Robust Facial Landmark Detection

Jul 08, 2022

Abstract: This paper presents a Refinement Pyramid Transformer (RePFormer) for robust facial landmark detection. Most facial landmark detectors focus on learning representative image features. However, these CNN-based feature representations are not robust enough to handle complex real-world scenarios because they ignore the internal structure of landmarks, as well as the relations between landmarks and context. In this work, we formulate the facial landmark detection task as refining landmark queries along pyramid memories. Specifically, a pyramid transformer head (PTH) is introduced to build both homologous relations among landmarks and heterologous relations between landmarks and cross-scale contexts. In addition, a dynamic landmark refinement (DLR) module is designed to decompose the landmark regression into an end-to-end refinement procedure, where the dynamically aggregated queries are transformed into residual coordinate predictions. Extensive experimental results on four facial landmark detection benchmarks and their various subsets demonstrate the superior performance and high robustness of our framework.

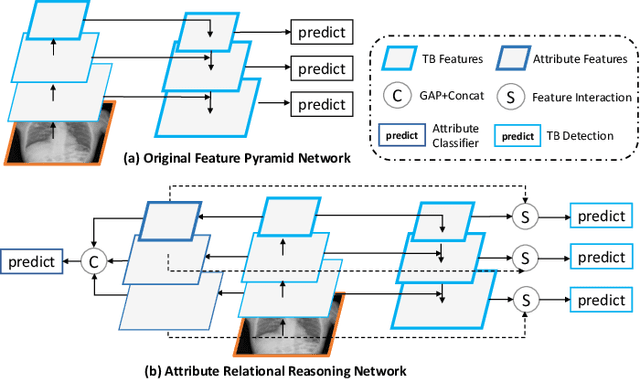

Computer-aided Tuberculosis Diagnosis with Attribute Reasoning Assistance

Jul 01, 2022

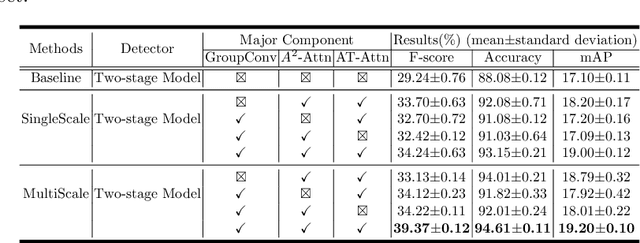

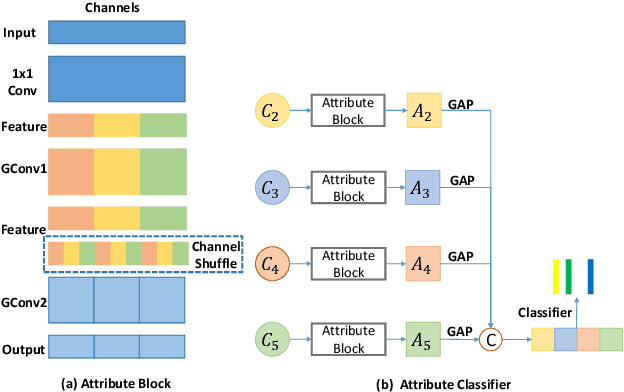

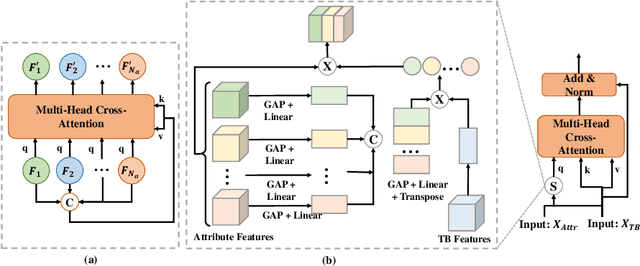

Abstract: Although deep learning algorithms have been intensively developed for computer-aided tuberculosis diagnosis (CTD), they mainly depend on carefully annotated datasets, which are costly in time and resources to produce. Weakly supervised learning (WSL), which leverages coarse-grained labels to accomplish fine-grained tasks, has the potential to solve this problem. In this paper, we first propose a new large-scale tuberculosis (TB) chest X-ray dataset, namely the tuberculosis chest X-ray attribute dataset (TBX-Att), and then establish an attribute-assisted weakly-supervised framework to classify and localize TB by leveraging the attribute information to overcome the insufficiency of supervision in WSL scenarios. Specifically, first, the TBX-Att dataset contains 2000 X-ray images with seven kinds of attributes for TB relational reasoning, which are annotated by experienced radiologists. It also includes the public TBX11K dataset with 11200 X-ray images to facilitate weakly supervised detection. Second, we exploit a multi-scale feature interaction model for TB area classification and detection with attribute relational reasoning. The proposed model is evaluated on the TBX-Att dataset and will serve as a solid baseline for future research. The code and data will be available at https://github.com/GangmingZhao/tb-attribute-weak-localization.

The THUEE System Description for the IARPA OpenASR21 Challenge

Jun 29, 2022

Abstract: This paper describes the THUEE team's speech recognition system for the IARPA Open Automatic Speech Recognition Challenge (OpenASR21), with further experimental explorations. We achieve outstanding results under both the Constrained and Constrained-plus training conditions. For the Constrained training condition, we construct our basic ASR system based on the standard hybrid architecture. To alleviate the Out-Of-Vocabulary (OOV) problem, we extend the pronunciation lexicon using Grapheme-to-Phoneme (G2P) techniques for both OOV and potential new words. Standard acoustic model structures such as CNN-TDNN-F and CNN-TDNN-F-A are adopted. In addition, multiple data augmentation techniques are applied. For the Constrained-plus training condition, we use the self-supervised learning framework wav2vec2.0. We experiment with various fine-tuning techniques with the Connectionist Temporal Classification (CTC) criterion on top of the publicly available pre-trained model XLSR-53. We find that the frontend feature extractor plays an important role when applying the wav2vec2.0 pre-trained model to the encoder-decoder based CTC/Attention ASR architecture. Extra improvements can be achieved by using the CTC model fine-tuned in the target language as the frontend feature extractor.

Boundary Smoothing for Named Entity Recognition

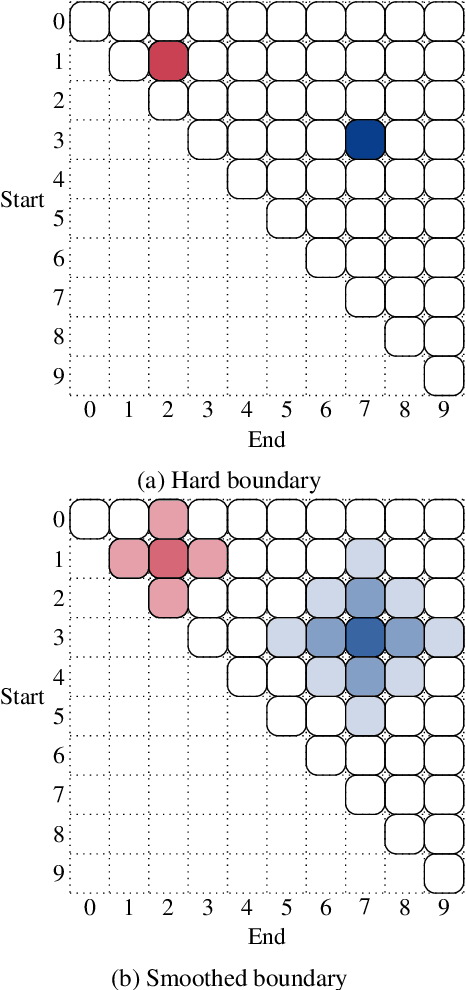

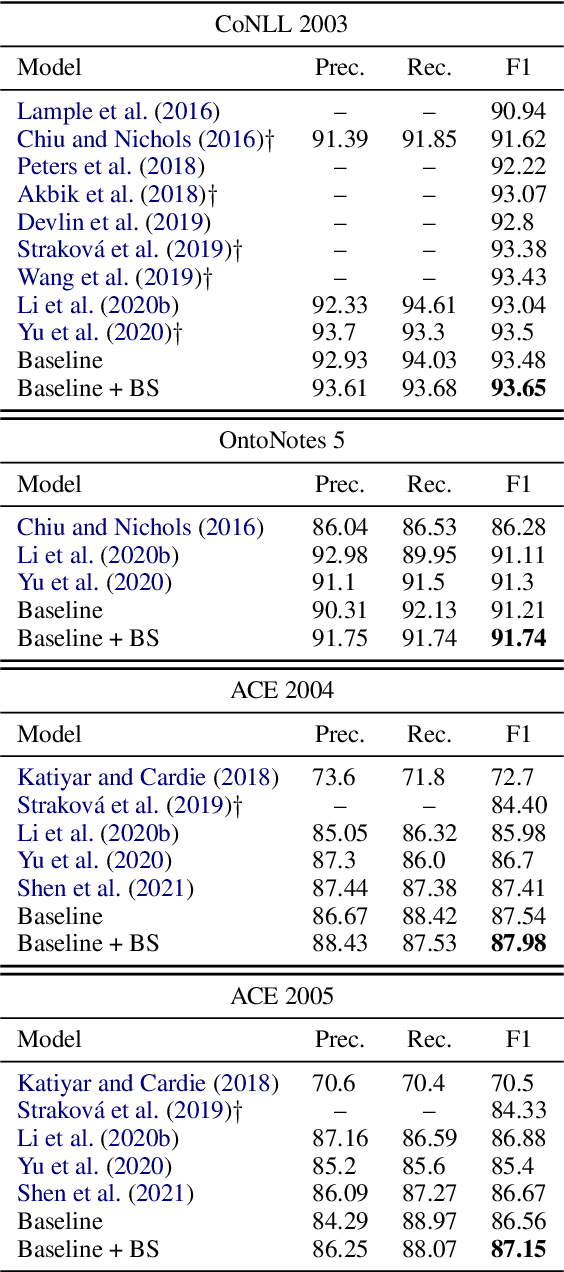

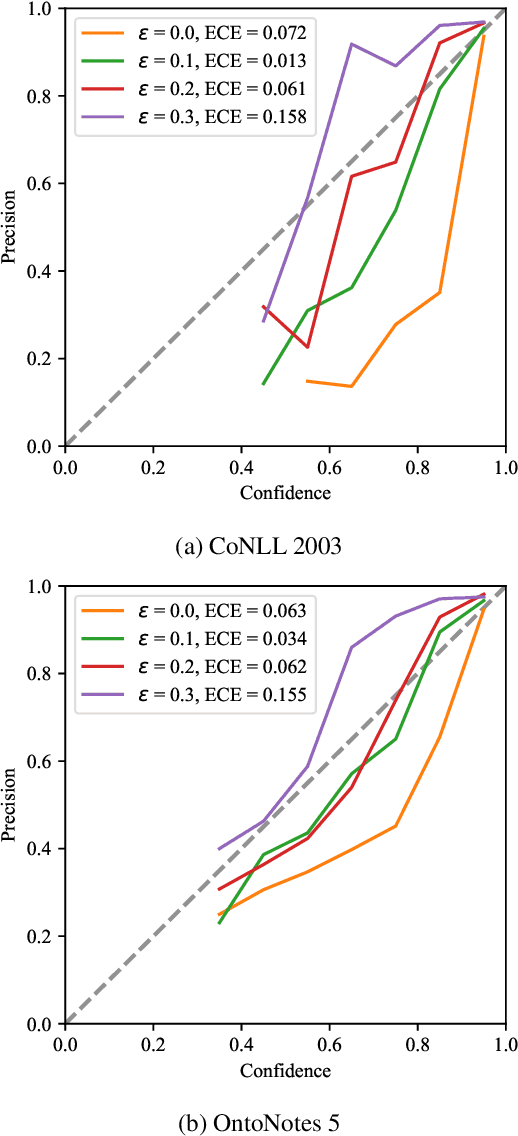

Apr 26, 2022

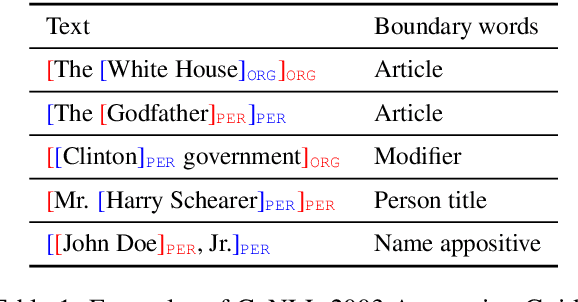

Abstract: Neural named entity recognition (NER) models are prone to over-confidence, which degrades performance and calibration. Inspired by label smoothing and driven by the ambiguity of boundary annotation in NER engineering, we propose boundary smoothing as a regularization technique for span-based neural NER models. It re-assigns entity probabilities from annotated spans to the surrounding ones. Built on a simple but strong baseline, our model achieves results better than or competitive with previous state-of-the-art systems on eight well-known NER benchmarks. Further empirical analysis suggests that boundary smoothing effectively mitigates over-confidence, improves model calibration, and brings flatter neural minima and smoother loss landscapes.
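The re-assignment of probability from an annotated span to its neighbors can be sketched as follows. The abstract does not give the exact rule, so everything specific here is an assumption made for illustration: the function name `boundary_smooth`, the parameters `epsilon` and `max_dist`, the use of Manhattan distance over (start, end) indices, and the choice to split the mass evenly within each distance ring.

```python
def boundary_smooth(start, end, seq_len, epsilon=0.2, max_dist=1):
    """Build a soft target over spans for one annotated entity span.

    Assumed rule (illustrative only): a total mass of `epsilon` is moved
    away from the annotated span (start, end); each Manhattan distance
    d = 1..max_dist receives epsilon / max_dist, shared equally by the
    valid spans at that distance. Mass for a ring with no valid spans
    stays on the annotated span.
    """
    targets = {}
    moved = 0.0
    for d in range(1, max_dist + 1):
        ring = []
        for ds in range(-d, d + 1):
            rem = d - abs(ds)
            for de in {rem, -rem}:  # the set removes the duplicate when rem == 0
                s, e = start + ds, end + de
                if 0 <= s <= e < seq_len:
                    ring.append((s, e))
        if not ring:
            continue
        share = epsilon / max_dist / len(ring)
        for span in ring:
            targets[span] = targets.get(span, 0.0) + share
        moved += epsilon / max_dist
    targets[(start, end)] = 1.0 - moved
    return targets

# Entity annotated at tokens 2..3 (inclusive) in a 6-token sentence.
soft = boundary_smooth(start=2, end=3, seq_len=6, epsilon=0.2, max_dist=1)
```

With these settings the four neighboring spans (1, 3), (2, 2), (2, 4) and (3, 3) each receive an equal share, and the annotated span keeps the remainder, so the targets still sum to one and can be used with a cross-entropy loss in place of a one-hot label.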

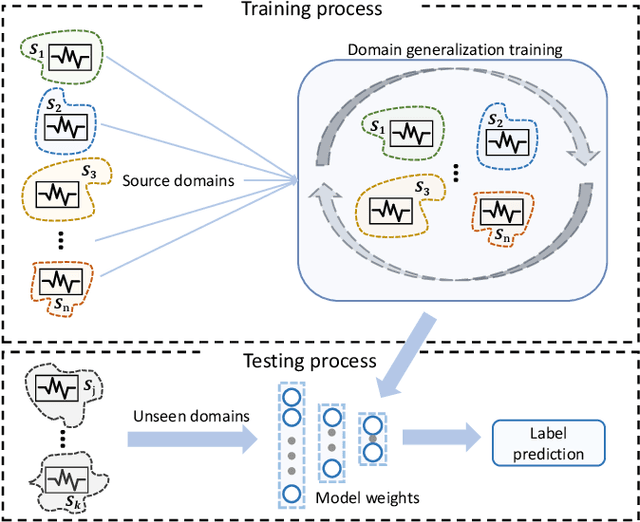

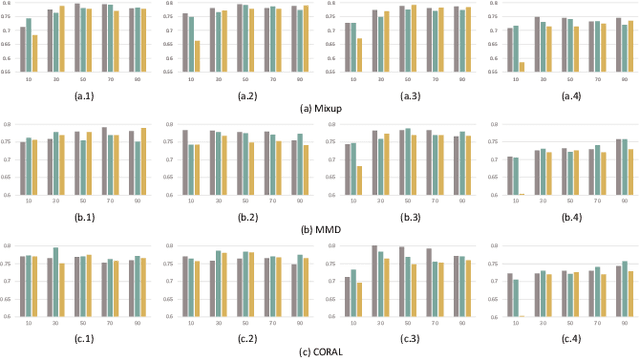

Benchmarking Domain Generalization on EEG-based Emotion Recognition

Apr 18, 2022

Abstract: Electroencephalography (EEG) based emotion recognition has demonstrated tremendous improvement in recent years. Specifically, numerous domain adaptation (DA) algorithms have been exploited in the past five years to enhance the generalization of emotion recognition models across subjects. The DA methods assume that calibration data (although unlabeled) exists in the target domain (new user). However, this assumption conflicts with the application scenario in which the model should be deployed without time-consuming calibration experiments. We argue that domain generalization (DG) is more reasonable than DA in these applications. DG learns how to generalize to unseen target domains by leveraging knowledge from multiple source domains, which provides a new possibility to train general models. In this paper, we benchmark, for the first time, state-of-the-art DG algorithms on EEG-based emotion recognition. Since the convolutional neural network (CNN), deep belief network (DBN) and multilayer perceptron (MLP) have been proved to be effective emotion recognition models, we use these three models as solid baselines. Experimental results show that DG achieves an accuracy of up to 79.41% on the SEED dataset for recognizing three emotions, indicating the potential of DG in zero-training emotion recognition when multiple sources are available.

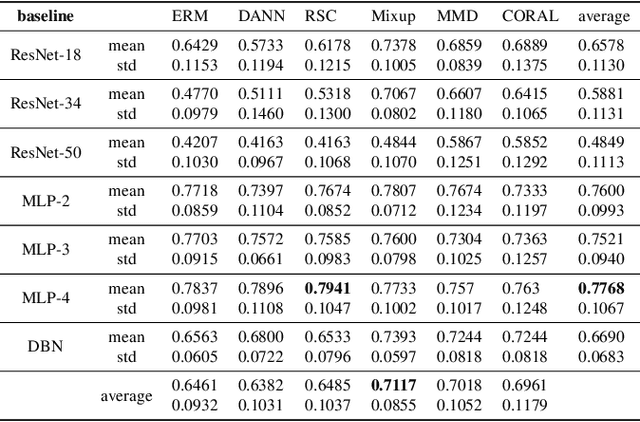

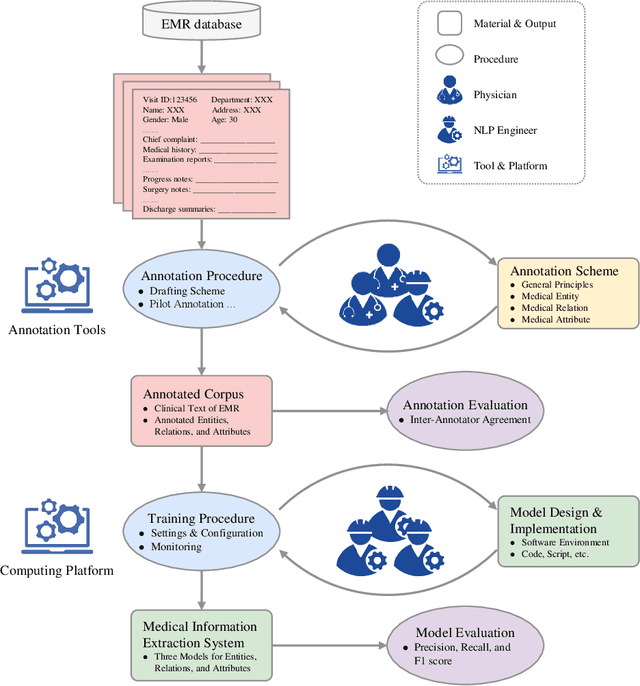

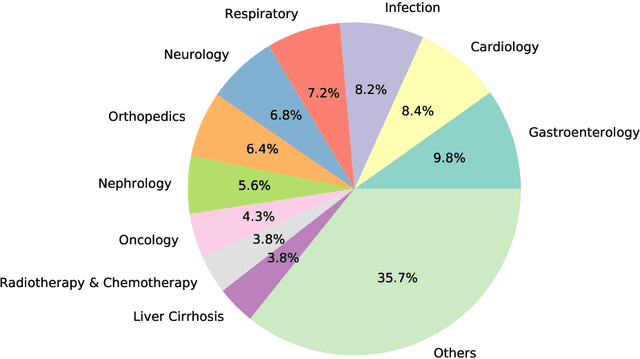

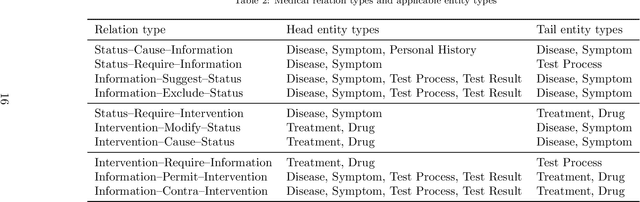

A Unified Framework of Medical Information Annotation and Extraction for Chinese Clinical Text

Mar 08, 2022

Abstract: Medical information extraction consists of a group of natural language processing (NLP) tasks, which collaboratively convert clinical text to pre-defined structured formats. Current state-of-the-art (SOTA) NLP models are highly integrated with deep learning techniques and thus require massive annotated linguistic data. This study presents an engineering framework of medical entity recognition, relation extraction and attribute extraction, which are unified in annotation, modeling and evaluation. Specifically, the annotation scheme is comprehensive and compatible between tasks, especially for the medical relations. The resulting annotated corpus includes 1,200 full medical records (or 18,039 broken-down documents), and achieves inter-annotator agreements (IAAs) of 94.53%, 73.73% and 91.98% F1 scores for the three tasks. Three task-specific neural network models are developed within a shared structure, and enhanced by SOTA NLP techniques, i.e., pre-trained language models. Experimental results show that the system can retrieve medical entities, relations and attributes with F1 scores of 93.47%, 67.14% and 90.89%, respectively. This study, in addition to our publicly released annotation scheme and code, provides solid and practical engineering experience of developing an integrated medical information extraction system.
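Both the inter-annotator agreement and the system results above are reported as F1 scores. As a reminder of how such a score is computed, here is a minimal sketch. It assumes exact-match scoring over sets of extracted items, which the abstract does not specify; the function name and the toy entity tuples are hypothetical.

```python
def precision_recall_f1(gold, predicted):
    """Exact-match precision, recall and F1 between two collections of items.

    Assumption: an item counts as correct only if it appears identically
    in both collections (e.g. same start, end and label for an entity).
    """
    gold, predicted = set(gold), set(predicted)
    true_pos = len(gold & predicted)
    precision = true_pos / len(predicted) if predicted else 0.0
    recall = true_pos / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Toy entities as (start, end, label) tuples; the values are made up.
gold_entities = [(0, 2, "Disease"), (5, 6, "Drug"), (9, 11, "Symptom")]
pred_entities = [(0, 2, "Disease"), (5, 6, "Drug"), (9, 10, "Symptom")]
p, r, f1 = precision_recall_f1(gold_entities, pred_entities)
```

The same function can score one annotator against another by treating one annotator's output as `gold`, which is one common way to express agreement as F1.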

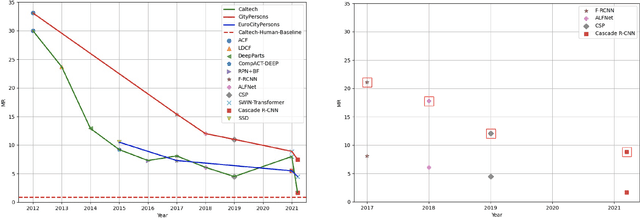

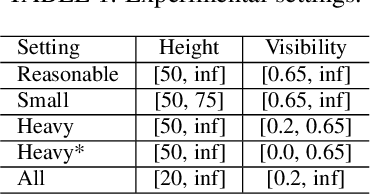

Pedestrian Detection: Domain Generalization, CNNs, Transformers and Beyond

Jan 10, 2022

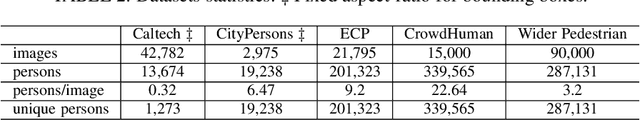

Abstract: Pedestrian detection is the cornerstone of many vision-based applications, ranging from object tracking to video surveillance and, more recently, autonomous driving. With the rapid development of deep learning in object detection, pedestrian detection has achieved very good performance in the traditional single-dataset training and evaluation setting. However, in this study on generalizable pedestrian detectors, we show that current pedestrian detectors handle even small domain shifts poorly in cross-dataset evaluation. We attribute the limited generalization to two main factors: the method and the current sources of data. Regarding the method, we illustrate that bias in the design choices (e.g., anchor settings) of current pedestrian detectors is the main contributing factor to the limited generalization. Most modern pedestrian detectors are tailored towards the target dataset, where they do achieve high performance in the traditional single training and testing pipeline, but suffer a degradation in performance under cross-dataset evaluation. Consequently, a general object detector performs better in cross-dataset evaluation than state-of-the-art pedestrian detectors, due to its generic design. As for the data, we show that the autonomous driving benchmarks are monotonous in nature, that is, they are not diverse in scenarios and dense in pedestrians. Therefore, benchmarks curated by crawling the web (which contain diverse and dense scenarios) are an efficient source of pre-training for providing a more robust representation. Accordingly, we propose a progressive fine-tuning strategy which improves generalization. Code and models can be accessed at https://github.com/hasanirtiza/Pedestron.

Efficient Person Search: An Anchor-Free Approach

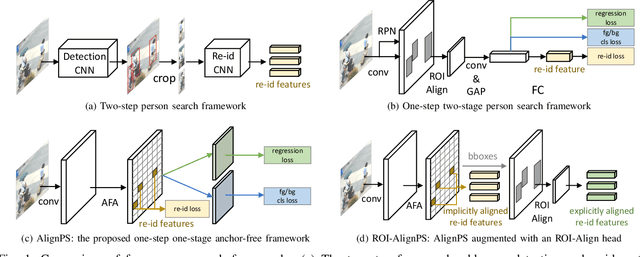

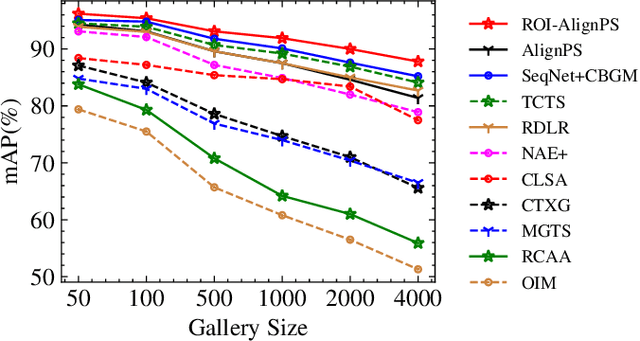

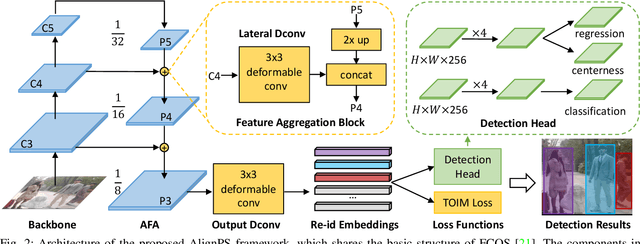

Sep 01, 2021

Abstract: Person search aims to simultaneously localize and identify a query person from realistic, uncropped images. To achieve this goal, state-of-the-art models typically add a re-id branch upon two-stage detectors like Faster R-CNN. Owing to the ROI-Align operation, this pipeline yields promising accuracy as re-id features are explicitly aligned with the corresponding object regions, but in the meantime, it introduces high computational overhead due to dense object anchors. In this work, we present an anchor-free approach to efficiently tackle this challenging task by introducing the following dedicated designs. First, we select an anchor-free detector (i.e., FCOS) as the prototype of our framework. Due to the lack of dense object anchors, it exhibits significantly higher efficiency compared with existing person search models. Second, when directly accommodating this anchor-free detector for person search, there exist several major challenges in learning robust re-id features, which we summarize as the misalignment issues in different levels (i.e., scale, region, and task). To address these issues, we propose an aligned feature aggregation module to generate more discriminative and robust feature embeddings. Accordingly, we name our model the Feature-Aligned Person Search Network (AlignPS). Third, by investigating the advantages of both anchor-based and anchor-free models, we further augment AlignPS with an ROI-Align head, which significantly improves the robustness of re-id features while still keeping our model highly efficient. Extensive experiments conducted on two challenging benchmarks (i.e., CUHK-SYSU and PRW) demonstrate that our framework achieves state-of-the-art or competitive performance, while displaying higher efficiency. All the source code, data, and trained models are available at: https://github.com/daodaofr/alignps.
