Jingyi Zhang

Generalizable Black-Box Adversarial Attack with Meta Learning

Jan 01, 2023

Abstract: In a black-box adversarial attack, the target model's parameters are unknown, and the attacker aims to find a successful adversarial perturbation based on query feedback under a query budget. Due to the limited feedback information, existing query-based black-box attack methods often require many queries for attacking each benign example. To reduce query cost, we propose to utilize the feedback information across historical attacks, dubbed example-level adversarial transferability. Specifically, by treating the attack on each benign example as one task, we develop a meta-learning framework by training a meta-generator to produce perturbations conditioned on benign examples. When attacking a new benign example, the meta-generator can be quickly fine-tuned based on the feedback information of the new task as well as a few historical attacks to produce effective perturbations. Moreover, since the meta-train procedure consumes many queries to learn a generalizable generator, we utilize model-level adversarial transferability to train the meta-generator on a white-box surrogate model, then transfer it to help the attack against the target model. The proposed framework with the two types of adversarial transferability can be naturally combined with any off-the-shelf query-based attack method to boost its performance, which is verified by extensive experiments.

Domain Adaptive Scene Text Detection via Subcategorization

Dec 01, 2022

Abstract: Most existing scene text detectors require large-scale training data, which cannot scale well due to two major factors: 1) scene text images often have domain-specific distributions; 2) collecting large-scale annotated scene text images is laborious. We study domain adaptive scene text detection, a largely neglected yet very meaningful task that aims for optimal transfer of labelled scene text images while handling unlabelled images in various new domains. Specifically, we design SCAST, a subcategory-aware self-training technique that effectively mitigates network overfitting and noisy pseudo labels in domain adaptive scene text detection. SCAST consists of two novel designs. For labelled source data, it introduces pseudo subcategories for both foreground texts and background stuff, which helps train more generalizable source models with multi-class detection objectives. For unlabelled target data, it mitigates network overfitting by co-regularizing the binary and subcategory classifiers trained in the source domain. Extensive experiments show that SCAST achieves superior detection performance consistently across multiple public benchmarks, and it also generalizes well to other domain adaptive detection tasks such as vehicle detection.

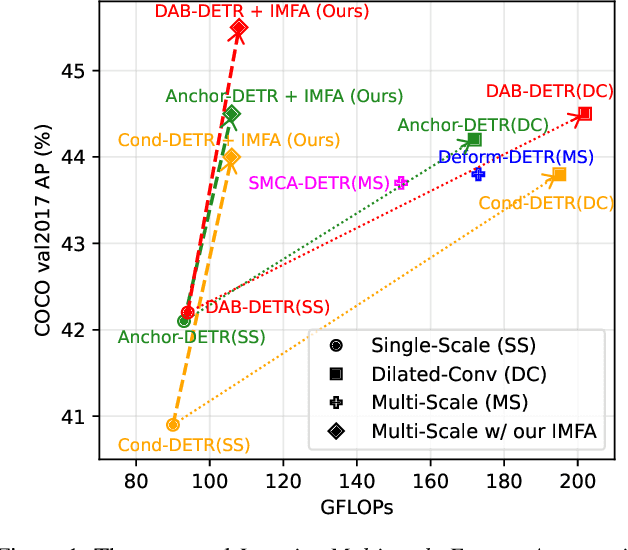

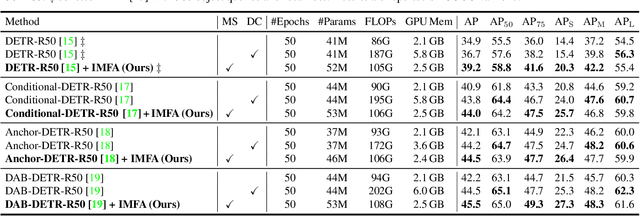

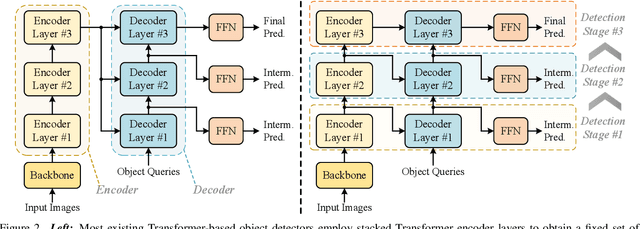

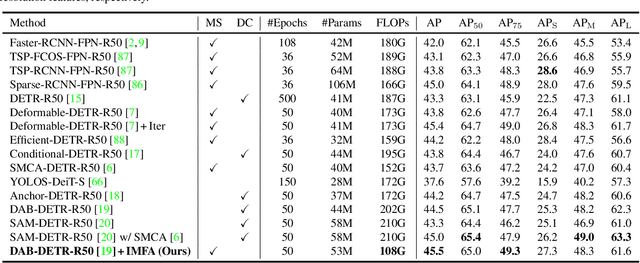

Towards Efficient Use of Multi-Scale Features in Transformer-Based Object Detectors

Aug 24, 2022

Abstract: Multi-scale features have been proven highly effective for object detection, and most ConvNet-based object detectors adopt Feature Pyramid Network (FPN) as a basic component for exploiting multi-scale features. However, for the recently proposed Transformer-based object detectors, directly incorporating multi-scale features leads to prohibitive computational overhead due to the high complexity of the attention mechanism for processing high-resolution features. This paper presents Iterative Multi-scale Feature Aggregation (IMFA) -- a generic paradigm that enables the efficient use of multi-scale features in Transformer-based object detectors. The core idea is to exploit sparse multi-scale features from just a few crucial locations, and it is achieved with two novel designs. First, IMFA rearranges the Transformer encoder-decoder pipeline so that the encoded features can be iteratively updated based on the detection predictions. Second, IMFA sparsely samples scale-adaptive features for refined detection from just a few keypoint locations under the guidance of prior detection predictions. As a result, the sampled multi-scale features are sparse yet still highly beneficial for object detection. Extensive experiments show that the proposed IMFA boosts the performance of multiple Transformer-based object detectors significantly yet with slight computational overhead. Project page: https://github.com/ZhangGongjie/IMFA.

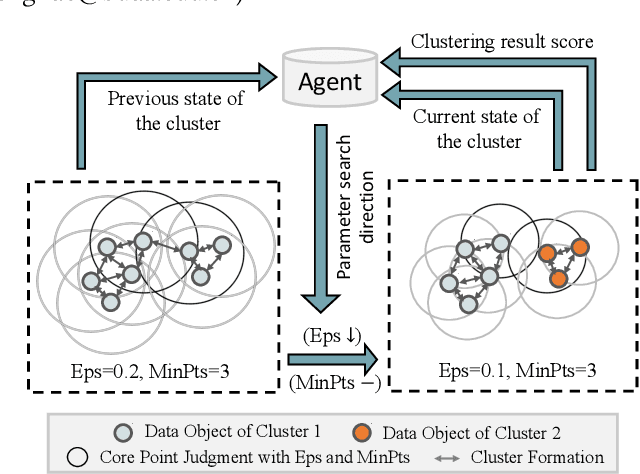

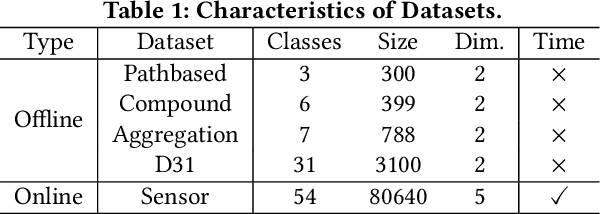

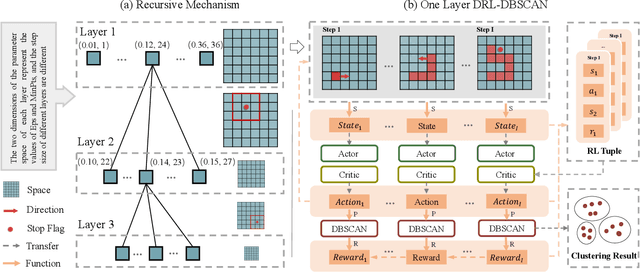

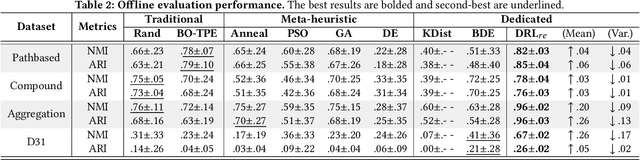

Automating DBSCAN via Deep Reinforcement Learning

Aug 09, 2022

Abstract: DBSCAN is widely used in many scientific and engineering fields because of its simplicity and practicality. However, due to its high sensitivity to parameters, the accuracy of the clustering result depends heavily on practical experience. In this paper, we first propose a novel Deep Reinforcement Learning guided automatic DBSCAN parameter search framework, namely DRL-DBSCAN. The framework models the process of adjusting the parameter search direction by perceiving the clustering environment as a Markov decision process, which aims to find the best clustering parameters without manual assistance. DRL-DBSCAN learns the optimal clustering parameter search policy for different feature distributions via interacting with the clusters, using a weakly-supervised reward training policy network. In addition, we also present a recursive search mechanism driven by the scale of the data to efficiently and controllably process large parameter spaces. Extensive experiments are conducted on five artificial and real-world datasets based on the proposed four working modes. The results of offline and online tasks show that DRL-DBSCAN not only consistently improves DBSCAN clustering accuracy by up to 26% and 25% respectively, but also can stably find the dominant parameters with high computational efficiency. The code is available at https://github.com/RingBDStack/DRL-DBSCAN.
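The parameter sensitivity that motivates this work can be seen on a toy example. The following is a minimal sketch of plain DBSCAN in pure Python, not the DRL-DBSCAN framework itself; the function names (`region_query`, `dbscan`, `count_clusters`) and the nine-point dataset are assumptions made for illustration only.

```python
from math import dist


def region_query(points, i, eps):
    """Indices of all points within eps of points[i] (including i itself)."""
    return [j for j, q in enumerate(points) if dist(points[i], q) <= eps]


def dbscan(points, eps, min_pts):
    """Return one cluster label per point; -1 marks noise."""
    labels = [None] * len(points)
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = -1  # provisionally noise; may become a border point later
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in neighbors if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reached from a core point: border point
                continue
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)  # j is a core point: keep expanding
    return labels


def count_clusters(labels):
    """Number of distinct clusters, ignoring noise."""
    return len(set(labels) - {-1})


# Hypothetical toy data: two tight squares and one isolated point.
POINTS = [(0, 0), (0, 1), (1, 0), (1, 1),
          (10, 10), (10, 11), (11, 10), (11, 11),
          (5, 5)]

if __name__ == "__main__":
    for eps in (0.5, 1.5, 8.0):
        labels = dbscan(POINTS, eps, min_pts=3)
        print(eps, count_clusters(labels), labels)
```

On this toy data, the same `min_pts=3` yields zero clusters at `eps=0.5`, two clusters at `eps=1.5`, and a single merged cluster at `eps=8.0`, which is the kind of sensitivity an automated parameter search is meant to handle.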

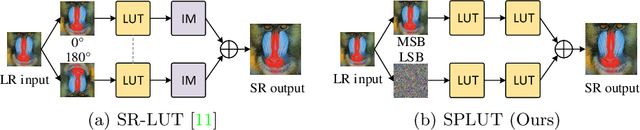

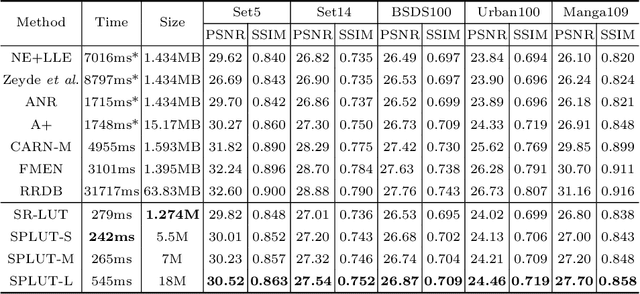

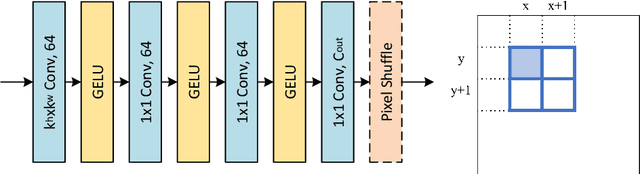

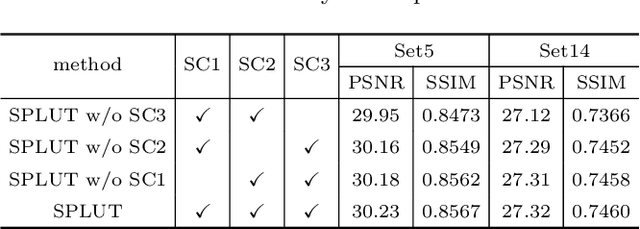

Learning Series-Parallel Lookup Tables for Efficient Image Super-Resolution

Jul 26, 2022

Abstract: Lookup tables (LUTs) have shown their efficacy in low-level vision tasks due to the valuable characteristics of low computational cost and hardware independence. However, recent attempts to address the problem of single image super-resolution (SISR) with lookup tables are highly constrained by the small receptive field size. Besides, their frameworks of single-layer lookup tables limit the extension and generalization capacities of the model. In this paper, we propose a framework of series-parallel lookup tables (SPLUT) to alleviate the above issues and achieve efficient image super-resolution. On the one hand, we cascade multiple lookup tables to enlarge the receptive field of each extracted feature vector. On the other hand, we propose a parallel network which includes two branches of cascaded lookup tables which process different components of the input low-resolution images. By doing so, the two branches collaborate with each other and compensate for the precision loss of discretizing input pixels when establishing lookup tables. Compared to previous lookup table-based methods, our framework has stronger representation abilities with more flexible architectures. Furthermore, we no longer need interpolation methods, which introduce redundant computations, so our method can achieve faster inference speed. Extensive experimental results on five popular benchmark datasets show that our method obtains superior SISR performance in a more efficient way. The code is available at https://github.com/zhjy2016/SPLUT.

Hierarchical Mask Calibration for Unified Domain Adaptive Panoptic Segmentation

Jun 30, 2022

Abstract: Domain adaptive panoptic segmentation aims to mitigate the data annotation challenge by leveraging off-the-shelf annotated data in one or multiple related source domains. However, existing studies employ two networks for instance segmentation and semantic segmentation separately, which leads to a large number of network parameters with complicated and computationally intensive training and inference processes. We design UniDAPS, a Unified Domain Adaptive Panoptic Segmentation network that is simple but capable of achieving domain adaptive instance segmentation and semantic segmentation simultaneously within a single network. UniDAPS introduces Hierarchical Mask Calibration (HMC) that rectifies the predicted pseudo masks, pseudo superpixels and pseudo pixels and performs network re-training via an online self-training process on the fly. It has three unique features: 1) it enables unified domain adaptive panoptic adaptation; 2) it mitigates false predictions and improves domain adaptive panoptic segmentation effectively; 3) it is end-to-end trainable with far fewer parameters and a simpler training and inference pipeline. Extensive experiments over multiple public benchmarks show that UniDAPS achieves superior domain adaptive panoptic segmentation as compared with the state-of-the-art.

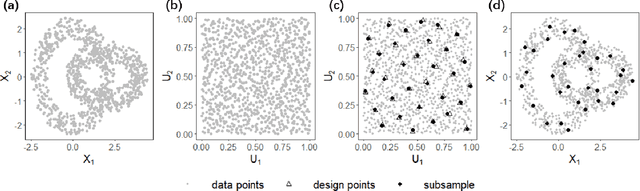

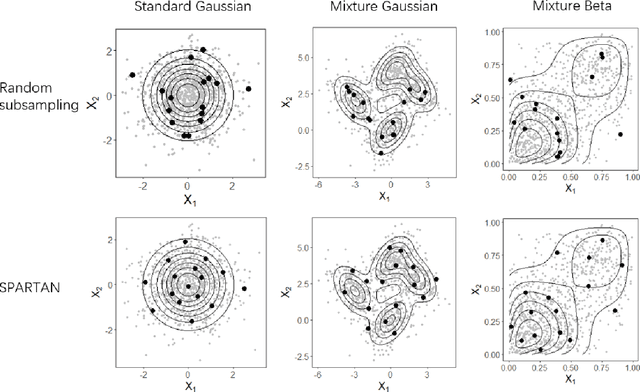

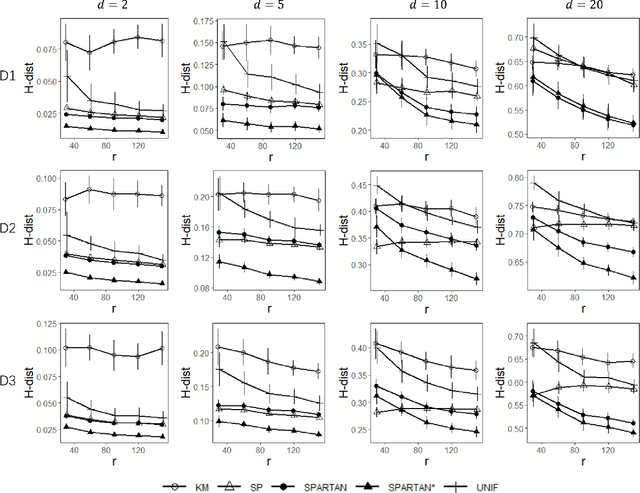

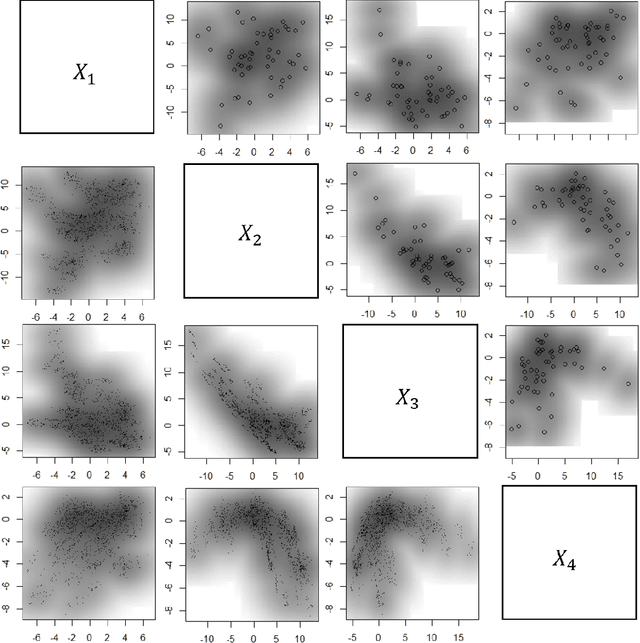

An optimal transport approach for selecting a representative subsample with application in efficient kernel density estimation

May 31, 2022

Abstract: Subsampling methods aim to select a subsample as a surrogate for the observed sample. Such methods have been used pervasively in large-scale data analytics, active learning, and privacy-preserving analysis in recent decades. Instead of model-based methods, in this paper, we study model-free subsampling methods, which aim to identify a subsample that is not confined by model assumptions. Existing model-free subsampling methods are usually built upon clustering techniques or kernel tricks. Most of these methods suffer from either a large computational burden or a theoretical weakness. In particular, the theoretical weakness is that the empirical distribution of the selected subsample may not necessarily converge to the population distribution. Such computational and theoretical limitations hinder the broad applicability of model-free subsampling methods in practice. We propose a novel model-free subsampling method by utilizing optimal transport techniques. Moreover, we develop an efficient subsampling algorithm that is adaptive to the unknown probability density function. Theoretically, we show the selected subsample can be used for efficient density estimation by deriving the convergence rate for the proposed subsample kernel density estimator. We also provide the optimal bandwidth for the proposed estimator. Numerical studies on synthetic and real-world datasets demonstrate the superior performance of the proposed method.
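To make the idea of a subsample kernel density estimator concrete, here is a minimal one-dimensional sketch in pure Python. It does not reproduce the paper's optimal-transport selection algorithm: as a stand-in, it picks evenly spaced order statistics, which is an assumption made purely for illustration. The names `gaussian_kde` and `quantile_subsample`, the bandwidth, and the sample sizes are likewise hypothetical.

```python
import math
import random


def gaussian_kde(sample, bandwidth):
    """Return a function that evaluates a Gaussian kernel density estimate."""
    norm = 1.0 / (len(sample) * bandwidth * math.sqrt(2.0 * math.pi))

    def density(x):
        return norm * sum(
            math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in sample
        )

    return density


def quantile_subsample(sample, k):
    """Pick k order statistics at evenly spaced ranks.

    Stand-in selection rule (assumption), not the paper's method.
    """
    ordered = sorted(sample)
    n = len(ordered)
    return [ordered[int((i + 0.5) * n / k)] for i in range(k)]


def max_abs_gap(f, g, grid):
    """Largest pointwise difference between two density functions on a grid."""
    return max(abs(f(x) - g(x)) for x in grid)


if __name__ == "__main__":
    random.seed(0)
    full_sample = [random.gauss(0.0, 1.0) for _ in range(2000)]
    subsample = quantile_subsample(full_sample, 50)

    full_kde = gaussian_kde(full_sample, bandwidth=0.4)
    sub_kde = gaussian_kde(subsample, bandwidth=0.4)

    grid = [-3.0 + 0.1 * i for i in range(61)]
    print("max gap between full and subsample KDE:",
          max_abs_gap(full_kde, sub_kde, grid))
```

The subsample estimator sums over 50 kernels instead of 2000 per evaluation, which is where the efficiency gain comes from; how close it stays to the full estimator depends on how representative the selected points are.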

LiDARCap: Long-range Marker-less 3D Human Motion Capture with LiDAR Point Clouds

Mar 28, 2022

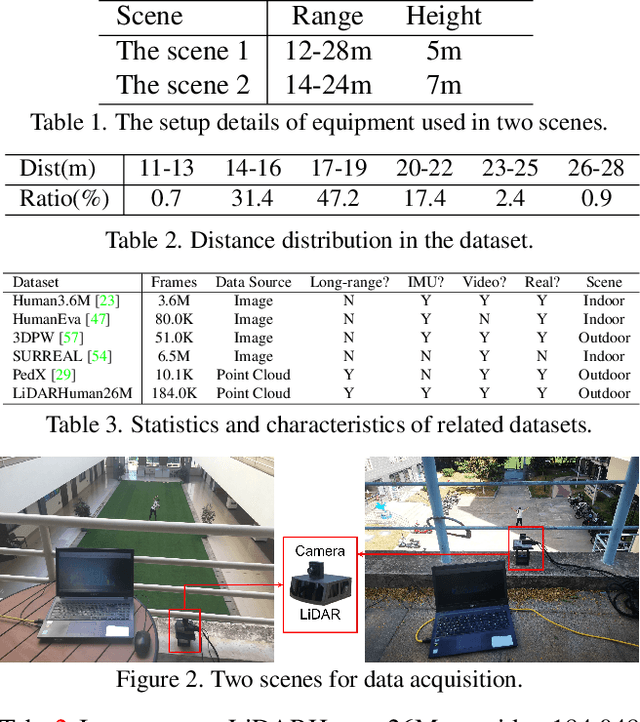

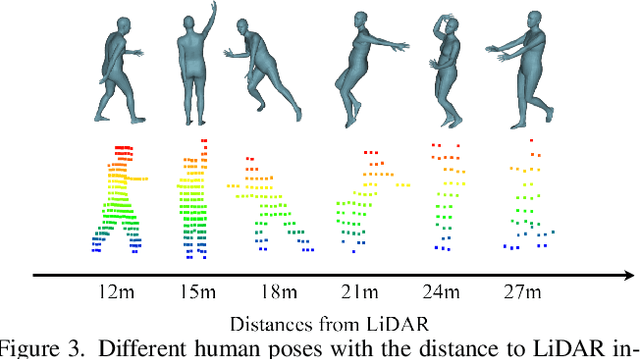

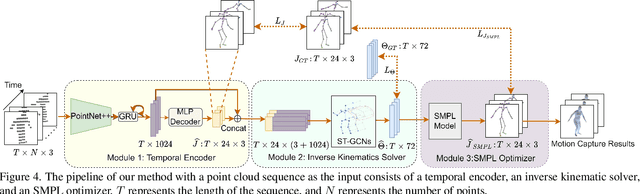

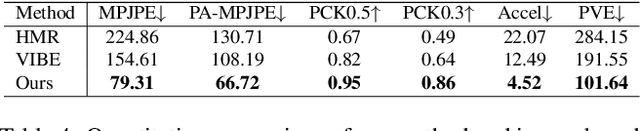

Abstract: Existing motion capture datasets are largely short-range and cannot yet meet the needs of long-range applications. We propose LiDARHuman26M, a new human motion capture dataset captured by LiDAR at a much longer range to overcome this limitation. Our dataset also includes the ground truth human motions acquired by the IMU system and the synchronous RGB images. We further present a strong baseline method, LiDARCap, for LiDAR point cloud human motion capture. Specifically, we first utilize PointNet++ to encode features of points and then employ the inverse kinematics solver and SMPL optimizer to regress the pose through aggregating the temporally encoded features hierarchically. Quantitative and qualitative experiments show that our method outperforms the techniques based only on RGB images. Ablation experiments demonstrate that our dataset is challenging and worthy of further research. Finally, the experiments on the KITTI Dataset and the Waymo Open Dataset show that our method can be generalized to different LiDAR sensor settings.

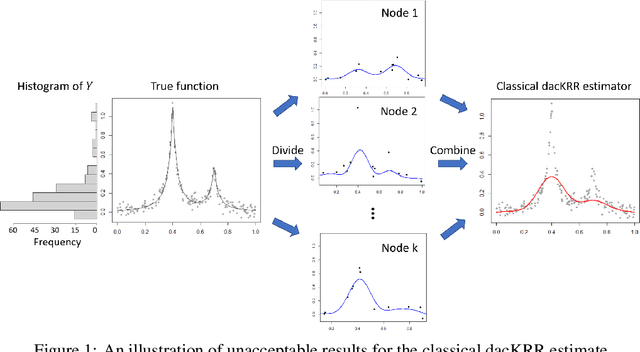

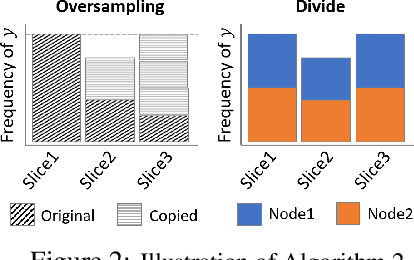

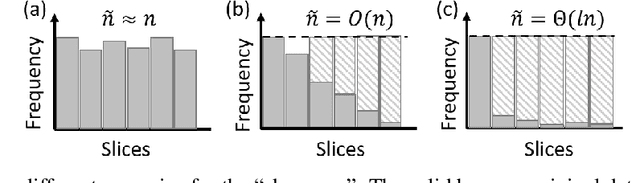

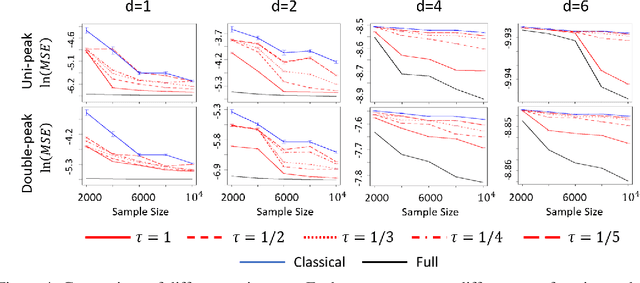

Oversampling Divide-and-conquer for Response-skewed Kernel Ridge Regression

Jul 13, 2021

Abstract: The divide-and-conquer method has been widely used for computing large-scale kernel ridge regression estimates. Unfortunately, when the response variable is highly skewed, the divide-and-conquer kernel ridge regression (dacKRR) may overlook the underrepresented region and produce unacceptable results. We develop a novel response-adaptive partition strategy to overcome the limitation. In particular, we propose to allocate the replicates of some carefully identified informative observations to multiple nodes (local processors). The idea is analogous to the popular oversampling technique. Although such a technique has been widely used for addressing discrete label skewness, extending it to the dacKRR setting is nontrivial. We provide both theoretical and practical guidance on how to effectively oversample the observations under the dacKRR setting. Furthermore, we show the proposed estimate has a smaller asymptotic mean squared error (AMSE) than that of the classical dacKRR estimate under mild conditions. Our theoretical findings are supported by both simulated and real-data analyses.
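The divide-and-conquer scheme described here can be sketched in a few lines of pure Python: fit kernel ridge regression on each partition, then average the local predictors. This is a minimal illustration under stated assumptions, not the paper's estimator. The Gaussian kernel, bandwidth, ridge parameter, interleaved partitioning, and the `shared` argument (indices of observations replicated to every node, a crude stand-in for the paper's response-adaptive replication rule) are all hypothetical choices.

```python
import math


def gaussian_kernel(a, b, bandwidth=0.2):
    return math.exp(-((a - b) ** 2) / (2.0 * bandwidth ** 2))


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def fit_krr(xs, ys, lam=1e-3):
    """Fit kernel ridge regression on one node; return a predictor."""
    n = len(xs)
    K = [[gaussian_kernel(xs[i], xs[j]) + (n * lam if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    alpha = solve(K, ys)
    return lambda x: sum(a * gaussian_kernel(x, xi) for a, xi in zip(alpha, xs))


def dac_krr(xs, ys, n_nodes, lam=1e-3, shared=()):
    """Average local KRR fits over interleaved partitions.

    `shared` lists indices copied to every node that does not already
    hold them (illustrative replication, not the paper's selection rule).
    """
    local = []
    for k in range(n_nodes):
        idx = list(range(k, len(xs), n_nodes))
        idx += [i for i in shared if i % n_nodes != k]
        local.append(fit_krr([xs[i] for i in idx], [ys[i] for i in idx], lam))
    return lambda x: sum(f(x) for f in local) / n_nodes


if __name__ == "__main__":
    xs = [i / 59 for i in range(60)]
    ys = [math.sin(2.0 * math.pi * x) for x in xs]
    predict = dac_krr(xs, ys, n_nodes=3)
    print(predict(0.25), predict(0.75))
```

Each node solves a system of size roughly n divided by the number of nodes, which is what makes the approach attractive at scale; the averaging step is where an underrepresented region can be lost if few nodes ever see it.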

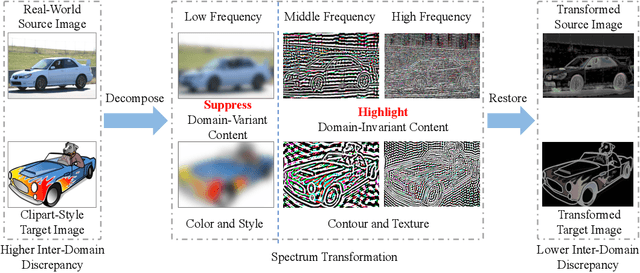

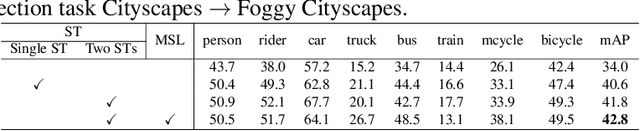

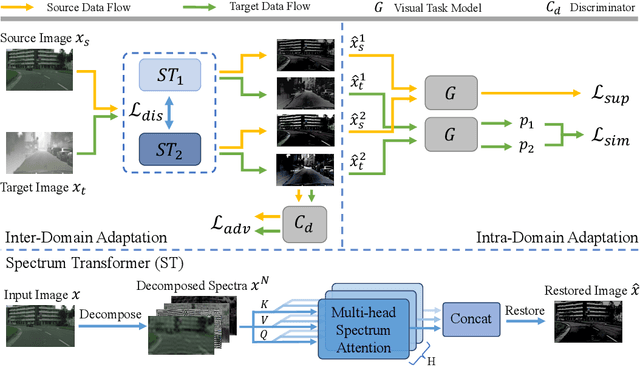

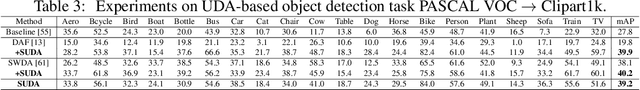

Spectral Unsupervised Domain Adaptation for Visual Recognition

Jun 11, 2021

Abstract: Unsupervised domain adaptation (UDA) aims to learn a well-performing model in an unlabeled target domain by leveraging labeled data from one or multiple related source domains. It remains a great challenge due to 1) the lack of annotations in the target domain and 2) the rich discrepancy between the distributions of source and target data. We propose Spectral UDA (SUDA), an efficient yet effective UDA technique that works in the spectral space and is generic across different visual recognition tasks in detection, classification and segmentation. SUDA addresses UDA challenges from two perspectives. First, it mitigates inter-domain discrepancies by a spectrum transformer (ST) that maps source and target images into spectral space and learns to enhance domain-invariant spectra while suppressing domain-variant spectra simultaneously. To this end, we design novel adversarial multi-head spectrum attention that leverages contextual information to identify domain-variant and domain-invariant spectra effectively. Second, it mitigates the lack of annotations in the target domain by introducing multi-view spectral learning, which aims to learn comprehensive yet confident target representations by maximizing the mutual information among multiple ST augmentations capturing different spectral views of each target sample. Extensive experiments over different visual tasks (e.g., detection, classification and segmentation) show that SUDA achieves superior accuracy and is also complementary with state-of-the-art UDA methods, with consistent performance boosts but little extra computation.
