Haibo Zhang

Frequency-Aware Contrastive Learning for Neural Machine Translation

Dec 29, 2021

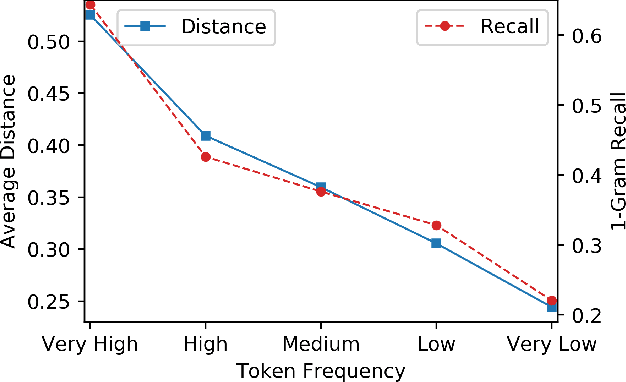

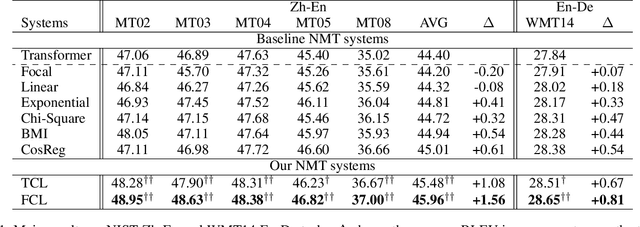

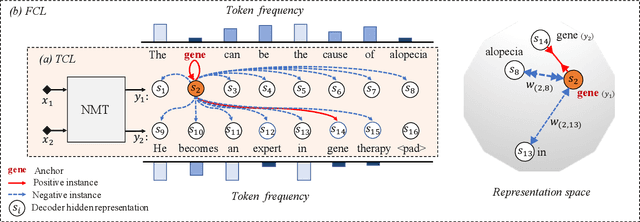

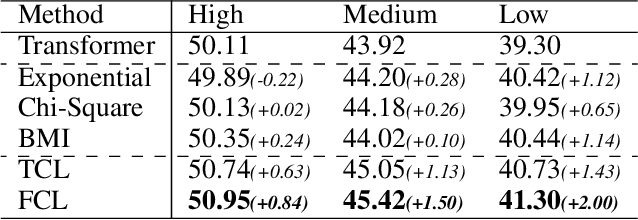

Abstract: Low-frequency word prediction remains a challenge in modern neural machine translation (NMT) systems. Recent adaptive training methods promote the output of infrequent words by emphasizing their weights in the overall training objectives. Despite the improved recall of low-frequency words, their prediction precision is unexpectedly hindered by the adaptive objectives. Inspired by the observation that low-frequency words form a more compact embedding space, we tackle this challenge from a representation learning perspective. Specifically, we propose a frequency-aware token-level contrastive learning method, in which the hidden state of each decoding step is pushed away from the counterparts of other target words, in a soft contrastive way based on the corresponding word frequencies. We conduct experiments on the widely used NIST Chinese-English and WMT14 English-German translation tasks. Empirical results show that our proposed methods not only significantly improve translation quality but also enhance lexical diversity and optimize the word representation space. Further investigation reveals that, compared with related adaptive training strategies, the superiority of our method on low-frequency word prediction lies in the robustness of token-level recall across different frequencies without sacrificing precision.
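The idea of a frequency-weighted, token-level contrastive objective can be illustrated with a minimal sketch. This is not the paper's formulation: the weighting function `freq_weight`, the choice of positive example, and the temperature value are all assumptions made for illustration. The sketch uses an InfoNCE-style loss in which negatives belonging to rarer words are pushed away more strongly.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def freq_weight(count, max_count):
    """Hypothetical weighting: 2.0 for unseen words, 1.0 for the most frequent word."""
    return 1.0 + (1.0 - math.log(1 + count) / math.log(1 + max_count))

def soft_contrastive_loss(anchor, positive, negatives, neg_counts,
                          max_count, temperature=0.1):
    """InfoNCE-style loss with frequency-weighted negatives (illustrative only).

    anchor:     decoder hidden state at one decoding step
    positive:   a hidden state assumed to belong to the same target word
    negatives:  hidden states of other target words
    neg_counts: corpus counts of the words behind each negative
    """
    pos = math.exp(cosine(anchor, positive) / temperature)
    neg = sum(
        freq_weight(c, max_count) * math.exp(cosine(anchor, n) / temperature)
        for n, c in zip(negatives, neg_counts)
    )
    return -math.log(pos / (pos + neg))

if __name__ == "__main__":
    anchor, positive = [1.0, 0.0], [0.9, 0.1]
    negatives = [[0.0, 1.0], [0.5, 0.5]]
    rare = soft_contrastive_loss(anchor, positive, negatives, [1, 2], 1000)
    common = soft_contrastive_loss(anchor, positive, negatives, [900, 1000], 1000)
    print(rare > common)  # rarer negatives contribute more to the loss
```

With identical vectors, the loss is larger when the negatives are rare words, which is the "soft" frequency dependence the abstract describes in general terms.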

KGR^4: Retrieval, Retrospect, Refine and Rethink for Commonsense Generation

Dec 15, 2021

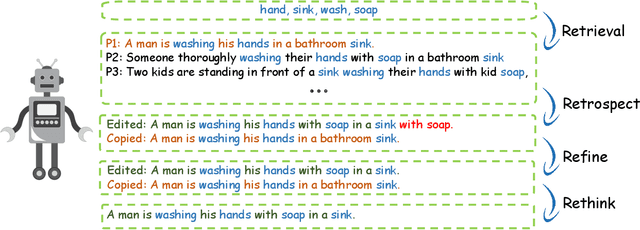

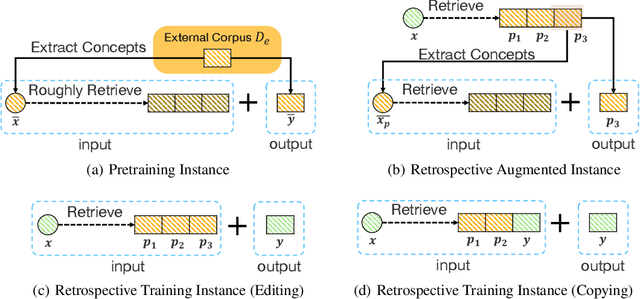

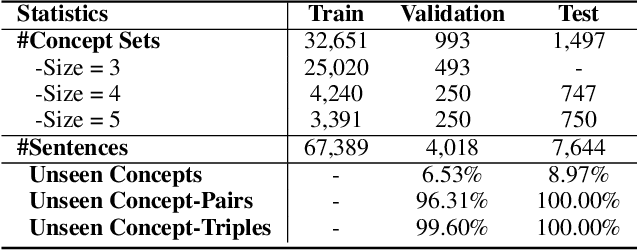

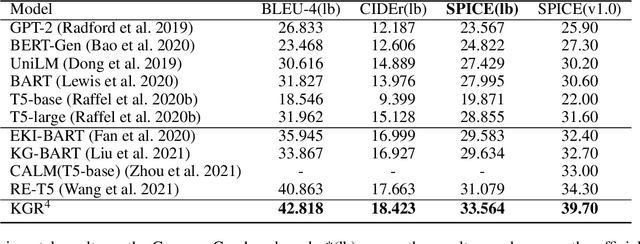

Abstract: Generative commonsense reasoning requires machines to generate sentences describing an everyday scenario given several concepts, which has attracted much attention recently. However, existing models cannot perform as well as humans, since the sentences they produce are often implausible and grammatically incorrect. In this paper, inspired by the process of humans creating sentences, we propose a novel Knowledge-enhanced Commonsense Generation framework, termed KGR^4, consisting of four stages: Retrieval, Retrospect, Refine, Rethink. Under this framework, we first perform retrieval to search for relevant sentences from an external corpus as the prototypes. Then, we train a generator that either edits or copies these prototypes to generate candidate sentences, whose potential errors are fixed by an autoencoder-based refiner. Finally, we select the output sentence from the candidate sentences produced by generators with different hyper-parameters. Experimental results and in-depth analysis on the CommonGen benchmark strongly demonstrate the effectiveness of our framework. In particular, KGR^4 obtains 33.56 SPICE points on the official leaderboard, outperforming the previously reported best result by 2.49 SPICE points and achieving state-of-the-art performance.

Leveraging Advantages of Interactive and Non-Interactive Models for Vector-Based Cross-Lingual Information Retrieval

Nov 03, 2021

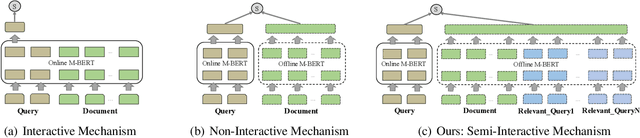

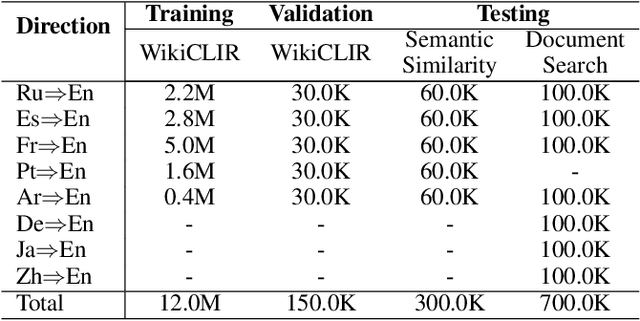

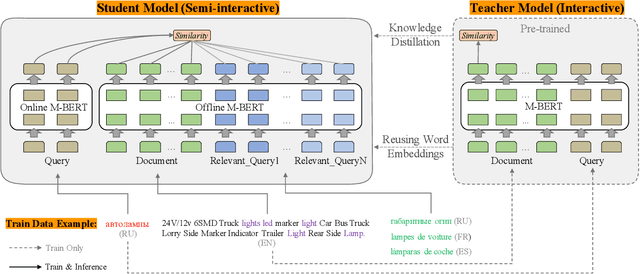

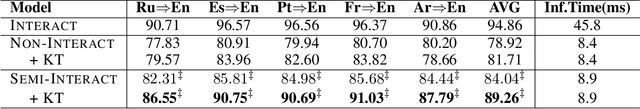

Abstract: Interactive and non-interactive models are the two de facto standard frameworks in vector-based cross-lingual information retrieval (V-CLIR), which embed queries and documents in synchronous and asynchronous fashions, respectively. From the perspectives of retrieval accuracy and computational efficiency, each model has its own strengths and shortcomings. In this paper, we propose a novel framework to leverage the advantages of these two paradigms. Concretely, we introduce a semi-interactive mechanism, which builds our model upon a non-interactive architecture but encodes each document together with its associated multilingual queries. Accordingly, cross-lingual features can be learned better, as in an interactive model. In addition, we transfer knowledge from a well-trained interactive model to ours by reusing its word embeddings and adopting knowledge distillation. Our model is initialized from a multilingual pre-trained language model, M-BERT, and evaluated on two open-resource CLIR datasets derived from Wikipedia and an in-house dataset collected from a real-world search engine. Extensive analyses reveal that our methods significantly boost retrieval accuracy while maintaining computational efficiency.
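Knowledge distillation, as mentioned in the abstract above, is commonly implemented by matching the student's score distribution to the teacher's. The sketch below shows that generic recipe (temperature-softened softmax plus KL divergence); the abstract does not state the exact loss used, so the function names and the temperature are assumptions.

```python
import math

def softmax(scores, temperature=1.0):
    """Numerically stable softmax over a list of relevance scores."""
    m = max(scores)
    exps = [math.exp((s - m) / temperature) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]

def distillation_loss(teacher_scores, student_scores, temperature=2.0):
    """KL(teacher || student) over candidate documents for one query.

    teacher_scores: relevance scores from the interactive (teacher) model
    student_scores: relevance scores from the non-interactive (student) model
    """
    p = softmax(teacher_scores, temperature)
    q = softmax(student_scores, temperature)
    # Terms with p_i == 0 contribute nothing to the KL sum.
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

if __name__ == "__main__":
    teacher = [3.0, 1.0, 0.2]
    print(distillation_loss(teacher, teacher))          # identical rankings
    print(distillation_loss(teacher, [0.2, 1.0, 3.0]))  # reversed ranking
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two rankings diverge.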

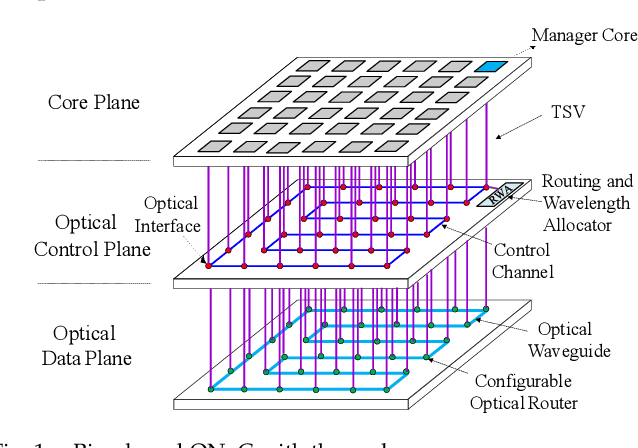

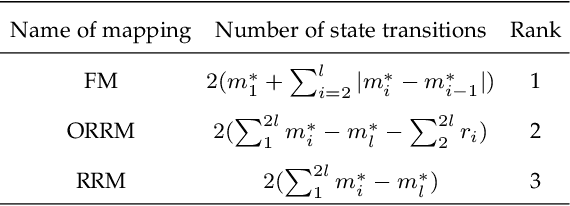

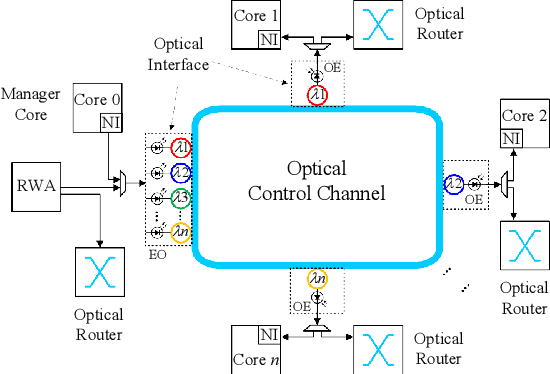

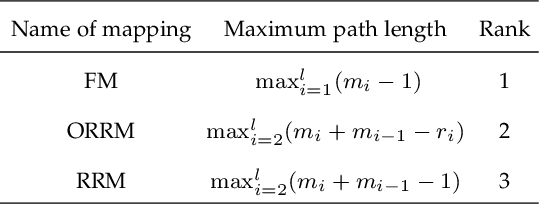

Accelerating Fully Connected Neural Network on Optical Network-on-Chip (ONoC)

Sep 30, 2021

Abstract: Fully Connected Neural Network (FCNN) is a class of Artificial Neural Networks widely used in computer science and engineering, but its training process can take a long time with large datasets on existing many-core systems. Optical Network-on-Chip (ONoC), an emerging chip-scale optical interconnection technology, has great potential to accelerate the training of FCNN with low transmission delay, low power consumption, and high throughput. However, existing methods based on Electrical Network-on-Chip (ENoC) cannot fit in ONoC because of the unique properties of ONoC. In this paper, we propose a fine-grained parallel computing model for accelerating FCNN training on ONoC and derive the optimal number of cores for each execution stage with the objective of minimizing the total amount of time to complete one epoch of FCNN training. To allocate the optimal number of cores for each execution stage, we present three mapping strategies and compare their advantages and disadvantages in terms of hotspot level, memory requirement, and state transitions. Simulation results show that the average prediction error for the optimal number of cores in NN benchmarks is within 2.3%. We further carry out extensive simulations which demonstrate that FCNN training time can be reduced by 22.28% and 4.91% on average using our proposed scheme, compared with traditional parallel computing methods that either allocate a fixed number of cores or allocate as many cores as possible, respectively. Compared with ENoC, simulation results show that, under batch sizes of 64 and 128, ONoC reduces training time by 21.02% and 12.95% and saves 47.85% and 39.27% of energy on average, respectively.
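Why an optimal core count exists at all can be shown with a toy cost model. The model below is an assumption for illustration and is not the one derived in the paper: computation time shrinks as `compute / n` while communication overhead grows as `comm * n`, so the total is minimized at an intermediate `n`.

```python
def stage_time(n_cores, compute, comm):
    """Hypothetical per-stage time: parallel compute plus per-core communication."""
    return compute / n_cores + comm * n_cores

def best_core_count(compute, comm, max_cores):
    """Exhaustively pick the core count in [1, max_cores] with the lowest time."""
    return min(range(1, max_cores + 1),
               key=lambda n: stage_time(n, compute, comm))

if __name__ == "__main__":
    # With compute=100 and comm=1 the continuous optimum is sqrt(100/1) = 10.
    print(best_core_count(100.0, 1.0, 64))  # 10
```

Under this toy model, allocating all 64 cores would be slower than allocating 10, which mirrors the abstract's comparison against the "as many cores as possible" baseline.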

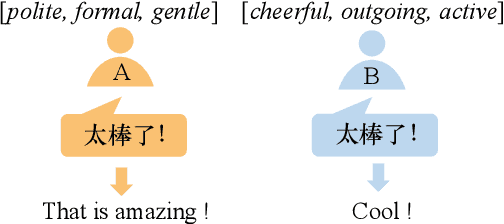

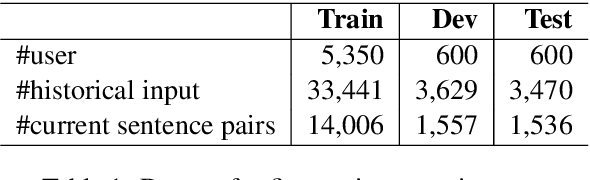

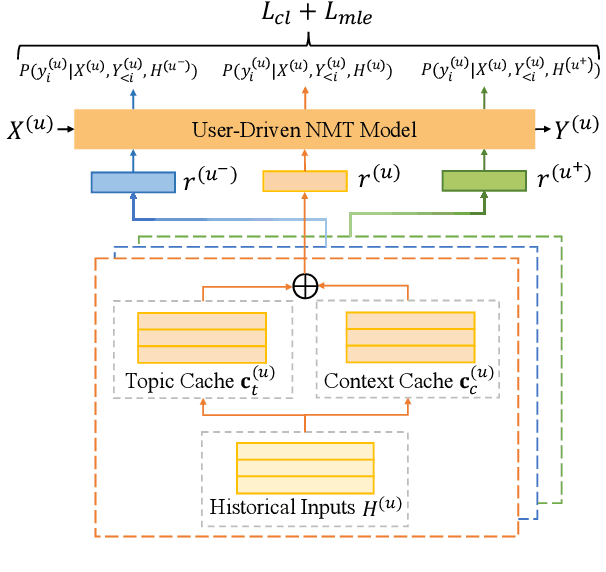

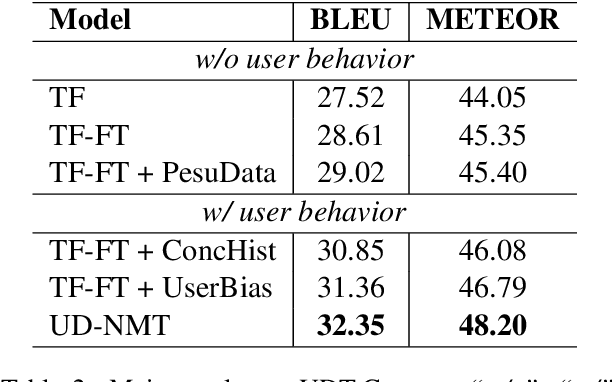

Towards User-Driven Neural Machine Translation

Jun 11, 2021

Abstract: A good translation should not only translate the original content semantically, but also incarnate personal traits of the original text. For a real-world neural machine translation (NMT) system, these user traits (e.g., topic preference, stylistic characteristics and expression habits) can be preserved in user behavior (e.g., historical inputs). However, current NMT systems only marginally consider user behavior due to: 1) the difficulty of modeling user portraits in zero-shot scenarios, and 2) the lack of parallel datasets annotated with user behavior. To fill this gap, we introduce a novel framework called user-driven NMT. Specifically, a cache-based module and a user-driven contrastive learning method are proposed to give NMT the ability to capture potential user traits from their historical inputs in a zero-shot fashion. Furthermore, we contribute the first Chinese-English parallel corpus annotated with user behavior, called UDT-Corpus. Experimental results confirm that the proposed user-driven NMT can generate user-specific translations.

Bridging Subword Gaps in Pretrain-Finetune Paradigm for Natural Language Generation

Jun 11, 2021

Abstract: A well-known limitation of the pretrain-finetune paradigm lies in its inflexibility caused by the one-size-fits-all vocabulary. This potentially weakens the effect of applying pretrained models to natural language generation (NLG) tasks, especially when the subword distributions of upstream and downstream tasks differ significantly. To address this problem, we extend the vanilla pretrain-finetune pipeline with an extra embedding transfer step. Specifically, a plug-and-play embedding generator is introduced to produce the representation of any input token, according to the pre-trained embeddings of its morphologically similar tokens. Thus, embeddings of mismatched tokens in downstream tasks can also be efficiently initialized. We conduct experiments on a variety of NLG tasks under the pretrain-finetune fashion. Experimental results and extensive analyses show that the proposed strategy allows the vocabulary to be transferred freely, leading to more efficient and better-performing downstream NLG models.

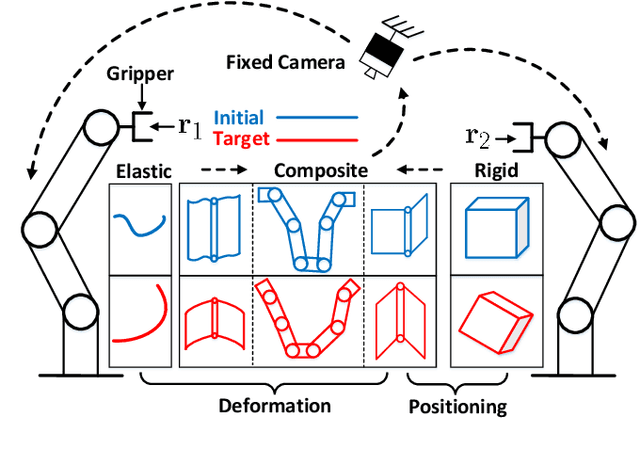

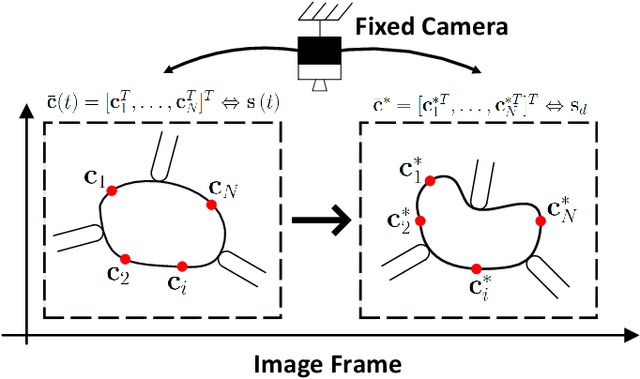

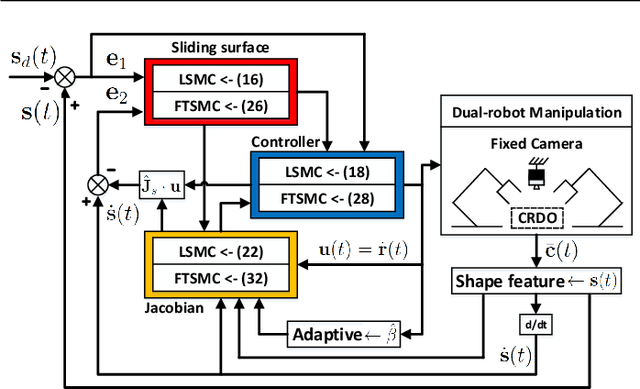

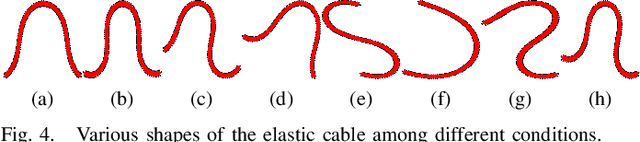

Contour Moments Based Manipulation of Composite Rigid-Deformable Objects with Finite Time Model Estimation and Shape/Position Control

Jun 04, 2021

Abstract: The robotic manipulation of composite rigid-deformable objects (i.e. those with mixed non-homogeneous stiffness properties) is a challenging problem with clear practical applications that, despite the recent progress in the field, has not been sufficiently studied in the literature. To deal with this issue, in this paper we propose a new visual servoing method that has the capability to manipulate this broad class of objects (which varies from soft to rigid) with the same adaptive strategy. To quantify the object's infinite-dimensional configuration, our new approach computes a compact feedback vector of 2D contour moment features. A sliding mode control scheme is then designed to simultaneously ensure the finite-time convergence of both the feedback shape error and the model estimation error. The stability of the proposed framework (including the boundedness of all the signals) is rigorously proved with Lyapunov theory. Detailed simulations and experiments are presented to validate the effectiveness of the proposed approach. To the best of the author's knowledge, this is the first time that contour moments along with finite-time control have been used to solve this difficult manipulation problem.
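A compact feature vector built from 2D contour moments can be sketched as follows. The specific features chosen here (centroid plus normalized second-order central moments) and the function names are assumptions for illustration; the abstract does not list which moments the method uses.

```python
def contour_moment(points, p, q):
    """Raw discrete moment m_pq = sum of x^p * y^q over the contour points."""
    return sum((x ** p) * (y ** q) for x, y in points)

def contour_features(points):
    """Return [cx, cy, mu20, mu11, mu02] for a list of (x, y) contour points.

    cx, cy are the centroid; mu20, mu11, mu02 are second-order central
    moments divided by the number of points.
    """
    m00 = contour_moment(points, 0, 0)  # equals the number of points
    cx = contour_moment(points, 1, 0) / m00
    cy = contour_moment(points, 0, 1) / m00
    mu20 = sum((x - cx) ** 2 for x, _ in points) / m00
    mu11 = sum((x - cx) * (y - cy) for x, y in points) / m00
    mu02 = sum((y - cy) ** 2 for _, y in points) / m00
    return [cx, cy, mu20, mu11, mu02]

if __name__ == "__main__":
    square = [(0, 0), (2, 0), (2, 2), (0, 2)]
    print(contour_features(square))  # [1.0, 1.0, 1.0, 0.0, 1.0]
```

However many points the contour has, the feedback vector keeps a fixed, small dimension, which is what makes it usable as a servoing signal.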

$E^2Coop$: Energy Efficient and Cooperative Obstacle Detection and Avoidance for UAV Swarms

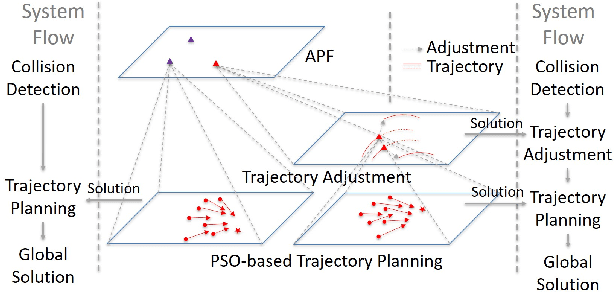

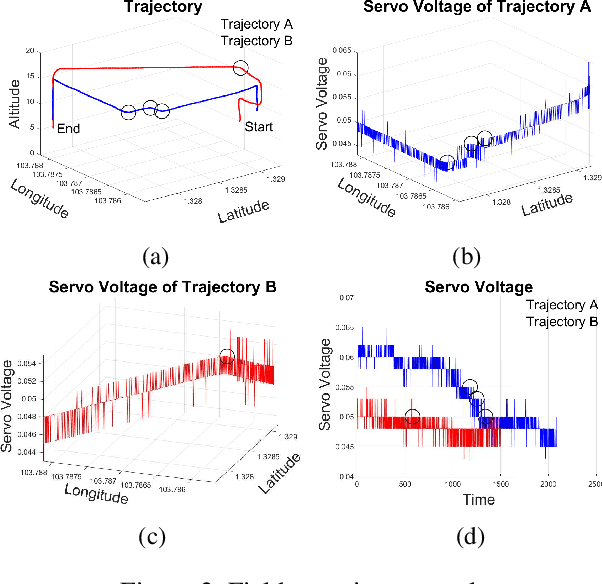

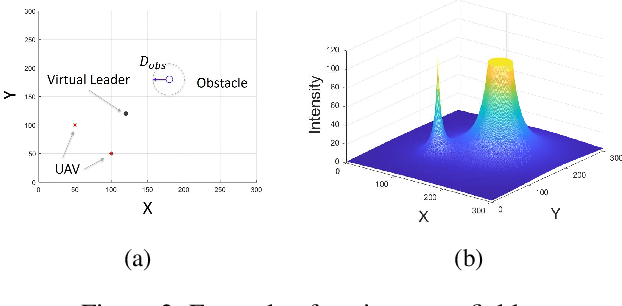

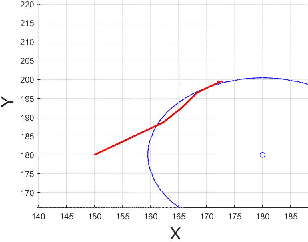

May 08, 2021

Abstract: Energy efficiency is of critical importance to trajectory planning for UAV swarms in obstacle avoidance. In this paper, we present $E^2Coop$, a new scheme designed to avoid collisions for UAV swarms by tightly coupling Artificial Potential Field (APF) with Particle Swarm Optimization (PSO) based trajectory planning. In $E^2Coop$, swarm members perform trajectory planning cooperatively to avoid collisions in an energy-efficient manner. $E^2Coop$ exploits the advantages of the active contour model in image processing for trajectory planning. Each swarm member plans its trajectories on the contours of the environment field to save energy and avoid collisions with obstacles. Swarm members that fall within the safeguard distance of each other plan their trajectories on different contours to avoid collisions with each other. Simulation results demonstrate that $E^2Coop$ can save up to 51% of energy compared with two state-of-the-art schemes.
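For readers unfamiliar with the Artificial Potential Field component, the sketch below computes the classic APF force in 2D: a linear attraction toward the goal plus a repulsion from each obstacle within an influence radius. This is the textbook formulation, not the $E^2Coop$ scheme itself; the gains `k_att`, `k_rep` and the radius `d0` are illustrative assumptions.

```python
import math

def apf_force(pos, goal, obstacles, k_att=1.0, k_rep=1.0, d0=2.0):
    """Classic artificial potential field force at `pos` (2D tuples).

    Attraction:  k_att * (goal - pos)
    Repulsion:   k_rep * (1/d - 1/d0) / d^2, directed away from each
                 obstacle closer than the influence radius d0.
    """
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        if d == 0.0 or d >= d0:
            continue  # outside the influence radius, or undefined direction
        magnitude = k_rep * (1.0 / d - 1.0 / d0) / (d * d)
        fx += magnitude * dx / d
        fy += magnitude * dy / d
    return fx, fy

if __name__ == "__main__":
    print(apf_force((0.0, 0.0), (10.0, 0.0), []))            # pure attraction
    print(apf_force((0.0, 0.0), (10.0, 0.0), [(1.0, 0.0)]))  # obstacle ahead
```

An obstacle directly between the vehicle and the goal reduces the forward force, while obstacles beyond `d0` have no effect.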

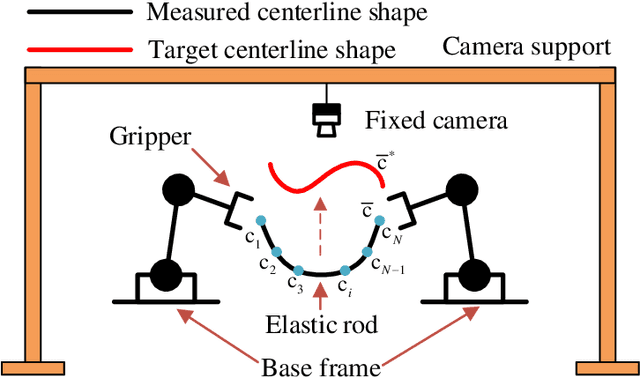

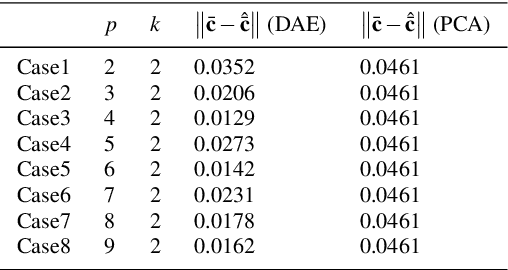

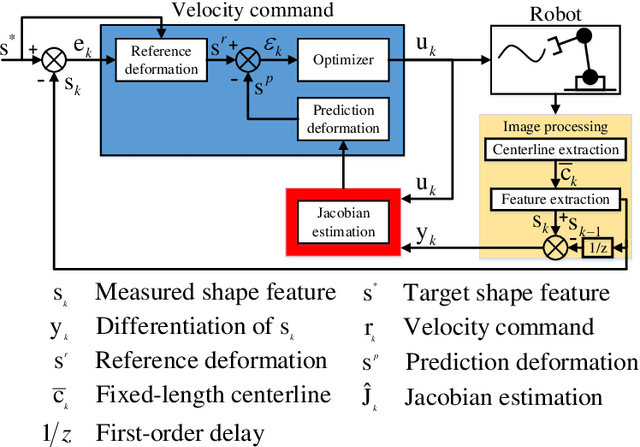

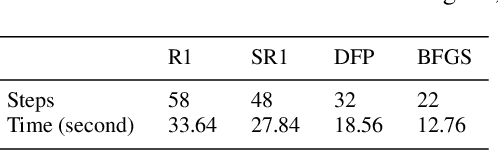

Towards Latent Space Based Manipulation of Elastic Rods using Autoencoder Models and Robust Centerline Extractions

Feb 09, 2021

Abstract: The automatic shape control of deformable objects is a challenging (and currently hot) manipulation problem due to their high-dimensional geometric features and complex physical properties. In this study, a new methodology to manipulate elastic rods automatically into desired 2D shapes is presented. An efficient vision-based controller that uses a deep autoencoder network is designed to compute a compact representation of the object's infinite-dimensional shape. An online algorithm that approximates the sensorimotor mapping between the robot's configuration and the object's shape features is used to deal with the latter's (typically unknown) mechanical properties. The proposed approach computes the rod's centerline from raw visual data in real time by introducing an adaptive algorithm based on a self-organizing network. Its effectiveness is thoroughly validated with simulations and experiments.

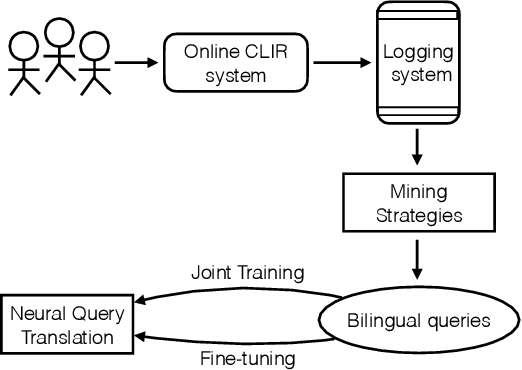

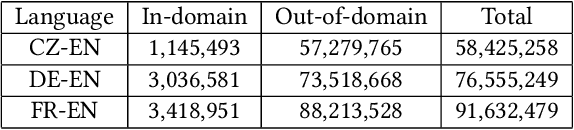

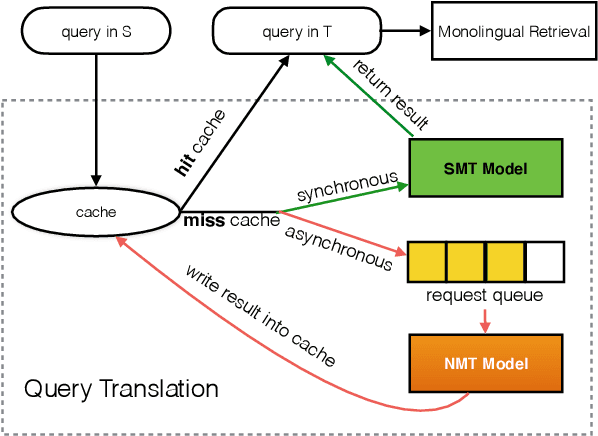

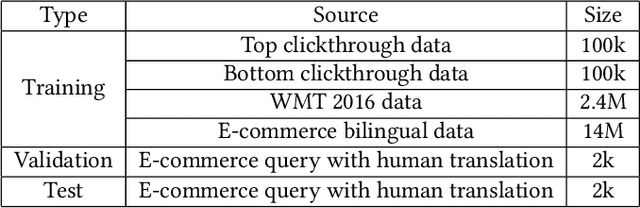

Exploiting Neural Query Translation into Cross Lingual Information Retrieval

Oct 26, 2020

Abstract: As a crucial component of cross-language information retrieval (CLIR), query translation faces three main challenges: 1) the adequacy of translation; 2) the lack of in-domain parallel training data; and 3) the requirement of low latency. Consequently, existing CLIR systems mainly exploit statistical machine translation (SMT) rather than the more advanced neural machine translation (NMT), limiting further improvements in both translation and retrieval quality. In this paper, we investigate how to integrate a neural query translation model into a CLIR system. Specifically, we propose a novel data augmentation method that extracts query translation pairs according to user clickthrough data, thus alleviating the domain-adaptation problem in NMT. Then, we introduce an asynchronous strategy that leverages both the real-time speed of SMT and the accuracy of NMT. Experimental results reveal that the proposed approach yields better retrieval quality than strong baselines and can be readily applied in a real-world CLIR system, i.e. the Aliexpress e-Commerce search engine. Readers can examine and test their cases on our website: https://aliexpress.com.
