Fuqiang Gu

MambaSeg: Harnessing Mamba for Accurate and Efficient Image-Event Semantic Segmentation

Dec 30, 2025

Abstract:Semantic segmentation is a fundamental task in computer vision with wide-ranging applications, including autonomous driving and robotics. While RGB-based methods have achieved strong performance with CNNs and Transformers, their effectiveness degrades under fast motion, low-light, or high dynamic range conditions due to limitations of frame cameras. Event cameras offer complementary advantages such as high temporal resolution and low latency, yet lack color and texture, making them insufficient on their own. To address this, recent research has explored multimodal fusion of RGB and event data; however, many existing approaches are computationally expensive and focus primarily on spatial fusion, neglecting the temporal dynamics inherent in event streams. In this work, we propose MambaSeg, a novel dual-branch semantic segmentation framework that employs parallel Mamba encoders to efficiently model RGB images and event streams. To reduce cross-modal ambiguity, we introduce the Dual-Dimensional Interaction Module (DDIM), comprising a Cross-Spatial Interaction Module (CSIM) and a Cross-Temporal Interaction Module (CTIM), which jointly perform fine-grained fusion along both spatial and temporal dimensions. This design improves cross-modal alignment, reduces ambiguity, and leverages the complementary properties of each modality. Extensive experiments on the DDD17 and DSEC datasets demonstrate that MambaSeg achieves state-of-the-art segmentation performance while significantly reducing computational cost, showcasing its promise for efficient, scalable, and robust multimodal perception.

Heteroscedastic Bayesian Optimization-Based Dynamic PID Tuning for Accurate and Robust UAV Trajectory Tracking

Dec 30, 2025

Abstract:Unmanned Aerial Vehicles (UAVs) play an important role in various applications, where precise trajectory tracking is crucial. However, conventional control algorithms for trajectory tracking often exhibit limited performance due to the underactuated, nonlinear, and highly coupled dynamics of quadrotor systems. To address these challenges, we propose HBO-PID, a novel control algorithm that integrates the Heteroscedastic Bayesian Optimization (HBO) framework with the classical PID controller to achieve accurate and robust trajectory tracking. By explicitly modeling input-dependent noise variance, the proposed method can better adapt to dynamic and complex environments, and therefore improve the accuracy and robustness of trajectory tracking. To accelerate the convergence of optimization, we adopt a two-stage optimization strategy that allows us to find the optimal controller parameters more efficiently. Through experiments in both simulation and real-world scenarios, we demonstrate that the proposed method significantly outperforms state-of-the-art (SOTA) methods. Compared to SOTA methods, it improves the position accuracy by 24.7% to 42.9%, and the angular accuracy by 40.9% to 78.4%.

* Accepted by IROS 2025 (2025 IEEE/RSJ International Conference on Intelligent Robots and Systems)
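
For readers unfamiliar with what "tuning PID parameters" means in the HBO-PID abstract above, the following is a minimal sketch of a textbook discrete PID controller together with a scalar tracking cost that an optimizer could minimize over the gains. It does not implement the heteroscedastic Bayesian optimization itself. The plant (a 1-D double integrator), the function name `tracking_cost`, and all default values are assumptions made for illustration and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook discrete PID controller; (kp, ki, kd) are the tunable gains."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def step(self, error: float, dt: float) -> float:
        # Integrate the error and approximate its derivative by a finite difference.
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0  # no previous sample yet, so skip the derivative term
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def tracking_cost(kp: float, ki: float, kd: float,
                  steps: int = 500, dt: float = 0.01) -> float:
    """Mean absolute tracking error on a toy 1-D double integrator (hypothetical plant)."""
    pid = PID(kp, ki, kd)
    pos, vel, target = 0.0, 0.0, 1.0
    total = 0.0
    for _ in range(steps):
        error = target - pos
        total += abs(error)
        accel = pid.step(error, dt)  # control input is a commanded acceleration
        vel += accel * dt
        pos += vel * dt
    return total / steps
```

A parameter tuner would treat `tracking_cost` as a black-box objective and search over `(kp, ki, kd)`; in this toy setting, well-damped gains such as `(20.0, 0.0, 8.0)` give a lower cost than weak gains such as `(1.0, 0.0, 0.5)`.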

Efficient Event-based Semantic Segmentation with Spike-driven Lightweight Transformer-based Networks

Dec 17, 2024

Abstract:Event-based semantic segmentation has great potential in autonomous driving and robotics due to the advantages of event cameras, such as high dynamic range, low latency, and low power cost. Unfortunately, current artificial neural network (ANN)-based segmentation methods suffer from high computational demands, a requirement for image frames, and massive energy consumption, limiting their efficiency and application on resource-constrained edge/mobile platforms. To address these problems, we introduce SLTNet, a spike-driven lightweight transformer-based network designed for event-based semantic segmentation. Specifically, SLTNet is built on efficient spike-driven convolution blocks (SCBs) to extract rich semantic features while reducing the model's parameters. Then, to enhance long-range contextual feature interaction, we propose novel spike-driven transformer blocks (STBs) with binary mask operations. Based on these basic blocks, SLTNet employs a high-efficiency single-branch architecture while maintaining the low energy consumption of the Spiking Neural Network (SNN). Finally, extensive experiments on the DDD17 and DSEC-Semantic datasets demonstrate that SLTNet outperforms state-of-the-art (SOTA) SNN-based methods by at least 7.30% and 3.30% mIoU, respectively, with 5.48x lower energy consumption and 1.14x faster inference speed.
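
The abstract above reports its gains in mIoU (mean intersection over union). As a reminder of how that metric is commonly computed, here is a minimal sketch over flattened per-pixel labels. The function name `mean_iou` and the choice to skip classes that appear in neither prediction nor ground truth are assumptions; evaluation protocols for specific datasets may differ.

```python
from typing import Sequence


def mean_iou(pred: Sequence[int], target: Sequence[int], num_classes: int) -> float:
    """Average per-class IoU over classes present in the prediction or the ground truth."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    ious = []
    for c in range(num_classes):
        # Intersection: pixels labelled c in both; union: pixels labelled c in either.
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:
            ious.append(inter / union)
    if not ious:
        raise ValueError("no class is present in pred or target")
    return sum(ious) / len(ious)
```

For example, with predictions `[0, 0, 1, 1]` and labels `[0, 1, 1, 1]`, class 0 has IoU 1/2 and class 1 has IoU 2/3, so the mean is 7/12.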

A Novel Wide-Area Multiobject Detection System with High-Probability Region Searching

May 07, 2024

Abstract:In recent years, wide-area visual surveillance systems have been widely applied in various industrial and transportation scenarios. These systems, however, face significant challenges when implementing multi-object detection due to conflicts arising from the need for high-resolution imaging, efficient object searching, and accurate localization. To address these challenges, this paper presents a hybrid system that incorporates a wide-angle camera, a high-speed search camera, and a galvano-mirror. In this system, the wide-angle camera offers panoramic images as prior information, which helps the search camera capture detailed images of the targeted objects. This integrated approach enhances the overall efficiency and effectiveness of wide-area visual detection systems. Specifically, in this study, we introduce a wide-angle camera-based method to generate a panoramic probability map (PPM) for estimating high-probability regions of target object presence. Then, we propose a probability searching module that uses the PPM-generated prior information to dynamically adjust the sampling range and refine target coordinates based on uncertainty variance computed by the object detector. Finally, the integration of PPM and the probability searching module yields an efficient hybrid vision system capable of achieving 120 fps multi-object search and detection. Extensive experiments are conducted to verify the system's effectiveness and robustness.

* Accepted by ICRA 2024

MoMa-Pos: Where Should Mobile Manipulators Stand in Cluttered Environment Before Task Execution?

Mar 29, 2024

Abstract:Mobile manipulators always need to determine feasible base positions prior to carrying out navigation-manipulation tasks. Real-world environments are often cluttered with various furniture, obstacles, and dozens of other objects. Efficiently computing base positions poses a challenge. In this work, we introduce a framework named MoMa-Pos to address this issue. MoMa-Pos first learns to predict a small set of objects that, taken together, would be sufficient for finding base positions using a graph embedding architecture. MoMa-Pos then calculates standing positions by considering furniture structures, robot models, and obstacles comprehensively. We have extensively evaluated the proposed MoMa-Pos across different settings (e.g., environment and algorithm parameters) and with various mobile manipulators. Our empirical results show that MoMa-Pos demonstrates remarkable effectiveness and efficiency, surpassing the methods in the literature. Supplementary material can be found at https://yding25.com/MoMa-Pos.

EdgeVO: An Efficient and Accurate Edge-based Visual Odometry

Feb 19, 2023

Abstract:Visual odometry is important for plenty of applications such as autonomous vehicles and robot navigation. It is challenging to conduct visual odometry in textureless scenes or environments with sudden illumination changes, where popular feature-based methods or direct methods cannot work well. To address this challenge, some edge-based methods have been proposed, but they usually struggle to balance efficiency and accuracy. In this work, we propose a novel visual odometry approach called EdgeVO, which is accurate, efficient, and robust. By efficiently selecting a small set of edges with certain strategies, we significantly improve the computational efficiency without sacrificing accuracy. Compared to existing edge-based methods, our method can significantly reduce the computational complexity while maintaining similar accuracy or even achieving better accuracy. This is because our method removes useless or noisy edges. Experimental results on the TUM datasets indicate that EdgeVO significantly outperforms other methods in terms of efficiency, accuracy and robustness.

Location reference recognition from texts: A survey and comparison

Jul 04, 2022

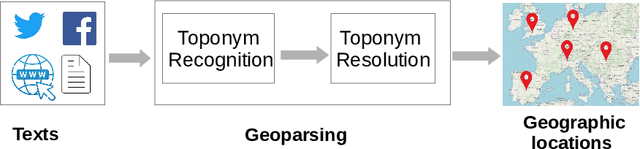

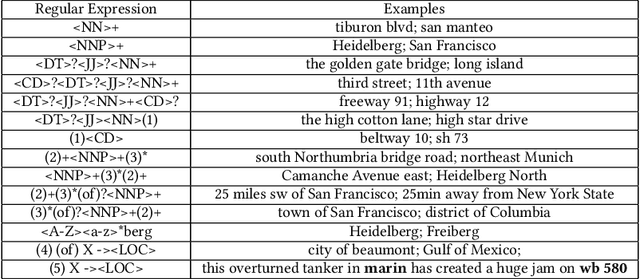

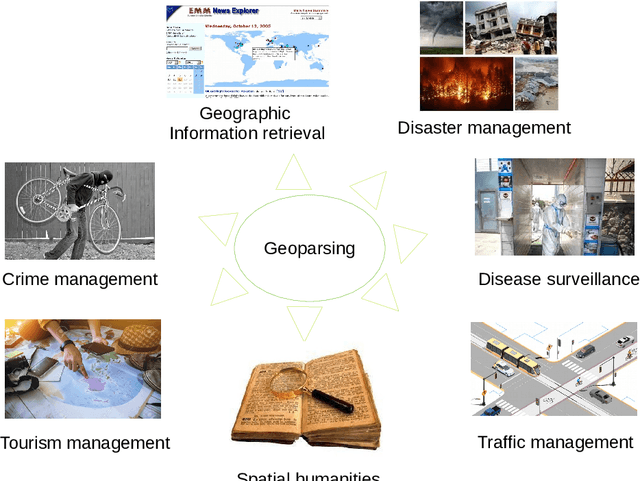

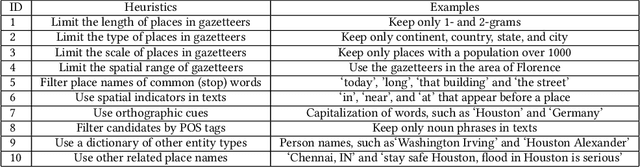

Abstract:A vast amount of location information exists in unstructured texts, such as social media posts, news stories, scientific articles, web pages, travel blogs, and historical archives. Geoparsing refers to the process of recognizing location references from texts and identifying their geospatial representations. While geoparsing can benefit many domains, a summary of the specific applications is still missing. Further, a comprehensive review and comparison of existing approaches for location reference recognition, which is the first and a core step of geoparsing, is lacking. To fill these research gaps, this review first summarizes seven typical application domains of geoparsing: geographic information retrieval, disaster management, disease surveillance, traffic management, spatial humanities, tourism management, and crime management. We then review existing approaches for location reference recognition by categorizing these approaches into four groups based on their underlying functional principle: rule-based, gazetteer matching-based, statistical learning-based, and hybrid approaches. Next, we thoroughly evaluate the correctness and computational efficiency of the 27 most widely used approaches for location reference recognition based on 26 public datasets with different types of texts (e.g., social media posts and news stories) containing 39,736 location references across the world. Results from this thorough evaluation can help inform future methodological developments for location reference recognition, and can help guide the selection of proper approaches based on application needs.

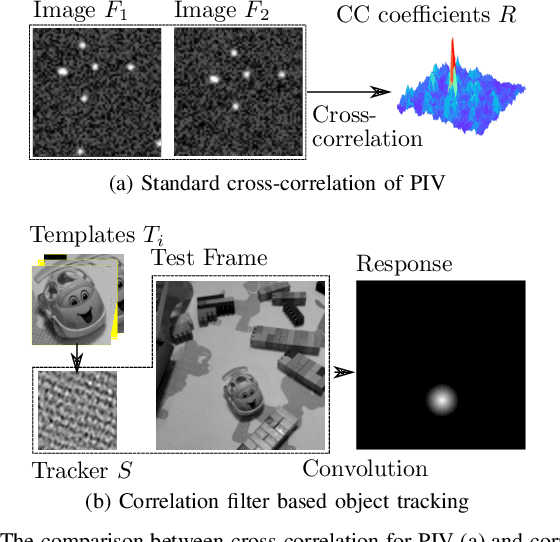

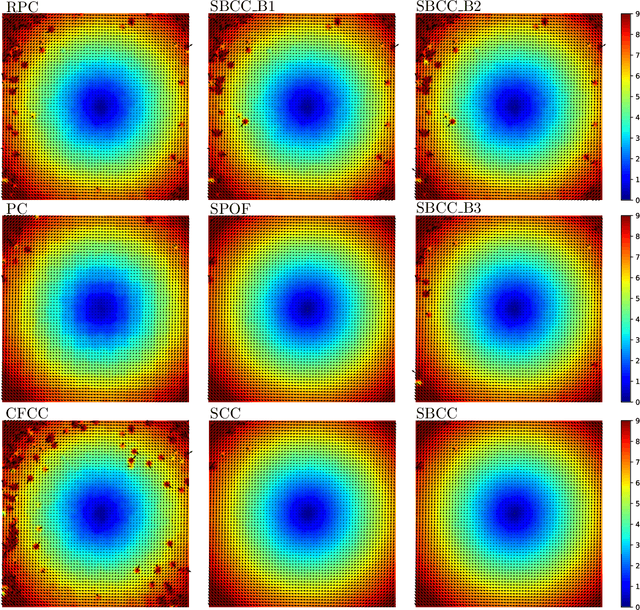

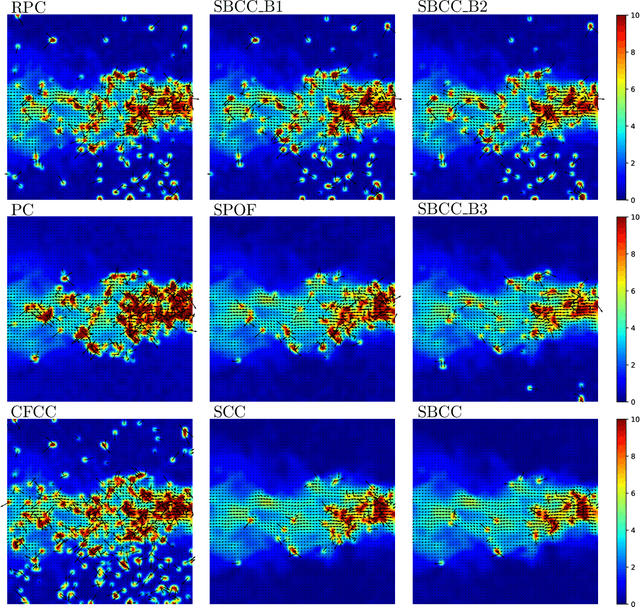

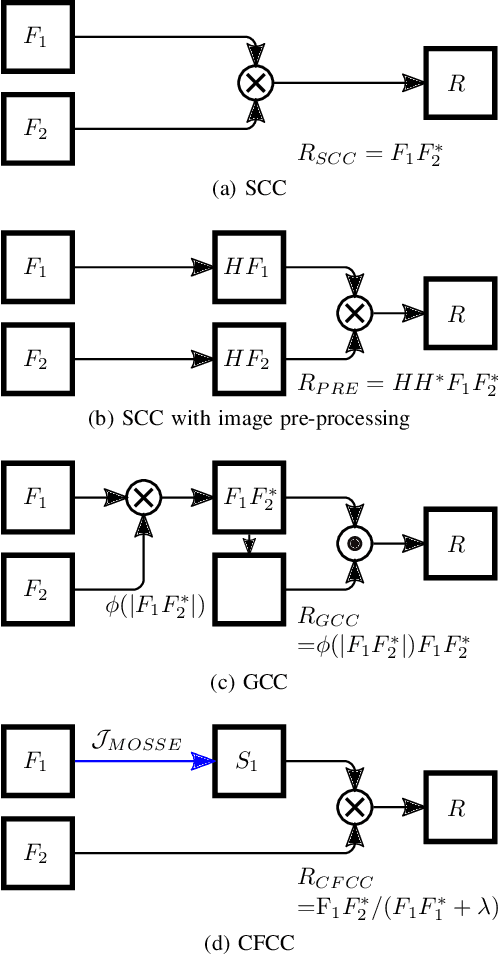

Surrogate-based cross-correlation for particle image velocimetry

Dec 10, 2021

Abstract:This paper presents a novel surrogate-based cross-correlation (SBCC) framework to improve the correlation performance between two image signals. The basic idea behind the SBCC is that an optimized surrogate filter/image, supplanting one original image, will produce a more robust and more accurate correlation signal. The cross-correlation estimation of the SBCC is formulated with an objective function composed of surrogate loss and correlation consistency loss. The closed-form solution provides an efficient estimation. To our surprise, the SBCC framework could provide an alternative view to explain a set of generalized cross-correlation (GCC) methods and comprehend the meaning of parameters. With the help of our SBCC framework, we further propose four new specific cross-correlation methods, and provide some suggestions for improving existing GCC methods. Notably, the SBCC could enhance the correlation robustness by incorporating other negative context images. Considering the sub-pixel accuracy and robustness requirement of particle image velocimetry (PIV), the contribution of each term in the objective function is investigated with particle images. Compared with the state-of-the-art baseline methods, the SBCC methods exhibit improved performance (accuracy and robustness) on the synthetic dataset and several challenging real experimental PIV cases.

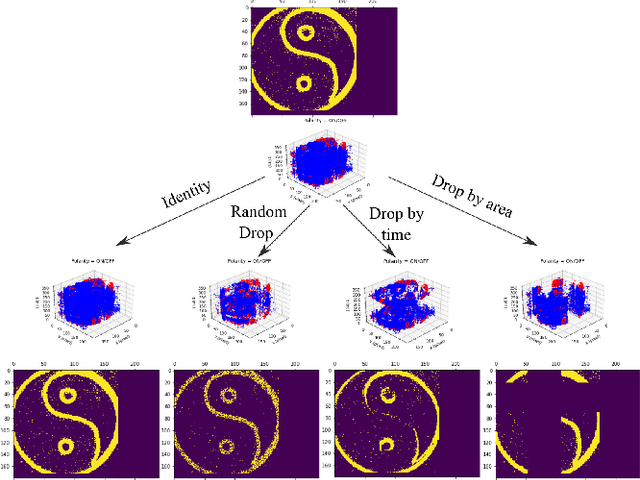

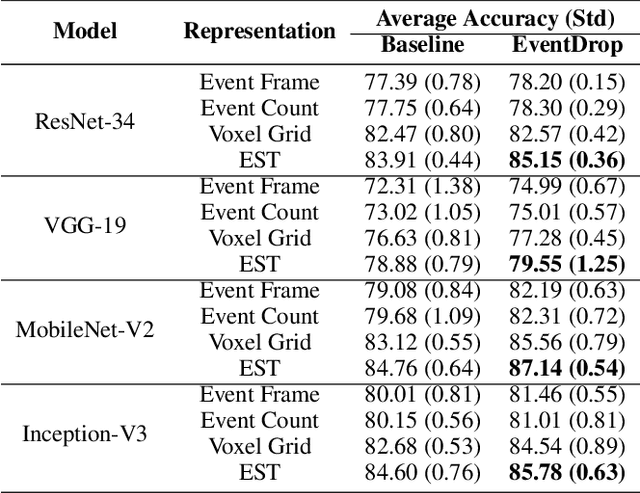

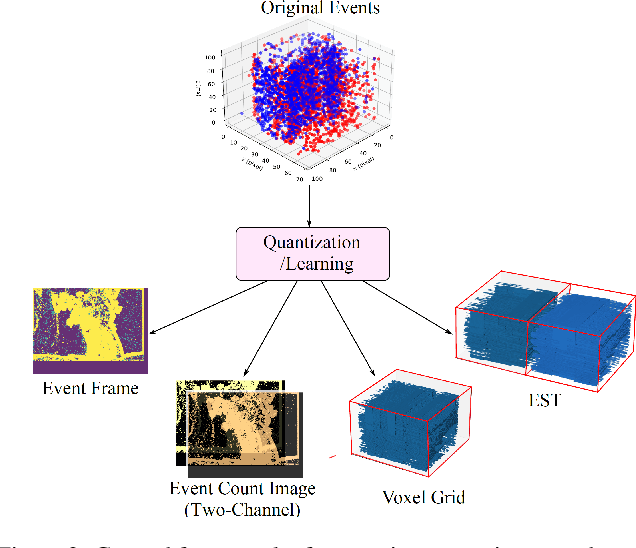

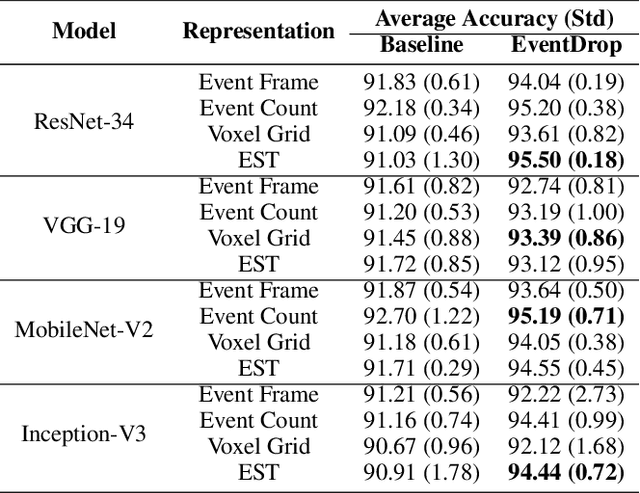

EventDrop: data augmentation for event-based learning

Jun 07, 2021

Abstract:The advantages of event-sensing over conventional sensors (e.g., higher dynamic range, lower time latency, and lower power consumption) have spurred research into machine learning for event data. Unsurprisingly, deep learning has emerged as a competitive methodology for learning with event sensors; in typical setups, discrete and asynchronous events are first converted into frame-like tensors on which standard deep networks can be applied. However, over-fitting remains a challenge, particularly since event datasets remain small relative to conventional datasets (e.g., ImageNet). In this paper, we introduce EventDrop, a new method for augmenting asynchronous event data to improve the generalization of deep models. By dropping events selected with various strategies, we are able to increase the diversity of training data (e.g., to simulate various levels of occlusion). From a practical perspective, EventDrop is simple to implement and computationally low-cost. Experiments on two event datasets (N-Caltech101 and N-Cars) demonstrate that EventDrop can significantly improve the generalization performance across a variety of deep networks.
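
To make "dropping events selected with various strategies" in the abstract above concrete, here is a minimal sketch of three simple dropping rules: random, by time window, and by image area. The abstract does not spell out the strategies, so the function names, the `(x, y, timestamp, polarity)` event layout, and the parameters are assumptions for illustration rather than the authors' implementation.

```python
import random
from typing import List, Tuple

# Assumed event layout: (x, y, timestamp, polarity).
Event = Tuple[int, int, float, int]


def random_drop(events: List[Event], ratio: float, rng: random.Random) -> List[Event]:
    """Drop each event independently with probability `ratio`."""
    return [e for e in events if rng.random() >= ratio]


def drop_by_time(events: List[Event], start: float, end: float) -> List[Event]:
    """Drop every event whose timestamp falls in the half-open window [start, end)."""
    return [e for e in events if not (start <= e[2] < end)]


def drop_by_area(events: List[Event], x0: int, y0: int, x1: int, y1: int) -> List[Event]:
    """Drop every event inside the rectangle [x0, x1) x [y0, y1), mimicking an occlusion."""
    return [e for e in events if not (x0 <= e[0] < x1 and y0 <= e[1] < y1)]
```

Because each function returns a new list and leaves the input untouched, the rules can be chained or applied at random per training sample before the events are converted into a frame-like tensor.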

Fast and Reliable WiFi Fingerprint Collection for Indoor Localization

Aug 01, 2020

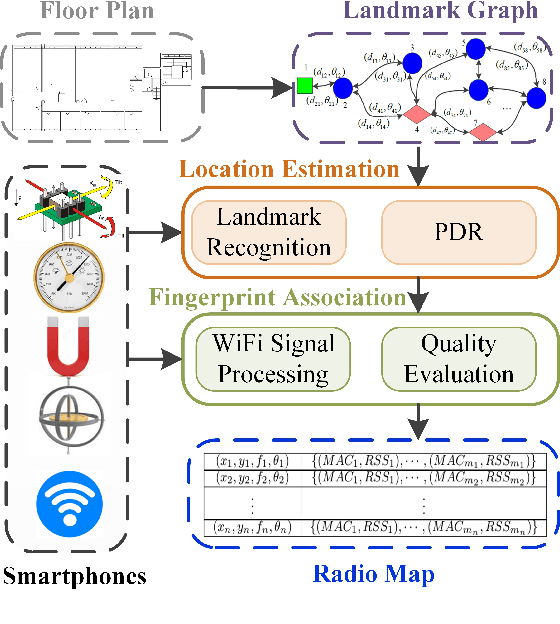

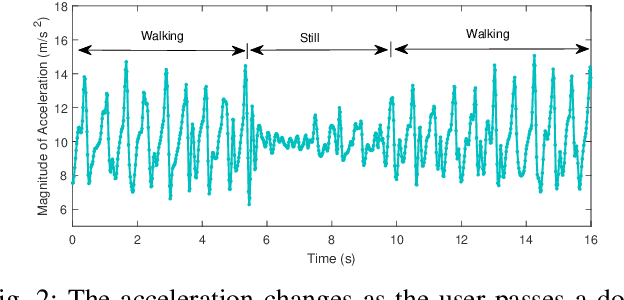

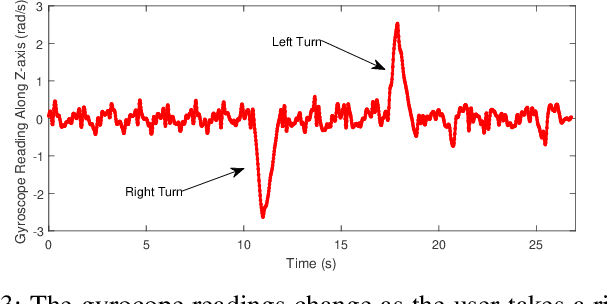

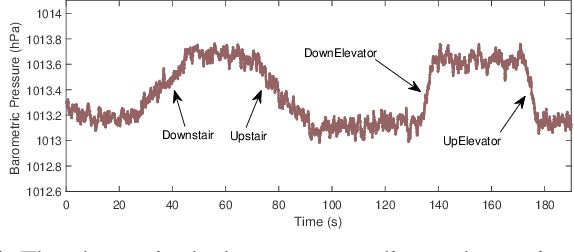

Abstract:Fingerprinting is a popular indoor localization technique since it can utilize existing infrastructures (e.g., access points). However, its site survey process is a labor-intensive and time-consuming task, which limits the application of such systems in practice. In this paper, motivated by the availability of advanced sensing capabilities in smartphones, we propose a fast and reliable fingerprint collection method to reduce the time and labor required for site survey. The proposed method uses a landmark graph-based method to automatically associate the collected fingerprints, which does not require active user participation. We show that, besides fast fingerprint data collection, the proposed method yields accurate location estimates compared to state-of-the-art methods. Experimental results show that the proposed method is an order of magnitude faster than the manual fingerprint collection method, and using the radio map generated by our method achieves a much better accuracy compared to the existing methods.
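
As background for the abstract above, the sketch below shows how a collected radio map is typically used once it exists: a query scan is matched to the k nearest stored fingerprints in signal space and their positions are averaged. This is the generic k-nearest-neighbour baseline, not the landmark graph-based collection method the paper proposes. The type aliases, the `MISSING_RSSI` floor of -100 dBm, and the function names are assumptions for illustration.

```python
import math
from typing import Dict, List, Tuple

Fingerprint = Dict[str, float]                 # access-point id -> RSSI in dBm
Position = Tuple[float, float]
RadioMap = List[Tuple[Position, Fingerprint]]  # surveyed positions with their fingerprints

MISSING_RSSI = -100.0  # assumed value for access points not heard in a scan


def signal_distance(a: Fingerprint, b: Fingerprint) -> float:
    """Euclidean distance in RSSI space over the union of observed access points."""
    aps = set(a) | set(b)
    return math.sqrt(sum((a.get(ap, MISSING_RSSI) - b.get(ap, MISSING_RSSI)) ** 2
                         for ap in aps))


def locate(radio_map: RadioMap, query: Fingerprint, k: int = 3) -> Position:
    """Estimate a position as the mean of the k closest surveyed positions."""
    if not radio_map or k < 1:
        raise ValueError("radio_map must be non-empty and k must be at least 1")
    nearest = sorted(radio_map, key=lambda entry: signal_distance(entry[1], query))[:k]
    x = sum(pos[0] for pos, _ in nearest) / len(nearest)
    y = sum(pos[1] for pos, _ in nearest) / len(nearest)
    return (x, y)
```

The quality of such an estimate depends directly on how dense and how correctly labelled the radio map is, which is why reducing the cost of the site survey matters.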
