Danica Kragic

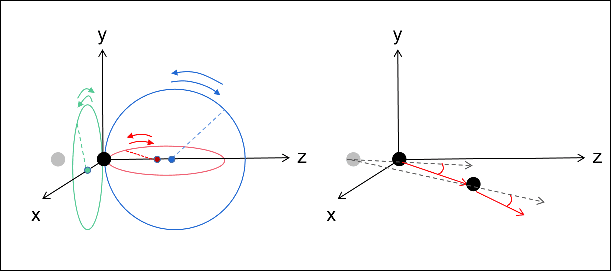

Learning Deep Neural Policies with Stability Guarantees

Mar 30, 2021

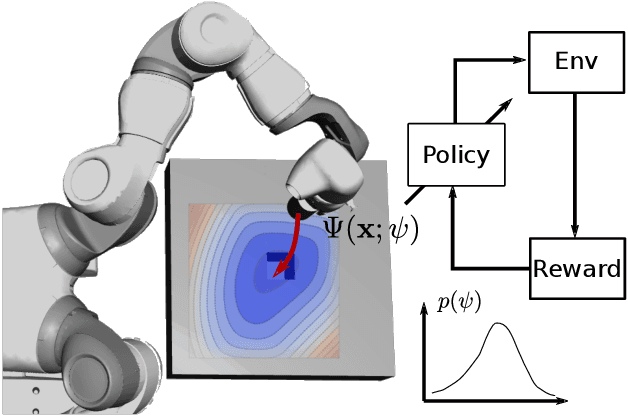

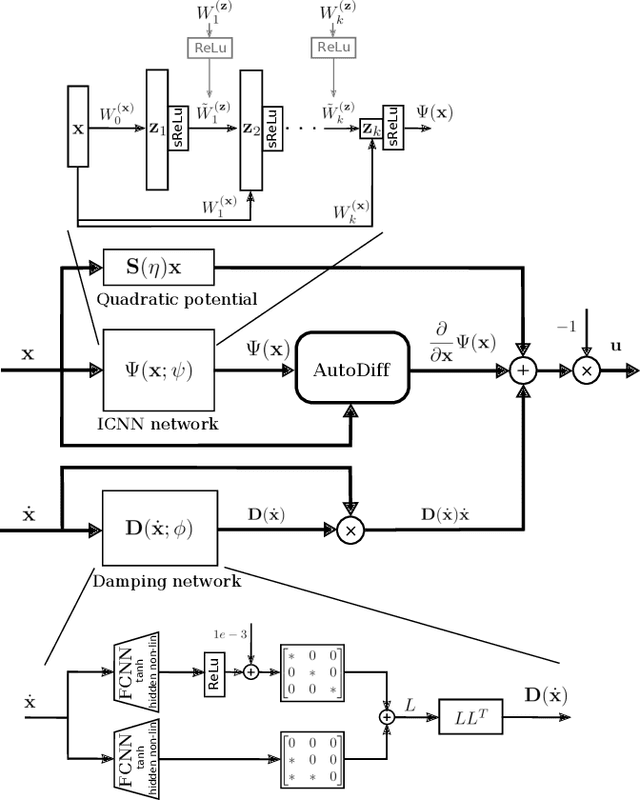

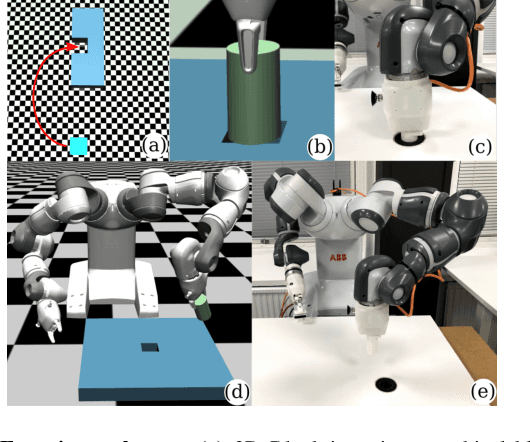

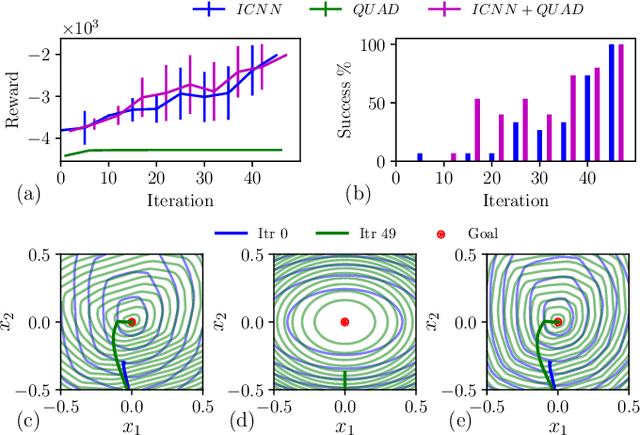

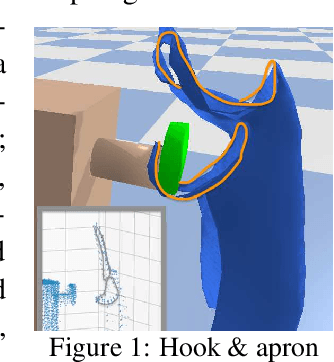

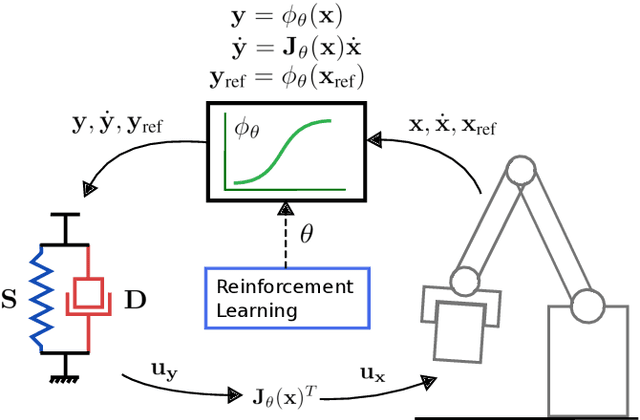

Abstract: Reinforcement learning (RL) has been successfully used to solve various robotic control tasks. However, most existing works do not address the issue of control stability. This is in sharp contrast to the control theory community, where the well-established norm is to prove stability whenever a control law is synthesized. What makes guaranteeing stability during RL difficult is threefold: non-interpretable neural network policies, unknown system dynamics, and random exploration. We contribute towards solving the stable RL problem in the context of robotic manipulation that may involve physical contact with the environment. Our solution is derived from a physics-based prior that originates from Lagrangian mechanics and does not involve learning any dynamics model. We show how to parameterize the resulting $\textit{energy shaping}$ policy as a deep neural network that consists of a convex potential function and a velocity-dependent damping component. Our experiments, which include a real-world peg insertion task by a 7-DOF robot, validate the proposed policy structure and demonstrate the benefits of stability in RL.
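The policy structure named in this abstract (a convex potential plus velocity-dependent damping) can be illustrated with a minimal sketch. This is not the authors' implementation: the quadratic potential stands in for the learned convex network, and the unit point mass, the gains, and all function names are assumptions made for illustration only.

```python
import math

def potential_gradient(x, goal, stiffness=4.0):
    """Gradient of the convex potential 0.5 * stiffness * ||x - goal||^2.
    Assumption: a quadratic stands in for a learned convex network."""
    return [stiffness * (xi - gi) for xi, gi in zip(x, goal)]

def damping_gain(v, d0=2.0, d1=0.5):
    """Hypothetical velocity-dependent damping gain, positive for every velocity."""
    return d0 + d1 * sum(vi * vi for vi in v)

def energy_shaping_action(x, v, goal):
    """Control law u = -grad(potential)(x) - damping(v) * v."""
    grad = potential_gradient(x, goal)
    d = damping_gain(v)
    return [-g - d * vi for g, vi in zip(grad, v)]

def simulate(x0, goal, dt=0.01, steps=2000):
    """Roll out a unit point mass under the policy with semi-implicit Euler."""
    x, v = list(x0), [0.0] * len(x0)
    for _ in range(steps):
        u = energy_shaping_action(x, v, goal)
        v = [vi + dt * ui for vi, ui in zip(v, u)]  # unit mass: acceleration = u
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x, v

goal = [0.0, 0.0]
final_x, final_v = simulate(x0=[1.0, -0.5], goal=goal)
distance_to_goal = math.sqrt(sum((xi - gi) ** 2 for xi, gi in zip(final_x, goal)))
print(distance_to_goal)
```

Because the potential has a single minimum at the goal and the damping term always removes kinetic energy, the simulated mass settles at the goal from any start state in this toy setting.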

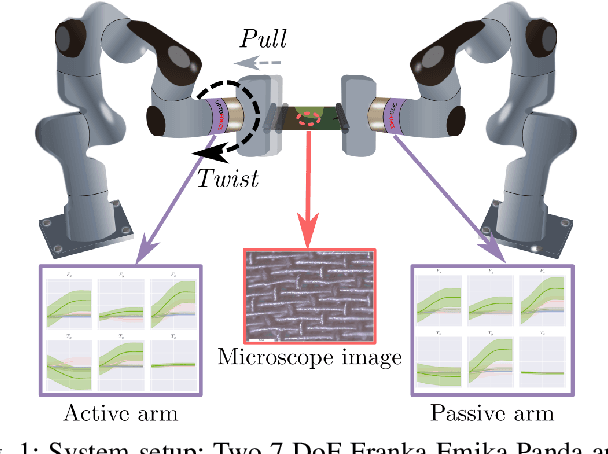

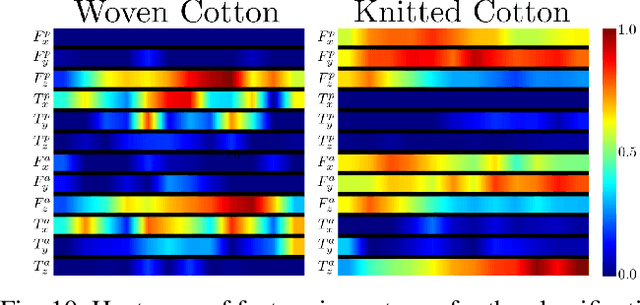

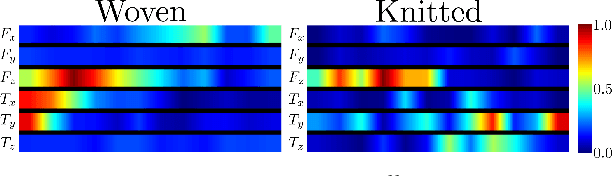

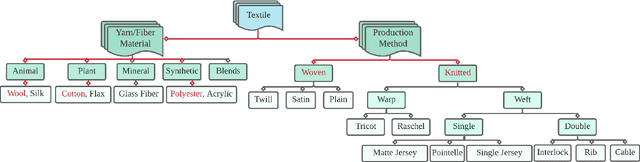

Textile Taxonomy and Classification Using Pulling and Twisting

Mar 17, 2021

Abstract: Identification of textile properties is an important milestone toward advanced robotic manipulation tasks that consider interaction with clothing items, such as assisted dressing, laundry folding, automated sewing, and textile recycling and reuse. Despite the abundance of work considering this class of deformable objects, many open problems remain. These relate to the choice and modelling of the sensory feedback as well as the control and planning of the interaction and manipulation strategies. Most importantly, there is no structured approach for studying and assessing different approaches that may bridge the gap between the robotics community and the textile production industry. To this end, we outline a textile taxonomy considering fiber types and production methods commonly used in the textile industry. We devise datasets according to the taxonomy, and study how robotic actions, such as pulling and twisting of the textile samples, can be used for classification. We also provide important insights from the perspective of visualization and interpretability of the gathered data.

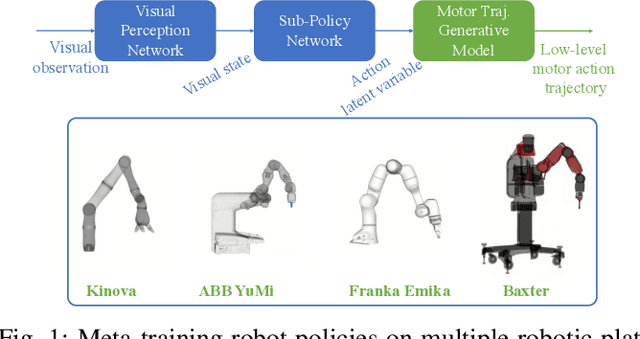

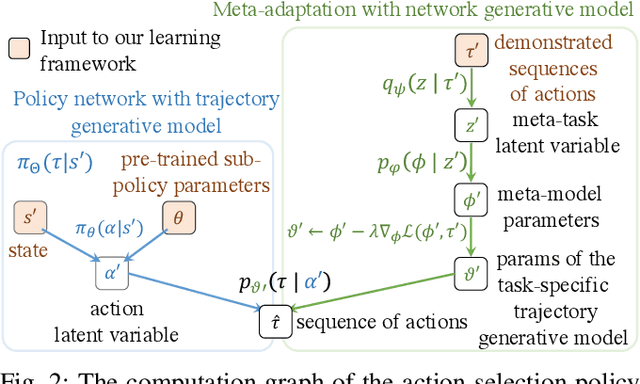

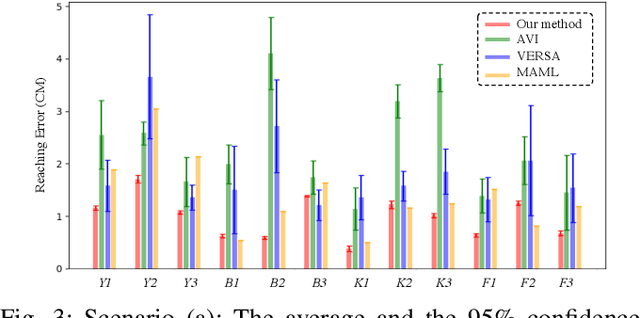

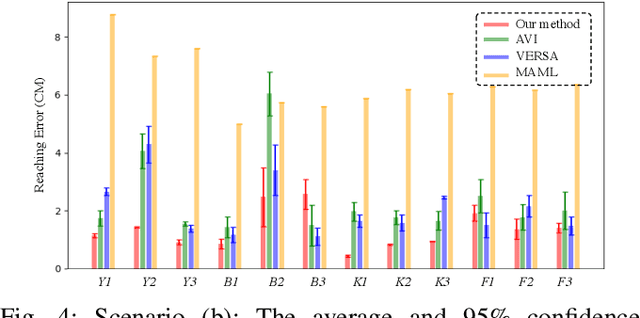

Bayesian Meta-Learning for Few-Shot Policy Adaptation Across Robotic Platforms

Mar 05, 2021

Abstract: Reinforcement learning methods can achieve significant performance but require a large amount of training data collected on the same robotic platform. A policy trained with expensive data is rendered useless after even a minor change to the robot hardware. In this paper, we address the challenging problem of adapting a policy, trained to perform a task, to a novel robotic hardware platform given only a few demonstrations of robot motion trajectories on the target robot. We formulate it as a few-shot meta-learning problem where the goal is to find a meta-model that captures the common structure shared across different robotic platforms such that data-efficient adaptation can be performed. We achieve such adaptation by introducing a learning framework consisting of a probabilistic gradient-based meta-learning algorithm that models the uncertainty arising from the few-shot setting with a low-dimensional latent variable. We experimentally evaluate our framework on a simulated reaching task and a real-robot picking task using 400 simulated robots generated by varying the physical parameters of an existing set of robotic platforms. Our results show that the proposed method can successfully adapt a trained policy to different robotic platforms with novel physical parameters, and demonstrate the superiority of our meta-learning algorithm over state-of-the-art methods for the introduced few-shot policy adaptation problem.

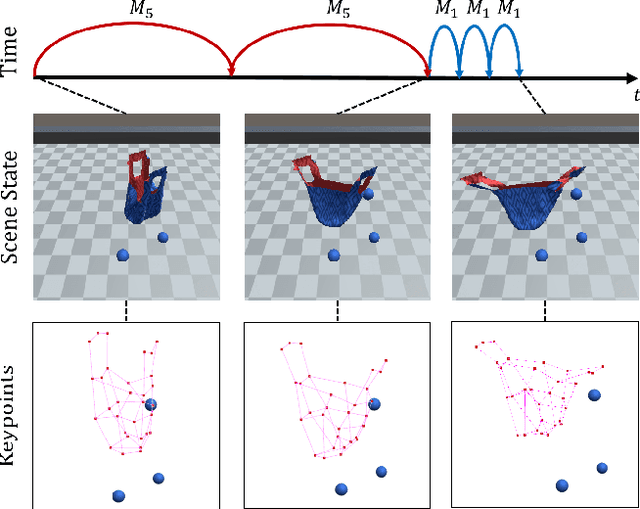

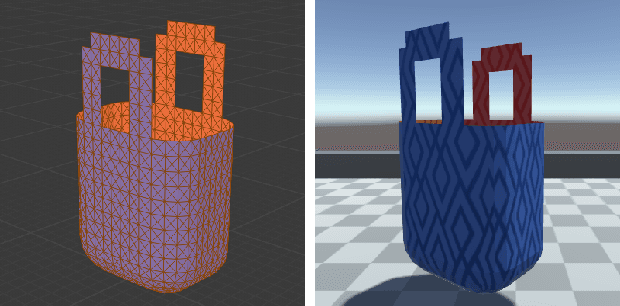

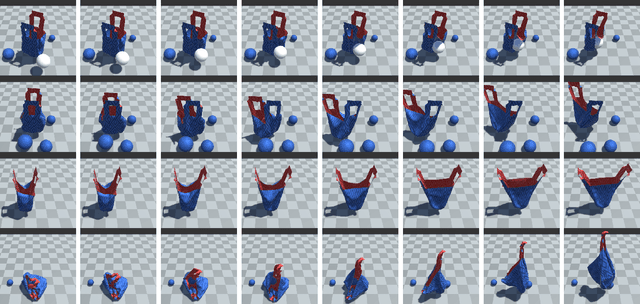

Graph-based Task-specific Prediction Models for Interactions between Deformable and Rigid Objects

Mar 04, 2021

Abstract: Capturing scene dynamics and predicting the future scene state is challenging but essential for robotic manipulation tasks, especially when the scene contains both rigid and deformable objects. In this work, we contribute a simulation environment and generate a novel dataset for task-specific manipulation, involving interactions between rigid objects and a deformable bag. The dataset incorporates a rich variety of scenarios including different object sizes, object numbers and manipulation actions. We approach dynamics learning by proposing an object-centric graph representation and two modules, the Active Prediction Module (APM) and the Position Prediction Module (PPM), based on graph neural networks with an encode-process-decode architecture. At the inference stage, we build a two-stage model based on the learned modules for single time step prediction. We combine modules with different prediction horizons into a mixed-horizon model which addresses long-term prediction. In an ablation study, we show the benefits of the two-stage model for single time step prediction and the effectiveness of the mixed-horizon model for long-term prediction tasks. Supplementary material is available at https://github.com/wengzehang/deformable_rigid_interaction_prediction

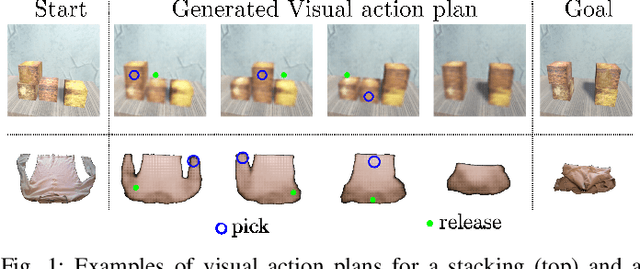

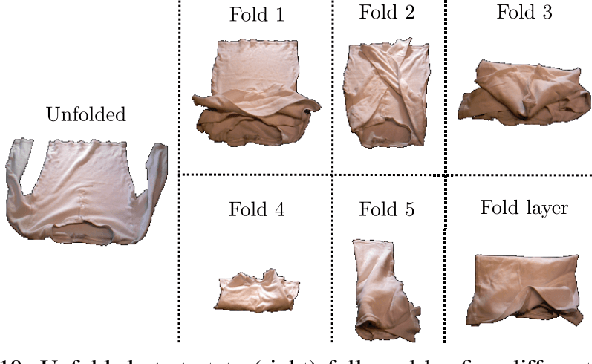

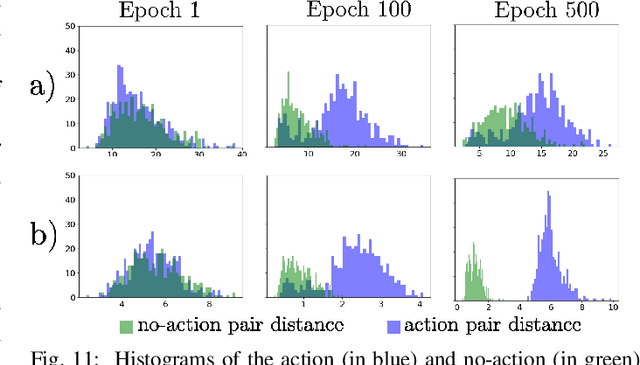

Enabling Visual Action Planning for Object Manipulation through Latent Space Roadmap

Mar 03, 2021

Abstract: We present a framework for visual action planning of complex manipulation tasks with high-dimensional state spaces, focusing on manipulation of deformable objects. We propose a Latent Space Roadmap (LSR) for task planning, a graph-based structure that globally captures the system dynamics in a low-dimensional latent space. Our framework consists of three parts: (1) a Mapping Module (MM) that maps observations, given in the form of images, into a structured latent space, extracting the respective states, and generates observations from the latent states, (2) the LSR, which builds and connects clusters containing similar states in order to find the latent plans between start and goal states extracted by the MM, and (3) the Action Proposal Module, which complements the latent plan found by the LSR with the corresponding actions. We present a thorough investigation of our framework on two simulated box stacking tasks and a folding task executed on a real robot.
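The roadmap idea in this abstract (clusters of similar latent states connected into a graph that is searched for a plan) can be sketched as follows. This is a simplified illustration, not the authors' algorithm: the cluster labels are assumed to be given, edges are assumed undirected, breadth-first search is a stand-in planner, and every name and data value is hypothetical.

```python
from collections import deque

def build_roadmap(cluster_of, transitions):
    """Connect two clusters when some observed transition links their states.
    Assumption: transitions are treated as reversible (undirected edges)."""
    roadmap = {c: set() for c in set(cluster_of.values())}
    for src, dst in transitions:
        a, b = cluster_of[src], cluster_of[dst]
        if a != b:
            roadmap[a].add(b)
            roadmap[b].add(a)
    return roadmap

def plan(roadmap, start, goal):
    """Breadth-first search for a shortest cluster sequence from start to goal."""
    queue, parent = deque([start]), {start: None}
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in sorted(roadmap[node]):
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    return None  # goal cluster is unreachable from the start cluster

# Hypothetical latent states, already assigned to clusters A..D.
cluster_of = {"s0": "A", "s1": "A", "s2": "B", "s3": "C", "s4": "D"}
transitions = [("s0", "s2"), ("s1", "s2"), ("s2", "s3"), ("s3", "s4")]

roadmap = build_roadmap(cluster_of, transitions)
latent_plan = plan(roadmap, "A", "D")
print(latent_plan)
```

In the framework described above, the start and goal clusters would come from encoding the start and goal images, and a separate module would attach actions to each step of the returned plan.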

Interpretability in Contact-Rich Manipulation via Kinodynamic Images

Feb 23, 2021

Abstract: Deep Neural Networks (NNs) have been widely utilized in contact-rich manipulation tasks to model the complicated contact dynamics. However, NN-based models are often difficult to decipher, which can lead to seemingly inexplicable behaviors and unidentifiable failure cases. In this work, we address the interpretability of NN-based models by introducing kinodynamic images. We propose a methodology that creates images from the kinematic and dynamic data of a contact-rich manipulation task. Our formulation visually reflects the task's state by encoding its kinodynamic variations and temporal evolution. By using images as the state representation, we enable the application of interpretability modules that were previously limited to vision-based tasks. We use this representation to train Convolution-based Networks and we extract interpretations of the model's decisions with Grad-CAM, a technique that produces visual explanations. Our method is versatile and can be applied to any classification problem using synchronous features in manipulation to visually interpret which parts of the input drive the model's decisions and distinguish its failure modes. We evaluate this approach on two examples of real-world contact-rich manipulation: pushing and cutting, with known and unknown objects. Finally, we demonstrate that our method enables both detailed visual inspections of sequences in a task and high-level evaluations of a model's behavior and tendencies. Data and code for this work are available at https://github.com/imitsioni/interpretable_manipulation.
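One way to picture turning synchronous kinematic and dynamic signals into an image is shown below. The abstract does not specify the encoding, so this is only an assumed layout: one row per feature, one column per time step, with per-row min-max scaling. The signal names and values are hypothetical.

```python
def to_image(signals):
    """Scale each feature row to [0, 1]; rows are features, columns are time steps.
    Assumption: per-row min-max scaling; a constant row maps to all zeros."""
    image = []
    for row in signals:
        lo, hi = min(row), max(row)
        span = hi - lo
        image.append([(v - lo) / span if span > 0 else 0.0 for v in row])
    return image

signals = [
    [0.0, 0.1, 0.2, 0.4],  # hypothetical end-effector position (m)
    [0.0, 2.0, 4.0, 2.0],  # hypothetical contact force (N)
]
image = to_image(signals)
for row in image:
    print(row)
```

An array of this shape can be fed to a convolutional classifier, so that a saliency method such as Grad-CAM highlights which features and time steps influenced a decision.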

Sequential Topological Representations for Predictive Models of Deformable Objects

Nov 23, 2020

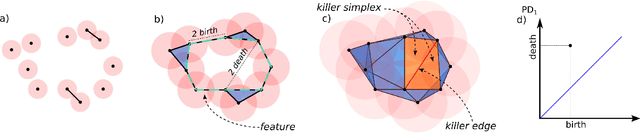

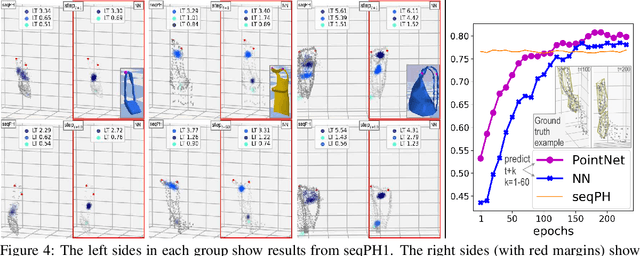

Abstract: Deformable objects present a formidable challenge for robotic manipulation due to the lack of canonical low-dimensional representations and the difficulty of capturing, predicting, and controlling such objects. We construct compact topological representations to capture the state of highly deformable objects that are topologically nontrivial. We develop an approach that tracks the evolution of this topological state through time. Under several mild assumptions, we prove that the topology of the scene and its evolution can be recovered from point clouds representing the scene. Our further contribution is a method to learn predictive models that take a sequence of past point cloud observations as input and predict a sequence of topological states, conditioned on target/future control actions. Our experiments with highly deformable objects in simulation show that the proposed multistep predictive models yield more precise results than those obtained from computational topology libraries. These models can leverage patterns inferred across various objects and offer fast multistep predictions suitable for real-time applications.

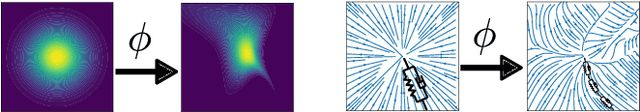

Learning Stable Normalizing-Flow Control for Robotic Manipulation

Oct 30, 2020

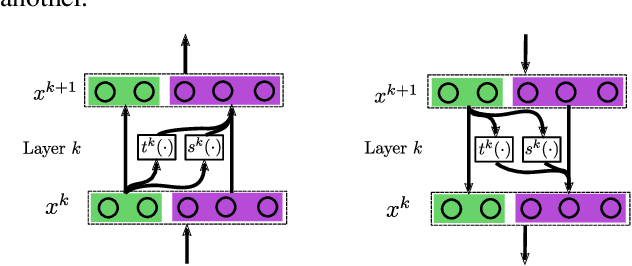

Abstract: Reinforcement Learning (RL) of robotic manipulation skills, despite its impressive successes, stands to benefit from incorporating domain knowledge from control theory. One of the most important properties of interest is control stability. Ideally, one would like to achieve stability guarantees while staying within the framework of state-of-the-art deep RL algorithms. Such a solution does not exist in general, especially one that scales to complex manipulation tasks. We contribute towards closing this gap by introducing a $\textit{normalizing-flow}$ control structure that can be deployed in any recent deep RL algorithm. While stable exploration is not guaranteed, our method is designed to ultimately produce deterministic controllers with provable stability. In addition to demonstrating our method on challenging contact-rich manipulation tasks, we also show that it is possible to achieve considerable exploration efficiency (reduced state space coverage and actuation effort) without losing learning efficiency.

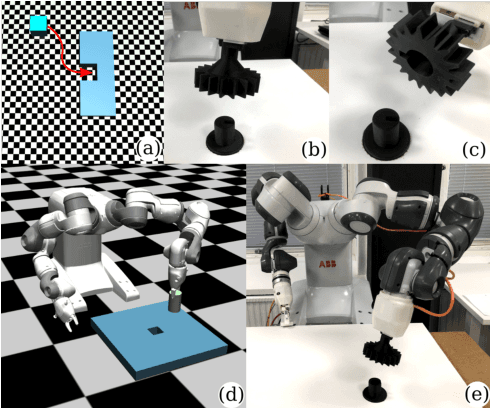

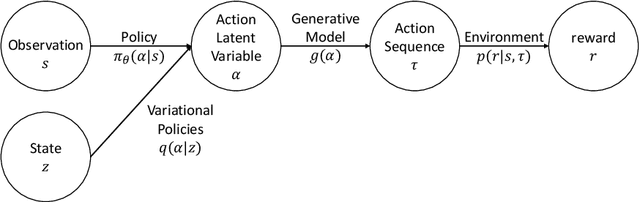

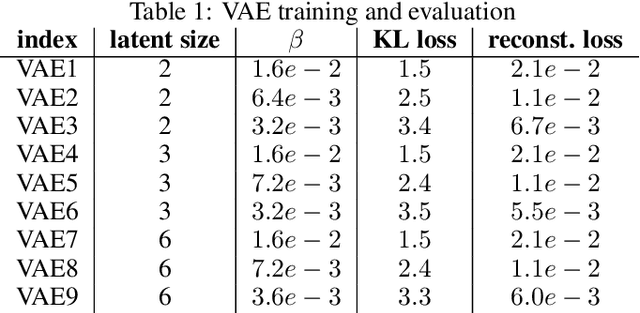

Data-efficient visuomotor policy training using reinforcement learning and generative models

Jul 26, 2020

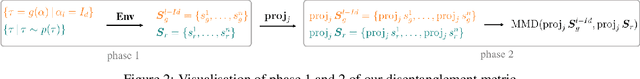

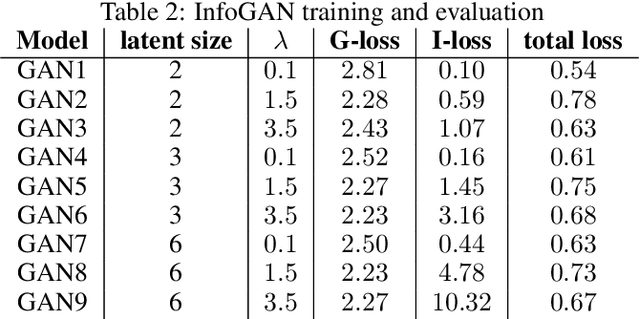

Abstract: We present a data-efficient framework for solving deep visuomotor sequential decision-making problems which exploits the combination of reinforcement learning (RL) with latent variable generative models. Our framework trains deep visuomotor policies by introducing an action latent variable such that the feed-forward policy search can be divided into two parts: (1) training a sub-policy that outputs a distribution over the action latent variable given a state of the system, and (2) training a generative model that outputs a sequence of motor actions given a latent action representation. Our approach enables safe exploration and alleviates the data-inefficiency problem as it exploits prior knowledge about valid sequences of motor actions. Moreover, by evaluating the quality of the generative models, we are able to predict the performance of the RL policy training prior to the actual training on the physical robot. We achieve this by defining two novel measures, disentanglement and local linearity, for assessing the quality of generative models' latent spaces, and complementing them with existing measures for the evaluation of generative models. We demonstrate the efficiency of our approach on a picking task using several different generative models and determine which of their properties have the most influence on the final policy training.

Human-centered collaborative robots with deep reinforcement learning

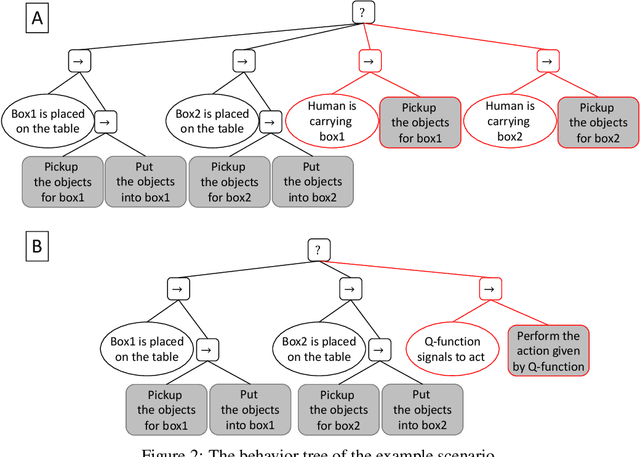

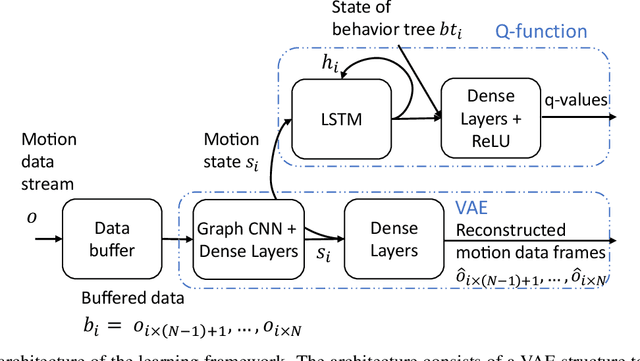

Jul 02, 2020

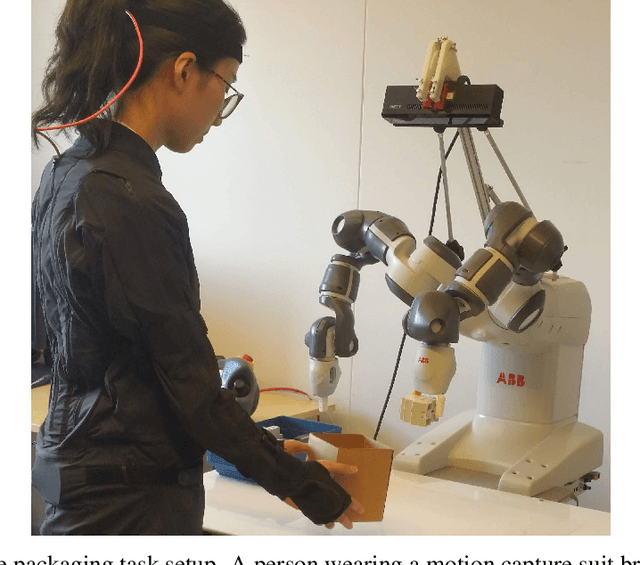

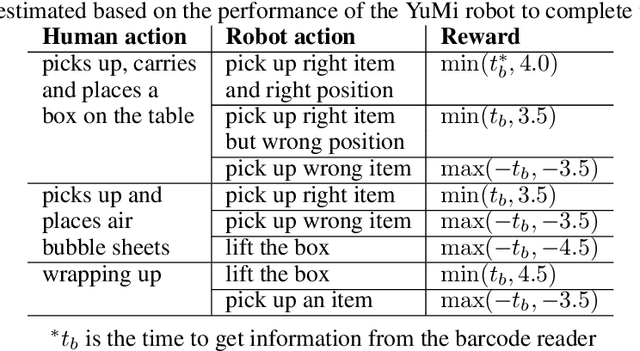

Abstract: We present a reinforcement-learning-based framework for human-centered collaborative systems. The framework is proactive and balances the benefits of timely actions with the risk of taking improper actions by minimizing the total time spent to complete the task. The framework is learned end-to-end in an unsupervised fashion, addressing perception uncertainties and decision making in an integrated manner. On an example packaging task, the framework is shown to provide more fluent coordination between human and robot partners than alternatives in which the perception and decision-making systems are learned independently using supervised learning. The foremost benefit of the proposed approach is that it allows for fast adaptation to new human partners and tasks, since tedious annotation of motion data is avoided and the learning is performed online.
