VicTR: Video-conditioned Text Representations for Activity Recognition

Apr 05, 2023 · Kumara Kahatapitiya, Anurag Arnab, Arsha Nagrani, Michael S. Ryoo

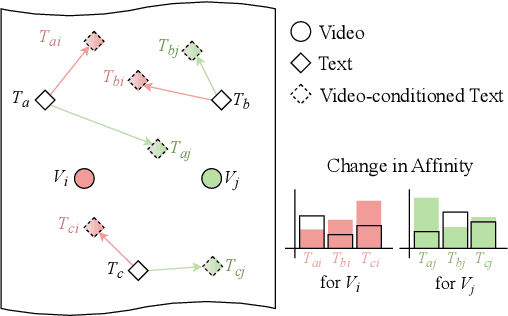

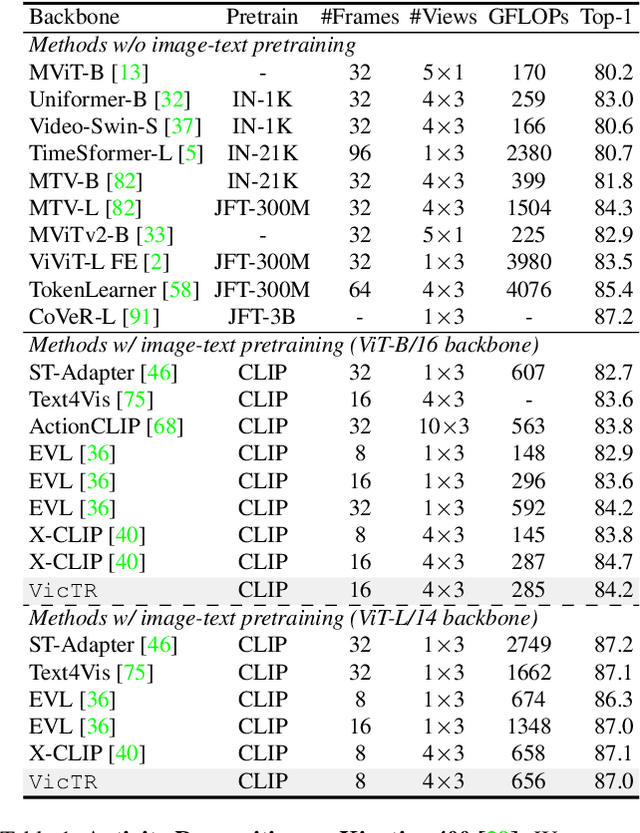

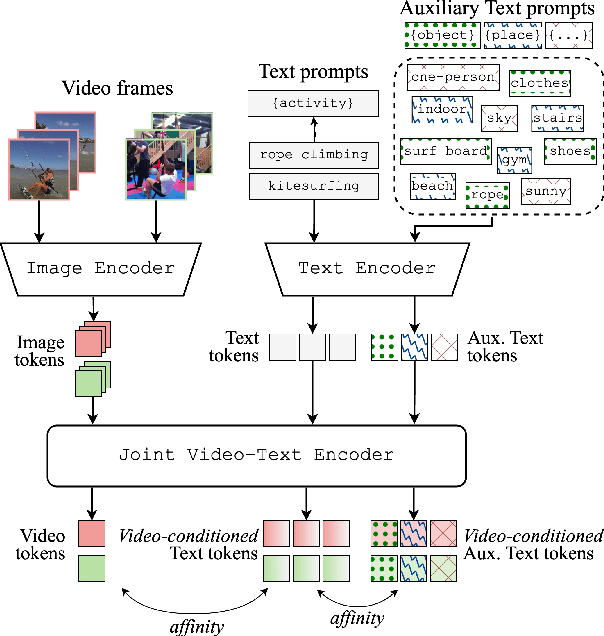

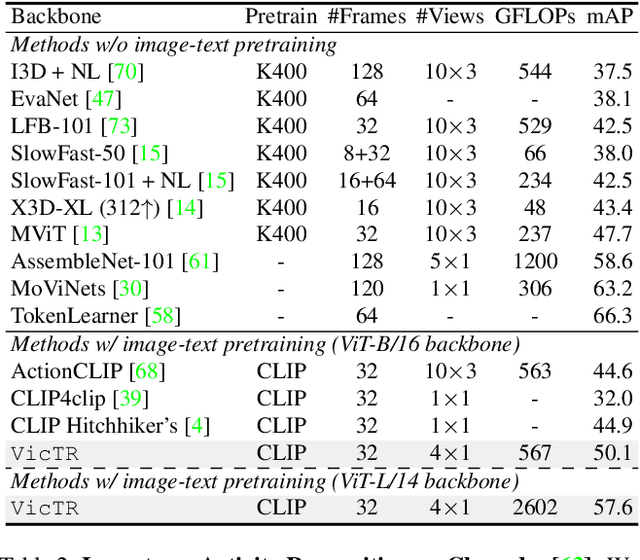

Vision-language models have shown strong performance in the image domain, even in zero-shot settings, thanks to the availability of large amounts of pretraining data (i.e., paired image-text examples). However, for videos, such paired data is not as abundant. Thus, video-text models are usually designed by adapting pretrained image-text models to the video domain instead of training from scratch. All such recipes rely on augmenting visual embeddings with temporal information (i.e., image -> video), often keeping text embeddings unchanged or even discarding them. In this paper, we argue that such adapted video-text models can benefit more from augmenting text rather than visual information. We propose VicTR, which jointly optimizes text and video tokens, generating 'Video-conditioned Text' embeddings. Our method can further make use of freely available semantic information, in the form of visually grounded auxiliary text (e.g., object or scene information). We conduct experiments on multiple benchmarks including supervised (Kinetics-400, Charades), zero-shot and few-shot (HMDB-51, UCF-101) settings, showing competitive performance on activity recognition based on video-text models.

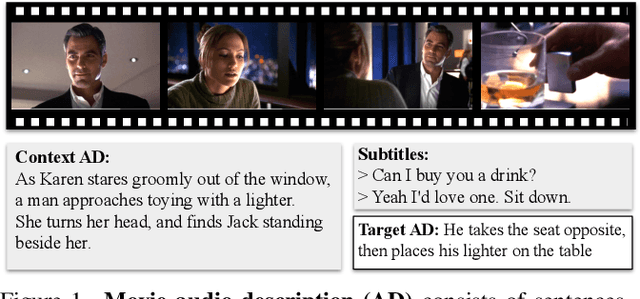

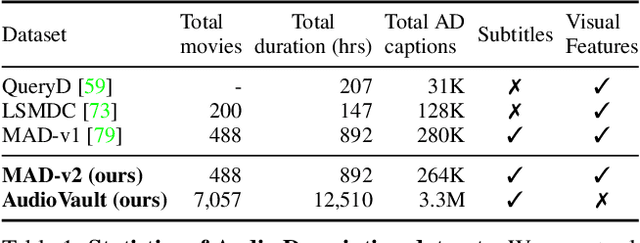

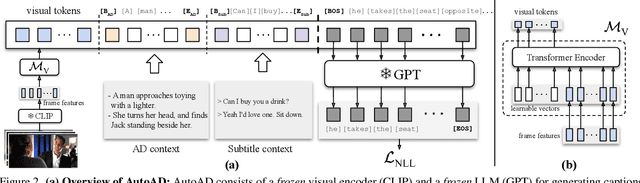

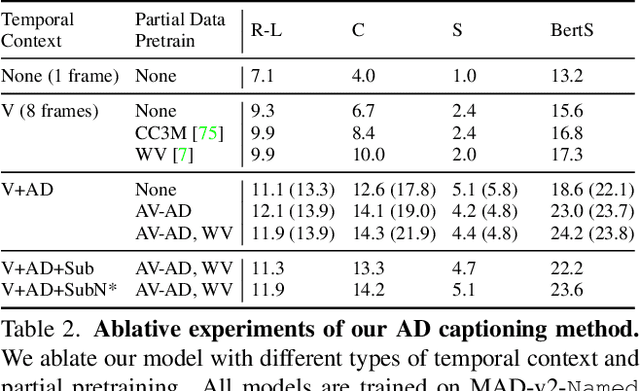

AutoAD: Movie Description in Context

Mar 29, 2023 · Tengda Han, Max Bain, Arsha Nagrani, Gül Varol, Weidi Xie, Andrew Zisserman

The objective of this paper is an automatic Audio Description (AD) model that ingests movies and outputs AD in text form. Generating high-quality movie AD is challenging due to the dependency of the descriptions on context, and the limited amount of training data available. In this work, we leverage the power of pretrained foundation models, such as GPT and CLIP, and only train a mapping network that bridges the two models for visually-conditioned text generation. In order to obtain high-quality AD, we make the following four contributions: (i) we incorporate context from the movie clip, AD from previous clips, as well as the subtitles; (ii) we address the lack of training data by pretraining on large-scale datasets, where visual or contextual information is unavailable, e.g. text-only AD without movies or visual captioning datasets without context; (iii) we improve on the currently available AD datasets, by removing label noise in the MAD dataset, and adding character naming information; and (iv) we obtain strong results on the movie AD task compared with previous methods.

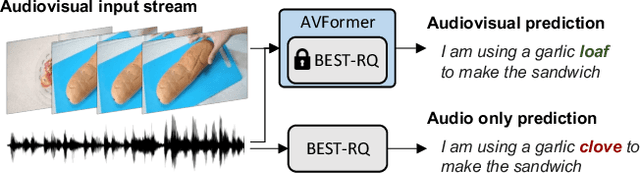

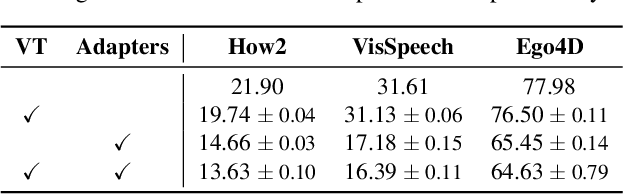

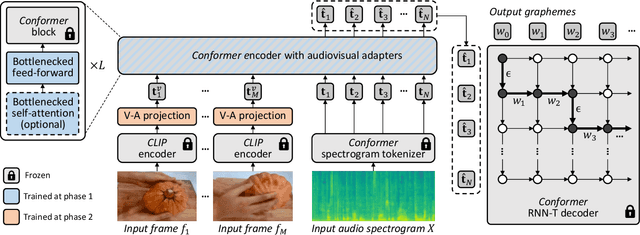

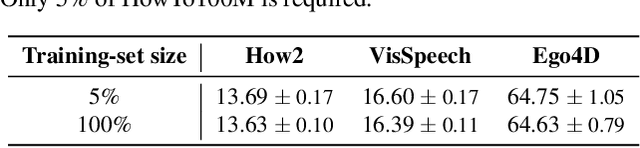

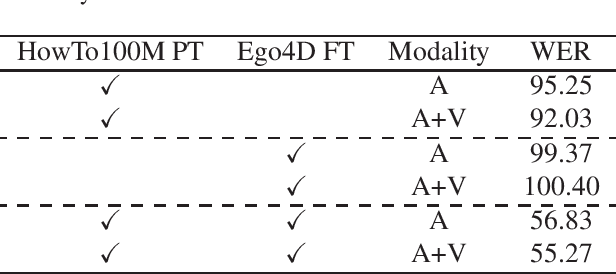

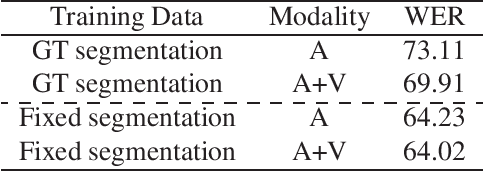

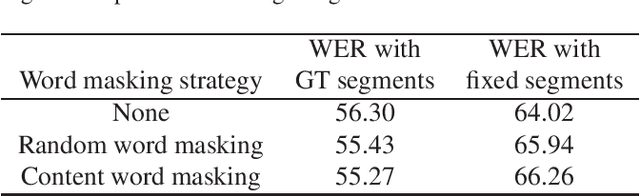

AVFormer: Injecting Vision into Frozen Speech Models for Zero-Shot AV-ASR

Mar 29, 2023 · Paul Hongsuck Seo, Arsha Nagrani, Cordelia Schmid

Audiovisual automatic speech recognition (AV-ASR) aims to improve the robustness of a speech recognition system by incorporating visual information. Training fully supervised multimodal models for this task from scratch, however, is limited by the need for large labelled audiovisual datasets (in each downstream domain of interest). We present AVFormer, a simple method for augmenting audio-only models with visual information while at the same time performing lightweight domain adaptation. We do this by (i) injecting visual embeddings into a frozen ASR model using lightweight trainable adaptors, which we show can be trained on a small amount of weakly labelled video data with minimal additional training time and parameters; and (ii) introducing a simple curriculum scheme during training, which we show is crucial to enable the model to jointly process audio and visual information effectively. Finally, (iii) we show that our model achieves state-of-the-art zero-shot results on three different AV-ASR benchmarks (How2, VisSpeech and Ego4D), while also crucially preserving decent performance on traditional audio-only speech recognition benchmarks (LibriSpeech). Qualitative results show that our model effectively leverages visual information for robust speech recognition.

Vid2Seq: Large-Scale Pretraining of a Visual Language Model for Dense Video Captioning

Mar 21, 2023 · Antoine Yang, Arsha Nagrani, Paul Hongsuck Seo, Antoine Miech, Jordi Pont-Tuset, Ivan Laptev, Josef Sivic, Cordelia Schmid

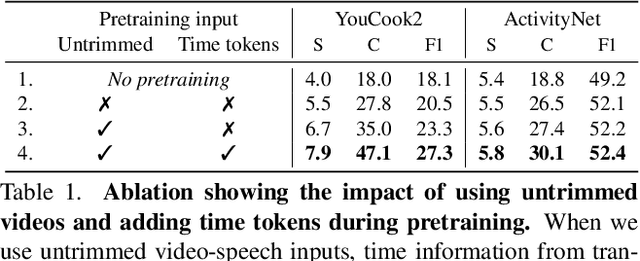

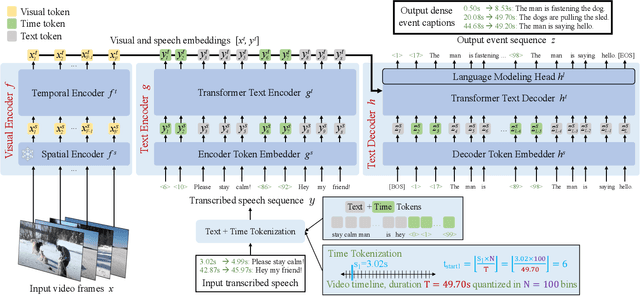

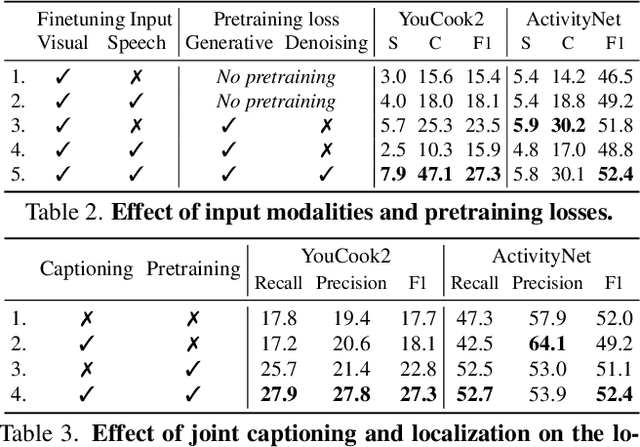

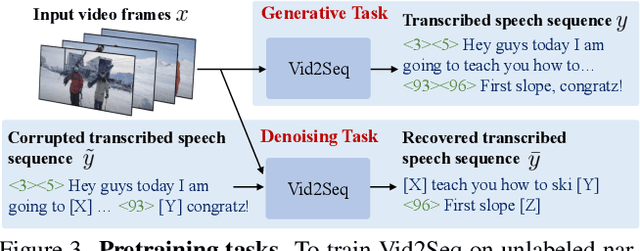

In this work, we introduce Vid2Seq, a multi-modal single-stage dense event captioning model pretrained on narrated videos, which are readily available at scale. The Vid2Seq architecture augments a language model with special time tokens, allowing it to seamlessly predict event boundaries and textual descriptions in the same output sequence. Such a unified model requires large-scale training data, which is not available in current annotated datasets. We show that it is possible to leverage unlabeled narrated videos for dense video captioning by reformulating sentence boundaries of transcribed speech as pseudo event boundaries, and using the transcribed speech sentences as pseudo event captions. The resulting Vid2Seq model pretrained on the YT-Temporal-1B dataset improves the state of the art on a variety of dense video captioning benchmarks including YouCook2, ViTT and ActivityNet Captions. Vid2Seq also generalizes well to the tasks of video paragraph captioning and video clip captioning, and to few-shot settings. Our code is publicly available at https://antoyang.github.io/vid2seq.html.
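The idea of placing event boundaries and captions in one output sequence can be illustrated with a minimal sketch. It is not the Vid2Seq implementation: the token format `<time_k>`, the number of bins, and the helper names `time_token` and `build_target_sequence` are assumptions made here for illustration. Each transcribed speech sentence is treated as a pseudo event, and its start and end times are quantized into relative time tokens that precede the sentence text.

```python
from typing import List, Tuple


def time_token(seconds: float, duration: float, num_bins: int = 100) -> str:
    """Quantize a timestamp into one of `num_bins` relative time tokens.

    The "<time_k>" format and the default bin count are illustrative assumptions.
    """
    if duration <= 0:
        raise ValueError("duration must be positive")
    index = int(seconds * num_bins / duration)
    # Clamp so that timestamps at or past the end map to the last bin.
    index = max(0, min(index, num_bins - 1))
    return f"<time_{index}>"


def build_target_sequence(
    sentences: List[Tuple[float, float, str]],
    duration: float,
    num_bins: int = 100,
) -> str:
    """Turn (start, end, sentence) triples from transcribed speech into one
    sequence: start token, end token, then the sentence as a pseudo caption."""
    parts = []
    for start, end, text in sorted(sentences):
        parts.append(time_token(start, duration, num_bins))
        parts.append(time_token(end, duration, num_bins))
        parts.append(text.strip())
    return " ".join(parts)


# Hypothetical transcript of a 100-second narrated video.
speech = [
    (5.0, 12.5, "whisk until smooth"),
    (0.0, 5.0, "crack two eggs"),
]
target = build_target_sequence(speech, duration=100.0)
print(target)
```

Because boundaries and text share a single sequence, a standard sequence-to-sequence objective can in principle cover both localization and captioning; how the real model tokenizes time is described in the paper and code linked above.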

VoxSRC 2022: The Fourth VoxCeleb Speaker Recognition Challenge

Mar 06, 2023 · Jaesung Huh, Andrew Brown, Jee-weon Jung, Joon Son Chung, Arsha Nagrani, Daniel Garcia-Romero, Andrew Zisserman

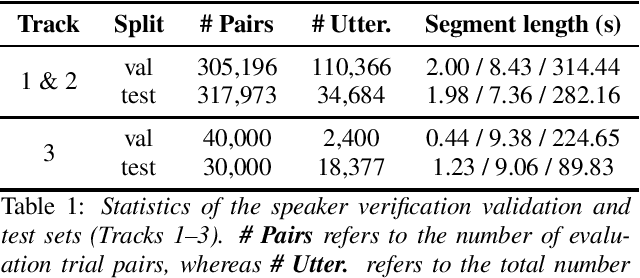

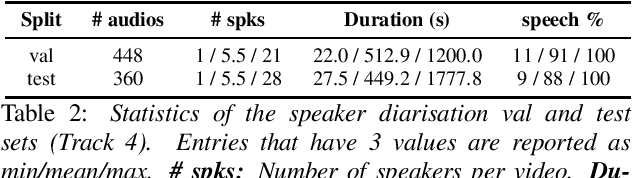

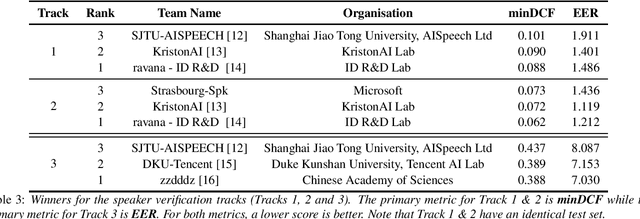

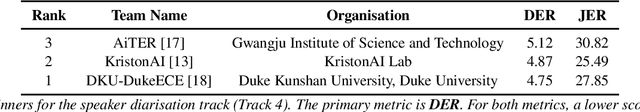

This paper summarises the findings from the VoxCeleb Speaker Recognition Challenge 2022 (VoxSRC-22), which was held in conjunction with INTERSPEECH 2022. The goal of this challenge was to evaluate how well state-of-the-art speaker recognition systems can diarise and recognise speakers from speech obtained "in the wild". The challenge consisted of: (i) the provision of publicly available speaker recognition and diarisation data from YouTube videos together with ground truth annotation and standardised evaluation software; and (ii) a public challenge and hybrid workshop held at INTERSPEECH 2022. We describe the four tracks of our challenge along with the baselines, methods, and results. We conclude with a discussion on the new domain-transfer focus of VoxSRC-22, and on the progression of the challenge from the previous three editions.

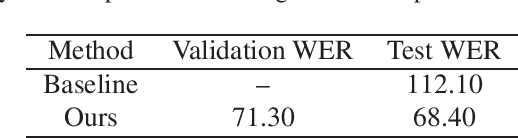

AVATAR submission to the Ego4D AV Transcription Challenge

Nov 18, 2022 · Paul Hongsuck Seo, Arsha Nagrani, Cordelia Schmid

In this report, we describe our submission to the Ego4D AudioVisual (AV) Speech Transcription Challenge 2022. Our pipeline is based on AVATAR, a state-of-the-art encoder-decoder model for AV-ASR that performs early fusion of spectrograms and RGB images. We describe the datasets, experimental settings and ablations. Our final method achieves a WER of 68.40 on the challenge test set, outperforming the baseline by 43.7%, and winning the challenge.
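For readers unfamiliar with the metric, word error rate (WER) is the word-level edit distance between a reference transcript and a hypothesis, divided by the number of reference words and usually reported as a percentage. The sketch below shows the standard definition only; it is not the challenge's evaluation script, and the function name `word_error_rate` and the whitespace tokenization are assumptions made for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER in percent: (substitutions + deletions + insertions) / reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference prefix seen so far
    # and the first j words of the hypothesis.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return 100.0 * prev[-1] / len(ref)


# One substitution ("the" -> "a") and one deletion ("over") against 4 reference words.
print(word_error_rate("turn the pan over", "turn a pan"))
```

Since insertions count as errors, WER can exceed 100 when the hypothesis is much longer than the reference.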

TL;DW? Summarizing Instructional Videos with Task Relevance & Cross-Modal Saliency

Aug 14, 2022 · Medhini Narasimhan, Arsha Nagrani, Chen Sun, Michael Rubinstein, Trevor Darrell, Anna Rohrbach, Cordelia Schmid

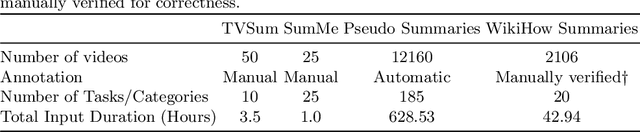

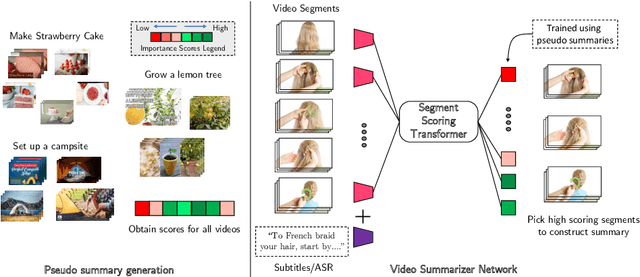

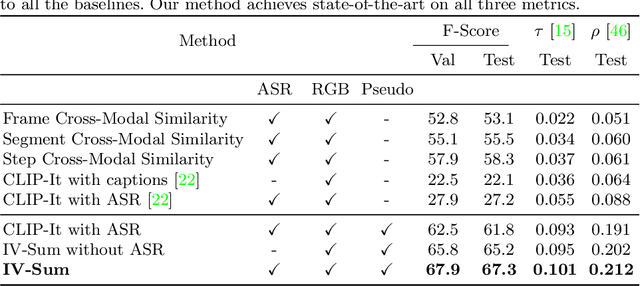

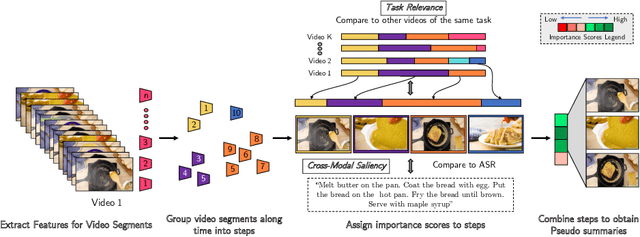

YouTube users looking for instructions for a specific task may spend a long time browsing content trying to find the right video that matches their needs. Creating a visual summary (an abridged version of a video) provides viewers with a quick overview and massively reduces search time. In this work, we focus on summarizing instructional videos, an under-explored area of video summarization. In comparison to generic videos, instructional videos can be parsed into semantically meaningful segments that correspond to important steps of the demonstrated task. Existing video summarization datasets rely on manual frame-level annotations, making them subjective and limited in size. To overcome this, we first automatically generate pseudo summaries for a corpus of instructional videos by exploiting two key assumptions: (i) relevant steps are likely to appear in multiple videos of the same task (Task Relevance), and (ii) they are more likely to be described by the demonstrator verbally (Cross-Modal Saliency). We propose an instructional video summarization network that combines a context-aware temporal video encoder and a segment scoring transformer. Using pseudo summaries as weak supervision, our network constructs a visual summary for an instructional video given only video and transcribed speech. To evaluate our model, we collect a high-quality test set, WikiHow Summaries, by scraping WikiHow articles that contain video demonstrations and visual depictions of steps, allowing us to obtain the ground-truth summaries. We outperform several baselines and a state-of-the-art video summarization model on this new benchmark.

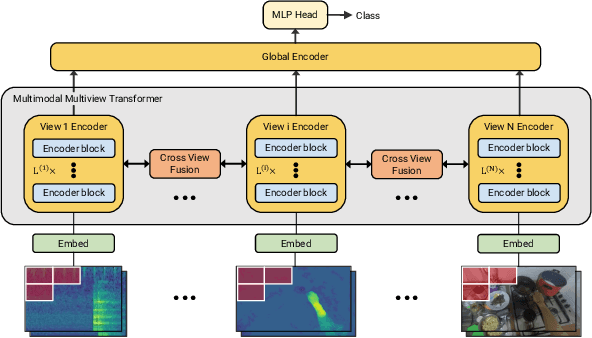

M&M Mix: A Multimodal Multiview Transformer Ensemble

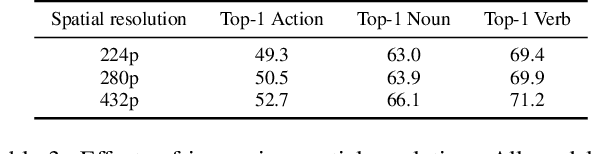

Jun 20, 2022 · Xuehan Xiong, Anurag Arnab, Arsha Nagrani, Cordelia Schmid

This report describes the approach behind our winning solution to the 2022 Epic-Kitchens Action Recognition Challenge. Our approach builds upon our recent work, Multiview Transformer for Video Recognition (MTV), and adapts it to multimodal inputs. Our final submission consists of an ensemble of Multimodal MTV (M&M) models with varying backbone sizes and input modalities. Our approach achieved 52.8% Top-1 accuracy on the test set in action classes, which is 4.1% higher than last year's winning entry.