Zi Wang

A Faithful Deep Sensitivity Estimation for Accelerated Magnetic Resonance Imaging

Oct 23, 2022

Abstract:Deep learning has recently excelled at providing high-quality images and ultra-fast reconstructions in accelerated magnetic resonance imaging (MRI). Faithful coil sensitivity estimation is vital for MRI reconstruction. However, most deep learning methods still rely on pre-estimated sensitivity maps and ignore their inaccuracy, which significantly degrades the quality of reconstructed images. In this work, we propose a Joint Deep Sensitivity estimation and Image reconstruction network, called JDSI. While removing image artifacts, it gradually provides more faithful sensitivity maps, leading to greatly improved image reconstructions. To understand the behavior of the network, the mutual promotion of sensitivity estimation and image reconstruction is revealed through the visualization of intermediate network results. Results on in vivo datasets and a radiologist reader study demonstrate that the proposed JDSI achieves state-of-the-art performance visually and quantitatively, especially when the acceleration factor is high. Additionally, JDSI is robust to abnormal subjects and to different numbers of autocalibration signals.

Physics-informed deep diffusion MRI reconstruction: break the bottleneck of training data in artificial intelligence

Oct 20, 2022

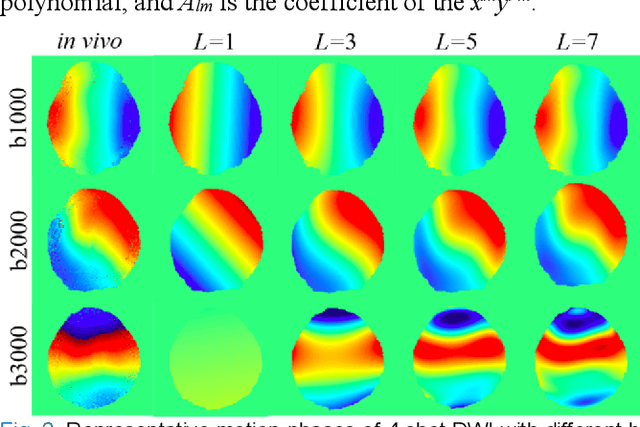

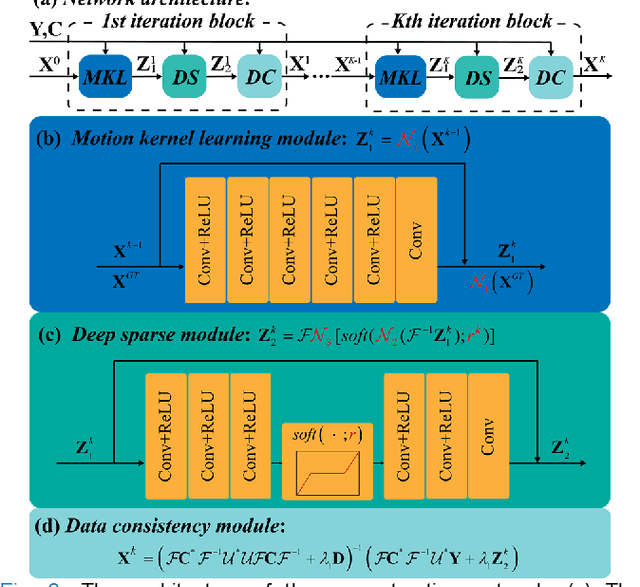

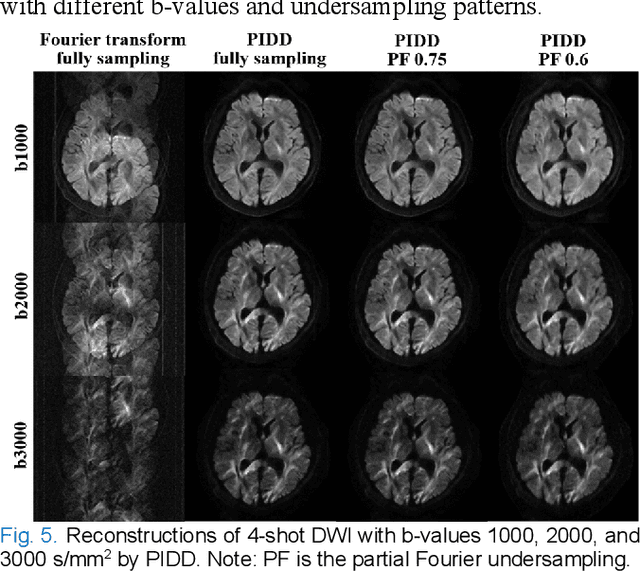

Abstract:In this work, we propose a Physics-Informed Deep Diffusion magnetic resonance imaging (DWI) reconstruction method (PIDD). PIDD contains two main components: multi-shot DWI data synthesis and a deep learning reconstruction network. For data synthesis, we first mathematically analyze the motion during multi-shot data acquisition and approximate it with a simplified physical motion model. The motion model inspires a polynomial model for motion-induced phase synthesis. Then, many synthetic phases are combined with a small amount of real data to generate a large amount of training data. For the reconstruction network, we exploit the smoothness property of each shot image phase as learnable convolution kernels in the k-space and complementary sparsity in the image domain. Results on both synthetic and in vivo brain data show that the proposed PIDD, trained on synthetic data, enables sub-second, high-quality, and robust reconstruction with different b-values and undersampling patterns.
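As a rough illustration of the polynomial phase synthesis mentioned in the abstract above, the sketch below draws a random low-order 2D polynomial phase and applies it to a magnitude image to mimic one shot. The polynomial order, the coefficient range, and the helper names `synthesize_shot_phase` and `apply_phase` are assumptions made for this example; they are not taken from the paper.

```python
import numpy as np


def synthesize_shot_phase(shape, order=2, scale=np.pi, rng=None):
    """Return a smooth random phase map (radians) from a 2D polynomial.

    Hypothetical helper: `order` and `scale` are illustrative choices.
    """
    rng = np.random.default_rng() if rng is None else rng
    ny, nx = shape
    # Normalized coordinates in [-1, 1] keep the coefficients comparable.
    y, x = np.meshgrid(np.linspace(-1, 1, ny), np.linspace(-1, 1, nx), indexing="ij")
    phase = np.zeros(shape)
    # Sum all monomials x^i * y^j with total degree i + j <= order.
    for i in range(order + 1):
        for j in range(order + 1 - i):
            phase += rng.uniform(-scale, scale) * (x ** i) * (y ** j)
    return phase


def apply_phase(magnitude, phase):
    """Combine a real magnitude image with a synthetic phase map."""
    return magnitude * np.exp(1j * phase)


rng = np.random.default_rng(0)
magnitude = rng.random((64, 64))  # stand-in for a real magnitude image
shots = [
    apply_phase(magnitude, synthesize_shot_phase(magnitude.shape, rng=rng))
    for _ in range(4)
]
```

Each entry of `shots` shares the same magnitude but carries a different smooth phase, which is the kind of variation between shots that the synthetic training data is meant to cover.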

Plex: Towards Reliability using Pretrained Large Model Extensions

Jul 15, 2022

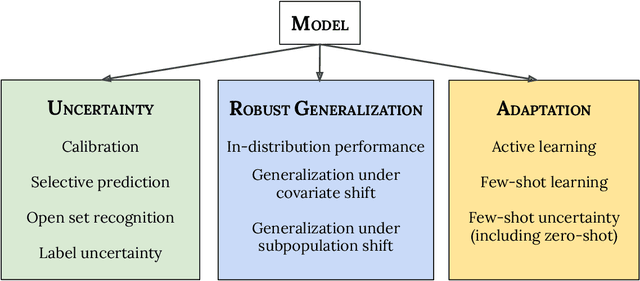

Abstract:A recent trend in artificial intelligence is the use of pretrained models for language and vision tasks, which have achieved extraordinary performance but also puzzling failures. Probing these models' abilities in diverse ways is therefore critical to the field. In this paper, we explore the reliability of models, where we define a reliable model as one that not only achieves strong predictive performance but also performs well consistently over many decision-making tasks involving uncertainty (e.g., selective prediction, open set recognition), robust generalization (e.g., accuracy and proper scoring rules such as log-likelihood on in- and out-of-distribution datasets), and adaptation (e.g., active learning, few-shot uncertainty). We devise 10 types of tasks over 40 datasets in order to evaluate different aspects of reliability on both vision and language domains. To improve reliability, we developed ViT-Plex and T5-Plex, pretrained large model extensions for vision and language modalities, respectively. Plex greatly improves the state-of-the-art across reliability tasks, and simplifies the traditional protocol as it improves the out-of-the-box performance and does not require designing scores or tuning the model for each task. We demonstrate scaling effects over model sizes up to 1B parameters and pretraining dataset sizes up to 4B examples. We also demonstrate Plex's capabilities on challenging tasks including zero-shot open set recognition, active learning, and uncertainty in conversational language understanding.
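Selective prediction, one of the uncertainty tasks named in the abstract above, can be sketched as follows: the model answers only on the examples where it is most confident, and accuracy is measured on that subset. The function name `selective_accuracy`, the use of the maximum class probability as the confidence score, and the toy data are assumptions for illustration, not the evaluation protocol of the paper.

```python
import numpy as np


def selective_accuracy(probs, labels, coverage):
    """Accuracy on the `coverage` fraction of examples with the highest confidence."""
    confidence = probs.max(axis=1)      # max class probability as confidence
    predictions = probs.argmax(axis=1)
    n_keep = max(1, int(round(coverage * len(labels))))
    keep = np.argsort(-confidence)[:n_keep]  # most confident examples first
    return float((predictions[keep] == labels[keep]).mean())


# Toy predicted probabilities for four examples and two classes.
probs = np.array([[0.9, 0.1], [0.6, 0.4], [0.55, 0.45], [0.2, 0.8]])
labels = np.array([0, 1, 0, 1])

full = selective_accuracy(probs, labels, coverage=1.0)  # 0.75
half = selective_accuracy(probs, labels, coverage=0.5)  # 1.0
```

In this toy case the single error falls on a low-confidence example, so abstaining on the least confident half raises accuracy from 0.75 to 1.0.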

Pre-training helps Bayesian optimization too

Jul 07, 2022

Abstract:Bayesian optimization (BO) has become a popular strategy for global optimization of many expensive real-world functions. Contrary to a common belief that BO is suited to optimizing black-box functions, it actually requires domain knowledge on characteristics of those functions to deploy BO successfully. Such domain knowledge often manifests in Gaussian process priors that specify initial beliefs on functions. However, even with expert knowledge, it is not an easy task to select a prior. This is especially true for hyperparameter tuning problems on complex machine learning models, where landscapes of tuning objectives are often difficult to comprehend. We seek an alternative practice for setting these functional priors. In particular, we consider the scenario where we have data from similar functions that allow us to pre-train a tighter distribution a priori. To verify our approach in realistic model training setups, we collected a large multi-task hyperparameter tuning dataset by training tens of thousands of configurations of near-state-of-the-art models on popular image and text datasets, as well as a protein sequence dataset. Our results show that on average, our method is able to locate good hyperparameters at least 3 times more efficiently than the best competing methods.

Towards Learning Universal Hyperparameter Optimizers with Transformers

May 26, 2022

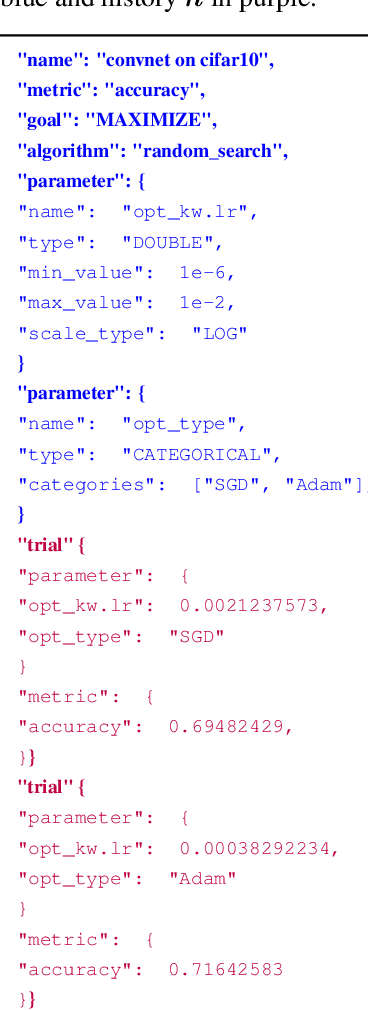

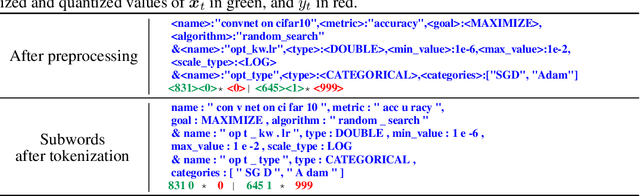

Abstract:Meta-learning hyperparameter optimization (HPO) algorithms from prior experiments is a promising approach to improve optimization efficiency over objective functions from a similar distribution. However, existing methods are restricted to learning from experiments sharing the same set of hyperparameters. In this paper, we introduce the OptFormer, the first text-based Transformer HPO framework that provides a universal end-to-end interface for jointly learning policy and function prediction when trained on vast tuning data from the wild. Our extensive experiments demonstrate that the OptFormer can imitate at least 7 different HPO algorithms, which can be further improved via its function uncertainty estimates. Compared to a Gaussian Process, the OptFormer also learns a robust prior distribution for hyperparameter response functions, and can thereby provide more accurate and better calibrated predictions. This work paves the path to future extensions for training a Transformer-based model as a general HPO optimizer.

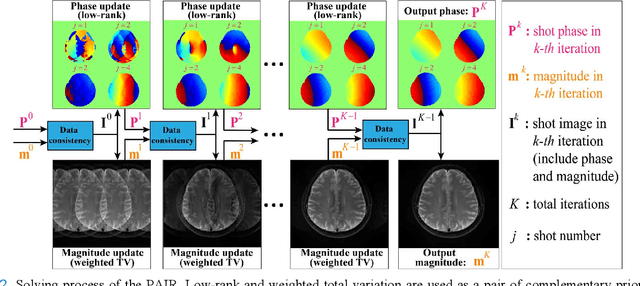

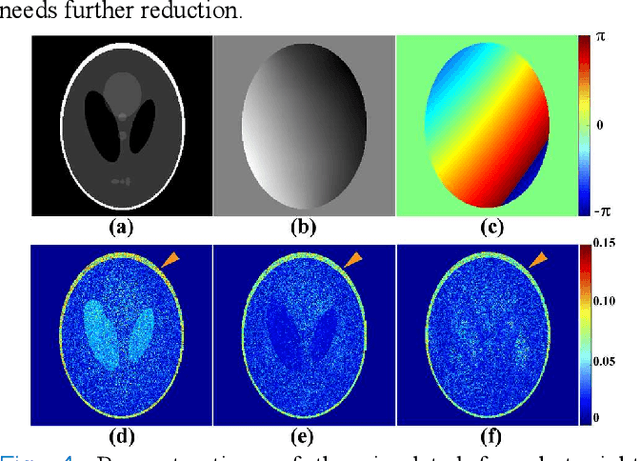

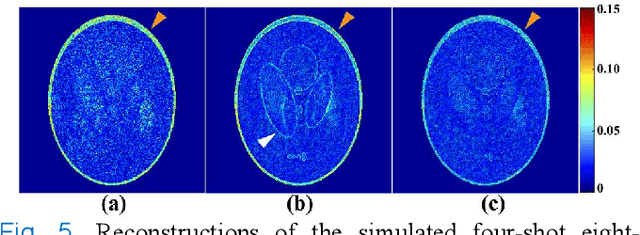

A Paired Phase and Magnitude Reconstruction for Advanced Diffusion-Weighted Imaging

Mar 28, 2022

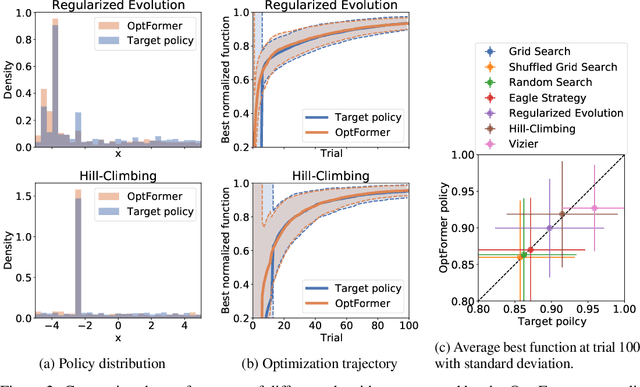

Abstract:Multi-shot interleaved echo planar imaging can obtain diffusion-weighted images (DWI) with high spatial resolution and low distortion, but suffers from ghost artifacts introduced by phase variations between shots. In this work, we aim to solve the challenging reconstructions under severe motion between shots and low signal-to-noise ratio. An explicit phase model with paired phase and magnitude priors is proposed to regularize the reconstruction (PAIR). The former prior is derived from the smoothness of the shot phase and enforced with low-rankness in the k-space domain. The latter explores similar edges among multi-b-value and multi-direction DWI with weighted total variation in the image domain. Extensive simulation and in vivo results show that PAIR can remove ghost image artifacts very well with a high number of shots (8 shots) and significantly suppress the noise at an ultra-high b-value (4000 s/mm²). The explicit phase model PAIR with complementary priors performs well on challenging reconstructions under severe motion between shots and low signal-to-noise ratio. PAIR has great potential in advanced clinical DWI applications and brain function research.

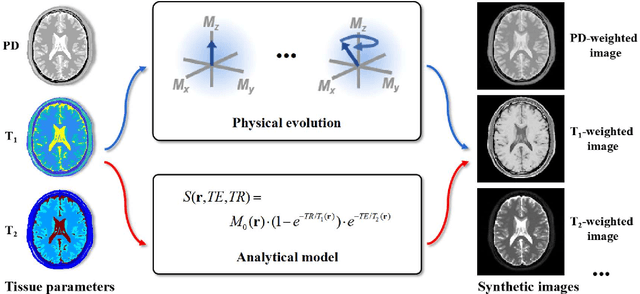

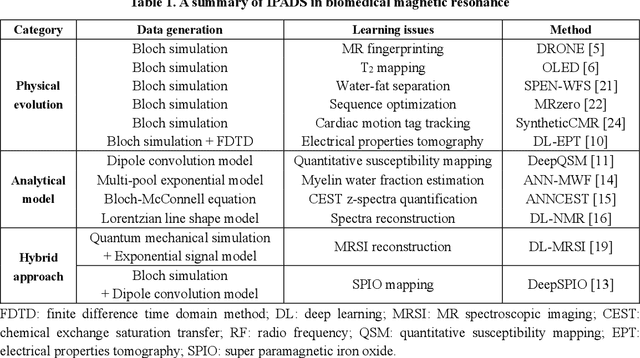

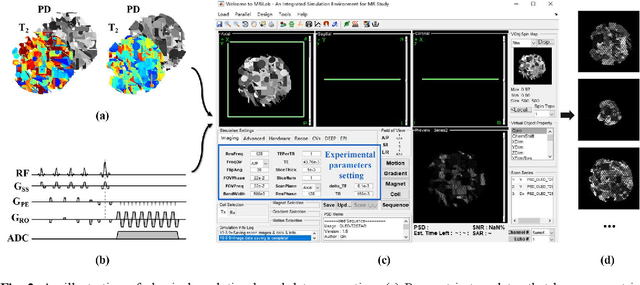

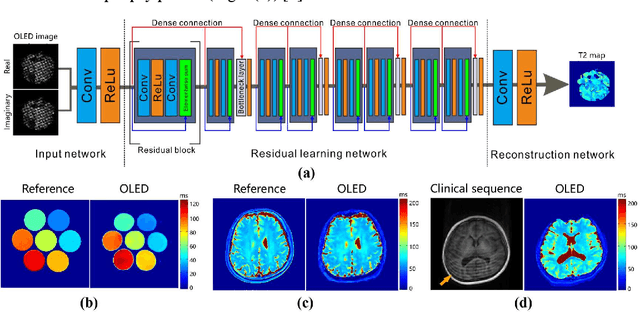

Physics-driven Synthetic Data Learning for Biomedical Magnetic Resonance

Mar 22, 2022

Abstract:Deep learning has transformed the field of computational imaging. One of its bottlenecks is unavailable or insufficient training data. This article reviews an emerging paradigm, imaging physics-based data synthesis (IPADS), that can provide large amounts of training data in biomedical magnetic resonance with little or no real data. Following the physical law of magnetic resonance, IPADS generates signals from differential equations or analytical solution models, making the learning more scalable and explainable while better protecting privacy. Key components of IPADS learning, including signal generation models, basic deep learning network structures, enhanced data generation, and learning methods, are discussed. The great potential of IPADS has been demonstrated by representative applications in fast imaging, ultrafast signal reconstruction, and accurate parameter quantification. Finally, open questions and future work are discussed.

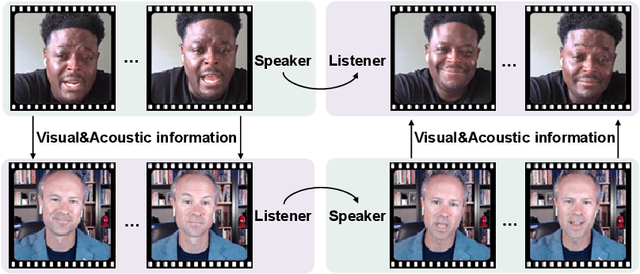

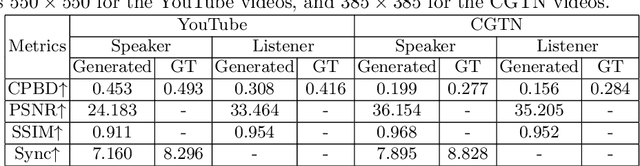

DialogueNeRF: Towards Realistic Avatar Face-to-face Conversation Video Generation

Mar 15, 2022

Abstract:Conversation is an essential component of virtual avatar activities in the metaverse. With the development of natural language processing, textual and vocal conversation generation has achieved a significant breakthrough. Face-to-face conversations account for the vast majority of daily conversations. However, this task has not received enough attention. In this paper, we propose a novel task that aims to generate a realistic human avatar face-to-face conversation process and present a new dataset to explore this target. To tackle this novel task, we propose a new framework that utilizes a series of conversation signals, e.g. audio, head pose, and expression, to synthesize face-to-face conversation videos between human avatars, with all the interlocutors modeled within the same network. Our method is evaluated by quantitative and qualitative experiments in different aspects, e.g. image quality, pose sequence trend, and naturalness of the rendered videos. All the code, data, and models will be made publicly available.

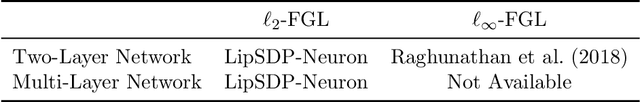

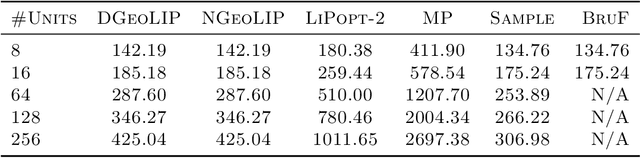

A Quantitative Geometric Approach to Neural Network Smoothness

Mar 02, 2022

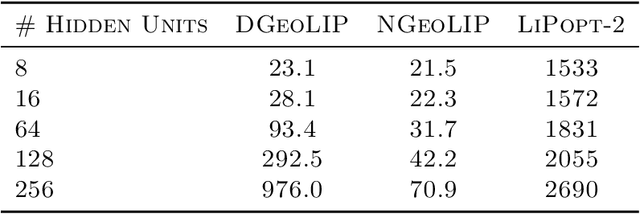

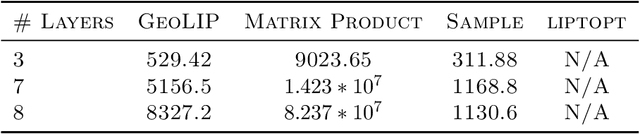

Abstract:Fast and precise Lipschitz constant estimation of neural networks is an important task for deep learning. Researchers have recently found an intrinsic trade-off between the accuracy and smoothness of neural networks, so training a network with a loose Lipschitz constant estimation imposes a strong regularization and can hurt the model accuracy significantly. In this work, we provide a unified theoretical framework, a quantitative geometric approach, to address the Lipschitz constant estimation. By adopting this framework, we can immediately obtain several theoretical results, including the computational hardness of Lipschitz constant estimation and its approximability. Furthermore, the quantitative geometric perspective can also provide some insights into recent empirical observations that techniques for one norm do not usually transfer to another one. We also implement the algorithms induced from this quantitative geometric approach in a tool GeoLIP. These algorithms are based on semidefinite programming (SDP). Our empirical evaluation demonstrates that GeoLIP is more scalable and precise than existing tools on Lipschitz constant estimation for $\ell_\infty$-perturbations. Furthermore, we also show its intricate relations with other recent SDP-based techniques, both theoretically and empirically. We believe that this unified quantitative geometric perspective can bring new insights and theoretical tools to the investigation of neural-network smoothness and robustness.
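To make the notion of a loose Lipschitz estimate concrete, the sketch below computes the simplest upper bound for a small ReLU network under $\ell_\infty$-perturbations: the product of the induced $\ell_\infty$ norms of the weight matrices. This is a standard naive baseline, not GeoLIP and not the SDP-based method described in the abstract above; the network sizes and random weights are assumptions chosen for illustration.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def forward(x, weights, biases):
    """Feed-forward ReLU network; the last layer is linear."""
    h = x
    for W, b in zip(weights[:-1], biases[:-1]):
        h = relu(W @ h + b)
    return weights[-1] @ h + biases[-1]


def naive_linf_lipschitz_bound(weights):
    """Product of induced infinity-norms (max absolute row sums).

    Valid because ReLU is 1-Lipschitz, but typically far from tight.
    """
    return float(np.prod([np.linalg.norm(W, ord=np.inf) for W in weights]))


rng = np.random.default_rng(0)
weights = [rng.standard_normal((16, 8)), rng.standard_normal((4, 16))]
biases = [rng.standard_normal(16), rng.standard_normal(4)]
bound = naive_linf_lipschitz_bound(weights)

# Empirical check on random input pairs: the observed ratio never exceeds the bound.
ratios = []
for _ in range(1000):
    x, y = rng.standard_normal(8), rng.standard_normal(8)
    diff = np.max(np.abs(forward(x, weights, biases) - forward(y, weights, biases)))
    ratios.append(diff / np.max(np.abs(x - y)))
largest_ratio = max(ratios)
```

The gap between `largest_ratio` and `bound` gives a feel for how loose the naive product can be, which is the motivation for more precise estimators.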

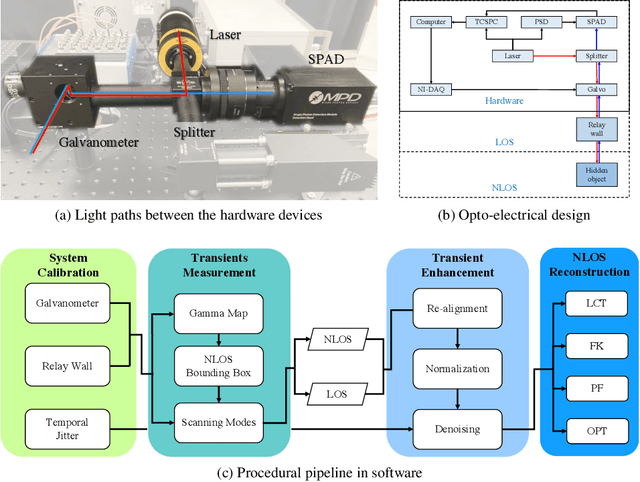

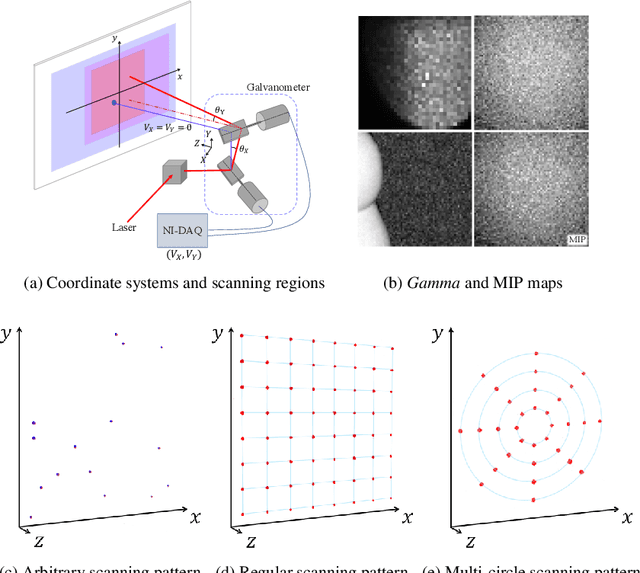

Onsite Non-Line-of-Sight Imaging via Online Calibrations

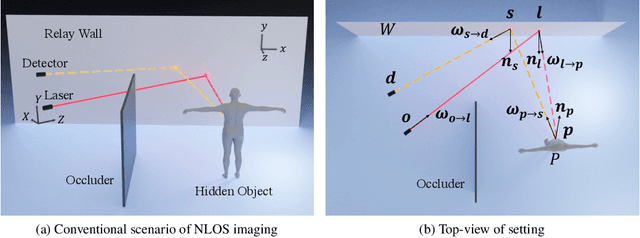

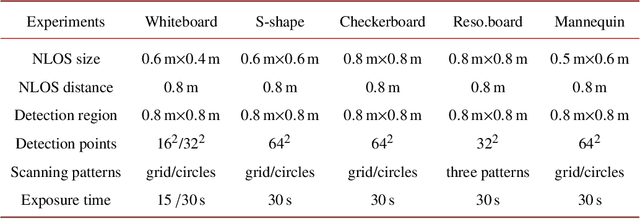

Dec 29, 2021

Abstract:There has been increasing interest in deploying non-line-of-sight (NLOS) imaging systems for recovering objects behind an obstacle. Existing solutions generally pre-calibrate the system before scanning the hidden objects. Onsite adjustments of the occluder, object, and scanning pattern require re-calibration. We present an online calibration technique that directly decouples the transients acquired during onsite scanning into the LOS and hidden components. We use the former to directly (re)calibrate the system upon changes of scene/obstacle configurations, scanning regions, and scanning patterns, and the latter for hidden object recovery via spatial, frequency, or learning-based techniques. Our technique avoids using auxiliary calibration apparatus such as mirrors or checkerboards and supports both laboratory validations and real-world deployments.
