Yu Zhu

Spark-Prover-X1: Formal Theorem Proving Through Diverse Data Training

Nov 18, 2025

Abstract:Large Language Models (LLMs) have shown significant promise in automated theorem proving, yet progress is often constrained by the scarcity of diverse and high-quality formal language data. To address this issue, we introduce Spark-Prover-X1, a 7B-parameter model trained via a three-stage framework designed to unlock the reasoning potential of more accessible and moderately-sized LLMs. The first stage infuses deep knowledge through continuous pre-training on a broad mathematical corpus, enhanced by a suite of novel data tasks. A key innovation is a "CoT-augmented state prediction" task to achieve fine-grained reasoning. The second stage employs Supervised Fine-tuning (SFT) within an expert iteration loop to specialize both the Spark-Prover-X1-7B and Spark-Formalizer-X1-7B models. Finally, a targeted round of Group Relative Policy Optimization (GRPO) is applied to sharpen the prover's capabilities on the most challenging problems. To facilitate robust evaluation, particularly on problems from real-world examinations, we also introduce ExamFormal-Bench, a new benchmark dataset of 402 formal problems. Experimental results demonstrate that Spark-Prover achieves state-of-the-art performance among similarly-sized open-source models within the "Whole-Proof Generation" paradigm. It shows exceptional performance on difficult competition benchmarks, notably solving 27 problems on PutnamBench (pass@32) and achieving 24.0% on CombiBench (pass@32). Our work validates that diverse training data combined with a progressively refined training pipeline provides an effective path for enhancing the formal reasoning capabilities of lightweight LLMs. Both Spark-Prover-X1-7B and Spark-Formalizer-X1-7B, along with the ExamFormal-Bench dataset, are made publicly available at: https://www.modelscope.cn/organization/iflytek, https://gitcode.com/ifly_opensource.
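
The pass@32 figures above count a problem as solved when at least one of 32 sampled proofs is verified. As an illustration only, the sketch below uses the widely used unbiased pass@k estimator, 1 - C(n-c, k) / C(n, k), for n samples of which c are correct. Whether the paper computes pass@32 this way or simply samples exactly 32 proofs per problem is an assumption here, and the function name `pass_at_k` is hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased estimate of pass@k from n samples, c of which are correct.

    Assumption: this is the standard combinatorial estimator, not
    necessarily the exact procedure used in the paper.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # Probability that a random size-k subset contains no correct sample.
    # math.comb returns 0 when k > n - c, so the estimate becomes 1.0.
    all_wrong = comb(n - c, k) / comb(n, k)
    return 1.0 - all_wrong


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, given (n_samples, n_correct) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

With exactly k samples per problem (n = k = 32), the estimator reduces to a 0/1 indicator of whether any sample passed, so the benchmark score is the fraction of problems with at least one verified proof.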

CapeNext: Rethinking and refining dynamic support information for category-agnostic pose estimation

Nov 17, 2025

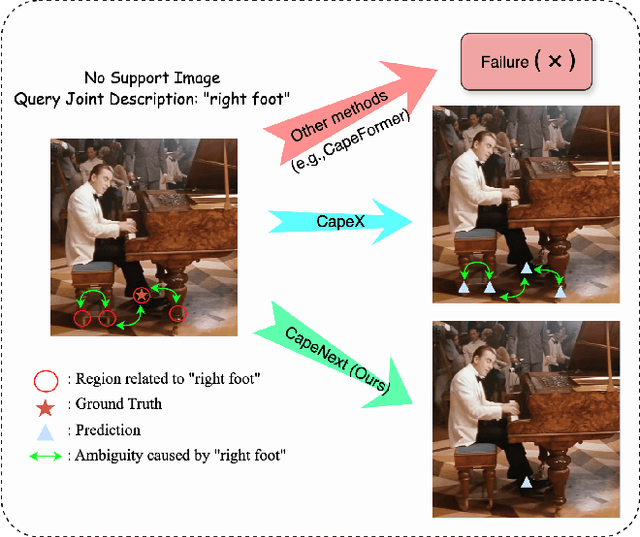

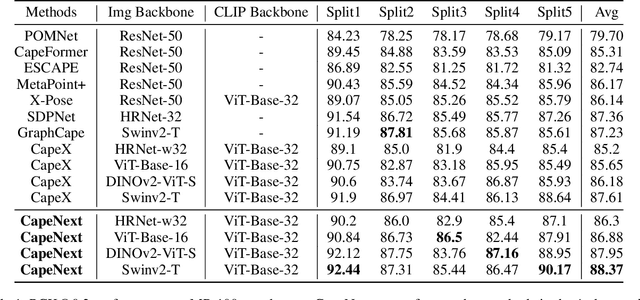

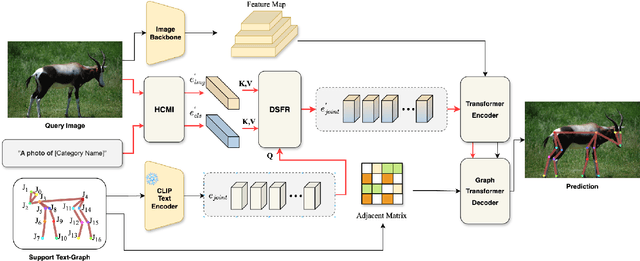

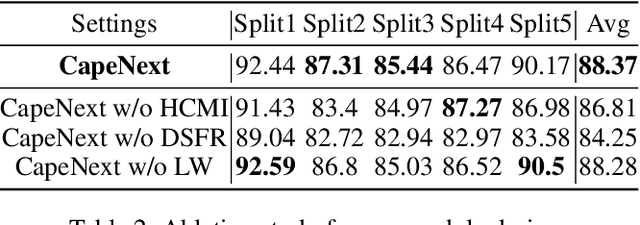

Abstract:Recent research in Category-Agnostic Pose Estimation (CAPE) has adopted fixed textual keypoint descriptions as a semantic prior for two-stage pose matching frameworks. While this paradigm enhances robustness and flexibility by removing the dependency on support images, our critical analysis reveals two inherent limitations of static joint embedding: (1) polysemy-induced cross-category ambiguity during the matching process (e.g., the concept "leg" exhibiting divergent visual manifestations across humans and furniture), and (2) insufficient discriminability for fine-grained intra-category variations (e.g., posture and fur discrepancies between a sleeping white cat and a standing black cat). To overcome these challenges, we propose a new framework that integrates hierarchical cross-modal interaction with dual-stream feature refinement, enhancing the joint embedding with both class-level and instance-specific cues from textual descriptions and specific images. Experiments on the MP-100 dataset demonstrate that, regardless of the network backbone, CapeNext consistently outperforms state-of-the-art CAPE methods by a large margin.

SRLF: An Agent-Driven Set-Wise Reflective Learning Framework for Sequential Recommendation

Nov 14, 2025

Abstract:LLM-based agents are emerging as a promising paradigm for simulating user behavior to enhance recommender systems. However, their effectiveness is often limited by existing studies that focus on modeling user ratings for individual items. This point-wise approach leads to prevalent issues such as inaccurate user preference comprehension and rigid item-semantic representations. To address these limitations, we propose the novel Set-wise Reflective Learning Framework (SRLF). Our framework operationalizes a closed-loop "assess-validate-reflect" cycle that harnesses the powerful in-context learning capabilities of LLMs. SRLF departs from conventional point-wise assessment by formulating a holistic judgment on an entire set of items. It accomplishes this by comprehensively analyzing both the intricate interrelationships among items within the set and their collective alignment with the user's preference profile. This set-level contextual understanding allows our model to capture complex relational patterns essential to user behavior, making it significantly better suited to sequential recommendation. Extensive experiments validate our approach, confirming that this set-wise perspective is crucial for achieving state-of-the-art performance in sequential recommendation tasks.

Early Stopping Chain-of-thoughts in Large Language Models

Sep 17, 2025

Abstract:Reasoning large language models (LLMs) have demonstrated superior capacities in solving complicated problems by generating long chains of thought (CoT), but such lengthy CoT incurs high inference costs. In this study, we introduce ES-CoT, an inference-time method that shortens CoT generation by detecting answer convergence and stopping early with minimal performance loss. At the end of each reasoning step, we prompt the LLM to output its current final answer, denoted as a step answer. We then track the run length of consecutive identical step answers as a measure of answer convergence. Once the run length exhibits a sharp increase and exceeds a minimum threshold, the generation is terminated. We provide both empirical and theoretical support for this heuristic: step answers steadily converge to the final answer, and large run-length jumps reliably mark this convergence. Experiments on five reasoning datasets across three LLMs show that ES-CoT reduces the number of inference tokens by about 41% on average while maintaining accuracy comparable to standard CoT. Further, ES-CoT integrates seamlessly with self-consistency prompting and remains robust across hyperparameter choices, highlighting it as a practical and effective approach for efficient reasoning.
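
The run-length heuristic described above can be sketched in a few lines. This is an illustrative reading of the abstract, not the paper's exact rule: the abstract does not define "sharp increase", so the sketch assumes it means the current run is at least `jump_ratio` times the longest earlier run. The names `early_stop_step`, `min_run`, and `jump_ratio` are hypothetical.

```python
from typing import Iterable, Optional, Tuple


def early_stop_step(
    step_answers: Iterable[str],
    min_run: int = 3,
    jump_ratio: float = 2.0,
) -> Optional[Tuple[int, str]]:
    """Return (step index, answer) at which generation would stop, or None.

    Assumed stopping rule: the run of identical step answers must reach
    min_run AND be at least jump_ratio times the longest earlier run.
    """
    longest_prev = 0  # longest run among runs that have already ended
    run = 0           # length of the current run of identical answers
    current = None
    for i, answer in enumerate(step_answers):
        if answer == current:
            run += 1
        else:
            # The previous run ended; remember it before starting a new one.
            longest_prev = max(longest_prev, run)
            current, run = answer, 1
        if run >= min_run and run >= jump_ratio * max(longest_prev, 1):
            return i, answer
    return None  # no convergence detected; let the model finish its CoT


stop = early_stop_step(["12", "15", "15", "7", "7", "7", "7"])
```

In this example the answer "7" is only accepted at the fourth repetition: a run of three meets `min_run` but is not yet twice the earlier run of two "15" answers.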

SynBrain: Enhancing Visual-to-fMRI Synthesis via Probabilistic Representation Learning

Aug 14, 2025

Abstract:Deciphering how visual stimuli are transformed into cortical responses is a fundamental challenge in computational neuroscience. This visual-to-neural mapping is inherently a one-to-many relationship, as identical visual inputs reliably evoke variable hemodynamic responses across trials, contexts, and subjects. However, existing deterministic methods struggle to simultaneously model this biological variability while capturing the underlying functional consistency that encodes stimulus information. To address these limitations, we propose SynBrain, a generative framework that simulates the transformation from visual semantics to neural responses in a probabilistic and biologically interpretable manner. SynBrain introduces two key components: (i) BrainVAE, which models neural representations as continuous probability distributions via probabilistic learning while maintaining functional consistency through visual semantic constraints; and (ii) a Semantic-to-Neural Mapper, which acts as a semantic transmission pathway, projecting visual semantics into the neural response manifold to facilitate high-fidelity fMRI synthesis. Experimental results demonstrate that SynBrain surpasses state-of-the-art methods in subject-specific visual-to-fMRI encoding performance. Furthermore, SynBrain adapts efficiently to new subjects with few-shot data and synthesizes high-quality fMRI signals that are effective in improving data-limited fMRI-to-image decoding performance. Beyond that, SynBrain reveals functional consistency across trials and subjects, with synthesized signals capturing interpretable patterns shaped by biological neural variability. The code will be made publicly available.

Heterogeneous-IRS-Assisted MIMO Systems: Channel Estimation and Beamforming

Jun 12, 2025

Abstract:The intelligent reflecting surface (IRS) has gained great attention for its ability to create favorable propagation environments. However, the power consumption of conventional IRSs cannot be ignored due to the large number of reflecting elements and control circuits. To balance performance and power consumption, we previously proposed a heterogeneous-IRS (HE-IRS), a green IRS structure integrating dynamically tunable elements (DTEs) and statically tunable elements (STEs). Compared to conventional IRSs with only DTEs, the unique DTE-STE integrated structure introduces new challenges in both channel estimation and beamforming. In this paper, we investigate the channel estimation and beamforming problems in HE-IRS-assisted multi-user multiple-input multiple-output systems. Unlike the overall cascaded channel estimated in conventional IRSs, we show that the HE-IRS channel to be estimated is decomposed into a DTE-based cascaded channel and an STE-based equivalent channel. Leveraging this decomposition along with the inherent sparsity of DTE- and STE-based channels and manifold optimization, we propose an efficient channel estimation scheme. To address the rank mismatch problem under imperfect channel sparsity information, a robust rank selection rule is developed. For beamforming, we propose an offline algorithm to optimize the STE phase shifts for wide beam coverage, and an online algorithm to optimize the BS precoder and the DTE phase shifts using the estimated HE-IRS channel. Simulation results show that the HE-IRS requires less pilot overhead than conventional IRSs with the same number of elements. With the proposed channel estimation and beamforming schemes, the green HE-IRS achieves competitive sum rate performance with significantly reduced power consumption.

Token-level Accept or Reject: A Micro Alignment Approach for Large Language Models

May 26, 2025

Abstract:With the rapid development of Large Language Models (LLMs), aligning these models with human preferences and values is critical to ensuring ethical and safe applications. However, existing alignment techniques such as RLHF or DPO often require direct fine-tuning on LLMs with billions of parameters, resulting in substantial computational costs and inefficiencies. To address this, we propose the Micro token-level Accept-Reject Aligning (MARA) approach, which is designed to operate independently of the language models. MARA simplifies the alignment process by decomposing sentence-level preference learning into token-level binary classification, where a compact three-layer fully-connected network determines whether candidate tokens are "Accepted" or "Rejected" as part of the response. Extensive experiments across seven different LLMs and three open-source datasets show that MARA achieves significant improvements in alignment performance while reducing computational costs.
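
To make the token-level accept/reject idea concrete, here is a rough, untrained sketch of a three-layer fully-connected binary classifier that screens candidate tokens. Everything beyond "three-layer fully-connected network" and "Accepted/Rejected" is an assumption for illustration: the feature vectors, layer widths, ReLU activations, the 0.5 threshold, the fallback to the top candidate, and all function names are hypothetical and not taken from the paper.

```python
import math
import random


def make_layer(n_in, n_out, rng):
    """Randomly initialised dense layer: (weights, biases)."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def linear(x, layer):
    weights, biases = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def relu(v):
    return [max(0.0, t) for t in v]


def accept_probability(features, layers):
    """Forward pass through three dense layers, ending in a sigmoid."""
    h = relu(linear(features, layers[0]))
    h = relu(linear(h, layers[1]))
    logit = linear(h, layers[2])[0]
    return 1.0 / (1.0 + math.exp(-logit))


def choose_token(candidates, layers, threshold=0.5):
    """Return the first candidate the classifier accepts.

    candidates: (token, feature_vector) pairs in the base LLM's
    preference order. Falling back to the top candidate when all are
    rejected is an assumption of this sketch.
    """
    for token, features in candidates:
        if accept_probability(features, layers) >= threshold:
            return token
    return candidates[0][0]


rng = random.Random(0)
layers = [make_layer(4, 8, rng), make_layer(8, 8, rng), make_layer(8, 1, rng)]
candidates = [("sure", [0.1, 0.4, 0.2, 0.9]), ("sorry", [0.7, 0.1, 0.3, 0.2])]
```

Because the base LLM only supplies candidates and the small network only classifies them, the LLM's weights stay untouched, which is the source of the cost savings the abstract describes.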

Argus: Federated Non-convex Bilevel Learning over 6G Space-Air-Ground Integrated Network

May 14, 2025

Abstract:The space-air-ground integrated network (SAGIN) has recently emerged as a core element of 6G networks. However, traditional centralized and synchronous optimization algorithms are unsuitable for SAGIN due to its infrastructureless and time-varying environments. This paper develops a novel asynchronous algorithm, named Argus, for tackling non-convex and non-smooth decentralized federated bilevel learning over SAGIN. The proposed algorithm allows networked agents (e.g., autonomous aerial vehicles) to tackle bilevel learning problems in time-varying networks asynchronously, thereby preventing stragglers from impeding the overall training speed. We provide a theoretical analysis of the iteration complexity, communication complexity, and computational complexity of Argus. Its effectiveness is further demonstrated through numerical experiments.

Bayesian Federated Cause-of-Death Classification and Quantification Under Distribution Shift

May 04, 2025

Abstract:In regions lacking medically certified causes of death, verbal autopsy (VA) is a critical and widely used tool to ascertain the cause of death through interviews with caregivers. Data collected by VAs are often analyzed using probabilistic algorithms. The performance of these algorithms often degrades due to distributional shift across populations. Most existing VA algorithms rely on centralized training, requiring full access to training data for joint modeling. This is often infeasible due to privacy and logistical constraints. In this paper, we propose a novel Bayesian Federated Learning (BFL) framework that avoids data sharing across multiple training sources. Our method enables reliable individual-level cause-of-death classification and population-level quantification of cause-specific mortality fractions (CSMFs), in a target domain with limited or no local labeled data. The proposed framework is modular, computationally efficient, and compatible with a wide range of existing VA algorithms as candidate models, facilitating flexible deployment in real-world mortality surveillance systems. We validate the performance of BFL through extensive experiments on two real-world VA datasets under varying levels of distribution shift. Our results show that BFL significantly outperforms the base models built on a single domain and achieves comparable or better performance compared to joint modeling.

Sparse2DGS: Geometry-Prioritized Gaussian Splatting for Surface Reconstruction from Sparse Views

Apr 29, 2025

Abstract:We present a Gaussian Splatting method for surface reconstruction using sparse input views. Previous methods relying on dense views struggle with extremely sparse Structure-from-Motion points for initialization. While learning-based Multi-view Stereo (MVS) provides dense 3D points, directly combining it with Gaussian Splatting leads to suboptimal results due to the ill-posed nature of sparse-view geometric optimization. We propose Sparse2DGS, an MVS-initialized Gaussian Splatting pipeline for complete and accurate reconstruction. Our key insight is to incorporate geometry-prioritized enhancement schemes, allowing for direct and robust geometric learning under ill-posed conditions. Sparse2DGS outperforms existing methods by notable margins while being $2\times$ faster than the NeRF-based fine-tuning approach.
