Bo Tang

Efficient Anti-exploration via VQVAE and Fuzzy Clustering in Offline Reinforcement Learning

Feb 08, 2026

Abstract: Pseudo-counting is an effective anti-exploration method in offline reinforcement learning (RL): it counts state-action pairs and imposes a large penalty on rare or unseen pairs. Existing anti-exploration methods count continuous state-action pairs by discretizing them, but often suffer from the curse of dimensionality and from information loss in the discretization process, which reduces efficiency and performance and can even cause policy learning to fail. In this paper, a novel anti-exploration method based on Vector Quantized Variational Autoencoder (VQVAE) and fuzzy clustering in offline RL is proposed. We first propose an efficient pseudo-count method based on the multi-codebook VQVAE to discretize state-action pairs, and design an offline RL anti-exploration method based on the proposed pseudo-count method to handle the curse of dimensionality and improve learning efficiency. In addition, a codebook update mechanism based on fuzzy C-means (FCM) clustering is developed to improve the utilization of codebook vectors, addressing the information loss in the discretization process. The proposed method is evaluated on the Datasets for Deep Data-Driven Reinforcement Learning (D4RL) benchmark, and experimental results show that it performs better and requires less computation on multiple complex tasks than state-of-the-art (SOTA) methods.
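A minimal sketch of a count-based penalty may help make the idea concrete. It assumes each state-action pair has already been mapped to a tuple of codebook indices; the `beta / sqrt(n + 1)` form, the names `fit_counts` and `penalty`, and the toy data are illustrative assumptions, not the paper's formulation.

```python
from collections import Counter
import math


def fit_counts(codes):
    """Count how often each discrete code (a tuple of codebook indices) occurs."""
    return Counter(codes)


def penalty(counts, code, beta=1.0):
    """Assumed penalty form: large for rare or unseen codes, small for frequent ones."""
    return beta / math.sqrt(counts[code] + 1)


# Hypothetical dataset: state-action pairs already quantized to two codebook indices.
dataset_codes = [(0, 3), (0, 3), (0, 3), (1, 2)]
counts = fit_counts(dataset_codes)

print(penalty(counts, (0, 3)))  # seen three times -> 0.5
print(penalty(counts, (7, 7)))  # never seen -> 1.0
```

In an offline RL loop, a penalty of this kind would typically be subtracted from the reward or the value target so that the policy is discouraged from choosing actions the dataset does not cover.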

AI-Driven Fuzzing for Vulnerability Assessment of 5G Traffic Steering Algorithms

Jan 26, 2026

Abstract: Traffic Steering (TS) dynamically allocates user traffic across cells to enhance Quality of Experience (QoE), load balance, and spectrum efficiency in 5G networks. However, TS algorithms remain vulnerable to adversarial conditions such as interference spikes, handover storms, and localized outages. To address this, an AI-driven fuzz testing framework based on the Non-Dominated Sorting Genetic Algorithm II (NSGA-II) is proposed to systematically expose hidden vulnerabilities. Using NVIDIA Sionna, five TS algorithms are evaluated across six scenarios. Results show that AI-driven fuzzing detects 34.3% more total vulnerabilities and 5.8% more critical failures than traditional testing, achieving superior diversity and edge-case discovery. The observed variance in critical failure detection underscores the stochastic nature of rare vulnerabilities. These findings demonstrate that AI-driven fuzzing offers an effective and scalable validation approach for improving TS algorithm robustness and ensuring resilient 6G-ready networks.
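The core selection idea behind NSGA-II is non-dominated (Pareto) filtering, which can be sketched in a few lines. This is only the first step of the algorithm (no crowding distance, crossover, or mutation), and the two objectives used here, failures triggered and scenario diversity, are hypothetical stand-ins rather than the framework's actual fitness functions.

```python
def dominates(a, b):
    """a dominates b if it is no worse on every objective and better on at least one.

    All objectives are treated as maximization targets in this sketch.
    """
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(points):
    """Return the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical fuzz cases scored as (failures triggered, scenario diversity).
scores = [(3, 1), (1, 3), (2, 2), (1, 1), (2, 1)]
print(pareto_front(scores))  # [(3, 1), (1, 3), (2, 2)]
```

Keeping the whole front, rather than a single best case, is what lets a multi-objective fuzzer retain test cases that are diverse as well as damaging.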

FairShare: Auditable Geographic Fairness for Multi-Operator LEO Spectrum Sharing

Jan 14, 2026

Abstract: Dynamic spectrum sharing (DSS) among multi-operator low Earth orbit (LEO) mega-constellations is essential for coexistence, yet prevailing policies focus almost exclusively on interference mitigation, leaving geographic equity largely unaddressed. This work investigates whether conventional DSS approaches inadvertently exacerbate the rural digital divide. Through large-scale, 3GPP-compliant non-terrestrial network (NTN) simulations with geographically distributed users, we systematically evaluate standard allocation policies. The results uncover a stark and persistent structural bias: SNR-priority scheduling induces a 1.65x urban-rural access disparity, privileging users with favorable satellite geometry. Counter-intuitively, increasing system bandwidth amplifies rather than alleviates this gap, with disparity rising from 1.0x to 1.65x as resources expand. To remedy this, we propose FairShare, a lightweight, quota-based framework that enforces geographic fairness. FairShare not only reverses the bias, achieving an affirmative disparity ratio of Delta_geo = 0.72x, but also reduces scheduler runtime by 3.3%. This demonstrates that algorithmic fairness can be achieved without trading off efficiency or complexity. Our work provides regulators with both a diagnostic metric for auditing fairness and a practical, enforceable mechanism for equitable spectrum governance in next-generation satellite networks.
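The contrast between SNR-priority and quota-based scheduling can be illustrated with a toy example. The sketch below assumes the disparity ratio is the urban access rate divided by the rural access rate; that definition, the function names, and the user data are assumptions for illustration and are not taken from the FairShare implementation.

```python
def snr_priority(users, slots):
    """Serve the `slots` users with the highest SNR."""
    return sorted(users, key=lambda u: u["snr"], reverse=True)[:slots]


def quota_schedule(users, slots, rural_quota):
    """Reserve up to `rural_quota` slots for rural users, then fill the rest by SNR."""
    rural = [u for u in users if u["region"] == "rural"]
    reserved = snr_priority(rural, min(rural_quota, slots))
    reserved_ids = {u["id"] for u in reserved}
    rest = [u for u in users if u["id"] not in reserved_ids]
    return reserved + snr_priority(rest, slots - len(reserved))


def disparity(served, users):
    """Assumed metric: urban access rate / rural access rate (undefined if no rural user is served)."""
    def rate(region):
        total = sum(1 for u in users if u["region"] == region)
        got = sum(1 for u in served if u["region"] == region)
        return got / total
    return rate("urban") / rate("rural")


# Hypothetical population: urban users see better satellite geometry, hence higher SNR.
users = [{"id": i, "region": "urban", "snr": 20 - 2 * i} for i in range(4)]
users += [{"id": 4 + i, "region": "rural", "snr": 12 - 2 * i} for i in range(4)]

print(disparity(snr_priority(users, 5), users))       # 4.0: urban users are favored
print(disparity(quota_schedule(users, 5, 3), users))  # below 1.0: the bias is reversed
```

A ratio above 1 indicates that urban users are served more often; a ratio below 1 corresponds to the "affirmative" regime the abstract describes.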

Inside Out: Evolving User-Centric Core Memory Trees for Long-Term Personalized Dialogue Systems

Jan 08, 2026

Abstract: Existing long-term personalized dialogue systems struggle to reconcile unbounded interaction streams with finite context constraints, often succumbing to memory noise accumulation, reasoning degradation, and persona inconsistency. To address these challenges, this paper proposes Inside Out, a framework that utilizes a globally maintained PersonaTree as the carrier of long-term user profiling. By constraining the trunk with an initial schema and updating the branches and leaves, PersonaTree enables controllable growth, achieving memory compression while preserving consistency. Moreover, we train a lightweight MemListener via reinforcement learning with process-based rewards to produce structured, executable, and interpretable {ADD, UPDATE, DELETE, NO_OP} operations, thereby supporting the dynamic evolution of the personalized tree. During response generation, PersonaTree is directly leveraged to enhance outputs in latency-sensitive scenarios; when users require more details, the agentic mode is triggered to introduce details on-demand under the constraints of the PersonaTree. Experiments show that PersonaTree outperforms full-text concatenation and various personalized memory systems in suppressing contextual noise and maintaining persona consistency. Notably, the small MemListener model achieves memory-operation decision performance comparable to, or even surpassing, powerful reasoning models such as DeepSeek-R1-0528 and Gemini-3-Pro.
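One way to picture the {ADD, UPDATE, DELETE, NO_OP} operations is as edits to a nested dictionary whose top-level keys (the trunk) are fixed by a schema. The sketch below is a hypothetical illustration: the `apply_op` function, the path format, and the example schema are assumptions, not the PersonaTree or MemListener interfaces.

```python
def apply_op(tree, op, path=(), value=None):
    """Apply one memory operation to a nested-dict tree.

    `path` is [trunk, ..., leaf] and needs at least two keys. The trunk must
    already exist, so only branches and leaves can grow or change.
    """
    if op == "NO_OP":
        return tree
    if op not in {"ADD", "UPDATE", "DELETE"}:
        raise ValueError(f"unknown operation: {op}")
    trunk, *branches, leaf = path
    if trunk not in tree:
        raise KeyError(f"trunk {trunk!r} is not part of the schema")
    node = tree[trunk]
    for key in branches:
        # ADD may create missing branches; UPDATE and DELETE require them to exist.
        node = node.setdefault(key, {}) if op == "ADD" else node[key]
    if op == "DELETE":
        del node[leaf]
    else:
        if op == "UPDATE" and leaf not in node:
            raise KeyError(f"cannot update missing leaf {leaf!r}")
        node[leaf] = value
    return tree


# Hypothetical schema with two trunk nodes.
tree = {"preferences": {}, "background": {}}
apply_op(tree, "ADD", ["preferences", "food", "likes"], "ramen")
apply_op(tree, "UPDATE", ["preferences", "food", "likes"], "pho")
print(tree)  # {'preferences': {'food': {'likes': 'pho'}}, 'background': {}}
```

Rejecting writes to unknown trunk keys is what keeps growth controllable in this sketch: the tree can deepen, but its top-level shape stays fixed.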

MemRL: Self-Evolving Agents via Runtime Reinforcement Learning on Episodic Memory

Jan 06, 2026

Abstract: The hallmark of human intelligence is the ability to master new skills through Constructive Episodic Simulation: retrieving past experiences to synthesize solutions for novel tasks. While Large Language Models possess strong reasoning capabilities, they struggle to emulate this self-evolution: fine-tuning is computationally expensive and prone to catastrophic forgetting, while existing memory-based methods rely on passive semantic matching that often retrieves noise. To address these challenges, we propose MemRL, a framework that enables agents to self-evolve via non-parametric reinforcement learning on episodic memory. MemRL explicitly separates the stable reasoning of a frozen LLM from the plastic, evolving memory. Unlike traditional methods, MemRL employs a Two-Phase Retrieval mechanism that filters candidates by semantic relevance and then selects them based on learned Q-values (utility). These utilities are continuously refined via environmental feedback in a trial-and-error manner, allowing the agent to distinguish high-value strategies from similar noise. Extensive experiments on HLE, BigCodeBench, ALFWorld, and Lifelong Agent Bench demonstrate that MemRL significantly outperforms state-of-the-art baselines. Our analysis experiments confirm that MemRL effectively reconciles the stability-plasticity dilemma, enabling continuous runtime improvement without weight updates.
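The two-phase retrieval described above can be sketched as a similarity filter followed by a utility ranking. In the sketch below, the cosine-similarity filter, the incremental update `q += alpha * (reward - q)`, and all names and numbers are illustrative assumptions; the abstract does not specify the actual scoring or update rule.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def retrieve(query, memory, k_candidates=2, k_final=1):
    """Phase 1: keep the most similar items. Phase 2: rank them by learned utility."""
    candidates = sorted(memory, key=lambda m: cosine(query, m["emb"]), reverse=True)
    candidates = candidates[:k_candidates]
    return sorted(candidates, key=lambda m: m["q"], reverse=True)[:k_final]


def update_q(item, reward, alpha=0.5):
    """Assumed update: move the stored utility toward the observed reward."""
    item["q"] += alpha * (reward - item["q"])


# Hypothetical episodic memory with toy 2-d embeddings and utilities.
memory = [
    {"name": "A", "emb": [1.0, 0.0], "q": 0.2},
    {"name": "B", "emb": [0.9, 0.1], "q": 0.8},
    {"name": "C", "emb": [0.0, 1.0], "q": 0.9},
]
chosen = retrieve([1.0, 0.0], memory)[0]
print(chosen["name"])  # B: similar to the query and more useful than A

update_q(chosen, reward=0.0)  # the retrieved strategy failed this time
print(round(chosen["q"], 3))  # 0.4
```

Item C has the highest utility but is filtered out in phase 1 because it is irrelevant to the query, while A is relevant but loses to B in phase 2. Only the memory entries change; no model weights are touched.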

SafeLoad: Efficient Admission Control Framework for Identifying Memory-Overloading Queries in Cloud Data Warehouses

Jan 05, 2026

Abstract: Memory overload is a common form of resource exhaustion in cloud data warehouses. When database queries fail due to memory overload, the failure not only wastes critical resources such as CPU time but also disrupts the execution of core business processes, as memory-overloading (MO) queries are typically part of complex workflows. Identifying such queries in advance and scheduling them to memory-rich serverless clusters can prevent resource wastage and query execution failure. Therefore, cloud data warehouses need an admission control framework with high prediction precision, interpretability, efficiency, and adaptability to effectively identify MO queries. However, existing admission control frameworks primarily focus on scenarios like SLA satisfaction and resource isolation, with limited precision in identifying MO queries. Moreover, there is a lack of publicly available MO-labeled datasets with workloads for training and benchmarking. To tackle these challenges, we propose SafeLoad, the first query admission control framework specifically designed to identify MO queries. Alongside, we release SafeBench, an open-source, industrial-scale benchmark for this task, which includes 150 million real queries. SafeLoad first filters out memory-safe queries using an interpretable discriminative rule. It then applies a hybrid architecture that integrates both a global model and cluster-level models, supplemented by a misprediction correction module to identify MO queries. Additionally, a self-tuning quota management mechanism dynamically adjusts prediction quotas per cluster to improve precision. Experimental results show that SafeLoad achieves state-of-the-art prediction performance with low online and offline time overhead. Specifically, SafeLoad improves precision by up to 66% over the best baseline and reduces wasted CPU time by up to 8.09x compared to scenarios without SafeLoad.

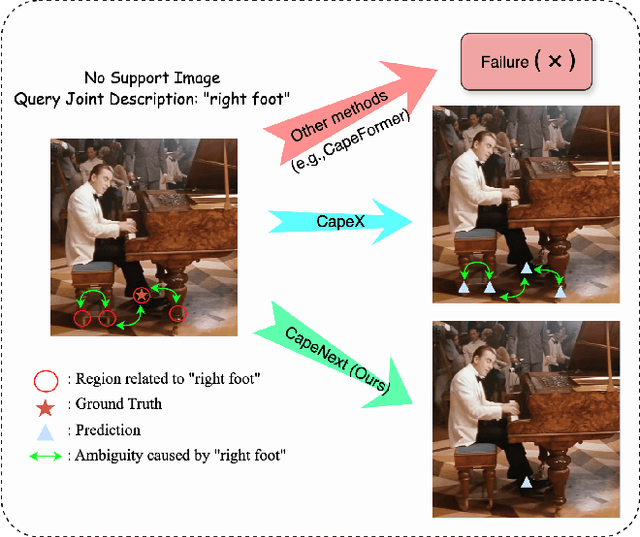

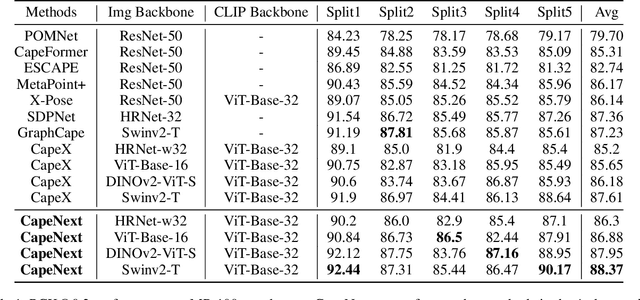

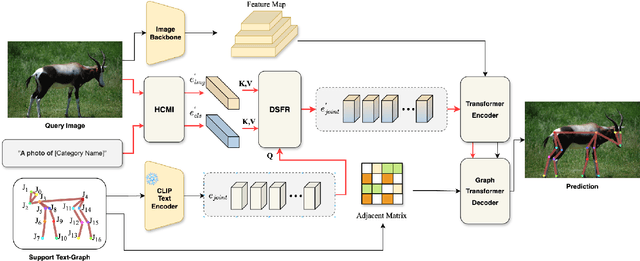

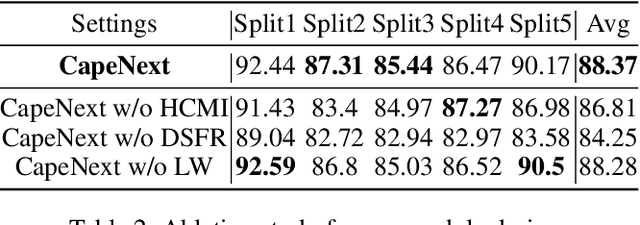

CapeNext: Rethinking and refining dynamic support information for category-agnostic pose estimation

Nov 17, 2025

Abstract: Recent research in Category-Agnostic Pose Estimation (CAPE) has adopted fixed textual keypoint descriptions as a semantic prior for two-stage pose matching frameworks. While this paradigm enhances robustness and flexibility by removing the dependency on support images, our critical analysis reveals two inherent limitations of static joint embedding: (1) polysemy-induced cross-category ambiguity during the matching process (e.g., the concept "leg" exhibiting divergent visual manifestations across humans and furniture), and (2) insufficient discriminability for fine-grained intra-category variations (e.g., posture and fur discrepancies between a sleeping white cat and a standing black cat). To overcome these challenges, we propose a new framework that integrates hierarchical cross-modal interaction with dual-stream feature refinement, enhancing the joint embedding with both class-level and instance-specific cues from textual descriptions and specific images. Experiments on the MP-100 dataset demonstrate that, regardless of the network backbone, CapeNext consistently outperforms state-of-the-art CAPE methods by a large margin.

TAdaRAG: Task Adaptive Retrieval-Augmented Generation via On-the-Fly Knowledge Graph Construction

Nov 16, 2025

Abstract: Retrieval-Augmented Generation (RAG) improves large language models by retrieving external knowledge, but that knowledge is often truncated into smaller chunks to fit the input context window, which causes information loss and, in turn, response hallucinations and broken reasoning chains. Moreover, traditional RAG retrieves unstructured knowledge, introducing irrelevant details that hinder accurate reasoning. To address these issues, we propose TAdaRAG, a novel RAG framework for on-the-fly task-adaptive knowledge graph construction from external sources. Specifically, we design an intent-driven routing mechanism to a domain-specific extraction template, followed by supervised fine-tuning and a reinforcement learning-based implicit extraction mechanism, ensuring concise, coherent, and non-redundant knowledge integration. Evaluations on six public benchmarks and a real-world business benchmark (NowNewsQA) across three backbone models demonstrate that TAdaRAG outperforms existing methods across diverse domains and long-text tasks, highlighting its strong generalization and practical effectiveness.

HaluMem: Evaluating Hallucinations in Memory Systems of Agents

Nov 05, 2025

Abstract: Memory systems are key components that enable AI systems such as LLMs and AI agents to achieve long-term learning and sustained interaction. However, during memory storage and retrieval, these systems frequently exhibit memory hallucinations, including fabrication, errors, conflicts, and omissions. Existing evaluations of memory hallucinations are primarily end-to-end question answering, which makes it difficult to localize the operational stage within the memory system where hallucinations arise. To address this, we introduce the Hallucination in Memory Benchmark (HaluMem), the first operation-level hallucination evaluation benchmark tailored to memory systems. HaluMem defines three evaluation tasks (memory extraction, memory updating, and memory question answering) to comprehensively reveal hallucination behaviors across different operational stages of interaction. To support evaluation, we construct user-centric, multi-turn human-AI interaction datasets, HaluMem-Medium and HaluMem-Long. Both include about 15k memory points and 3.5k multi-type questions. The average dialogue length per user reaches 1.5k and 2.6k turns, with context lengths exceeding 1M tokens, enabling evaluation of hallucinations across different context scales and task complexities. Empirical studies based on HaluMem show that existing memory systems tend to generate and accumulate hallucinations during the extraction and updating stages, which subsequently propagate errors to the question answering stage. Future research should focus on developing interpretable and constrained memory operation mechanisms that systematically suppress hallucinations and improve memory reliability.

Adversarial Preference Learning for Robust LLM Alignment

May 30, 2025

Abstract: Modern language models often rely on Reinforcement Learning from Human Feedback (RLHF) to encourage safe behaviors. However, they remain vulnerable to adversarial attacks due to three key limitations: (1) the inefficiency and high cost of human annotation, (2) the vast diversity of potential adversarial attacks, and (3) the risk of feedback bias and reward hacking. To address these challenges, we introduce Adversarial Preference Learning (APL), an iterative adversarial training method incorporating three key innovations. First, it uses a direct harmfulness metric based on the model's intrinsic preference probabilities, eliminating reliance on external assessment. Second, it employs a conditional generative attacker that synthesizes input-specific adversarial variations. Third, it adopts an iterative framework with automated closed-loop feedback, enabling continuous adaptation through vulnerability discovery and mitigation. Experiments on Mistral-7B-Instruct-v0.3 demonstrate that APL significantly enhances robustness, achieving an 83.33% harmlessness win rate over the base model (evaluated by GPT-4o), reducing harmful outputs from 5.88% to 0.43% (measured by LLaMA-Guard), and lowering attack success rate by up to 65% according to HarmBench. Notably, APL maintains competitive utility, with an MT-Bench score of 6.59 (comparable to the baseline 6.78) and an LC-WinRate of 46.52% against the base model.
