Wai Lam

Contextualized Knowledge-aware Attentive Neural Network: Enhancing Answer Selection with Knowledge

Apr 12, 2021

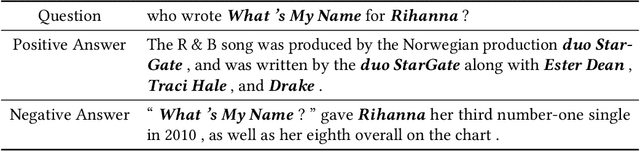

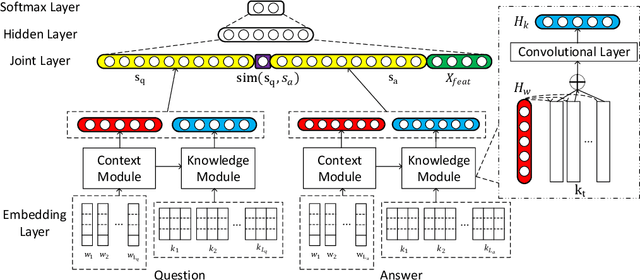

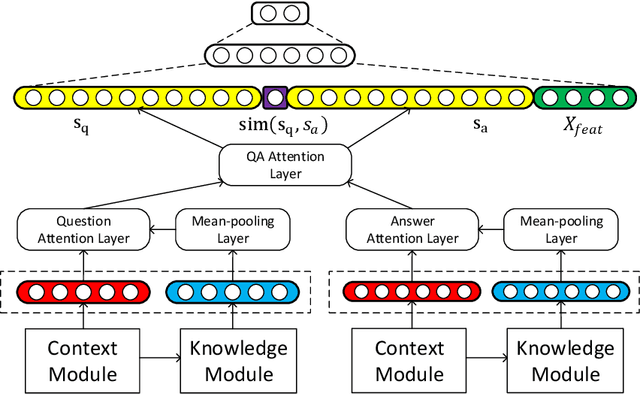

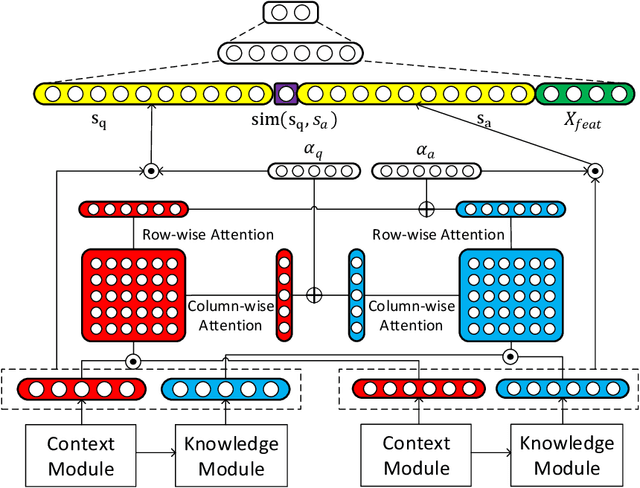

Abstract: Answer selection, which is involved in many natural language processing applications such as dialog systems and question answering (QA), is an important yet challenging task in practice, since conventional methods typically ignore diverse real-world background knowledge. In this paper, we extensively investigate approaches to enhancing the answer selection model with external knowledge from a knowledge graph (KG). First, we present a context-knowledge interaction learning framework, Knowledge-aware Neural Network (KNN), which learns the QA sentence representations by considering a tight interaction between the external knowledge from the KG and the textual information. Then, we develop two kinds of knowledge-aware attention mechanisms to summarize both the context-based and knowledge-based interactions between questions and answers. To handle the diversity and complexity of KG information, we further propose a Contextualized Knowledge-aware Attentive Neural Network (CKANN), which improves the knowledge representation learning with structure information via a customized Graph Convolutional Network (GCN) and comprehensively learns context-based and knowledge-based sentence representations via the multi-view knowledge-aware attention mechanism. We evaluate our method on four widely-used benchmark QA datasets: WikiQA, TREC QA, InsuranceQA and Yahoo QA. Results verify the benefits of incorporating external knowledge from the KG, and show the robust superiority and extensive applicability of our method.

A Theoretical Analysis of the Repetition Problem in Text Generation

Jan 28, 2021

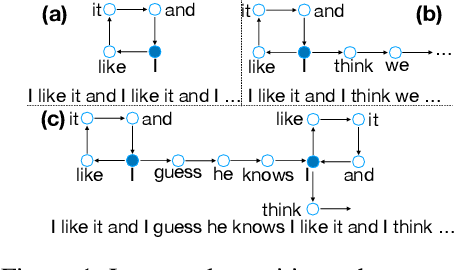

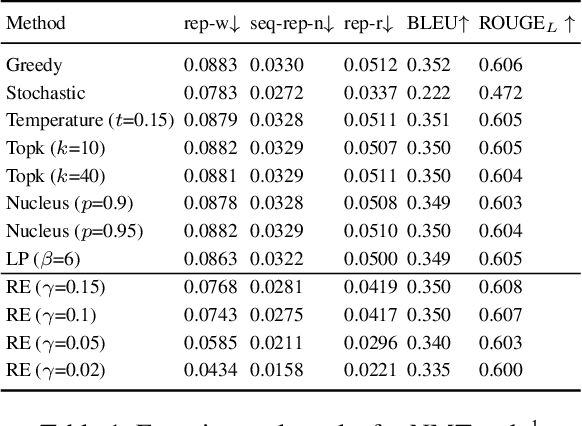

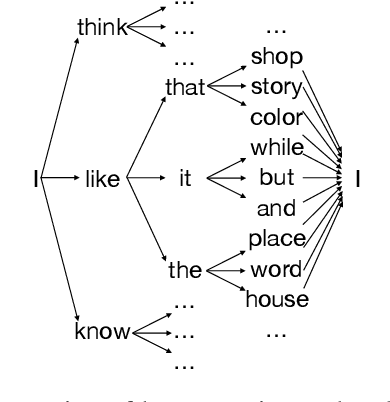

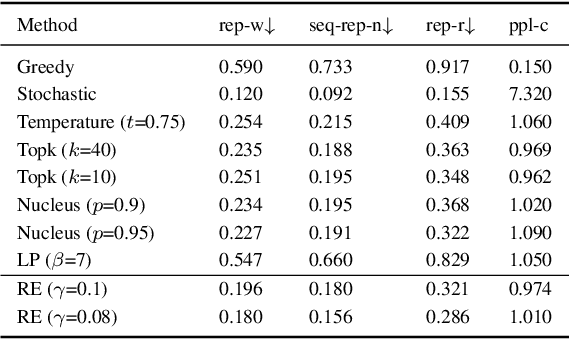

Abstract: Text generation tasks, including translation, summarization, and language modeling, have seen rapid growth in recent years. Despite the remarkable achievements, the repetition problem has been observed in nearly all text generation models, substantially undermining generation performance. To solve the repetition problem, many methods have been proposed, but there is no existing theoretical analysis to show why this problem happens and how it is resolved. In this paper, we propose a new framework for theoretical analysis of the repetition problem. We first define the Average Repetition Probability (ARP) to characterize the repetition problem quantitatively. Then, we conduct an extensive analysis of the Markov generation model and derive several upper bounds of the average repetition probability with intuitive understanding. We show that most of the existing methods are essentially minimizing the upper bounds explicitly or implicitly. Grounded on our theory, we show that the repetition problem is, unfortunately, caused by the traits of our language itself. One major reason is that there exist too many words predicting the same word as the subsequent word with high probability. Consequently, it is easy to go back to that word and form repetitions; we dub this the high inflow problem. Furthermore, we derive a concentration bound of the average repetition probability for a general generation model. Finally, based on the theoretical upper bounds, we propose a novel rebalanced encoding approach to alleviate the high inflow problem. The experimental results show that our theoretical framework is applicable to general generation models and that our proposed rebalanced encoding approach alleviates the repetition problem significantly. The source code of this paper can be obtained from https://github.com/fuzihaofzh/repetition-problem-nlg.
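To make the "high inflow" intuition concrete, here is a minimal sketch on a toy first-order Markov model over four tokens. The transition matrices, the function names, and the two-step return probability used as a repetition proxy are all assumptions made for illustration; this is not the paper's definition of ARP or its bounds.

```python
# Illustrative only: a toy first-order Markov "generation model" over 4 tokens.
# p[i][j] is the probability that token j follows token i.
# The two-step return probability below is a simple stand-in for repetition,
# NOT the paper's Average Repetition Probability.

def matmul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def inflow(p):
    """Column sums: total probability mass flowing into each token."""
    n = len(p)
    return [sum(p[i][j] for i in range(n)) for j in range(n)]

def two_step_return(p):
    """Average probability of emitting the same token again two steps later."""
    p2 = matmul(p, p)
    return sum(p2[i][i] for i in range(len(p))) / len(p)

# Token 0 is a "high inflow" token: every other token predicts it strongly.
skewed = [
    [0.1, 0.3, 0.3, 0.3],
    [0.7, 0.1, 0.1, 0.1],
    [0.7, 0.1, 0.1, 0.1],
    [0.7, 0.1, 0.1, 0.1],
]
# Baseline: every token is equally likely after every other token.
uniform = [[0.25] * 4 for _ in range(4)]

print("inflow (skewed): ", inflow(skewed))
print("return (skewed): ", two_step_return(skewed))
print("return (uniform):", two_step_return(uniform))
```

In this toy setting the skewed matrix, where most tokens point back to token 0, yields a higher two-step return probability than the uniform one, which matches the qualitative claim that concentrated inflow makes it easy to come back to the same word.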

Unstructured Knowledge Access in Task-oriented Dialog Modeling using Language Inference, Knowledge Retrieval and Knowledge-Integrative Response Generation

Jan 15, 2021

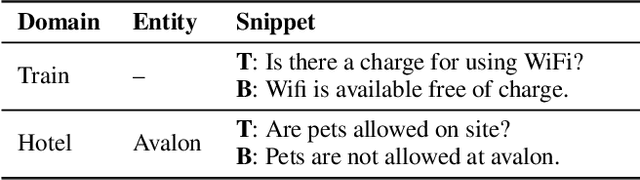

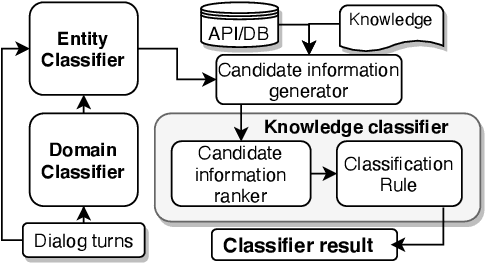

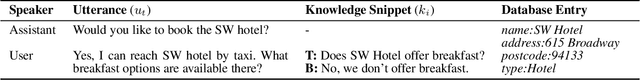

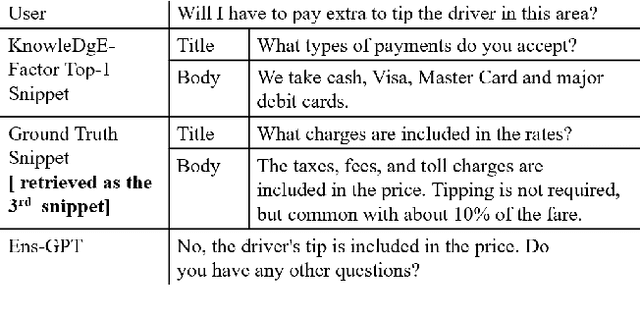

Abstract: Dialog systems enriched with external knowledge can handle user queries that are outside the scope of the supporting databases/APIs. In this paper, we follow the baseline provided in DSTC9 Track 1 and propose three subsystems, KDEAK, KnowleDgEFactor, and Ens-GPT, which form the pipeline for a task-oriented dialog system capable of accessing unstructured knowledge. Specifically, KDEAK performs knowledge-seeking turn detection by formulating the problem as natural language inference using knowledge from dialogs, databases and FAQs. KnowleDgEFactor accomplishes the knowledge selection task by formulating a factorized knowledge/document retrieval problem with three modules performing domain, entity and knowledge level analyses. Ens-GPT generates a response by first processing multiple knowledge snippets, followed by an ensemble algorithm that decides whether the response should be derived solely from a GPT2-XL model or regenerated in combination with the top-ranking knowledge snippet. Experimental results demonstrate that the proposed pipeline system outperforms the baseline and generates high-quality responses, achieving an improvement of at least 58.77% in BLEU-4 score.

Narrative Incoherence Detection

Dec 21, 2020

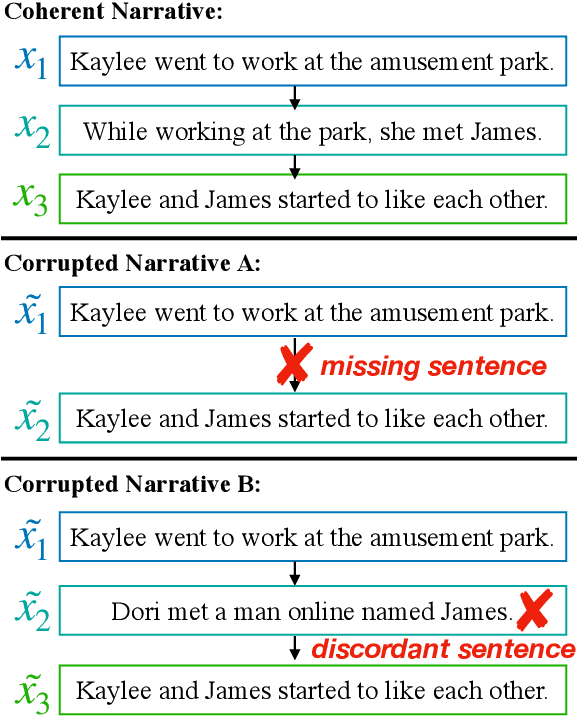

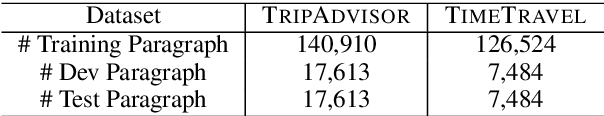

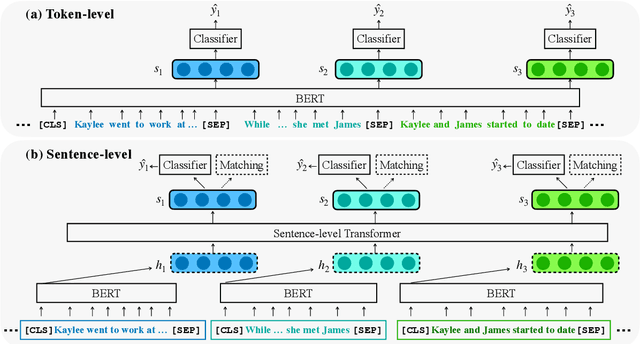

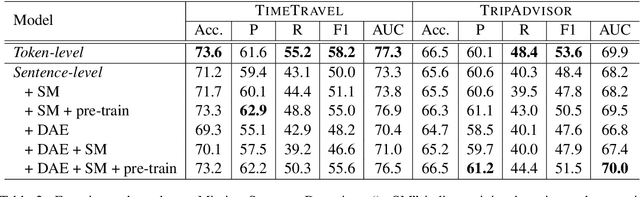

Abstract: Motivated by the increasing popularity of intelligent editing assistants, we introduce and investigate the task of narrative incoherence detection: given a (corrupted) long-form narrative, decide whether there exists some semantic discrepancy in the narrative flow. Specifically, we focus on missing sentence and incoherent sentence detection. Despite its simple setup, this task is challenging, as the model needs to understand and analyze a multi-sentence narrative text and make decisions at the sentence level. As an initial step towards this task, we implement several baselines that either directly analyze the raw text (token-level) or analyze learned sentence representations (sentence-level). We observe that while token-level modeling enjoys greater expressive power and hence better performance, sentence-level modeling possesses an advantage in efficiency and flexibility. With pre-training on large-scale data and cycle-consistent sentence embedding, our extended sentence-level model can achieve detection accuracy comparable to the token-level model. As a by-product, such a strategy enables simultaneous incoherence detection and infilling/modification suggestions.

Unsupervised Cross-lingual Adaptation for Sequence Tagging and Beyond

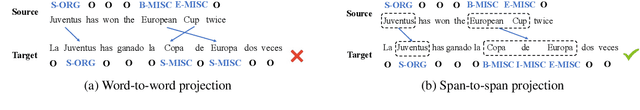

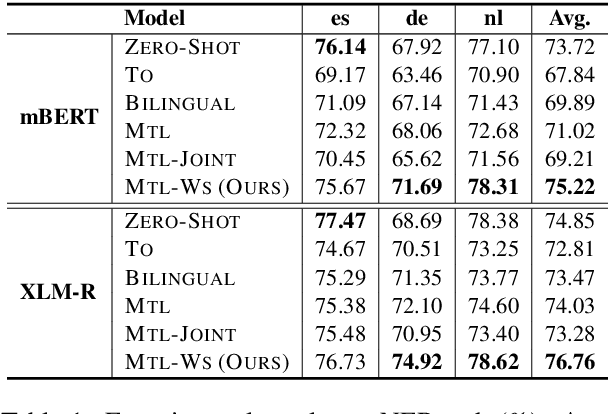

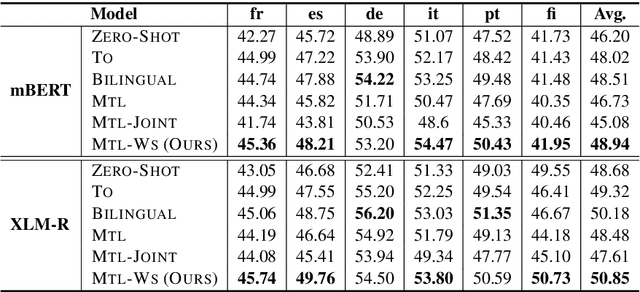

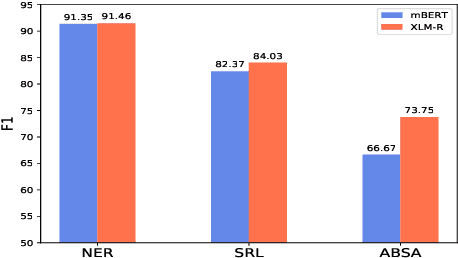

Oct 23, 2020

Abstract: Cross-lingual adaptation with multilingual pre-trained language models (mPTLMs) mainly consists of two lines of work: the zero-shot approach and the translation-based approach, which have been studied extensively on sequence-level tasks. We further verify the efficacy of these cross-lingual adaptation approaches by evaluating their performance on more fine-grained sequence tagging tasks. After re-examining their strengths and drawbacks, we propose a novel framework to consolidate the zero-shot approach and the translation-based approach for better adaptation performance. Instead of simply augmenting the source data with the machine-translated data, we tailor-make a warm-up mechanism to quickly update the mPTLMs with the gradients estimated on a small amount of translated data. Then, the adaptation approach is applied to the refined parameters and the cross-lingual transfer is performed in a warm-start way. The experimental results on nine target languages demonstrate that our method is beneficial to the cross-lingual adaptation of various sequence tagging tasks.

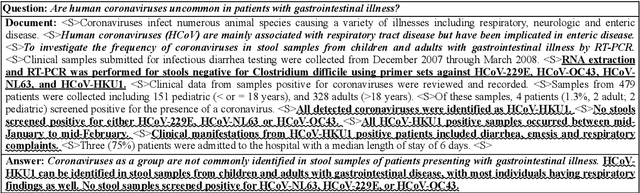

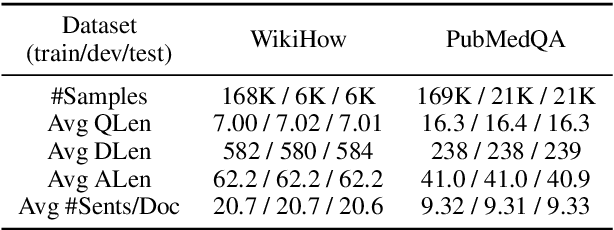

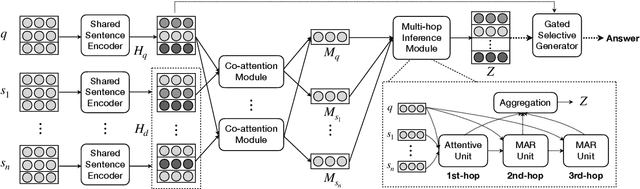

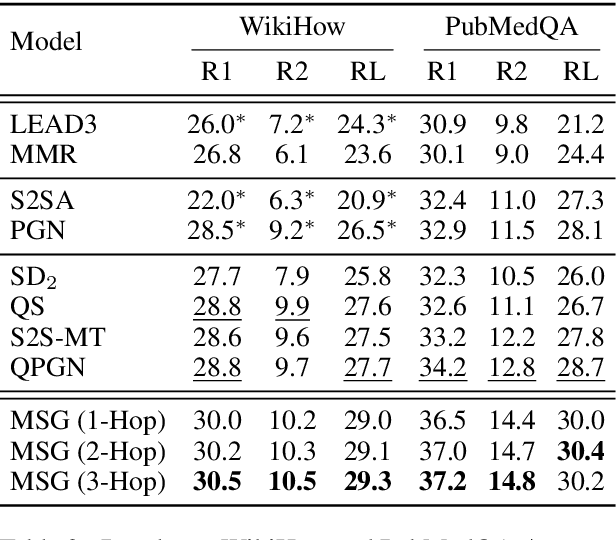

Multi-hop Inference for Question-driven Summarization

Oct 08, 2020

Abstract: Question-driven summarization has recently been studied as an effective approach to summarizing the source document to produce concise but informative answers for non-factoid questions. In this work, we propose a novel question-driven abstractive summarization method, Multi-hop Selective Generator (MSG), to incorporate multi-hop reasoning into question-driven summarization and, meanwhile, provide justifications for the generated summaries. Specifically, we jointly model the relevance to the question and the interrelation among different sentences via a human-like multi-hop inference module, which captures important sentences for justifying the summarized answer. A gated selective pointer generator network with a multi-view coverage mechanism is designed to integrate diverse information from different perspectives. Experimental results show that the proposed method consistently outperforms state-of-the-art methods on two non-factoid QA datasets, namely WikiHow and PubMedQA.

Partially-Aligned Data-to-Text Generation with Distant Supervision

Oct 03, 2020

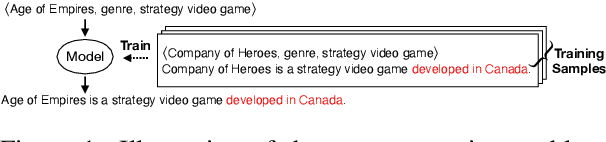

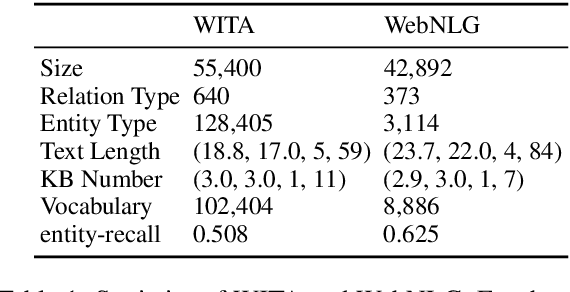

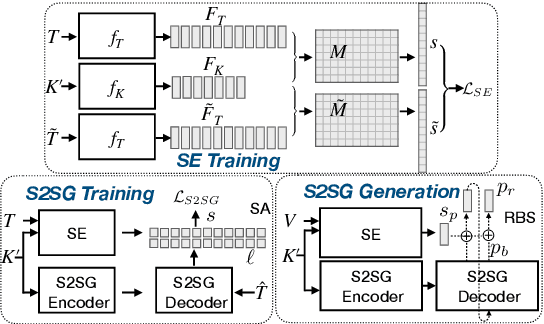

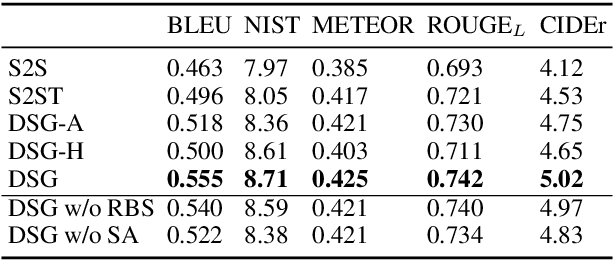

Abstract: The Data-to-Text task aims to generate human-readable text describing given structured data, enabling greater interpretability. However, the typical generation task is confined to a few particular domains since it requires well-aligned data, which is difficult and expensive to obtain. Using partially-aligned data is an alternative way of solving the dataset scarcity problem. This kind of data is much easier to obtain since it can be produced automatically. However, using this kind of data induces the over-generation problem, posing difficulties for existing models, which tend to add unrelated excerpts during the generation procedure. In order to effectively utilize automatically annotated partially-aligned datasets, we extend the traditional generation task to a refined task called Partially-Aligned Data-to-Text Generation (PADTG), which is more practical since it utilizes automatically annotated data for training and thus considerably expands the application domains. To tackle this new task, we propose a novel distant supervision generation framework. It first estimates the input data's supportiveness for each target word with an estimator and then applies a supportiveness adaptor and a rebalanced beam search to mitigate the over-generation problem in the training and generation phases respectively. We also contribute a partially-aligned dataset (the data and source code of this paper can be obtained from https://github.com/fuzihaofzh/distant_supervision_nlg) by sampling sentences from Wikipedia and automatically extracting corresponding KB triples for each sentence from Wikidata. The experimental results show that our framework outperforms all baseline models and verify the feasibility of utilizing partially-aligned data.

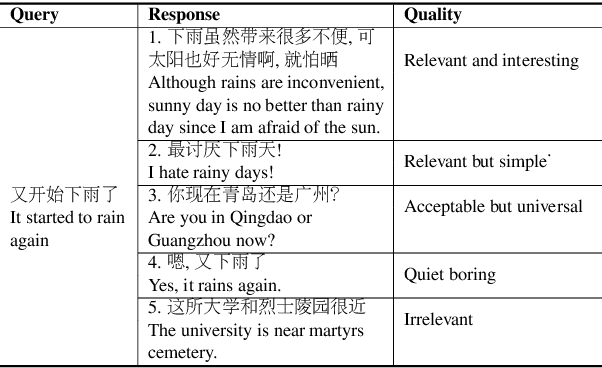

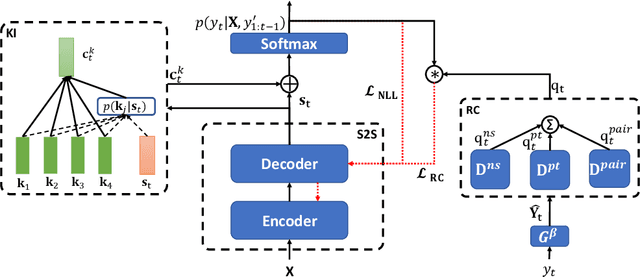

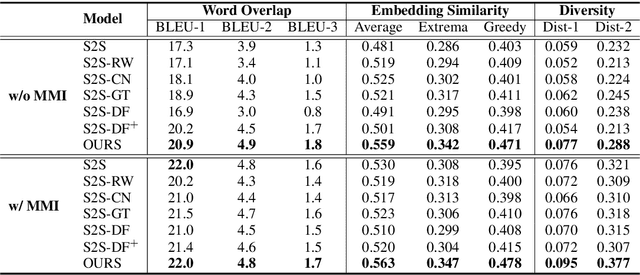

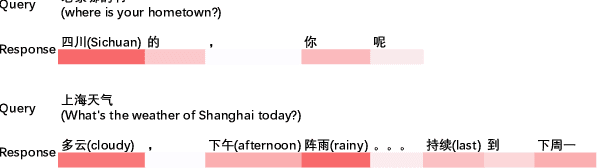

Enhancing Dialogue Generation via Multi-Level Contrastive Learning

Sep 19, 2020

Abstract: Most existing work on dialogue generation consists of data-driven models trained directly on corpora crawled from websites. These models mainly focus on improving the model architecture to produce better responses but pay little attention to considering the quality of the training data contrastively. In this paper, we propose a multi-level contrastive learning paradigm to model the fine-grained quality of the responses with respect to the query. A Rank-aware Calibration (RC) network is designed to construct the multi-level contrastive optimization objectives. Since these objectives are calculated at the sentence level, they may erroneously encourage/suppress the generation of uninformative/informative words. To tackle this incidental issue, on one hand, we design an exquisite token-level strategy for estimating the instance loss more accurately. On the other hand, we build a Knowledge Inference (KI) component to capture the keyword knowledge from the reference during training and exploit such information to encourage the generation of informative words. We evaluate the proposed model on a carefully annotated dialogue dataset and the results suggest that our model can generate more relevant and diverse responses compared to the baseline models.

Opinion-aware Answer Generation for Review-driven Question Answering in E-Commerce

Aug 28, 2020

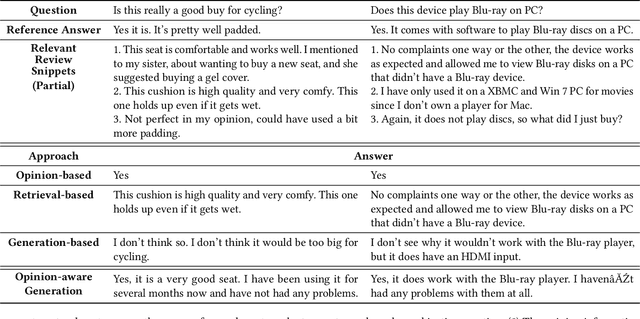

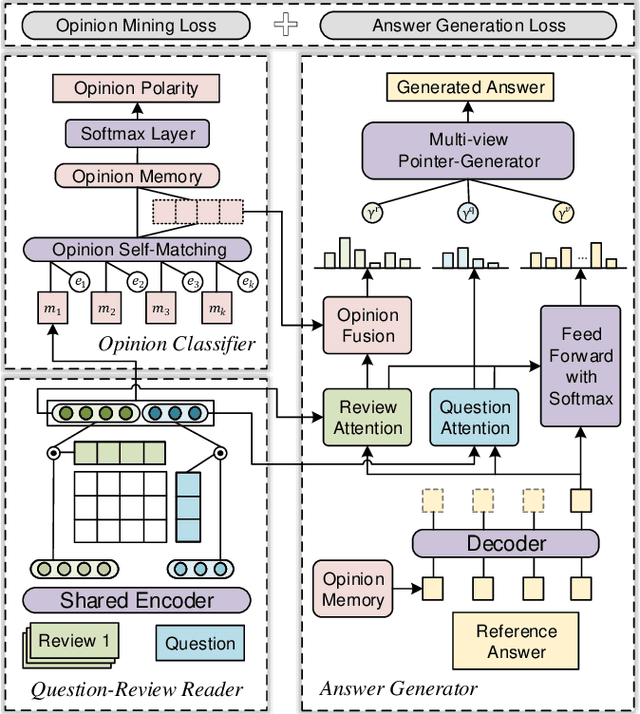

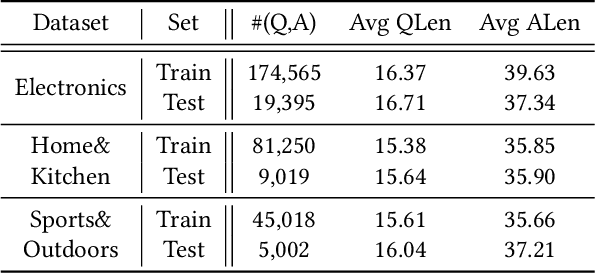

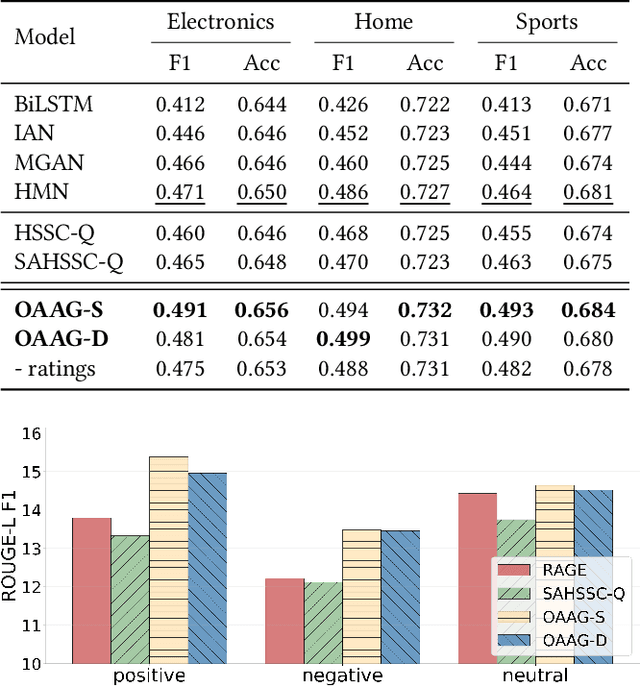

Abstract: Product-related question answering (QA) is an important but challenging task in E-Commerce. It creates great demand for automatic review-driven QA, which aims at providing instant responses to user-posted questions based on diverse product reviews. Nevertheless, the rich information about personal opinions in product reviews, which is essential to answering those product-specific questions, is underutilized in current generation-based review-driven QA studies. There are two main challenges when exploiting the opinion information from the reviews to facilitate opinion-aware answer generation: (i) jointly modeling opinionated and interrelated information between the question and reviews to capture important information for answer generation, and (ii) aggregating diverse opinion information to uncover the common opinion towards the given question. In this paper, we tackle opinion-aware answer generation by jointly learning answer generation and opinion mining tasks with a unified model. Two kinds of opinion fusion strategies, namely static and dynamic fusion, are proposed to distill and aggregate important opinion information learned from the opinion mining task into the answer generation process. Then a multi-view pointer-generator network is employed to generate opinion-aware answers for a given product-related question. Experimental results show that our method achieves superior performance on real-world E-Commerce QA datasets and effectively generates opinionated and informative answers.

Contextualized Code Representation Learning for Commit Message Generation

Jul 14, 2020

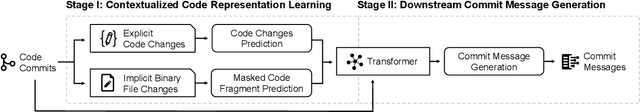

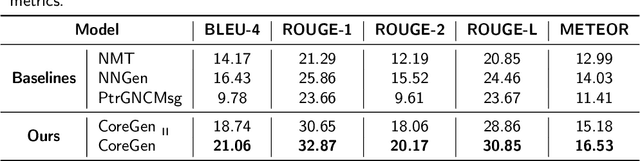

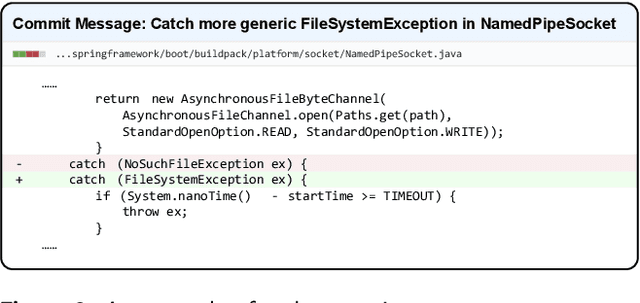

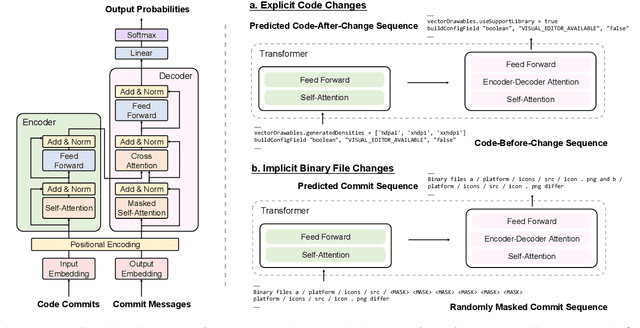

Abstract: Automatic generation of high-quality commit messages for code commits can substantially facilitate developers' work and coordination. However, the semantic gap between source code and natural language poses a major challenge for the task. Several approaches have been proposed to alleviate the challenge, but none explicitly involves code contextual information during commit message generation. Specifically, existing research adopts static embedding for code tokens, which maps a token to the same vector regardless of its context. In this paper, we propose a novel Contextualized code representation learning method for commit message Generation (CoreGen). CoreGen first learns contextualized code representations that exploit the contextual information behind code commit sequences. The learned representations of code commits, built upon Transformer, are then transferred for downstream commit message generation. Experiments on the benchmark dataset demonstrate the superior effectiveness of our model over the baseline models, with an improvement of 28.18% in terms of BLEU-4 score. Furthermore, we also highlight the future opportunities in training contextualized code representations on larger code corpora as a solution to low-resource settings and in adapting the pretrained code representations to other downstream code-to-text generation tasks.
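The distinction between static and contextualized token representations mentioned in this abstract can be sketched with a toy example. Everything below is hypothetical: the two-dimensional vectors, the `STATIC` table, and the neighbour-averaging rule are stand-ins chosen for illustration, whereas the abstract states that CoreGen's representations are built upon a Transformer.

```python
# Toy contrast between a static lookup and a context-dependent representation.
# The vectors, the window size, and the averaging rule are assumptions made
# for this sketch; they are not taken from the paper.

STATIC = {
    "return": [1.0, 0.0],
    "x": [0.0, 1.0],
    "None": [1.0, 1.0],
}

def static_embed(tokens):
    """Map each token to one fixed vector, regardless of its neighbours."""
    return [STATIC[t] for t in tokens]

def contextual_embed(tokens, window=1):
    """Average each token's vector with its neighbours inside the window."""
    base = static_embed(tokens)
    out = []
    for i in range(len(base)):
        lo, hi = max(0, i - window), min(len(base), i + window + 1)
        span = base[lo:hi]
        out.append([sum(v[d] for v in span) / len(span) for d in range(2)])
    return out

a = ["return", "x"]
b = ["return", "None"]

# The static vector for "return" is identical in both snippets...
print(static_embed(a)[0], static_embed(b)[0])
# ...while the context-dependent vector changes with the following token.
print(contextual_embed(a)[0], contextual_embed(b)[0])
```

The point of the sketch is only that a context-dependent representation can assign the same token different vectors in different code contexts, which a static embedding table cannot do.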
