Siyang Song

MAUGen: A Unified Diffusion Approach for Multi-Identity Facial Expression and AU Label Generation

Jan 31, 2026

Abstract:The lack of large-scale, demographically diverse face images with precise Action Unit (AU) occurrence and intensity annotations has long been recognized as a fundamental bottleneck in developing generalizable AU recognition systems. In this paper, we propose MAUGen, a diffusion-based multi-modal framework that jointly generates a large collection of photorealistic facial expressions and anatomically consistent AU labels, including both occurrence and intensity, conditioned on a single descriptive text prompt. Our MAUGen involves two key modules: (1) a Multi-modal Representation Learning (MRL) module that captures the relationships among the paired textual description, facial identity, expression image, and AU activations within a unified latent space; and (2) a Diffusion-based Image label Generator (DIG) that decodes the joint representation into aligned facial image-label pairs across diverse identities. Under this framework, we introduce Multi-Identity Facial Action (MIFA), a large-scale multimodal synthetic dataset featuring comprehensive AU annotations and identity variations. Extensive experiments demonstrate that MAUGen outperforms existing methods in synthesizing photorealistic, demographically diverse facial images along with semantically aligned AU labels.

Beyond Segmentation: An Oil Spill Change Detection Framework Using Synthetic SAR Imagery

Jan 05, 2026

Abstract:Marine oil spills are urgent environmental hazards that demand rapid and reliable detection to minimise ecological and economic damage. While Synthetic Aperture Radar (SAR) imagery has become a key tool for large-scale oil spill monitoring, most existing detection methods rely on deep learning-based segmentation applied to single SAR images. These static approaches struggle to distinguish true oil spills from visually similar oceanic features (e.g., biogenic slicks or low-wind zones), leading to high false positive rates and limited generalizability, especially under data-scarce conditions. To overcome these limitations, we introduce Oil Spill Change Detection (OSCD), a new bi-temporal task that focuses on identifying changes between pre- and post-spill SAR images. As real co-registered pre-spill imagery is not always available, we propose the Temporal-Aware Hybrid Inpainting (TAHI) framework, which generates synthetic pre-spill images from post-spill SAR data. TAHI integrates two key components: High-Fidelity Hybrid Inpainting for oil-free reconstruction, and Temporal Realism Enhancement for radiometric and sea-state consistency. Using TAHI, we construct the first OSCD dataset and benchmark several state-of-the-art change detection models. Results show that OSCD significantly reduces false positives and improves detection accuracy compared to conventional segmentation, demonstrating the value of temporally-aware methods for reliable, scalable oil spill monitoring in real-world scenarios.

D^3-Talker: Dual-Branch Decoupled Deformation Fields for Few-Shot 3D Talking Head Synthesis

Aug 20, 2025

Abstract:A key challenge in 3D talking head synthesis lies in the reliance on a long-duration talking head video to train a new model for each target identity from scratch. Recent methods have attempted to address this issue by extracting general features from audio through pre-training models. However, since audio contains information irrelevant to lip motion, existing approaches typically struggle to map the given audio to realistic lip behaviors in the target face when trained on only a few frames, causing poor lip synchronization and talking head image quality. This paper proposes D^3-Talker, a novel approach that constructs a static 3D Gaussian attribute field and employs audio and Facial Motion signals to independently control two distinct Gaussian attribute deformation fields, effectively decoupling the predictions of general and personalized deformations. We design a novel similarity contrastive loss function during pre-training to achieve more thorough decoupling. Furthermore, we integrate a Coarse-to-Fine module to refine the rendered images, alleviating blurriness caused by head movements and enhancing overall image quality. Extensive experiments demonstrate that D^3-Talker outperforms state-of-the-art methods in both high-fidelity rendering and accurate audio-lip synchronization with limited training data. Our code will be provided upon acceptance.

Learning Personalised Human Internal Cognition from External Expressive Behaviours for Real Personality Recognition

Jul 31, 2025

Abstract:Automatic real personality recognition (RPR) aims to evaluate human real personality traits from their expressive behaviours. However, most existing solutions generally act as external observers to infer observers' personality impressions based on target individuals' expressive behaviours, which significantly deviate from their real personalities and consistently lead to inferior recognition performance. Inspired by the association between real personality and human internal cognition underlying the generation of expressive behaviours, we propose a novel RPR approach that efficiently simulates personalised internal cognition from easily accessible, short external audio-visual behaviours expressed by the target individual. The simulated personalised cognition, represented as a set of network weights that enforce the personalised network to reproduce the individual-specific facial reactions, is further encoded as a novel graph containing two-dimensional node and edge feature matrices, with a novel 2D Graph Neural Network (2D-GNN) proposed for inferring real personality traits from it. To simulate real personality-related cognition, an end-to-end strategy is designed to jointly train our cognition simulation, 2D graph construction, and personality recognition modules.

OmniResponse: Online Multimodal Conversational Response Generation in Dyadic Interactions

May 27, 2025

Abstract:In this paper, we introduce Online Multimodal Conversational Response Generation (OMCRG), a novel task that aims to generate synchronized verbal and non-verbal listener feedback online, conditioned on the speaker's multimodal input. OMCRG reflects natural dyadic interactions and poses new challenges in achieving synchronization between the generated audio and facial responses of the listener. To address these challenges, we introduce text as an intermediate modality to bridge the audio and facial responses. We hence propose OmniResponse, a Multimodal Large Language Model (MLLM) that autoregressively generates high-quality multi-modal listener responses. OmniResponse leverages a pretrained LLM enhanced with two novel components: Chrono-Text, which temporally anchors generated text tokens, and TempoVoice, a controllable online TTS module that produces speech synchronized with facial reactions. To support further OMCRG research, we present ResponseNet, a new dataset comprising 696 high-quality dyadic interactions featuring synchronized split-screen videos, multichannel audio, transcripts, and facial behavior annotations. Comprehensive evaluations conducted on ResponseNet demonstrate that OmniResponse significantly outperforms baseline models in terms of semantic speech content, audio-visual synchronization, and generation quality.

REACT 2025: the Third Multiple Appropriate Facial Reaction Generation Challenge

May 22, 2025

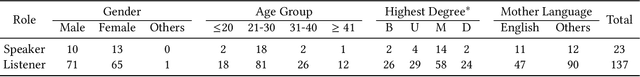

Abstract:In dyadic interactions, a broad spectrum of human facial reactions might be appropriate for responding to each human speaker behaviour. Following the successful organisation of the REACT 2023 and REACT 2024 challenges, we are proposing the REACT 2025 challenge encouraging the development and benchmarking of Machine Learning (ML) models that can be used to generate multiple appropriate, diverse, realistic and synchronised human-style facial reactions expressed by human listeners in response to an input stimulus (i.e., audio-visual behaviours expressed by their corresponding speakers). As a key part of the challenge, we provide challenge participants with the first natural and large-scale multi-modal MAFRG dataset (called MARS) recording 137 human-human dyadic interactions containing a total of 2856 interaction sessions covering five different topics. In addition, this paper also presents the challenge guidelines and the performance of our baselines on the two proposed sub-challenges: Offline MAFRG and Online MAFRG. The challenge baseline code is publicly available at https://github.com/reactmultimodalchallenge/baseline_react2025

Oral Imaging for Malocclusion Issues Assessments: OMNI Dataset, Deep Learning Baselines and Benchmarking

May 21, 2025

Abstract:Malocclusion is a major challenge in orthodontics, and its complex presentation and diverse clinical manifestations make accurate localization and diagnosis particularly important. Currently, one of the major shortcomings facing the field of dental image analysis is the lack of large-scale, accurately labeled datasets dedicated to malocclusion issues, which limits the development of automated diagnostics in the field of dentistry and leads to a lack of diagnostic accuracy and efficiency in clinical practice. Therefore, in this study, we propose the Oral and Maxillofacial Natural Images (OMNI) dataset, a novel and comprehensive dental image dataset aimed at advancing the analysis of dental images for malocclusion issues. Specifically, the dataset contains 4166 multi-view images collected from 384 participants and annotated by professional dentists. In addition, we performed a comprehensive validation of the created OMNI dataset, including three CNN-based methods, two Transformer-based methods, and one GNN-based method, and conducted automated diagnostic experiments for malocclusion issues. The experimental results show that the OMNI dataset can facilitate research on the automated diagnosis of malocclusion issues and provide a new benchmark for research in this field. Our OMNI dataset and baseline code are publicly available at https://github.com/RoundFaceJ/OMNI.

The First MPDD Challenge: Multimodal Personality-aware Depression Detection

May 15, 2025

Abstract:Depression is a widespread mental health issue affecting diverse age groups, with notable prevalence among college students and the elderly. However, existing datasets and detection methods primarily focus on young adults, neglecting the broader age spectrum and individual differences that influence depression manifestation. Current approaches often establish a direct mapping between multimodal data and depression indicators, failing to capture the complexity and diversity of depression across individuals. This challenge includes two tracks based on age-specific subsets: Track 1 uses the MPDD-Elderly dataset for detecting depression in older adults, and Track 2 uses the MPDD-Young dataset for detecting depression in younger participants. The Multimodal Personality-aware Depression Detection (MPDD) Challenge aims to address this gap by incorporating multimodal data alongside individual difference factors. We provide a baseline model that fuses audio and video modalities with individual difference information to detect depression manifestations in diverse populations. This challenge aims to promote the development of more personalized and accurate depression detection methods, advancing mental health research and fostering inclusive detection systems. More details are available on the official challenge website: https://hacilab.github.io/MPDDChallenge.github.io.

CD-Lamba: Boosting Remote Sensing Change Detection via a Cross-Temporal Locally Adaptive State Space Model

Jan 26, 2025

Abstract:Mamba, with its advantages of global perception and linear complexity, has been widely applied to identify changes of the target regions within the remote sensing (RS) images captured under complex scenarios and varied conditions. However, existing remote sensing change detection (RSCD) approaches based on Mamba frequently struggle to effectively perceive the inherent locality of change regions as they directly flatten and scan RS images (i.e., the features of the same region of changes are not distributed continuously within the sequence but are mixed with features from other regions throughout the sequence). In this paper, we propose a novel locally adaptive SSM-based approach, termed CD-Lamba, which effectively enhances the locality of change detection while maintaining global perception. Specifically, our CD-Lamba includes a Locally Adaptive State-Space Scan (LASS) strategy for locality enhancement, a Cross-Temporal State-Space Scan (CTSS) strategy for bi-temporal feature fusion, and a Window Shifting and Perception (WSP) mechanism to enhance interactions across segmented windows. These strategies are integrated into a multi-scale Cross-Temporal Locally Adaptive State-Space Scan (CT-LASS) module to effectively highlight changes and refine the generation of change representations. CD-Lamba significantly enhances local-global spatio-temporal interactions in bi-temporal images, offering improved performance in RSCD tasks. Extensive experimental results show that CD-Lamba achieves state-of-the-art performance on four benchmark datasets with a satisfactory efficiency-accuracy trade-off. Our code is publicly available at https://github.com/xwmaxwma/rschange.
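
The locality problem described in this abstract can be illustrated with a toy example. The sketch below is not the LASS strategy from the paper; it only compares plain row-major flattening with a fixed, non-adaptive window-by-window scan on a 4x4 grid. The function names, grid size, and window size are all hypothetical choices made for illustration.

```python
def row_major_positions(width, region):
    """Sequence positions of the region's pixels under plain row-major flattening."""
    return sorted(r * width + c for r, c in region)


def window_scan_order(height, width, win):
    """Visit pixels window by window, row-major inside each window."""
    order = []
    for wr in range(0, height, win):
        for wc in range(0, width, win):
            for r in range(wr, min(wr + win, height)):
                for c in range(wc, min(wc + win, width)):
                    order.append((r, c))
    return order


def window_positions(height, width, win, region):
    """Sequence positions of the region's pixels under the window-wise scan."""
    index = {rc: i for i, rc in enumerate(window_scan_order(height, width, win))}
    return sorted(index[rc] for rc in region)


# A hypothetical 2x2 "change region" in the top-left corner of a 4x4 image.
region = [(0, 0), (0, 1), (1, 0), (1, 1)]

flat = row_major_positions(4, region)          # positions 0, 1, 4, 5
windowed = window_positions(4, 4, 2, region)   # positions 0, 1, 2, 3
print(flat, windowed)
```

Under row-major flattening the region's four pixels are interrupted by pixels from a neighbouring region, whereas the window-wise scan keeps them contiguous in the sequence.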

Benchmarking Graph Representations and Graph Neural Networks for Multivariate Time Series Classification

Jan 14, 2025

Abstract:Multivariate Time Series Classification (MTSC) enables the analysis of complex temporal data, and thus serves as a cornerstone in various real-world applications, ranging from healthcare to finance. Since the relationships among variables in MTS usually contain crucial cues, a large number of graph-based MTSC approaches have been proposed, as the graph topology and edges can explicitly represent relationships among variables (channels), where not only various MTS graph representation learning strategies but also different Graph Neural Networks (GNNs) have been explored. Despite such progress, there is no comprehensive study that fairly benchmarks and investigates the performance of existing widely-used graph representation learning strategies/GNN classifiers across different MTSC tasks. In this paper, we present the first benchmark which systematically investigates the effectiveness of three widely-used node feature definition strategies, four edge feature learning strategies and five GNN architectures, resulting in 60 different variants for graph-based MTSC. These variants are developed and evaluated with a standardized data pipeline and training/validation/testing strategy on 26 widely-used suspensor MTSC datasets. Our experiments highlight that node features significantly influence MTSC performance, while the visualization of edge features illustrates why adaptive edge learning outperforms other edge feature learning methods. The code of the proposed benchmark is publicly available at https://github.com/CVI-yangwn/Benchmark-GNN-for-Multivariate-Time-Series-Classification.
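
The count of 60 variants follows from combining every node strategy with every edge strategy and every GNN architecture (3 x 4 x 5). A minimal sketch of that enumeration is shown below; the abstract gives only the counts, so the strategy names here are placeholders and not the names used in the benchmark.

```python
from itertools import product

# Placeholder labels: only the counts (3, 4, 5) come from the abstract.
node_strategies = [f"node_{i}" for i in range(1, 4)]
edge_strategies = [f"edge_{i}" for i in range(1, 5)]
gnn_architectures = [f"gnn_{i}" for i in range(1, 6)]

# One variant per (node strategy, edge strategy, GNN architecture) triple.
variants = list(product(node_strategies, edge_strategies, gnn_architectures))
print(len(variants))  # 60
```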
