Yuliang Zhao

Fellow, IEEE

The First MPDD Challenge: Multimodal Personality-aware Depression Detection

May 15, 2025

Abstract: Depression is a widespread mental health issue affecting diverse age groups, with notable prevalence among college students and the elderly. However, existing datasets and detection methods primarily focus on young adults, neglecting the broader age spectrum and individual differences that influence depression manifestation. Current approaches often establish a direct mapping between multimodal data and depression indicators, failing to capture the complexity and diversity of depression across individuals. The Multimodal Personality-aware Depression Detection (MPDD) Challenge aims to address this gap by incorporating multimodal data alongside individual difference factors. The challenge includes two tracks based on age-specific subsets: Track 1 uses the MPDD-Elderly dataset for detecting depression in older adults, and Track 2 uses the MPDD-Young dataset for detecting depression in younger participants. We provide a baseline model that fuses audio and video modalities with individual difference information to detect depression manifestations in diverse populations. This challenge aims to promote the development of more personalized and accurate depression detection methods, advancing mental health research and fostering inclusive detection systems. More details are available on the official challenge website: https://hacilab.github.io/MPDDChallenge.github.io.

Data-Folding and Hyperspace Coding for Multi-Dimensional Time-Series Data Imaging

Mar 10, 2022

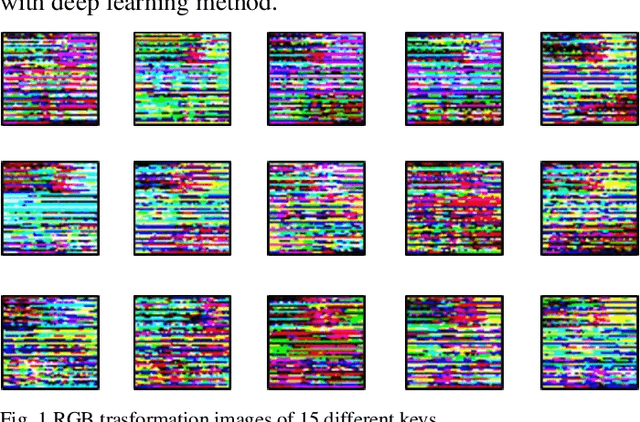

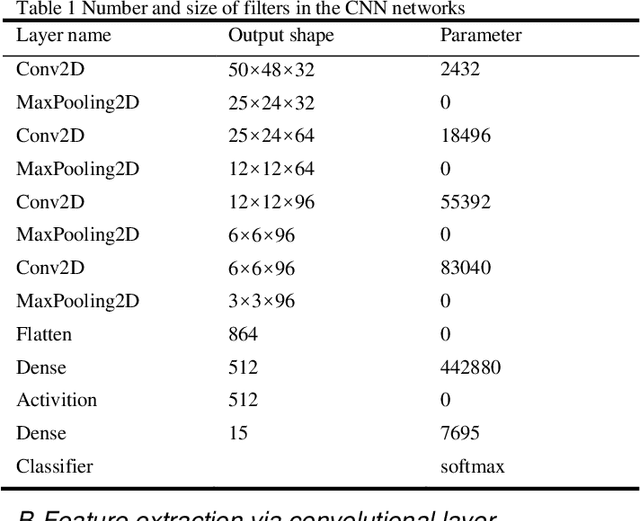

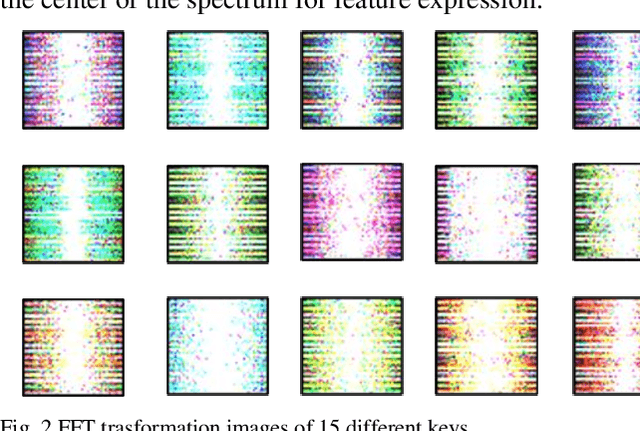

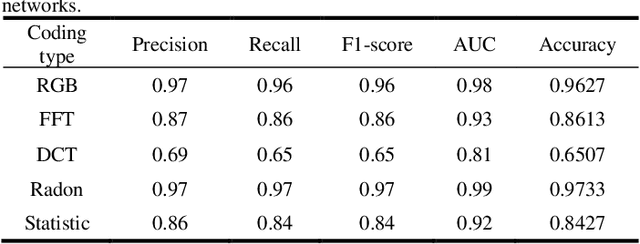

Abstract: Time-series classification and prediction are widely used in many applications. However, traditional machine learning algorithms, due to their limitations, have difficulty improving the performance of time-series classification and prediction. Inspired by the recent successes of deep learning in computer vision, we develop a new time-series image encoding method for data reconstruction. Featuring data-folding and hyperspace coding, this method breaks the barriers between time-series signals and images and establishes a close relationship between them, allowing effective application of deep learning to time-series data. Besides a raw data coding method, we also present four other extended coding methods for other potential applications. For comparison purposes, we present the results of the five different types of image coding methods on our previous keystroke recognition datasets. The results show that our method achieves an accuracy of 96.27% when RGB coding images are used, and an accuracy of up to 97.33% when Radon coding is used. We expect that this method can also be applied, and perform well, in other classification and prediction applications.
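The abstract does not spell out how data-folding works, so the following is only an illustrative sketch of the general idea of turning a 1-D signal into an image-like grid, not the paper's method. It assumes that "folding" means cutting the series into fixed-width rows, that a short final row is zero-padded, and that values are min-max scaled to 8-bit grayscale. The function names `fold_series` and `to_grayscale` and the `width` parameter are hypothetical.

```python
def fold_series(series, width):
    """Fold a 1-D sequence into rows of `width` samples.

    Assumption: a short final row is padded with zeros.
    """
    if width <= 0:
        raise ValueError("width must be positive")
    padded = list(series)
    remainder = len(padded) % width
    if remainder:
        padded.extend([0.0] * (width - remainder))
    return [padded[i:i + width] for i in range(0, len(padded), width)]


def to_grayscale(rows):
    """Min-max scale folded rows to integer pixel values in 0..255.

    Assumption: one global min/max over the whole grid, padding included.
    """
    flat = [value for row in rows for value in row]
    low, high = min(flat), max(flat)
    span = high - low
    if span == 0:
        # A constant signal carries no contrast; map it to black.
        return [[0 for _ in row] for row in rows]
    return [[round(255 * (value - low) / span) for value in row] for row in rows]


# Five samples folded into rows of three; the last row gets one padded zero.
signal = [1.0, 2.0, 3.0, 4.0, 5.0]
image = to_grayscale(fold_series(signal, width=3))
print(image)
```

The resulting grid of pixel values can be handed to any image classifier. The choice of `width` decides which samples end up vertically adjacent, so in practice it would likely be tied to a period or window length in the signal.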
