Ruoyu Zhang

corresponding author

GRACE: Designing Generative Face Video Codec via Agile Hardware-Centric Workflow

Nov 12, 2025

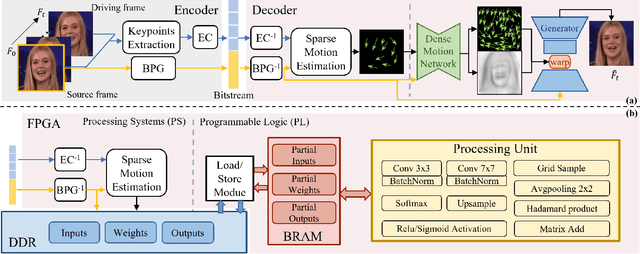

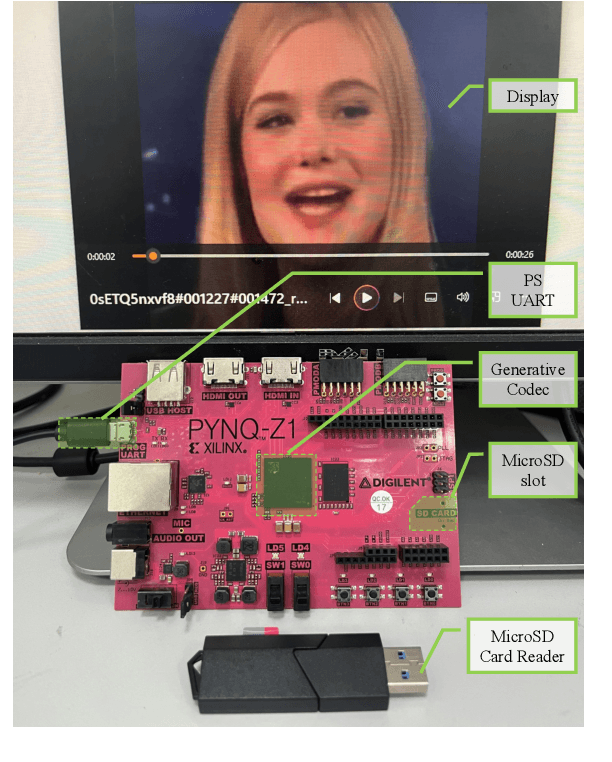

Abstract: The Animation-based Generative Codec (AGC) is an emerging paradigm for talking-face video compression. However, deploying its intricate decoder on resource- and power-constrained edge devices is challenging because of its large parameter count, its inflexibility in adapting to dynamically evolving algorithms, and the high power consumption induced by extensive computation and data transmission. This paper proposes, for the first time, a field-programmable gate array (FPGA)-oriented AGC deployment scheme for edge-computing video services. First, we analyze the AGC algorithm and apply network compression methods, including post-training static quantization and layer fusion. Next, we design an overlapped accelerator that uses the co-processor paradigm to perform computations through software-hardware co-design. The hardware processing unit comprises engines for convolution, grid sampling, upsampling, and other operations. Parallelization strategies such as double-buffered pipelines and loop unrolling are employed to fully exploit the FPGA's resources. Finally, we build an AGC FPGA prototype on the PYNQ-Z1 platform using the proposed scheme, achieving 24.9× and 4.1× higher energy efficiency than a commercial Central Processing Unit (CPU) and Graphics Processing Unit (GPU), respectively. Specifically, only 11.7 microjoules (μJ) are required per pixel reconstructed by this FPGA system.
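The abstract names post-training static quantization without giving details. As a rough illustration of the general technique (not the paper's implementation), the sketch below fixes a symmetric int8 scale from calibration data and then reuses it at inference time. The function names, the per-tensor symmetric scheme, and the sample values are all assumptions made for this example.

```python
# Sketch of symmetric per-tensor int8 post-training static quantization.
# Assumption: the scale is fixed once from calibration data ("static")
# and reused for all later inputs. Names and values are illustrative.

def calibrate_scale(calibration_values, num_bits=8):
    """Choose a fixed scale so the largest calibration magnitude maps to qmax."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for int8
    max_abs = max(abs(v) for v in calibration_values)
    return max_abs / qmax if max_abs > 0 else 1.0


def quantize(values, scale, num_bits=8):
    """Round to the nearest integer step and clamp to the signed range."""
    qmax = 2 ** (num_bits - 1) - 1
    qmin = -qmax - 1
    return [max(qmin, min(qmax, round(v / scale))) for v in values]


def dequantize(q_values, scale):
    """Map integer codes back to approximate real values."""
    return [q * scale for q in q_values]


calibration = [-1.27, 0.5, 0.9, 1.0]   # hypothetical calibration activations
scale = calibrate_scale(calibration)    # about 0.01
activations = [0.254, -0.63, 2.0]       # 2.0 lies outside the calibrated range
q = quantize(activations, scale)
restored = dequantize(q, scale)
```

Values inside the calibrated range are recovered to within half a quantization step, while out-of-range values such as 2.0 saturate at the largest code. That saturation is the usual trade-off of fixing the scale ahead of time.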

Self-Supervised and Topological Signal-Quality Assessment for Any PPG Device

Sep 15, 2025

Abstract: Wearable photoplethysmography (PPG) is embedded in billions of devices, yet its optical waveform is easily corrupted by motion, perfusion loss, and ambient light, jeopardizing downstream cardiometric analytics. Existing signal-quality assessment (SQA) methods rely either on brittle heuristics or on data-hungry supervised models. We introduce the first fully unsupervised SQA pipeline for wrist PPG. Stage 1 trains a contrastive 1-D ResNet-18 on 276 h of raw, unlabeled data from heterogeneous sources (varying in device and sampling frequency), yielding optical-emitter- and motion-invariant embeddings (i.e., the learned representation is stable across differences in LED wavelength, drive intensity, and device optics, as well as wrist motion). Stage 2 converts each 512-D encoder embedding into a 4-D topological signature via persistent homology (PH) and clusters these signatures with HDBSCAN. To produce a binary signal-quality index (SQI), the acceptable PPG signals are represented by the densest cluster while the remaining clusters are assumed to mainly contain poor-quality PPG signals. Without re-tuning, the SQI attains Silhouette, Davies-Bouldin, and Calinski-Harabasz scores of 0.72, 0.34, and 6173, respectively, on a stratified sample of 10,000 windows. In this study, we propose a hybrid self-supervised-learning--topological-data-analysis (SSL--TDA) framework that offers a drop-in, scalable, cross-device quality gate for PPG signals.
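The final step, turning cluster labels into a binary SQI, can be sketched as follows. The abstract does not say how "densest" is measured, so this example assumes a simple proxy: the cluster whose members lie closest, on average, to their own centroid. The label `-1` follows the HDBSCAN convention for noise points; the function names and the toy 4-D signatures are hypothetical.

```python
# Sketch: derive a binary signal-quality index from cluster labels.
# Assumption: "densest" = smallest mean distance of members to their centroid.
# Label -1 marks noise (HDBSCAN convention) and is always scored 0.
import math
from collections import defaultdict


def densest_cluster(signatures, labels):
    """Return the label of the cluster with the tightest members."""
    groups = defaultdict(list)
    for point, label in zip(signatures, labels):
        if label != -1:
            groups[label].append(point)

    def mean_spread(points):
        dim = len(points[0])
        centroid = [sum(p[d] for p in points) / len(points) for d in range(dim)]
        return sum(math.dist(p, centroid) for p in points) / len(points)

    return min(groups, key=lambda label: mean_spread(groups[label]))


def binary_sqi(signatures, labels):
    """1 = acceptable (member of the densest cluster), 0 = everything else."""
    good = densest_cluster(signatures, labels)
    return [1 if label == good else 0 for label in labels]


# Toy 4-D topological signatures with precomputed cluster labels.
signatures = [
    (0.0, 0.0, 0.0, 0.0), (0.1, 0.0, 0.0, 0.0), (0.0, 0.1, 0.0, 0.0),
    (5.0, 5.0, 5.0, 5.0), (7.0, 5.0, 5.0, 5.0), (5.0, 8.0, 5.0, 5.0),
    (20.0, 20.0, 20.0, 20.0),
]
labels = [0, 0, 0, 1, 1, 1, -1]
sqi = binary_sqi(signatures, labels)
```

This proxy would favor single-point clusters, which have zero spread; a minimum cluster size, as HDBSCAN enforces, avoids that case.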

Generalizable Blood Pressure Estimation from Multi-Wavelength PPG Using Curriculum-Adversarial Learning

Sep 15, 2025

Abstract: Accurate and generalizable blood pressure (BP) estimation is vital for the early detection and management of cardiovascular diseases. In this study, we enforce subject-level data splitting on a public multi-wavelength photoplethysmography (PPG) dataset and propose a generalizable BP estimation framework based on curriculum-adversarial learning. Our approach combines curriculum learning, which transitions from hypertension classification to BP regression, with domain-adversarial training that confuses subject identity to encourage the learning of subject-invariant features. Experiments show that multi-channel fusion consistently outperforms single-channel models. On the four-wavelength PPG dataset, our method achieves strong performance under strict subject-level splitting, with mean absolute errors (MAE) of 14.2 mmHg for systolic blood pressure (SBP) and 6.4 mmHg for diastolic blood pressure (DBP). Additionally, ablation studies validate the effectiveness of both the curriculum and adversarial components. These results highlight the potential of leveraging complementary information in multi-wavelength PPG and curriculum-adversarial strategies for accurate and robust BP estimation.
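Subject-level splitting means that every recording from a given person lands entirely in either the training set or the test set, so the model is never evaluated on a subject it has already seen. A minimal sketch of the idea is shown below; the record layout (`subject_id`, `segment`), the 20% test fraction, and the function name are assumptions for illustration, not details taken from the paper.

```python
# Sketch: split records by subject so no subject appears in both sets.
# The record fields and the test fraction are illustrative assumptions.
import random


def subject_level_split(records, test_fraction=0.2, seed=0):
    """Assign whole subjects, not individual segments, to train or test."""
    subjects = sorted({r["subject_id"] for r in records})
    rng = random.Random(seed)
    rng.shuffle(subjects)
    n_test = max(1, round(len(subjects) * test_fraction))
    test_subjects = set(subjects[:n_test])
    train = [r for r in records if r["subject_id"] not in test_subjects]
    test = [r for r in records if r["subject_id"] in test_subjects]
    return train, test


# Ten hypothetical subjects with three PPG segments each.
records = [
    {"subject_id": s, "segment": k}
    for s in range(10)
    for k in range(3)
]
train, test = subject_level_split(records)
train_ids = {r["subject_id"] for r in train}
test_ids = {r["subject_id"] for r in test}
```

A random split over segments would instead leak each person's physiology into both sets and tend to understate the error on unseen subjects.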

Rapid Adaptation of SpO2 Estimation to Wearable Devices via Transfer Learning on Low-Sampling-Rate PPG

Sep 15, 2025

Abstract: Blood oxygen saturation (SpO2) is a vital marker for healthcare monitoring. Traditional SpO2 estimation methods often rely on complex clinical calibration, making them unsuitable for low-power, wearable applications. In this paper, we propose a transfer learning-based framework for the rapid adaptation of SpO2 estimation to energy-efficient wearable devices using low-sampling-rate (25 Hz) dual-channel photoplethysmography (PPG). We first pretrain a bidirectional Long Short-Term Memory (BiLSTM) model with self-attention on a public clinical dataset, then fine-tune it using data collected from our wearable We-Be band and an FDA-approved reference pulse oximeter. Experimental results show that our approach achieves a mean absolute error (MAE) of 2.967% on the public dataset and 2.624% on the private dataset, significantly outperforming traditional calibration and non-transferred machine learning baselines. Moreover, using 25 Hz PPG reduces power consumption by 40% compared to 100 Hz, excluding baseline draw. Our method also attains an MAE of 3.284% in instantaneous SpO2 prediction, effectively capturing rapid fluctuations. These results demonstrate the rapid adaptation of accurate, low-power SpO2 monitoring on wearable devices without the need for clinical calibration.

Terahertz Integrated Sensing and Communication-Empowered UAVs in 6G: A Transceiver Design Perspective

Feb 07, 2025

Abstract: Due to their high maneuverability, flexible deployment, and low cost, unmanned aerial vehicles (UAVs) are expected to play a pivotal role not only in communication but also in sensing. In particular, by exploiting the ultra-wide bandwidth of terahertz (THz) bands, integrated sensing and communication (ISAC)-empowered UAVs have become a promising technology for 6G space-air-ground integrated networks. In this article, we systematically investigate the key techniques and essential obstacles for THz-ISAC-empowered UAVs from a transceiver design perspective, highlighting the major challenges and key technologies. Specifically, we discuss the THz-ISAC-UAV wireless propagation environment, based on which several channel characteristics for communication and sensing are revealed. We point out the transceiver payload design peculiarities for THz-ISAC-UAV from the perspective of antenna design, radio frequency front-end, and baseband signal processing. To deal with the specificities faced by the payload, we shed light on three key technologies, i.e., hybrid beamforming for ultra-massive MIMO-ISAC, power-efficient THz-ISAC waveform design, and communication and sensing channel state information acquisition, and extensively elaborate on their concepts and key issues. More importantly, future research directions and associated open problems are presented, which may unleash the full potential of THz-ISAC-UAV for 6G wireless networks.

DSTSA-GCN: Advancing Skeleton-Based Gesture Recognition with Semantic-Aware Spatio-Temporal Topology Modeling

Jan 21, 2025

Abstract: Graph convolutional networks (GCNs) have emerged as a powerful tool for skeleton-based action and gesture recognition, thanks to their ability to model spatial and temporal dependencies in skeleton data. However, existing GCN-based methods face critical limitations: (1) they lack effective spatio-temporal topology modeling that captures dynamic variations in skeletal motion, and (2) they struggle to model multiscale structural relationships beyond local joint connectivity. To address these issues, we propose a novel framework called Dynamic Spatial-Temporal Semantic Awareness Graph Convolutional Network (DSTSA-GCN). DSTSA-GCN introduces three key modules: Group Channel-wise Graph Convolution (GC-GC), Group Temporal-wise Graph Convolution (GT-GC), and Multi-Scale Temporal Convolution (MS-TCN). GC-GC and GT-GC operate in parallel to independently model channel-specific and frame-specific correlations, enabling robust topology learning that accounts for temporal variations. Additionally, both modules employ a grouping strategy to adaptively capture multiscale structural relationships. Complementing this, MS-TCN enhances temporal modeling through group-wise temporal convolutions with diverse receptive fields. Extensive experiments demonstrate that DSTSA-GCN significantly improves the topology modeling capabilities of GCNs, achieving state-of-the-art performance on benchmark datasets for gesture and action recognition, including SHREC17 Track, DHG-14/28, NTU-RGB+D, and NTU-RGB+D-120.

DeepSeek-V3 Technical Report

Dec 27, 2024

Abstract: We present DeepSeek-V3, a strong Mixture-of-Experts (MoE) language model with 671B total parameters, of which 37B are activated for each token. To achieve efficient inference and cost-effective training, DeepSeek-V3 adopts Multi-head Latent Attention (MLA) and DeepSeekMoE architectures, which were thoroughly validated in DeepSeek-V2. Furthermore, DeepSeek-V3 pioneers an auxiliary-loss-free strategy for load balancing and sets a multi-token prediction training objective for stronger performance. We pre-train DeepSeek-V3 on 14.8 trillion diverse and high-quality tokens, followed by Supervised Fine-Tuning and Reinforcement Learning stages to fully harness its capabilities. Comprehensive evaluations reveal that DeepSeek-V3 outperforms other open-source models and achieves performance comparable to leading closed-source models. Despite its excellent performance, DeepSeek-V3 requires only 2.788M H800 GPU hours for its full training. In addition, its training process is remarkably stable. Throughout the entire training process, we did not experience any irrecoverable loss spikes or perform any rollbacks. The model checkpoints are available at https://github.com/deepseek-ai/DeepSeek-V3.

GESH-Net: Graph-Enhanced Spherical Harmonic Convolutional Networks for Cortical Surface Registration

Oct 18, 2024

Abstract: Cortical surface registration techniques based on classical methods are now well developed. However, a key issue with classical methods is that, for each pair of images to be registered, the optimal transformation must be searched for in the deformation space with a specific optimization algorithm until the similarity measure converges, which cannot meet the real-time, high-precision requirements of medical image registration. Cortical surface registration based on deep learning models has therefore become a new research direction, but so far there are only a few studies on it. Moreover, although deep learning methods theoretically have stronger representation capabilities, surpassing the most advanced classical methods in registration accuracy and distortion control remains a challenge. To address this challenge, this paper constructs a deep learning model for cortical surface image registration. The specific work is as follows: (1) An unsupervised cortical surface registration network based on a multi-scale cascaded structure is designed, and a convolution method based on spherical harmonic transformation is introduced to register cortical surface data. This solves the problem of scale-inflexibility of spherical feature transformation and optimizes the multi-scale registration process. (2) By integrating the attention mechanism, a graph-enhanced module is introduced into the registration network, using the graph attention module to help the network learn global features of cortical surface data and thereby strengthening its learning ability. The results show that the graph attention module effectively enhances the network's ability to extract global features, and its registration results have significant advantages over other methods.

DySpec: Faster Speculative Decoding with Dynamic Token Tree Structure

Oct 15, 2024

Abstract: While speculative decoding has recently appeared as a promising direction for accelerating the inference of large language models (LLMs), the speedup and scalability are strongly bounded by the token acceptance rate. Prevalent methods usually organize predicted tokens as independent chains or fixed token trees, which fail to generalize to diverse query distributions. In this paper, we propose DySpec, a faster speculative decoding algorithm with a novel dynamic token tree structure. We begin by bridging the draft distribution and acceptance rate from intuitive and empirical clues, and successfully show that the two variables are strongly correlated. Based on this, we employ a greedy strategy to dynamically expand the token tree at run time. Theoretically, we show that our method can achieve optimal results under mild assumptions. Empirically, DySpec yields a higher acceptance rate and speedup than fixed trees. DySpec can drastically improve the throughput and reduce the latency of token generation across various data distributions and model sizes, significantly outperforming strong competitors, including Specinfer and Sequoia. Under a low-temperature setting, DySpec can improve the throughput by up to 9.1× and reduce the latency by up to 9.4× on Llama2-70B. Under a high-temperature setting, DySpec can also improve the throughput by up to 6.21×, despite the increasing difficulty of speculating more than one token per step for the draft model.
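The greedy run-time expansion described above can be pictured with a small priority-queue sketch. This is not the DySpec algorithm itself: it assumes that a candidate's acceptance likelihood is estimated by the product of draft probabilities along its path, and it uses a hypothetical `toy_draft` function in place of a real draft model. The node budget and all names are illustrative.

```python
# Sketch: greedily grow a token tree by always adding the candidate whose
# estimated path probability (product of draft probabilities) is highest.
# Assumptions: toy_draft stands in for a draft model; budget caps tree size.
import heapq


def toy_draft(prefix):
    """Hypothetical draft model: next-token probabilities for a prefix."""
    if not prefix:
        return {"the": 0.6, "a": 0.3, "an": 0.1}
    if prefix[-1] == "the":
        return {"cat": 0.7, "dog": 0.3}
    return {"cat": 0.5, "dog": 0.5}


def build_token_tree(draft, budget):
    """Return up to `budget` nodes as (path, estimated_probability) pairs."""
    tree = []
    heap = []      # max-heap via negated probabilities
    counter = 0    # insertion order breaks ties deterministically

    def push_children(path, path_prob):
        nonlocal counter
        for token, prob in draft(path).items():
            heapq.heappush(heap, (-path_prob * prob, counter, path + (token,)))
            counter += 1

    push_children((), 1.0)
    while heap and len(tree) < budget:
        neg_prob, _, path = heapq.heappop(heap)
        tree.append((path, -neg_prob))
        push_children(path, -neg_prob)  # this node's children become candidates
    return tree


tree = build_token_tree(toy_draft, budget=4)
paths = [path for path, _ in tree]
probs = [prob for _, prob in tree]
```

Because a child's estimated probability never exceeds its parent's, nodes are added in non-increasing order of estimated probability, so the tree grows deep where the draft model is confident and wide where it is not. A fixed tree shape cannot adapt in this way.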

Channel Estimation for Movable-Antenna MIMO Systems Via Tensor Decomposition

Jul 26, 2024

Abstract: In this letter, we investigate the channel estimation problem for MIMO wireless communication systems with movable antennas (MAs) at both the transmitter (Tx) and receiver (Rx). To achieve high channel estimation accuracy with low pilot training overhead, we propose a tensor decomposition-based method for estimating the parameters of multi-path channel components, including their azimuth and elevation angles, as well as complex gain coefficients, thereby reconstructing the wireless channel between any pair of Tx and Rx MA positions in the Tx and Rx regions. First, we introduce a two-stage Tx-Rx successive antenna movement pattern for pilot training, such that the received pilot signals in both stages can be expressed as a third-order tensor. Then, we obtain the factor matrices of the tensor via the canonical polyadic decomposition, and thereby estimate the angle/gain parameters for enabling the channel reconstruction between arbitrary Tx/Rx MA positions. In addition, we analyze the uniqueness condition of the tensor decomposition, which ensures the complete channel reconstruction between the whole Tx and Rx regions based on the channel measurements at only a finite number of Tx/Rx MA positions. Finally, simulation results are presented to evaluate the proposed tensor decomposition-based method as compared to existing methods, in terms of channel estimation accuracy and pilot overhead.
