Lei Cheng

When Bayesian Tensor Completion Meets Multioutput Gaussian Processes: Functional Universality and Rank Learning

Dec 25, 2025

Abstract: Functional tensor decomposition can analyze multi-dimensional data with real-valued indices, paving the way for applications in machine learning and signal processing. A limitation of existing approaches is the assumption that the tensor rank, a critical parameter governing model complexity, is known. However, determining the optimal rank is a non-deterministic polynomial-time hard (NP-hard) task, and there is limited understanding of the expressive power of functional low-rank tensor models for continuous signals. We propose a rank-revealing functional Bayesian tensor completion (RR-FBTC) method. Modeling the latent functions through carefully designed multioutput Gaussian processes, RR-FBTC handles tensors with real-valued indices while enabling automatic tensor rank determination during the inference process. We establish the universal approximation property of the model for continuous multi-dimensional signals, demonstrating its expressive power in a concise format. To learn this model, we employ the variational inference framework and derive an efficient algorithm with closed-form updates. Experiments on both synthetic and real-world datasets demonstrate the effectiveness and superiority of RR-FBTC over state-of-the-art approaches. The code is available at https://github.com/OceanSTARLab/RR-FBTC.

Less Is More: Generating Time Series with LLaMA-Style Autoregression in Simple Factorized Latent Spaces

Nov 07, 2025

Abstract: Generative models for multivariate time series are essential for data augmentation, simulation, and privacy preservation, yet current state-of-the-art diffusion-based approaches are slow and limited to fixed-length windows. We propose FAR-TS, a simple yet effective framework that combines disentangled factorization with an autoregressive Transformer over a discrete, quantized latent space to generate time series. Each time series is decomposed into a data-adaptive basis that captures static cross-channel correlations and temporal coefficients that are vector-quantized into discrete tokens. A LLaMA-style autoregressive Transformer then models these token sequences, enabling fast and controllable generation of sequences with arbitrary length. Owing to its streamlined design, FAR-TS achieves orders-of-magnitude faster generation than Diffusion-TS while preserving cross-channel correlations and an interpretable latent space, enabling high-quality and flexible time series synthesis.
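
The vector-quantization step mentioned above can be illustrated with a minimal sketch. This is not the FAR-TS implementation: the codebook, the coefficient vectors, and the helper names `quantize` and `dequantize` are all hypothetical, and a nearest-neighbor lookup under Euclidean distance is assumed.

```python
import math

def quantize(vectors, codebook):
    """Map each vector to the index of its nearest codebook entry (Euclidean distance)."""
    tokens = []
    for v in vectors:
        distances = [math.dist(v, c) for c in codebook]
        tokens.append(distances.index(min(distances)))
    return tokens

def dequantize(tokens, codebook):
    """Replace each token by the codebook vector it indexes."""
    return [codebook[t] for t in tokens]

# Hypothetical 2-D codebook and temporal coefficients, chosen only for illustration.
codebook = [(0.0, 0.0), (1.0, 1.0), (-1.0, 2.0)]
coefficients = [(0.1, -0.2), (0.9, 1.2), (-0.8, 1.7), (0.6, 0.7)]

tokens = quantize(coefficients, codebook)
reconstructed = dequantize(tokens, codebook)
print(tokens)  # [0, 1, 2, 1]
```

The resulting integer sequence is the kind of discrete token stream that an autoregressive model could be trained on; in practice the codebook would be learned rather than fixed by hand.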

Radar-Camera Fused Multi-Object Tracking: Online Calibration and Common Feature

Oct 23, 2025

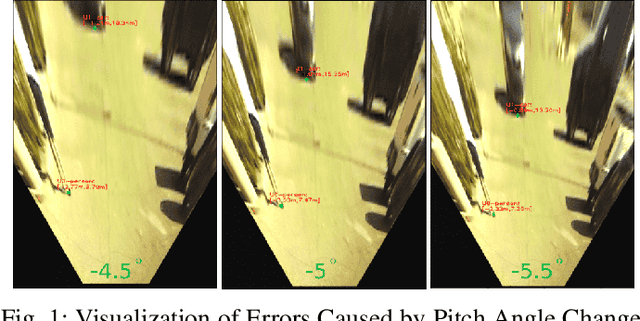

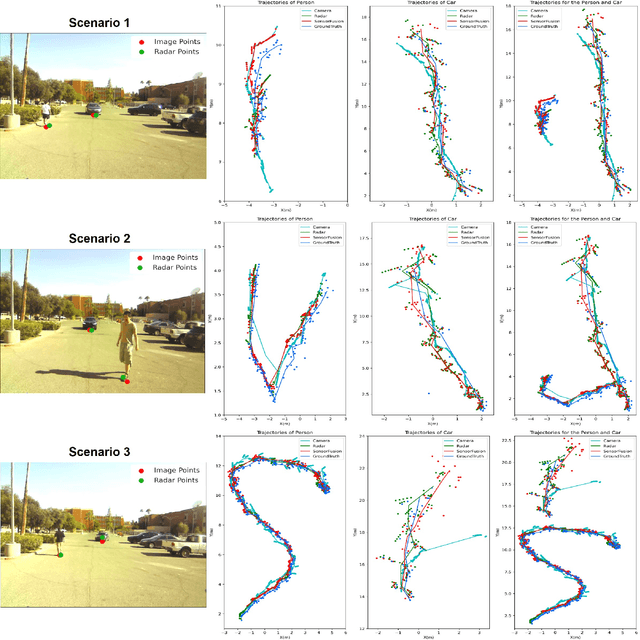

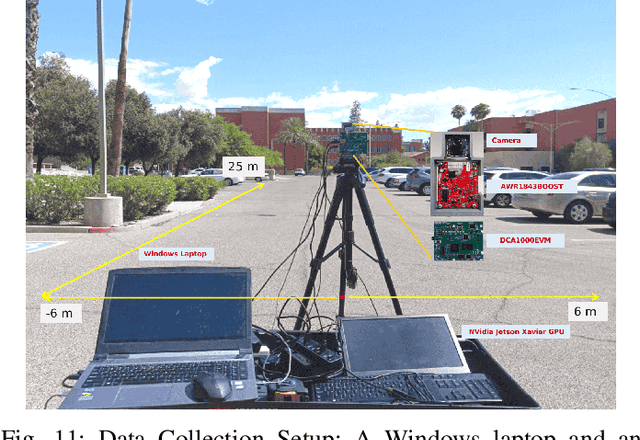

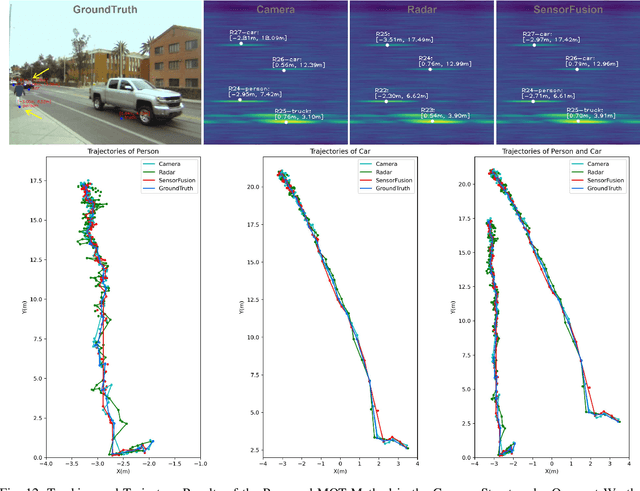

Abstract: This paper presents a Multi-Object Tracking (MOT) framework that fuses radar and camera data to enhance tracking efficiency while minimizing manual interventions. Contrary to many studies that underutilize radar and assign it a supplementary role, despite its capability to provide accurate range/depth information of targets in a 3D world coordinate system, our approach positions radar in a crucial role. Meanwhile, this paper utilizes common features to enable online calibration to autonomously associate detections from radar and camera. The main contributions of this work include: (1) the development of a radar-camera fusion MOT framework that exploits online radar-camera calibration to simplify the integration of detection results from these two sensors, (2) the utilization of common features between radar and camera data to accurately derive real-world positions of detected objects, and (3) the adoption of feature matching and category-consistency checking to surpass the limitations of mere position matching in enhancing sensor association accuracy. To the best of our knowledge, we are the first to investigate the integration of radar-camera common features and their use in online calibration for achieving MOT. The efficacy of our framework is demonstrated by its ability to streamline the radar-camera mapping process and improve tracking precision, as evidenced by real-world experiments conducted in both controlled environments and actual traffic scenarios. Code is available at https://github.com/radar-lab/Radar_Camera_MOT

A Learnable Fully Interacted Two-Tower Model for Pre-Ranking System

Sep 16, 2025

Abstract: Pre-ranking plays a crucial role in large-scale recommender systems by significantly improving efficiency and scalability within the constraints of providing high-quality candidate sets in real time. The two-tower model is widely used in pre-ranking systems because its decoupled architecture strikes a good balance between efficiency and effectiveness: it processes user and item inputs independently before calculating their interaction (e.g., dot product or similarity measure). However, this independence also leads to a lack of information interaction between the two towers, which reduces effectiveness. In this paper, a novel architecture named learnable Fully Interacted Two-tower Model (FIT) is proposed, which enables rich information interactions while ensuring inference efficiency. FIT mainly consists of two parts: Meta Query Module (MQM) and Lightweight Similarity Scorer (LSS). Specifically, MQM introduces a learnable item meta matrix to achieve expressive early interaction between user and item features. Moreover, LSS is designed to further obtain effective late interaction between the user and item towers. Finally, experimental results on several public datasets show that our proposed FIT significantly outperforms the state-of-the-art baseline pre-ranking models.
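
For readers unfamiliar with the baseline that FIT builds on, the following is a minimal sketch of plain two-tower scoring with a dot product. It does not implement MQM or LSS. The single-layer towers, the weight matrices `USER_W` and `ITEM_W`, and all feature values are assumptions made up for illustration.

```python
def tower(features, weights):
    """One linear layer followed by ReLU, standing in for a full tower network."""
    return [max(0.0, sum(w * x for w, x in zip(row, features))) for row in weights]

def dot(a, b):
    """Dot-product interaction between a user embedding and an item embedding."""
    return sum(x * y for x, y in zip(a, b))

# Hypothetical weights: the user tower maps 3 features to 2 dims, the item tower 2 to 2.
USER_W = [[1.0, 0.0, 0.5], [0.0, 1.0, -0.5]]
ITEM_W = [[0.5, 0.5], [1.0, -1.0]]

# Item embeddings depend only on item features, so they can be computed ahead of time.
item_features = {"a": [1.0, 1.0], "b": [2.0, 0.0], "c": [0.0, 1.0]}
item_embeddings = {name: tower(f, ITEM_W) for name, f in item_features.items()}

# At request time only the user tower runs, followed by one dot product per candidate.
user_embedding = tower([1.0, 2.0, 2.0], USER_W)
scores = {name: dot(user_embedding, emb) for name, emb in item_embeddings.items()}
ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
print(ranked)  # [('b', 4.0), ('a', 2.0), ('c', 1.0)]
```

Because the two towers never see each other's inputs until the final dot product, item embeddings can be cached, which is the efficiency benefit, and user and item features cannot interact earlier, which is the limitation the abstract describes.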

Deep Tensor Learning for Reliable Channel Charting from Incomplete and Noisy Measurements

Sep 16, 2025

Abstract: Channel charting has emerged as a powerful tool for user equipment localization and wireless environment sensing. Its efficacy lies in mapping high-dimensional channel data into low-dimensional features that preserve the relative similarities of the original data. However, existing channel charting methods are largely developed using simulated or indoor measurements, often assuming clean and complete channel data across all frequency bands. In contrast, real-world channels collected from base stations are typically incomplete due to frequency hopping and are significantly noisy, particularly at cell edges. These challenging conditions greatly degrade the performance of current methods. To address this, we propose a deep tensor learning method that leverages the inherent tensor structure of wireless channels to effectively extract informative yet low-dimensional features (i.e., channel charts) from noisy and incomplete measurements. Experimental results demonstrate the reliability and effectiveness of the proposed approach in these challenging scenarios.

FieldFormer: Self-supervised Reconstruction of Physical Fields via Tensor Attention Prior

Jun 13, 2025

Abstract: Reconstructing physical field tensors from in situ observations, such as radio maps and ocean sound speed fields, is crucial for enabling environment-aware decision making in various applications, e.g., wireless communications and underwater acoustics. Field data reconstruction is often challenging due to the limited and noisy nature of the observations, necessitating the incorporation of prior information to aid the reconstruction process. Deep neural network-based data-driven structural constraints (e.g., "deeply learned priors") have shown promising performance. However, this family of techniques faces challenges such as model mismatches between training and testing phases. This work introduces FieldFormer, a self-supervised neural prior learned solely from the limited in situ observations without the need for offline training. Specifically, the proposed framework starts with modeling the fields of interest using the tensor Tucker model of a high multilinear rank, which ensures a universal approximation property for all fields. Subsequently, an attention mechanism is incorporated to learn the sparsity pattern that underlies the core tensor in order to reduce the solution space. In this way, a "complexity-adaptive" neural representation, grounded in the Tucker decomposition, is obtained that can flexibly represent various types of fields. A theoretical analysis is provided to support the recoverability of the proposed design. Moreover, extensive experiments, using various physical field tensors, demonstrate the superiority of the proposed approach compared to state-of-the-art baselines.
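
For reference, the standard Tucker model that the abstract builds on can be written as follows for a third-order tensor. The symbols are notation assumed here, not taken from the paper: $\mathcal{G}$ is the core tensor, $\mathbf{U}^{(n)}$ are the factor matrices, and $(R_1, R_2, R_3)$ is the multilinear rank.

```latex
\mathcal{X} = \mathcal{G} \times_1 \mathbf{U}^{(1)} \times_2 \mathbf{U}^{(2)} \times_3 \mathbf{U}^{(3)},
\qquad
x_{ijk} = \sum_{p=1}^{R_1} \sum_{q=1}^{R_2} \sum_{r=1}^{R_3}
          g_{pqr}\, u^{(1)}_{ip}\, u^{(2)}_{jq}\, u^{(3)}_{kr}.
```

A sparsity pattern on the core means that many entries $g_{pqr}$ are zero, so only a subset of the terms in the triple sum contributes, which shrinks the solution space even when $(R_1, R_2, R_3)$ is large.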

Generating Full-field Evolution of Physical Dynamics from Irregular Sparse Observations

May 14, 2025

Abstract: Modeling and reconstructing multidimensional physical dynamics from sparse and off-grid observations presents a fundamental challenge in scientific research. Recently, diffusion-based generative modeling has shown promising potential for physical simulation. However, current approaches typically operate on on-grid data with preset spatiotemporal resolution and struggle with the sparsely observed and continuous nature of real-world physical dynamics. To fill these gaps, we present SDIFT, Sequential DIffusion in Functional Tucker space, a novel framework that generates full-field evolution of physical dynamics from irregular sparse observations. SDIFT leverages the functional Tucker model as the latent space representer with proven universal approximation property, and represents observations as latent functions and Tucker core sequences. We then construct a sequential diffusion model with temporally augmented UNet in the functional Tucker space, denoising noise drawn from a Gaussian process to generate the sequence of core tensors. At the posterior sampling stage, we propose a Message-Passing Posterior Sampling mechanism, enabling conditional generation of the entire sequence guided by observations at limited time steps. We validate SDIFT on three physical systems spanning astronomical (supernova explosions, light-year scale), environmental (ocean sound speed fields, kilometer scale), and molecular (organic liquid, millimeter scale) domains, demonstrating significant improvements in both reconstruction accuracy and computational efficiency compared to state-of-the-art approaches.

PRISM: Probabilistic Representation for Integrated Shape Modeling and Generation

Apr 06, 2025

Abstract: Despite the advancements in 3D full-shape generation, accurately modeling complex geometries and semantics of shape parts remains a significant challenge, particularly for shapes with varying numbers of parts. Current methods struggle to effectively integrate the contextual and structural information of 3D shapes into their generative processes. We address these limitations with PRISM, a novel compositional approach for 3D shape generation that integrates categorical diffusion models with Statistical Shape Models (SSM) and Gaussian Mixture Models (GMM). Our method employs compositional SSMs to capture part-level geometric variations and uses GMM to represent part semantics in a continuous space. This integration enables both high fidelity and diversity in generated shapes while preserving structural coherence. Through extensive experiments on shape generation and manipulation tasks, we demonstrate that our approach significantly outperforms previous methods in both quality and controllability of part-level operations. Our code will be made publicly available.

CalibRefine: Deep Learning-Based Online Automatic Targetless LiDAR-Camera Calibration with Iterative and Attention-Driven Post-Refinement

Feb 26, 2025

Abstract: Accurate multi-sensor calibration is essential for deploying robust perception systems in applications such as autonomous driving, robotics, and intelligent transportation. Existing LiDAR-camera calibration methods often rely on manually placed targets, preliminary parameter estimates, or intensive data preprocessing, limiting their scalability and adaptability in real-world settings. In this work, we propose a fully automatic, targetless, and online calibration framework, CalibRefine, which directly processes raw LiDAR point clouds and camera images. Our approach is divided into four stages: (1) a Common Feature Discriminator that trains on automatically detected objects (using relative positions, appearance embeddings, and semantic classes) to generate reliable LiDAR-camera correspondences, (2) a coarse homography-based calibration, (3) an iterative refinement to incrementally improve alignment as additional data frames become available, and (4) an attention-based refinement that addresses non-planar distortions by leveraging a Vision Transformer and cross-attention mechanisms. Through extensive experiments on two urban traffic datasets, we show that CalibRefine delivers high-precision calibration results with minimal human involvement, outperforming state-of-the-art targetless methods and remaining competitive with, or surpassing, manually tuned baselines. Our findings highlight how robust object-level feature matching, together with iterative and self-supervised attention-based adjustments, enables consistent sensor fusion in complex, real-world conditions without requiring ground-truth calibration matrices or elaborate data preprocessing.
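
As background for stage (2), the sketch below shows how a planar homography maps a 2D point from one plane to another using homogeneous coordinates. It is a generic illustration rather than the CalibRefine pipeline: the function name `apply_homography` and both example matrices are hypothetical, and how the paper estimates its homography is not shown.

```python
def apply_homography(H, point):
    """Map a 2-D point through a 3x3 homography H using homogeneous coordinates."""
    x, y = point
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if w == 0:
        raise ValueError("point maps to infinity under this homography")
    # Dividing by w converts back from homogeneous to pixel coordinates.
    return (u / w, v / w)

# Hypothetical affine case: scale by 2, then shift by (10, 20).
H_affine = [[2.0, 0.0, 10.0],
            [0.0, 2.0, 20.0],
            [0.0, 0.0, 1.0]]
print(apply_homography(H_affine, (3.0, 4.0)))  # (16.0, 28.0)

# Hypothetical projective case: the last row makes the scale depend on x.
H_projective = [[1.0, 0.0, 0.0],
                [0.0, 1.0, 0.0],
                [0.5, 0.0, 1.0]]
print(apply_homography(H_projective, (2.0, 4.0)))  # (1.0, 2.0)
```

A single homography is exact only for points lying on a common plane, which is consistent with the abstract's later attention-based stage being aimed at non-planar distortions.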

DOGR: Leveraging Document-Oriented Contrastive Learning in Generative Retrieval

Feb 12, 2025

Abstract: Generative retrieval constitutes an innovative approach in information retrieval, leveraging generative language models (LM) to generate a ranked list of document identifiers (docid) for a given query. It simplifies the retrieval pipeline by replacing the large external index with model parameters. However, existing works learn only the relationship between queries and document identifiers, which cannot directly represent the relevance between queries and documents. To address this problem, we propose a novel and general generative retrieval framework, namely Leveraging Document-Oriented Contrastive Learning in Generative Retrieval (DOGR), which leverages contrastive learning to improve generative retrieval tasks. It adopts a two-stage learning strategy that captures the relationship between queries and documents comprehensively through direct interactions. Furthermore, negative sampling methods and corresponding contrastive learning objectives are implemented to enhance the learning of semantic representations, thereby promoting a thorough comprehension of the relationship between queries and documents. Experimental results demonstrate that DOGR achieves state-of-the-art performance compared to existing generative retrieval methods on two public benchmark datasets. Further experiments show that our framework is generally effective for common identifier construction techniques.
