Qiang Wu

Middle Tennessee State University

Expression is enough: Improving traffic signal control with advanced traffic state representation

Dec 19, 2021

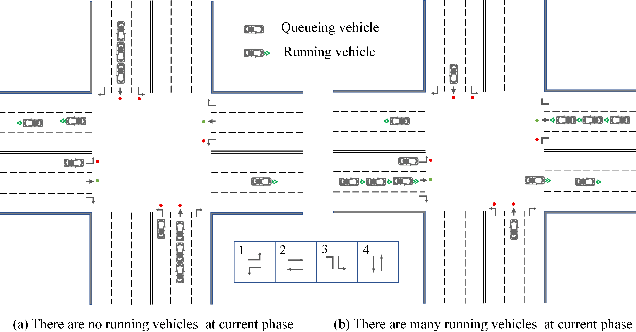

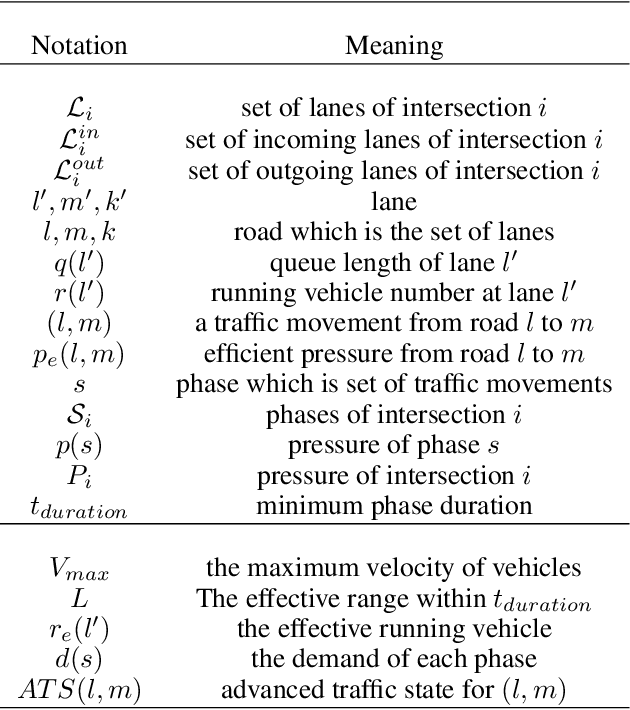

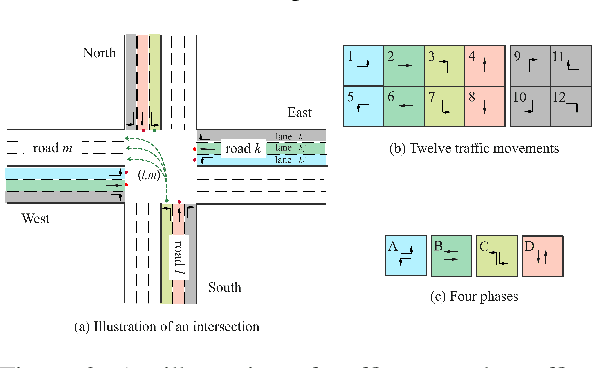

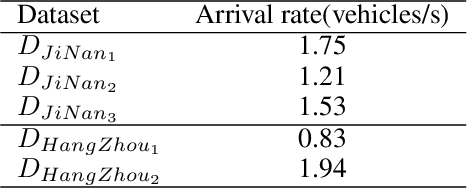

Abstract: Recently, finding fundamental properties for traffic state representation has become more critical than designing complex algorithms for traffic signal control (TSC). In this paper, we (1) present a novel, flexible, and straightforward method, advanced max pressure (Advanced-MP), which takes both running and queueing vehicles into consideration to decide whether to change the current phase; (2) design a novel traffic movement representation, namely advanced traffic state (ATS), from the efficient pressure and the effective running vehicles of Advanced-MP; and (3) develop an RL-based algorithm template, Advanced-XLight, by combining ATS with current RL approaches, and generate two RL algorithms, "Advanced-MPLight" and "Advanced-CoLight". Comprehensive experiments on multiple real-world datasets show that (1) Advanced-MP outperforms baseline methods and is efficient and reliable for deployment; and (2) Advanced-MPLight and Advanced-CoLight achieve new state-of-the-art results. Our code is released on GitHub.

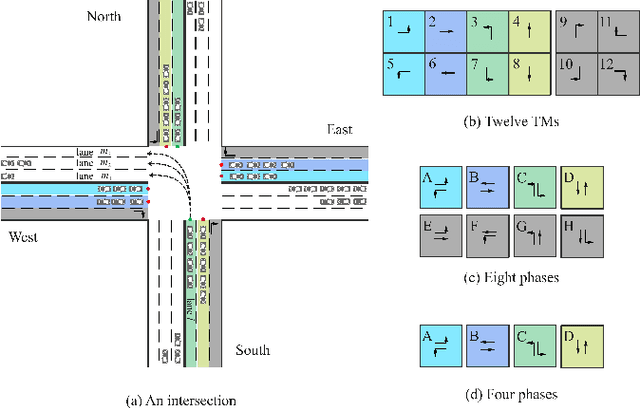

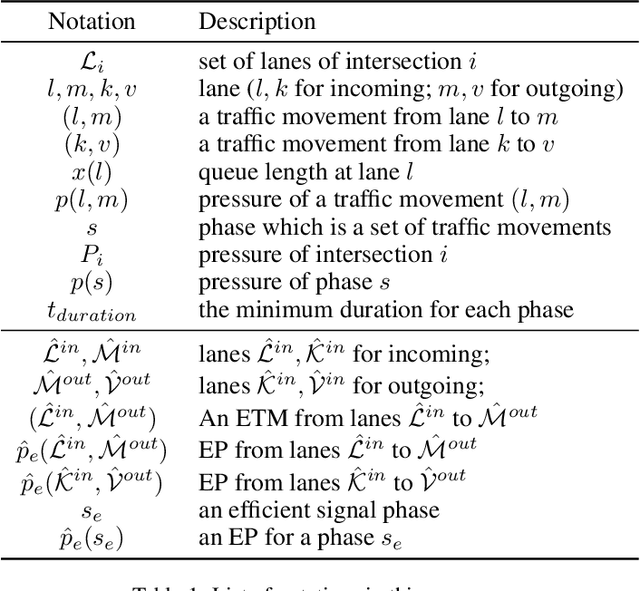

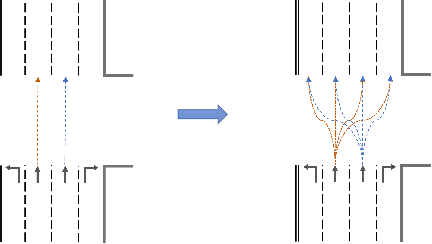

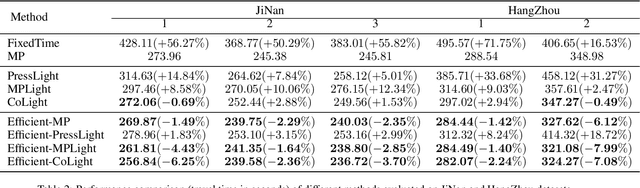

Efficient Pressure: Improving efficiency for signalized intersections

Dec 04, 2021

Abstract: Since conventional approaches cannot adapt to dynamic traffic conditions, reinforcement learning (RL) has attracted more attention as a way to solve the traffic signal control (TSC) problem. However, existing RL-based methods are rarely deployed because they are neither cost-effective in terms of computing resources nor more robust than traditional approaches. This raises a critical research question: how can an adaptive TSC controller be constructed with less training and reduced complexity using an RL-based approach? To address this question, in this paper we (1) specify the traffic movement representation as a simple but efficient pressure of vehicle queues in a traffic network, namely efficient pressure (EP); (2) build a traffic signal settings protocol for TSC, including phase duration, signal phase number, and EP; (3) design a TSC approach based on the traditional max pressure (MP) approach, namely efficient max pressure (Efficient-MP), which uses EP to capture the traffic state; and (4) develop a general RL-based TSC algorithm template, Efficient-XLight, under EP. Through comprehensive experiments on multiple real-world datasets under our traffic signal settings protocol, we demonstrate that efficient pressure is complementary to both traditional and RL-based modeling for designing better TSC methods. Our code is released on GitHub.
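
As a rough illustration of the traditional max pressure idea that the abstract builds on, the sketch below picks the signal phase whose traffic movements have the largest total pressure. It assumes the textbook definition (queue on the incoming lane minus queue on the outgoing lane); the paper's exact EP definition may differ, and the lane names, the `Movement` type, and all function names are hypothetical.

```python
from typing import Dict, List, Tuple

# A traffic movement is modelled as an (incoming_lane, outgoing_lane) pair.
# This is an assumption made for the sketch, not the paper's data model.
Movement = Tuple[str, str]


def movement_pressure(queue: Dict[str, int], movement: Movement) -> int:
    """Queue length upstream minus queue length downstream for one movement."""
    incoming, outgoing = movement
    return queue.get(incoming, 0) - queue.get(outgoing, 0)


def phase_pressure(queue: Dict[str, int], movements: List[Movement]) -> int:
    """Total pressure of all movements that a phase gives the green light to."""
    return sum(movement_pressure(queue, m) for m in movements)


def max_pressure_phase(queue: Dict[str, int],
                       phases: Dict[str, List[Movement]]) -> str:
    """Return the name of the phase with the largest total pressure."""
    return max(phases, key=lambda name: phase_pressure(queue, phases[name]))


# Toy intersection: north-south traffic is queued, east-west is nearly empty.
queue = {"N_in": 5, "S_in": 3, "E_in": 1, "W_in": 2,
         "N_out": 0, "S_out": 1, "E_out": 4, "W_out": 0}
phases = {
    "NS": [("N_in", "S_out"), ("S_in", "N_out")],
    "EW": [("E_in", "W_out"), ("W_in", "E_out")],
}
chosen = max_pressure_phase(queue, phases)
```

In this toy state the north-south phase has the higher pressure, so a controller of this kind would serve it next. No training is involved, which is one reason pressure-based rules are cheap to deploy.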

PTQ4ViT: Post-Training Quantization Framework for Vision Transformers

Nov 24, 2021

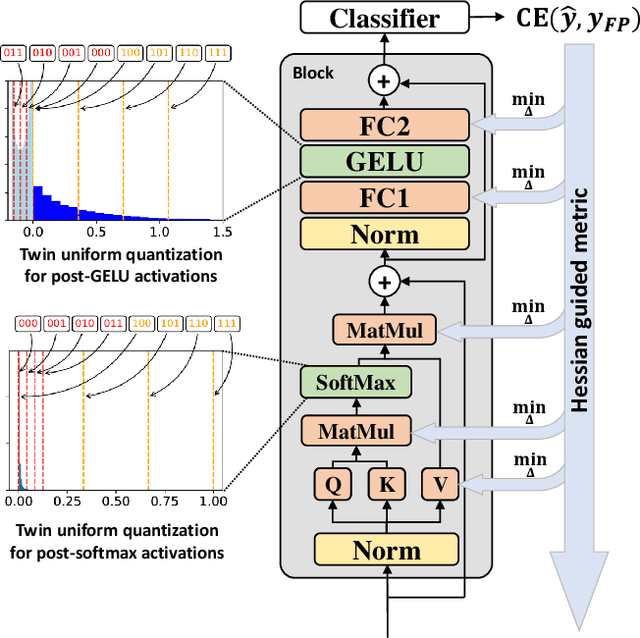

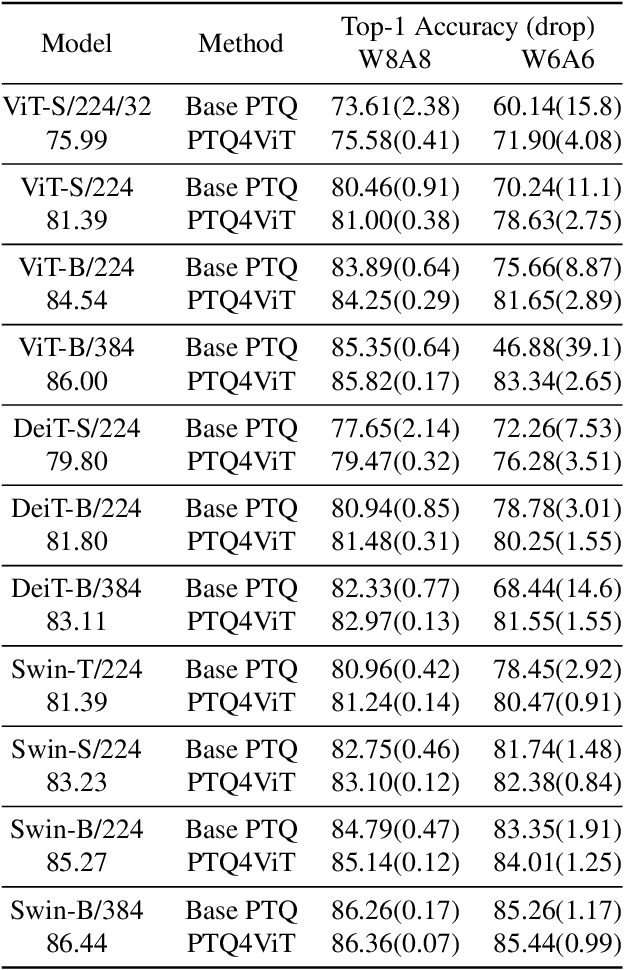

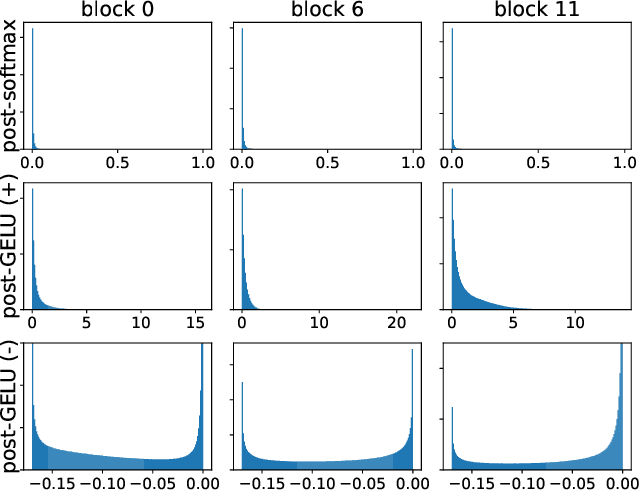

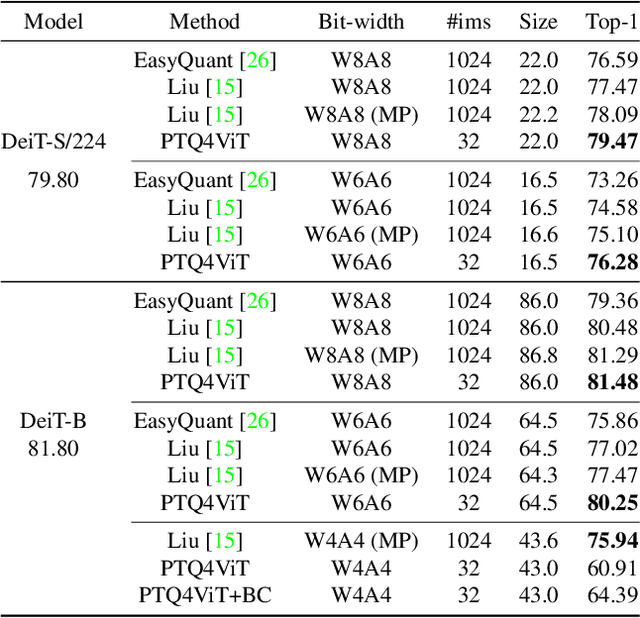

Abstract: Quantization is one of the most effective methods for compressing neural networks and has achieved great success on convolutional neural networks (CNNs). Recently, vision transformers have demonstrated great potential in computer vision. However, previous post-training quantization methods do not perform well on vision transformers, resulting in an accuracy drop of more than 1% even with 8-bit quantization. We therefore analyze the problems of quantization on vision transformers. We observe that the distributions of activation values after the softmax and GELU functions are quite different from the Gaussian distribution. We also observe that common quantization metrics, such as MSE and cosine distance, are inaccurate for determining the optimal scaling factor. In this paper, we propose the twin uniform quantization method to reduce the quantization error on these activation values. We also propose a Hessian-guided metric to evaluate different scaling factors, which improves the accuracy of calibration at a small cost. To enable fast quantization of vision transformers, we develop an efficient framework, PTQ4ViT. Experiments show that the quantized vision transformers achieve near-lossless prediction accuracy (less than 0.5% drop with 8-bit quantization) on the ImageNet classification task.
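
To make the twin uniform idea more concrete, here is a minimal sketch for values in [0, 1], such as softmax outputs. It assumes one bit selects between two uniform ranges: a fine-grained one for small values and a coarse one covering [0, 1]. The function names and the `small_scale` parameter are hypothetical, and the Hessian-guided search for scaling factors described in the abstract is not shown.

```python
def uniform_quantize(x: float, scale: float, levels: int) -> float:
    """Round x to the nearest of `levels` uniform steps of size `scale`."""
    q = min(max(round(x / scale), 0), levels - 1)
    return q * scale


def twin_uniform_quantize(x: float, bits: int, small_scale: float) -> float:
    """Quantize x in [0, 1] with two uniform ranges sharing `bits` bits.

    One bit is assumed to flag the range, so each range has 2**(bits - 1)
    levels. Small values use `small_scale`; the rest use a coarse step that
    spans [0, 1].
    """
    levels = 2 ** (bits - 1)
    large_scale = 1.0 / levels
    if x < levels * small_scale:
        return uniform_quantize(x, small_scale, levels)
    return uniform_quantize(x, large_scale, levels)


# Compare against plain 8-bit uniform quantization on [0, 1].
small_value = 0.001
twin_error = abs(twin_uniform_quantize(small_value, 8, 1 / 4096) - small_value)
plain_error = abs(uniform_quantize(small_value, 1 / 256, 256) - small_value)
```

With these illustrative settings, the small value is rounded to zero by the plain quantizer but is preserved closely by the twin scheme, at the price of a coarser step for large values.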

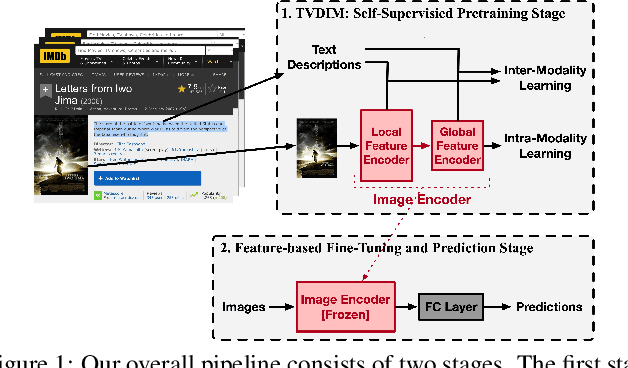

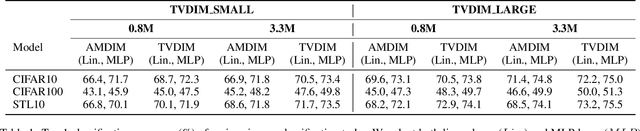

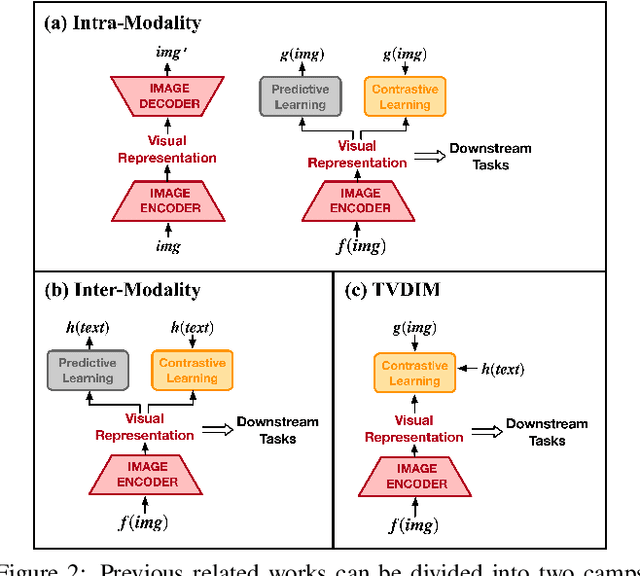

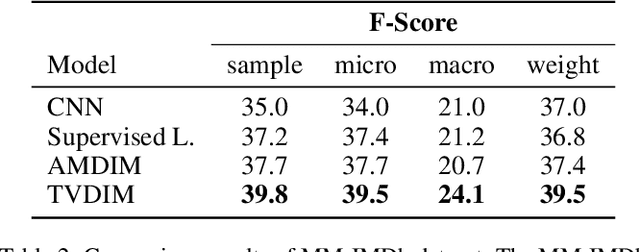

TVDIM: Enhancing Image Self-Supervised Pretraining via Noisy Text Data

Jun 13, 2021

Abstract: Among the ubiquitous multimodal data in the real world, text is the modality generated by humans, while images reflect the physical world faithfully. In a visual understanding application, machines are expected to understand images as humans do. Inspired by this, we propose a novel self-supervised learning method, named Text-enhanced Visual Deep InfoMax (TVDIM), to learn better visual representations by fully utilizing naturally existing multimodal data. The core idea of our self-supervised learning is to maximize, to a rational degree, the mutual information between features extracted from multiple views of a shared context. Unlike previous methods, which only consider multiple views from a single modality, our work produces multiple views from different modalities and jointly optimizes the mutual information for both intra-modality and inter-modality feature pairs. Considering the information gap between inter-modality feature pairs caused by data noise, we adopt ranking-based contrastive learning to optimize the mutual information. During evaluation, we directly use the pre-trained visual representations to complete various image classification tasks. Experimental results show that TVDIM significantly outperforms previous visual self-supervised methods when processing the same set of images.

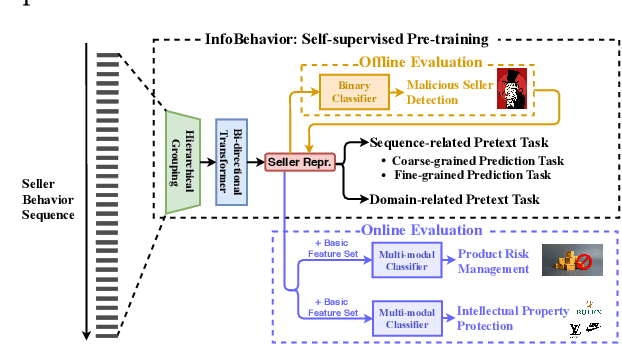

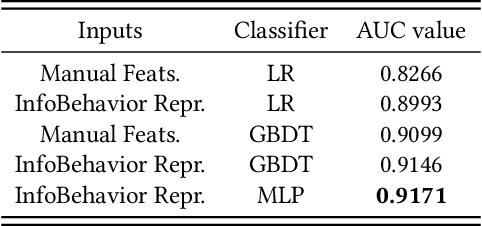

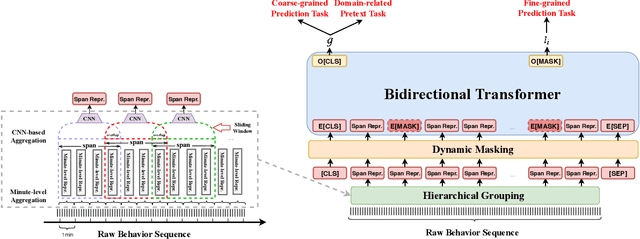

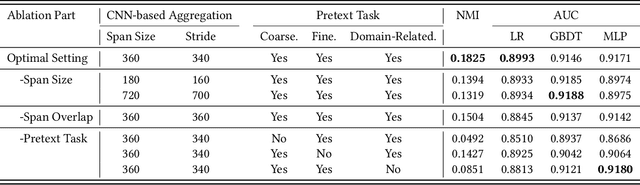

InfoBehavior: Self-supervised Representation Learning for Ultra-long Behavior Sequence via Hierarchical Grouping

Jun 13, 2021

Abstract: E-commerce companies have to deal with abnormal sellers who sell potentially risky products. Typically, the risk can be identified by jointly considering product content (e.g., title and image) and seller behavior. This work focuses on behavior feature extraction, as behavior sequences can provide valuable clues for risk discovery by reflecting the sellers' operation habits. Traditional feature extraction techniques depend heavily on domain experts and adapt poorly to new tasks. In this paper, we propose a self-supervised method, InfoBehavior, to automatically extract meaningful representations from ultra-long raw behavior sequences, replacing the costly feature selection procedure. InfoBehavior uses a bidirectional Transformer as the feature encoder because of its excellent capability in modeling long-term dependencies. However, this is intractable for commodity GPUs because the time and memory required by the Transformer grow quadratically with sequence length. We therefore propose a hierarchical grouping strategy that aggregates ultra-long raw behavior sequences into high-level embedding sequences of processable length. Moreover, we introduce two types of pretext tasks. The sequence-related pretext task defines a contrastive training objective to correctly select the masked-out coarse-grained or fine-grained behavior sequences against other "distractor" behavior sequences; the domain-related pretext task defines a classification training objective to correctly predict domain-specific statistics of anomalous behavior. We show that behavior representations from the pre-trained InfoBehavior can be used directly, or integrated with features from other side information, to support a wide range of downstream tasks. Experimental results demonstrate that InfoBehavior significantly improves the performance of Product Risk Management and Intellectual Property Protection.
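
The effect of grouping on sequence length can be sketched as follows. This is only an illustration: it swaps in simple mean pooling over fixed-size groups, whereas the abstract does not say how InfoBehavior aggregates each group, and `group_sequence`, `mean_pool`, and `attention_cost` are hypothetical names.

```python
from typing import List

Vector = List[float]


def mean_pool(vectors: List[Vector]) -> Vector:
    """Average a non-empty list of equal-length vectors element-wise."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def group_sequence(sequence: List[Vector], group_size: int) -> List[Vector]:
    """Collapse consecutive chunks of `group_size` items into one embedding.

    The last chunk may be shorter than `group_size`.
    """
    if group_size <= 0:
        raise ValueError("group_size must be positive")
    return [mean_pool(sequence[start:start + group_size])
            for start in range(0, len(sequence), group_size)]


def attention_cost(length: int) -> int:
    """Pairwise-interaction count of full self-attention (quadratic)."""
    return length * length


# Ten toy behavior embeddings grouped four at a time.
raw = [[float(i), 1.0] for i in range(10)]
grouped = group_sequence(raw, 4)
```

Because self-attention cost is quadratic in length, shrinking a sequence by a factor of `group_size` reduces the pairwise cost by roughly `group_size` squared, which is what makes ultra-long sequences tractable on commodity GPUs.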

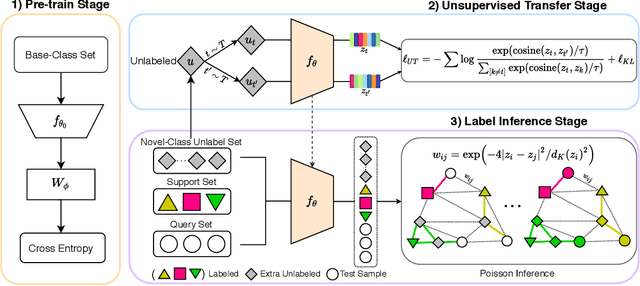

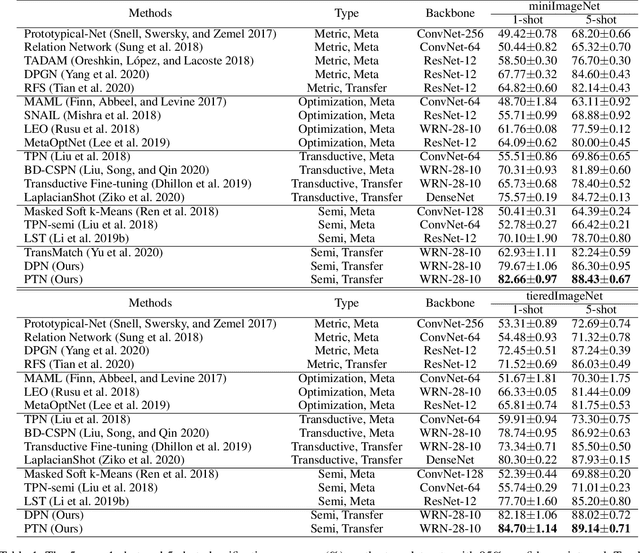

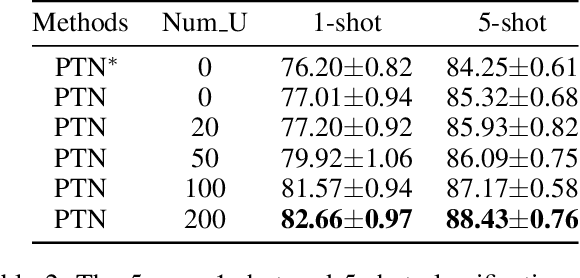

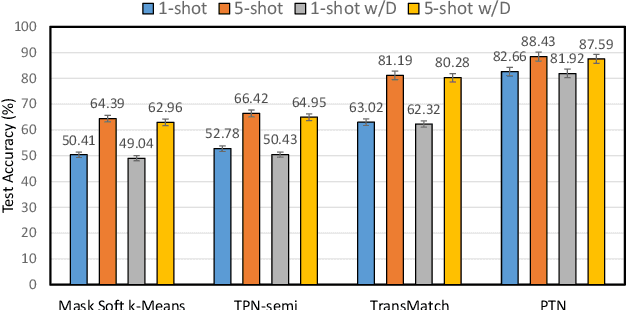

PTN: A Poisson Transfer Network for Semi-supervised Few-shot Learning

Dec 20, 2020

Abstract: The challenge in semi-supervised few-shot learning (SSFSL) is to maximize the value of the extra unlabeled data to boost the few-shot learner. In this paper, we propose a Poisson Transfer Network (PTN) to mine the unlabeled information for SSFSL from two aspects. First, the Poisson Merriman-Bence-Osher (MBO) model builds a bridge for communication between labeled and unlabeled examples. This model serves as a more stable and informative classifier than traditional graph-based SSFSL methods in the message-passing process of the labels. Second, the extra unlabeled samples are employed to transfer knowledge from base classes to novel classes through contrastive learning. Specifically, we pull the augmented positive pairs close while pushing the negative ones apart. Our contrastive transfer scheme implicitly learns the novel-class embeddings to alleviate over-fitting on the few labeled data, and thus mitigates the degeneration of embedding generality in novel classes. Extensive experiments indicate that PTN outperforms state-of-the-art few-shot and SSFSL models on the miniImageNet and tieredImageNet benchmark datasets.

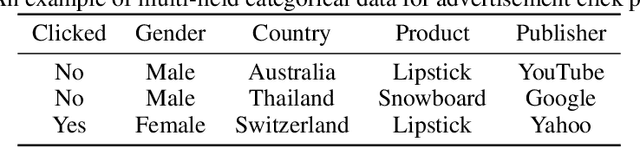

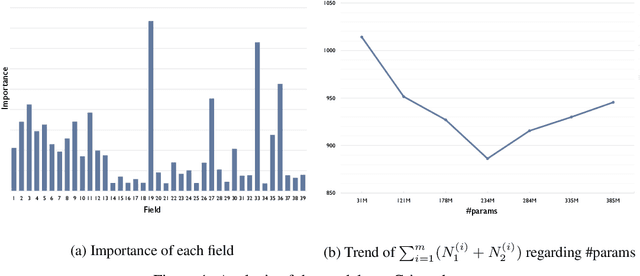

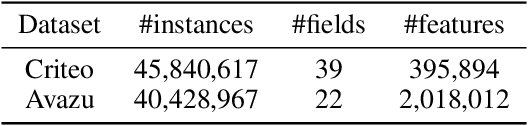

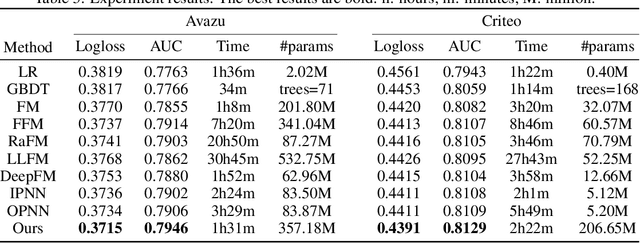

Field-wise Learning for Multi-field Categorical Data

Dec 01, 2020

Abstract: We propose a new method for learning with multi-field categorical data. Multi-field categorical data are usually collected over many heterogeneous groups, and these groups can be reflected in the categories under a field. Existing methods try to learn a universal model that fits all data, which is challenging and inevitably results in a complex model. In contrast, we propose a field-wise learning method that leverages the natural structure of the data to learn simple yet efficient one-to-one field-focused models with appropriate constraints. In doing so, the models can be fitted to each category and can thus better capture the underlying differences in the data. We present a model that uses linear models with variance and low-rank constraints to help it generalize better and to reduce the number of parameters. The model is also interpretable in a field-wise manner. As the dimensionality of multi-field categorical data can be very high, the models applied to such data are mostly over-parameterized. Our theoretical analysis can potentially explain the effect of over-parameterization on the generalization of our model. It also supports the variance constraints in the learning objective. Experimental results on two large-scale datasets show the superior performance of our model, the trend of the generalization error bound, and the interpretability of the learning outcomes. Our code is available at https://github.com/lzb5600/Field-wise-Learning.

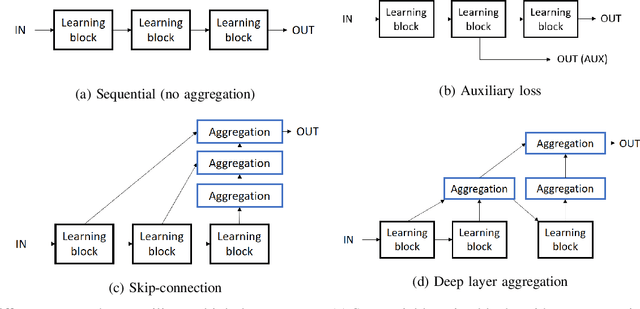

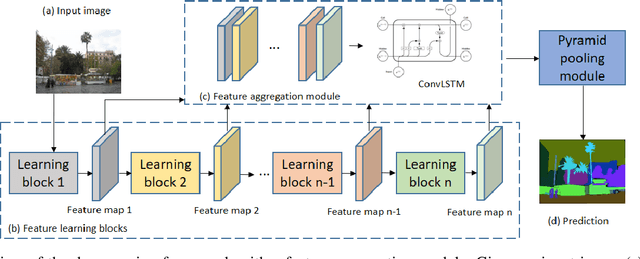

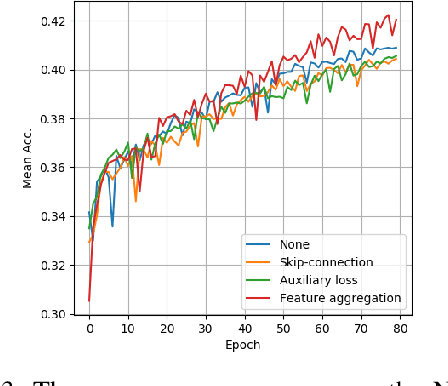

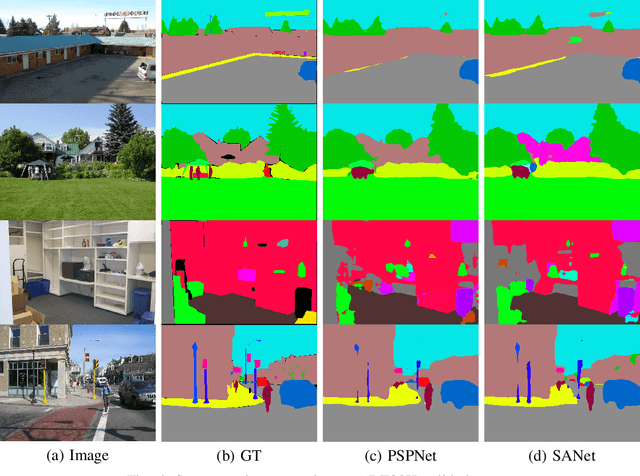

Multi-layer Feature Aggregation for Deep Scene Parsing Models

Nov 04, 2020

Abstract: Scene parsing from images is a fundamental yet challenging problem in visual content understanding. In this dense prediction task, the parsing model assigns every pixel a categorical label, which requires contextual information from adjacent image patches. The challenge for this learning task is therefore to simultaneously describe the geometric and semantic properties of objects or a scene. In this paper, we explore the effective use of multi-layer feature outputs of deep parsing networks for spatial-semantic consistency. We design a novel feature aggregation module that generates an appropriate global representation prior to improve the discriminative power of features. The proposed module can automatically select intermediate visual features to correlate spatial and semantic information. At the same time, the multiple skip connections provide strong supervision, making the deep parsing network easy to train. Extensive experiments on four public scene parsing datasets show that a deep parsing network equipped with the proposed feature aggregation module achieves very promising results.

Dual Attention on Pyramid Feature Maps for Image Captioning

Nov 02, 2020

Abstract: Generating natural sentences from images is a fundamental learning task for visual-semantic understanding in multimedia. In this paper, we propose to apply dual attention on pyramid image feature maps to fully explore the visual-semantic correlations and improve the quality of generated sentences. Specifically, by fully considering the contextual information provided by the hidden state of the RNN controller, the pyramid attention can better localize the visually indicative and semantically consistent regions in images. In addition, the contextual information can help re-calibrate the importance of feature components by learning the channel-wise dependencies, improving the discriminative power of visual features for better content description. We conducted comprehensive experiments on three well-known datasets, Flickr8K, Flickr30K, and MS COCO, and achieved impressive results in generating descriptive and smooth natural sentences from images. Using either convolutional visual features or more informative bottom-up attention features, our composite captioning model achieves very promising performance in a single-model mode. The proposed pyramid attention and dual attention methods are highly modular and can be inserted into various image captioning modules to further improve performance.

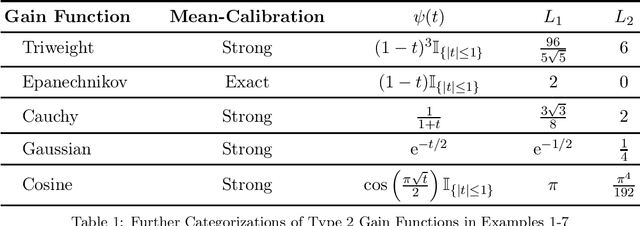

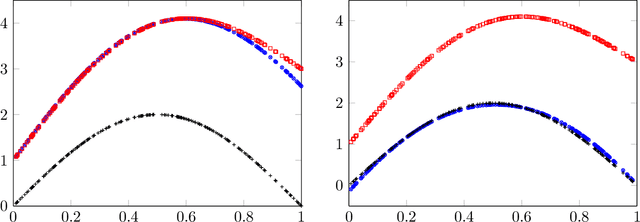

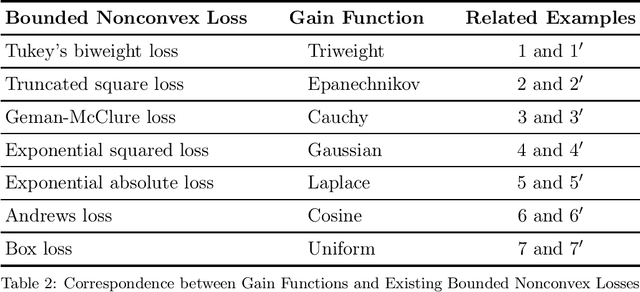

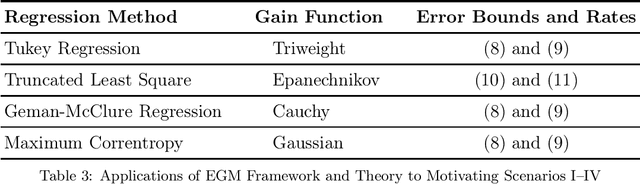

A Framework of Learning Through Empirical Gain Maximization

Sep 29, 2020

Abstract: In this paper, we develop a framework of empirical gain maximization (EGM) to address the robust regression problem in which heavy-tailed noise or outliers may be present in the response variable. The idea of EGM is to approximate the density function of the noise distribution instead of approximating the truth function directly, as is usually done. Unlike classical maximum likelihood estimation, which gives equal importance to all observations and can be problematic in the presence of abnormal observations, EGM schemes can be interpreted from a minimum distance estimation viewpoint and allow such observations to be ignored. Furthermore, it is shown that several well-known robust nonconvex regression paradigms, such as Tukey regression and truncated least squares regression, can be reformulated within this new framework. We then develop a learning theory for EGM, by means of which a unified analysis can be conducted for these well-established but not fully understood regression approaches. The new framework also yields a novel interpretation of existing bounded nonconvex loss functions. Within this framework, two seemingly unrelated notions, the well-known Tukey's biweight loss for robust regression and the triweight kernel for nonparametric smoothing, are closely related. More precisely, it is shown that Tukey's biweight loss can be derived from the triweight kernel. Similarly, other frequently employed bounded nonconvex loss functions in machine learning, such as the truncated square loss, the Geman-McClure loss, and the exponential squared loss, can also be reformulated from certain smoothing kernels in statistics. In addition, the new framework enables us to devise new bounded nonconvex loss functions for robust learning.
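
The link between Tukey's biweight loss and the triweight kernel can be checked numerically. The sketch below assumes the common textbook forms, K(u) = (35/32)(1 - u^2)^3 on |u| <= 1 for the kernel and rho(t) = (c^2/6)[1 - (1 - (t/c)^2)^3] on |t| <= c for the loss; the paper's normalization may differ, and the function names are hypothetical.

```python
def triweight_kernel(u: float) -> float:
    """Triweight smoothing kernel, supported on [-1, 1]."""
    if abs(u) > 1.0:
        return 0.0
    return (35.0 / 32.0) * (1.0 - u * u) ** 3


def tukey_biweight_loss(t: float, c: float) -> float:
    """Tukey's biweight loss with tuning constant c (bounded by c^2 / 6)."""
    if abs(t) > c:
        return c * c / 6.0
    return (c * c / 6.0) * (1.0 - (1.0 - (t / c) ** 2) ** 3)


def loss_from_kernel(t: float, c: float) -> float:
    """Same loss written as a constant minus a rescaled triweight kernel."""
    return (c * c / 6.0) * (1.0 - (32.0 / 35.0) * triweight_kernel(t / c))


# The two expressions agree inside and outside the kernel's support.
residuals = [-3.0, -1.0, 0.0, 0.5, 2.0, 5.0]
max_gap = max(abs(tukey_biweight_loss(t, 2.0) - loss_from_kernel(t, 2.0))
              for t in residuals)
```

Under these assumed forms, minimizing the biweight loss is the same as maximizing a sum of kernel values at the residuals, which matches the "gain maximization" reading in the abstract: residuals beyond `c` contribute no gain and are effectively ignored.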
