Pascal Fua

SuperPoint-E: local features for 3D reconstruction via tracking adaptation in endoscopy

Feb 04, 2026

Abstract:In this work, we focus on boosting the feature extraction to improve the performance of Structure-from-Motion (SfM) in endoscopy videos. We present SuperPoint-E, a new local feature extraction method that, using our proposed Tracking Adaptation supervision strategy, significantly improves the quality of feature detection and description in endoscopy. Extensive experimentation on real endoscopy recordings studies our approach's most suitable configuration and evaluates SuperPoint-E feature quality. The comparison with other baselines also shows that our 3D reconstructions are denser and cover more and longer video segments because our detector fires more densely and our features are more likely to survive (i.e. higher detection precision). In addition, our descriptor is more discriminative, making the guided matching step almost redundant. The presented approach brings significant improvements in the 3D reconstructions obtained, via SfM on endoscopy videos, compared to the original SuperPoint and the gold standard SfM COLMAP pipeline.

Single View Garment Reconstruction Using Diffusion Mapping Via Pattern Coordinates

Apr 11, 2025

Abstract:Reconstructing 3D clothed humans from images is fundamental to applications like virtual try-on, avatar creation, and mixed reality. While recent advances have enhanced human body recovery, accurate reconstruction of garment geometry -- especially for loose-fitting clothing -- remains an open challenge. We present a novel method for high-fidelity 3D garment reconstruction from single images that bridges 2D and 3D representations. Our approach combines Implicit Sewing Patterns (ISP) with a generative diffusion model to learn rich garment shape priors in a 2D UV space. A key innovation is our mapping model that establishes correspondences between 2D image pixels, UV pattern coordinates, and 3D geometry, enabling joint optimization of both 3D garment meshes and the corresponding 2D patterns by aligning learned priors with image observations. Despite training exclusively on synthetically simulated cloth data, our method generalizes effectively to real-world images, outperforming existing approaches on both tight- and loose-fitting garments. The reconstructed garments maintain physical plausibility while capturing fine geometric details, enabling downstream applications including garment retargeting and texture manipulation.

Do you understand epistemic uncertainty? Think again! Rigorous frequentist epistemic uncertainty estimation in regression

Mar 17, 2025

Abstract:Quantifying model uncertainty is critical for understanding prediction reliability, yet distinguishing between aleatoric and epistemic uncertainty remains challenging. We extend recent work from classification to regression to provide a novel frequentist approach to epistemic and aleatoric uncertainty estimation. We train models to generate conditional predictions by feeding their initial output back as an additional input. This method allows for a rigorous measurement of model uncertainty by observing how prediction responses change when conditioned on the model's previous answer. We provide a complete theoretical framework to analyze epistemic uncertainty in regression in a frequentist way, and explain how it can be exploited in practice to gauge a model's uncertainty, with minimal changes to the original architecture.

D3DR: Lighting-Aware Object Insertion in Gaussian Splatting

Mar 09, 2025

Abstract:Gaussian Splatting has become a popular technique for various 3D Computer Vision tasks, including novel view synthesis, scene reconstruction, and dynamic scene rendering. However, the challenge of natural-looking object insertion, where the object's appearance seamlessly matches the scene, remains unsolved. In this work, we propose a method, dubbed D3DR, for inserting a 3DGS-parametrized object into 3DGS scenes while correcting its lighting, shadows, and other visual artifacts to ensure consistency, a problem that has not been successfully addressed before. We leverage advances in diffusion models, which, trained on real-world data, implicitly understand correct scene lighting. After inserting the object, we optimize a diffusion-based Delta Denoising Score (DDS)-inspired objective to adjust its 3D Gaussian parameters for proper lighting correction. Utilizing diffusion model personalization techniques to improve optimization quality, our approach ensures seamless object insertion and natural appearance. Finally, we demonstrate the method's effectiveness by comparing it to existing approaches, achieving 0.5 PSNR and 0.15 SSIM improvements in relighting quality.

DiffAtlas: GenAI-fying Atlas Segmentation via Image-Mask Diffusion

Mar 09, 2025

Abstract:Accurate medical image segmentation is crucial for precise anatomical delineation. Deep learning models like U-Net have shown great success but depend heavily on large datasets and struggle with domain shifts, complex structures, and limited training samples. Recent studies have explored diffusion models for segmentation by iteratively refining masks. However, these methods still retain the conventional image-to-mask mapping, making them highly sensitive to input data, which hampers stability and generalization. In contrast, we introduce DiffAtlas, a novel generative framework that models both images and masks through diffusion during training, effectively "GenAI-fying" atlas-based segmentation. During testing, the model is guided to generate a specific target image-mask pair, from which the corresponding mask is obtained. DiffAtlas retains the robustness of the atlas paradigm while overcoming its scalability and domain-specific limitations. Extensive experiments on CT and MRI across same-domain, cross-modality, varying-domain, and different data-scale settings using the MMWHS and TotalSegmentator datasets demonstrate that our approach outperforms existing methods, particularly in limited-data and zero-shot modality segmentation. Code is available at https://github.com/M3DV/DiffAtlas.

PartSDF: Part-Based Implicit Neural Representation for Composite 3D Shape Parametrization and Optimization

Feb 18, 2025

Abstract:Accurate 3D shape representation is essential in engineering applications such as design, optimization, and simulation. In practice, engineering workflows require structured, part-aware representations, as objects are inherently designed as assemblies of distinct components. However, most existing methods either model shapes holistically or decompose them without predefined part structures, limiting their applicability in real-world design tasks. We propose PartSDF, a supervised implicit representation framework that explicitly models composite shapes with independent, controllable parts while maintaining shape consistency. Despite its simple single-decoder architecture, PartSDF outperforms both supervised and unsupervised baselines in reconstruction and generation tasks. We further demonstrate its effectiveness as a structured shape prior for engineering applications, enabling precise control over individual components while preserving overall coherence. Code available at https://github.com/cvlab-epfl/PartSDF.

Real-time Free-view Human Rendering from Sparse-view RGB Videos using Double Unprojected Textures

Dec 17, 2024

Abstract:Real-time free-view human rendering from sparse-view RGB inputs is a challenging task due to the sensor scarcity and the tight time budget. To ensure efficiency, recent methods leverage 2D CNNs operating in texture space to learn rendering primitives. However, they either jointly learn geometry and appearance, or completely ignore sparse image information for geometry estimation, significantly harming visual quality and robustness to unseen body poses. To address these issues, we present Double Unprojected Textures, which at its core disentangles coarse geometric deformation estimation from appearance synthesis, enabling robust and photorealistic 4K rendering in real-time. Specifically, we first introduce a novel image-conditioned template deformation network, which estimates the coarse deformation of the human template from a first unprojected texture. This updated geometry is then used to apply a second and more accurate texture unprojection. The resulting texture map has fewer artifacts and better alignment with input views, which benefits our learning of finer-level geometry and appearance represented by Gaussian splats. We validate the effectiveness and efficiency of the proposed method in quantitative and qualitative experiments, in which it significantly surpasses other state-of-the-art methods.

MedTet: An Online Motion Model for 4D Heart Reconstruction

Dec 03, 2024

Abstract:We present a novel approach to the reconstruction of 3D cardiac motion from sparse intraoperative data. While existing methods can accurately reconstruct 3D organ geometries from full 3D volumetric imaging, they cannot be used during surgical interventions, where usually only limited observed data, such as a few 2D frames or 1D signals, is available in real-time. We propose a versatile framework for reconstructing 3D motion from such partial data. It discretizes the 3D space into a deformable tetrahedral grid with signed distance values, providing implicit unlimited resolution while maintaining explicit control over motion dynamics. Given an initial 3D model reconstructed from pre-operative full volumetric data, our system, equipped with a universal observation encoder, can reconstruct coherent 3D cardiac motion from full 3D volumes, a few 2D MRI slices or even 1D signals. Extensive experiments on cardiac intervention scenarios demonstrate our ability to generate plausible and anatomically consistent 3D motion reconstructions from various sparse real-time observations, highlighting its potential for multimodal cardiac imaging. Our code and model will be made available at https://github.com/Scalsol/MedTet.

Counting Stacked Objects from Multi-View Images

Nov 28, 2024

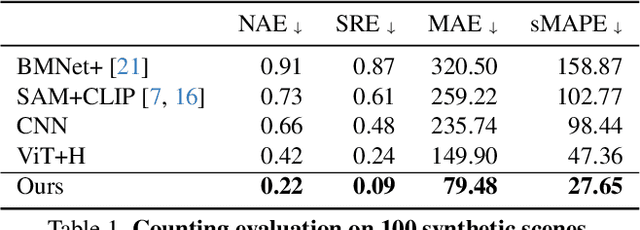

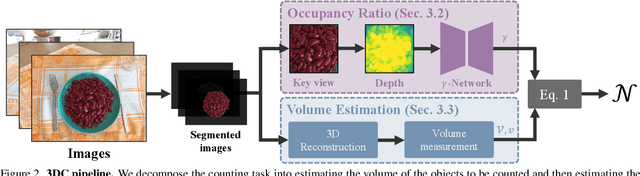

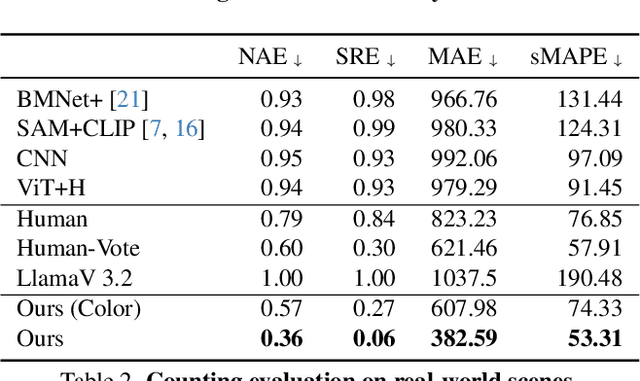

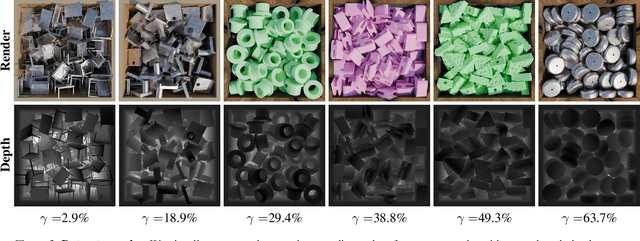

Abstract:Visual object counting is a fundamental computer vision task underpinning numerous real-world applications, from cell counting in biomedicine to traffic and wildlife monitoring. However, existing methods struggle to handle the challenge of stacked 3D objects in which most objects are hidden by those above them. To address this important yet underexplored problem, we propose a novel 3D counting approach that decomposes the task into two complementary subproblems - estimating the 3D geometry of the object stack and the occupancy ratio from multi-view images. By combining geometric reconstruction and deep learning-based depth analysis, our method can accurately count identical objects within containers, even when they are irregularly stacked. We validate our 3D Counting pipeline on diverse real-world and large-scale synthetic datasets, which we will release publicly to facilitate further research.
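The decomposition described in this abstract can be illustrated with a small arithmetic sketch. It assumes, as a simplification not stated in the abstract, that the count is the stack volume times the occupancy ratio divided by the volume of one object; the function name `estimate_count` and its parameters are hypothetical, and the actual pipeline may combine the two estimates differently.

```python
def estimate_count(stack_volume: float, occupancy_ratio: float,
                   object_volume: float) -> int:
    """Hypothetical helper: combine a stack volume and an occupancy ratio.

    stack_volume    -- volume enclosed by the reconstructed stack geometry
    occupancy_ratio -- fraction of that volume actually filled by objects
    object_volume   -- volume of a single object (same units as stack_volume)
    """
    if stack_volume < 0 or object_volume <= 0:
        raise ValueError("volumes must be positive")
    if not 0.0 <= occupancy_ratio <= 1.0:
        raise ValueError("occupancy_ratio must lie in [0, 1]")
    # Occupied volume divided by the per-object volume (an assumed formula).
    return round(stack_volume * occupancy_ratio / object_volume)


# Illustrative numbers only: a 1000 cm^3 stack, half filled, 2 cm^3 objects.
count = estimate_count(1000.0, 0.5, 2.0)
```

Splitting the problem this way means the hidden objects never need to be detected individually; only the two aggregate quantities have to be estimated.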

No Identity, no problem: Motion through detection for people tracking

Nov 25, 2024

Abstract:Tracking-by-detection has become the de facto standard approach to people tracking. To increase robustness, some approaches incorporate re-identification using appearance models and regressing motion offset, which requires costly identity annotations. In this paper, we propose exploiting motion clues while providing supervision only for the detections, which is much easier to do. Our algorithm predicts detection heatmaps at two different times, along with a 2D motion estimate between the two images. It then warps one heatmap using the motion estimate and enforces consistency with the other one. This provides the required supervisory signal on the motion without the need for any motion annotations. In this manner, we couple the information obtained from different images during training and increase accuracy, especially in crowded scenes and when using low frame-rate sequences. We show that our approach delivers state-of-the-art results for single- and multi-view multi-target tracking on the MOT17 and WILDTRACK datasets.
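The warp-and-compare idea in this abstract can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's implementation: heatmaps are plain nested lists, the motion estimate is an integer backward offset `(dy, dx)` per pixel, and the consistency term is a mean squared error. The names `warp_heatmap` and `consistency_loss` are hypothetical.

```python
def warp_heatmap(heatmap, flow):
    """Backward-warp a heatmap: each output pixel reads from (y + dy, x + dx)."""
    h, w = len(heatmap), len(heatmap[0])
    warped = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dy, dx = flow[y][x]
            sy, sx = y + dy, x + dx
            # Pixels whose source falls outside the image stay at zero.
            if 0 <= sy < h and 0 <= sx < w:
                warped[y][x] = heatmap[sy][sx]
    return warped


def consistency_loss(heat_t0, heat_t1, flow):
    """Mean squared difference between the warped t0 heatmap and the t1 heatmap."""
    warped = warp_heatmap(heat_t0, flow)
    h, w = len(heat_t1), len(heat_t1[0])
    total = sum((warped[y][x] - heat_t1[y][x]) ** 2
                for y in range(h) for x in range(w))
    return total / (h * w)


# Toy example: one detection moves one pixel to the right between frames.
heat_t0 = [[0.0] * 4 for _ in range(4)]
heat_t1 = [[0.0] * 4 for _ in range(4)]
heat_t0[1][1] = 1.0
heat_t1[1][2] = 1.0
correct_flow = [[(0, -1)] * 4 for _ in range(4)]  # each pixel looks one step left
zero_flow = [[(0, 0)] * 4 for _ in range(4)]

loss_correct = consistency_loss(heat_t0, heat_t1, correct_flow)
loss_zero = consistency_loss(heat_t0, heat_t1, zero_flow)
```

In the toy example the correct motion yields a lower loss than zero motion, which is the sense in which detection supervision alone can constrain the motion estimate.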
