Mingbao Lin

Real-Time Image Demoireing on Mobile Devices

Feb 04, 2023

Abstract: Moire patterns appear frequently when taking photos of digital screens, drastically degrading the image quality. Despite the advance of CNNs in image demoireing, existing networks are heavily designed, imposing a redundant computational burden on mobile devices. In this paper, we launch the first study on accelerating demoireing networks and propose a dynamic demoireing acceleration method (DDA) towards real-time deployment on mobile devices. Our motivation stems from a simple yet universal fact: moire patterns are often distributed unevenly across an image, so excessive computation is wasted on non-moire areas. Therefore, we reallocate computation costs in proportion to the complexity of image patches. To this end, we measure the complexity of an image patch by designing a novel moire prior that considers both the colorfulness and the frequency information of moire patterns. Then, we restore image patches of higher complexity using larger networks, while patches of lower complexity are assigned to smaller networks to relieve the computation burden. Finally, we train all networks in a parameter-shared supernet paradigm to avoid an additional parameter burden. Extensive experiments on several benchmarks demonstrate the efficacy of our proposed DDA. In addition, the acceleration evaluated on the VIVO X80 Pro smartphone, equipped with a Snapdragon 8 Gen 1 chip, shows that our method can drastically reduce the inference time, leading to real-time image demoireing on mobile devices. Source code and models are released at https://github.com/zyxxmu/DDA

Low-Rank Winograd Transformation for 3D Convolutional Neural Networks

Jan 26, 2023

Abstract: This paper focuses on Winograd transformation in 3D convolutional neural networks (CNNs), which are more over-parameterized than their 2D counterparts. The increased number of Winograd parameters not only exacerbates training complexity but also hinders practical speedups, due simply to the volume of element-wise products in the Winograd domain. We attempt to reduce trainable parameters by introducing a low-rank Winograd transformation, a novel training paradigm that decouples the original large tensor into two smaller trainable tensors that require less storage, leading to a significant complexity reduction. Built upon our low-rank Winograd transformation, we go one step further by proposing a low-rank oriented sparse granularity that measures column-wise parameter importance. By involving only the non-zero columns in the element-wise product, our sparse granularity produces a very regular sparse pattern that yields effective Winograd speedups. To better understand the efficacy of our method, we perform extensive experiments on 3D CNNs. Results show that our low-rank Winograd transformation considerably outperforms the vanilla Winograd transformation. We also show that our proposed low-rank oriented sparse granularity permits practical Winograd acceleration compared with the vanilla counterpart.
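The storage saving behind a low-rank decomposition can be illustrated with a generic matrix factorization. The sketch below is only an illustration under stated assumptions: the abstract does not give the tensor shapes or the exact form of the decomposition, so the two-factor product `U @ V`, the 64x64 shape, the rank of 4, and the helper names `matmul` and `param_count` are all hypothetical.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    inner = len(b)
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def param_count(rows, cols, rank=None):
    """Trainable parameters of a dense rows x cols matrix, or of its
    rank-`rank` factorization into (rows x rank) and (rank x cols)."""
    if rank is None:
        return rows * cols
    return rank * (rows + cols)


# Hypothetical rank-1 example: a 3x2 matrix stored as a 3x1 and a 1x2 factor.
U = [[1], [2], [3]]
V = [[4, 5]]
W = matmul(U, V)  # the full matrix is only materialized when needed

dense = param_count(64, 64)             # one large trainable tensor
low_rank = param_count(64, 64, rank=4)  # two smaller trainable tensors
```

Only the two factors are trained and stored; the saving grows as the rank shrinks relative to the matrix dimensions.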

Discriminator-Cooperated Feature Map Distillation for GAN Compression

Dec 29, 2022

Abstract: Despite excellent performance in image generation, Generative Adversarial Networks (GANs) are notorious for their requirements of enormous storage and intensive computation. As a strong "performance maker", knowledge distillation has been shown to be particularly effective in exploring low-cost GANs. In this paper, we investigate the irreplaceability of the teacher discriminator and present an inventive discriminator-cooperated distillation, abbreviated as DCD, towards refining better feature maps from the generator. In contrast to conventional pixel-to-pixel matching methods in feature map distillation, our DCD utilizes the teacher discriminator as a transformation to drive intermediate results of the student generator to be perceptually close to the corresponding outputs of the teacher generator. Furthermore, in order to mitigate mode collapse in GAN compression, we construct a collaborative adversarial training paradigm in which the teacher discriminator is established from scratch to co-train with the student generator alongside our DCD. Our DCD shows superior results compared with existing GAN compression methods. For instance, after reducing the MACs of CycleGAN by over 40x and its parameters by over 80x, we decrease the FID from 61.53 to 48.24, while the current SoTA method only reaches 51.92. The source code of this work is available at https://github.com/poopit/DCD-official.

SMMix: Self-Motivated Image Mixing for Vision Transformers

Dec 26, 2022

Abstract: CutMix is a vital augmentation strategy that determines the performance and generalization ability of vision transformers (ViTs). However, the inconsistency between the mixed images and the corresponding labels harms its efficacy. Existing CutMix variants tackle this problem by generating more consistent mixed images or more precise mixed labels, but inevitably introduce heavy training overhead or require extra information, undermining ease of use. To this end, we propose an efficient and effective Self-Motivated image Mixing method (SMMix), which motivates both image and label enhancement by the model under training itself. Specifically, we propose a max-min attention region mixing approach that enriches the attention-focused objects in the mixed images. Then, we introduce a fine-grained label assignment technique that co-trains the output tokens of mixed images with fine-grained supervision. Moreover, we devise a novel feature consistency constraint to align features from mixed and unmixed images. Owing to the subtle designs of the self-motivated paradigm, our SMMix stands out for its smaller training overhead and better performance compared with other CutMix variants. In particular, SMMix improves the accuracy of DeiT-T/S, CaiT-XXS-24/36, and PVT-T/S/M/L by more than +1% on ImageNet-1k. The generalization capability of our method is also demonstrated on downstream tasks and out-of-distribution datasets. The code of this project is available at https://github.com/ChenMnZ/SMMix.

Exploring Content Relationships for Distilling Efficient GANs

Dec 21, 2022

Abstract: This paper proposes content relationship distillation (CRD) to tackle over-parameterized generative adversarial networks (GANs) for serviceability in cutting-edge devices. In contrast to traditional instance-level distillation, we design a novel form of GAN-compression-oriented knowledge by slicing the contents of teacher outputs into multiple fine-grained granularities, such as row/column strips (global information) and image patches (local information), modeling the relationships among them, such as pairwise distance and triplet-wise angle, and encouraging the student to capture these relationships within its output contents. Built upon our proposed content-level distillation, we also deploy an online teacher discriminator, which keeps updating when co-trained with the teacher generator and stays frozen when co-trained with the student generator for better adversarial training. We perform extensive experiments on three benchmark datasets, the results of which show that our CRD achieves the greatest complexity reduction on GANs while obtaining the best performance in comparison with existing methods. For example, we reduce the MACs of CycleGAN by around 40x and its parameters by over 80x, while obtaining an FID of 46.61, compared with 51.92 for the current state-of-the-art. The code of this project is available at https://github.com/TheKernelZ/CRD.

Shadow Removal by High-Quality Shadow Synthesis

Dec 08, 2022

Abstract: Most shadow removal methods rely on training images paired with laborious and costly shadow region annotations, leading to the increasing popularity of shadow image synthesis. However, poor performance also stems from these synthesized images, since they are often shadow-inauthentic and details-impaired. In this paper, we present a novel generation framework, referred to as HQSS, for high-quality pseudo shadow image synthesis. The given image is first decoupled into a shadow region identity and a non-shadow region identity. HQSS employs a shadow feature encoder and a generator to synthesize pseudo images. Specifically, the encoder extracts the shadow feature of a region identity, which is then paired with another region identity to serve as the generator input for synthesizing a pseudo image. The pseudo image is expected to carry the same shadow feature as its input shadow feature, as well as realistic image details matching its input region identity. To fulfill this goal, we design three learning objectives. When the shadow feature and the input region identity are from the same region identity, we propose a self-reconstruction loss that guides the generator to reconstruct a pseudo image identical to its input. When the shadow feature and the input region identity are from different identities, we introduce an inter-reconstruction loss and a cycle-reconstruction loss to make sure that shadow characteristics and detail information are well retained in the synthesized images. Our HQSS is observed to outperform the state-of-the-art methods on the ISTD dataset, the Video Shadow Removal dataset, and the SRD dataset. The code is available at https://github.com/zysxmu/HQSS.

Exploiting the Partly Scratch-off Lottery Ticket for Quantization-Aware Training

Nov 21, 2022

Abstract: Quantization-aware training (QAT) has become widely popular because it retains the performance of quantized networks well. In QAT, the conventional practice is to update all quantized weights throughout the entire training process. In this paper, we challenge this practice based on an interesting phenomenon we observed. Specifically, a large portion of quantized weights reach the optimal quantization level after a few training epochs, which we refer to as the partly scratch-off lottery ticket. This straightforward yet valuable observation naturally inspires us to zero out the gradient calculations of these weights in the remaining training period to avoid meaningless updating. To effectively find the ticket, we develop a heuristic method, dubbed lottery ticket scratcher (LTS), which freezes a weight once the distance between the full-precision weight and its quantization level is smaller than a controllable threshold. Surprisingly, the proposed LTS typically eliminates 30%-60% of weight updating and 15%-30% of the FLOPs of the backward pass, while still achieving performance on par with or even better than the compared baseline. For example, compared with the baseline, LTS improves 2-bit ResNet-18 by 1.41%, eliminating 56% of weight updating and 28% of the FLOPs of the backward pass. Code is at https://github.com/zysxmu/LTS.
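The freezing rule described in the abstract can be sketched in a few lines. This is a minimal illustration rather than the released LTS implementation: the uniform quantizer, the `step` and `threshold` values, and the function names `quantize`, `update_frozen`, and `sgd_step` are assumptions made for the example, and the abstract does not say how often the freezing check is run.

```python
def quantize(w, step):
    """Assumed uniform quantizer: snap w to the nearest multiple of step."""
    return round(w / step) * step


def update_frozen(weights, frozen, step, threshold):
    """Freeze a weight once its full-precision value lies within
    `threshold` of its quantization level. Frozen weights stay frozen."""
    return [
        f or abs(w - quantize(w, step)) < threshold
        for w, f in zip(weights, frozen)
    ]


def sgd_step(weights, grads, frozen, lr):
    """Plain SGD update that skips frozen weights entirely."""
    return [w if f else w - lr * g for w, g, f in zip(weights, grads, frozen)]


weights = [0.49, 0.26, -0.51]
frozen = update_frozen(weights, [False] * 3, step=0.5, threshold=0.05)
updated = sgd_step(weights, [1.0, 1.0, 1.0], frozen, lr=0.1)
```

In this toy run the first and third weights already sit close to the levels 0.5 and -0.5, so they are frozen and only the middle weight is updated.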

LAB-Net: LAB Color-Space Oriented Lightweight Network for Shadow Removal

Aug 27, 2022

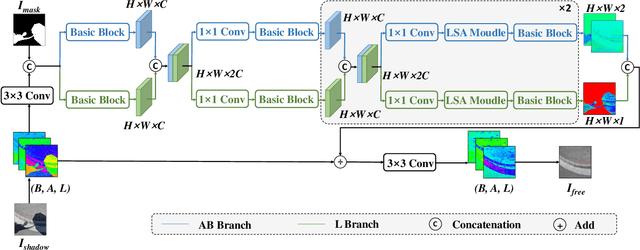

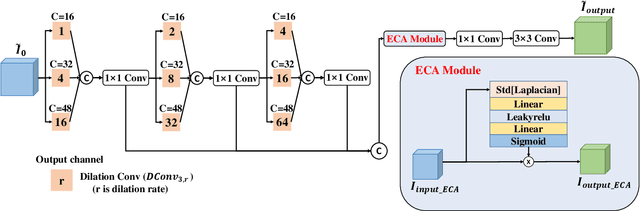

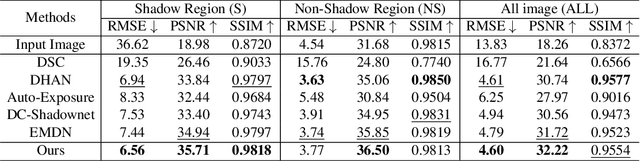

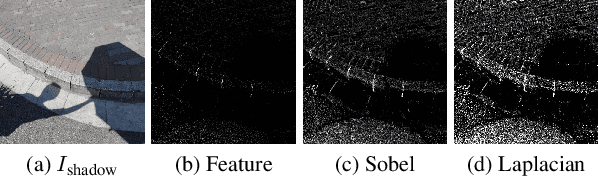

Abstract: This paper focuses on the limitations of current over-parameterized shadow removal models. We present a novel lightweight deep neural network that processes shadow images in the LAB color space. The proposed network, termed "LAB-Net", is motivated by the following three observations. First, the LAB color space separates luminance information and color properties well. Second, sequentially stacked convolutional layers fail to make full use of features from different receptive fields. Third, non-shadow regions provide important prior knowledge for diminishing the drastic color difference between shadow and non-shadow regions. Consequently, we design our LAB-Net with a two-branch structure: L and AB branches. The shadow-related luminance information is thus processed in the L branch, while the color property is retained in the AB branch. In addition, each branch is composed of several Basic Blocks, local spatial attention modules (LSA), and convolutional filters. Each Basic Block consists of multiple parallelized dilated convolutions with different dilation rates to obtain different receptive fields, operated with distinct network widths to save model parameters and computational costs. Then, an enhanced channel attention module (ECA) is constructed to aggregate features from different receptive fields for better shadow removal. Finally, the LSA modules are further developed to fully use the prior information in non-shadow regions to cleanse the shadow regions. We perform extensive experiments on both the ISTD and SRD datasets. Experimental results show that our LAB-Net outperforms state-of-the-art methods by a clear margin. In addition, our model's parameters and computational costs are reduced by several orders of magnitude. Our code is available at https://github.com/ngrxmu/LAB-Net.

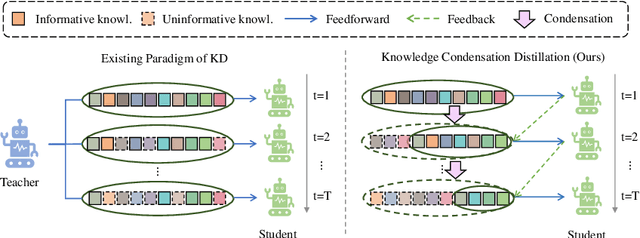

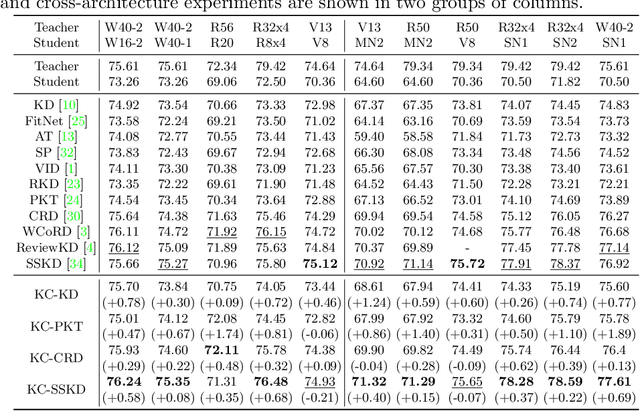

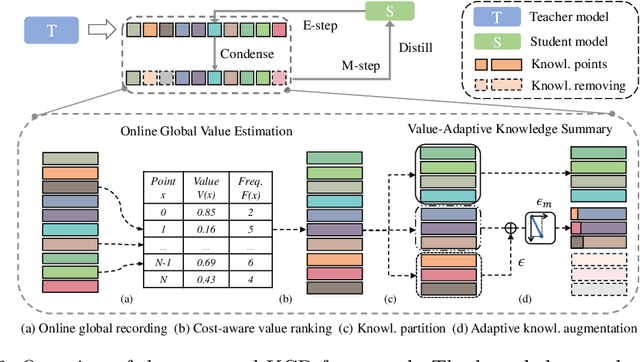

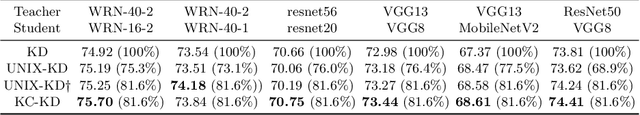

Knowledge Condensation Distillation

Jul 12, 2022

Abstract: Knowledge Distillation (KD) transfers the knowledge from a high-capacity teacher network to strengthen a smaller student. Existing methods focus on excavating the knowledge hints and transferring the whole knowledge to the student. However, knowledge redundancy arises since the knowledge shows different values to the student at different learning stages. In this paper, we propose Knowledge Condensation Distillation (KCD). Specifically, the knowledge value of each sample is dynamically estimated, based on which an Expectation-Maximization (EM) framework is built to iteratively condense a compact knowledge set from the teacher to guide the student's learning. Our approach is easy to build on top of off-the-shelf KD methods, with no extra training parameters and negligible computation overhead. It thus presents a new perspective for KD, in which a student that actively identifies the teacher's knowledge in line with its aptitude can learn more effectively and efficiently. Experiments on standard benchmarks show that the proposed KCD boosts the performance of the student model with even higher distillation efficiency. Code is available at https://github.com/dzy3/KCD.

Learning Best Combination for Efficient N:M Sparsity

Jun 14, 2022

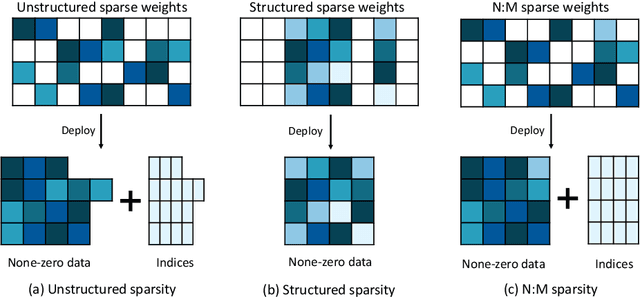

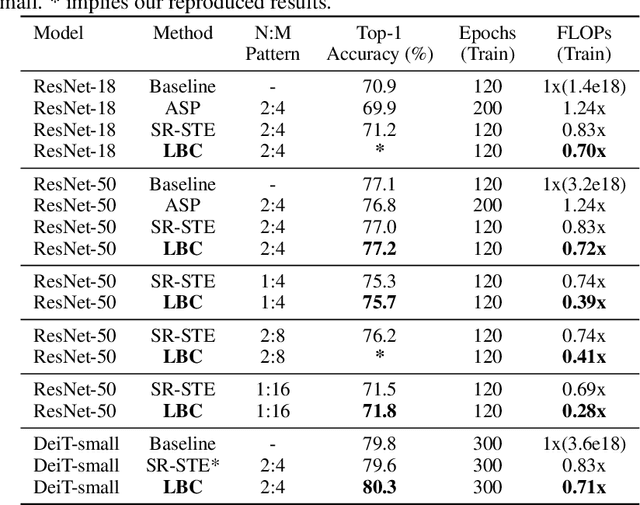

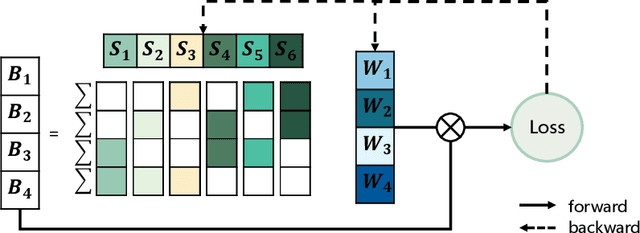

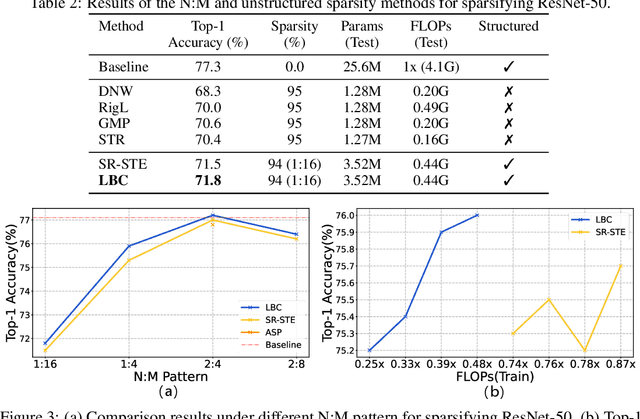

Abstract: By forcing at most N out of M consecutive weights to be non-zero, the recent N:M network sparsity has received increasing attention for its two attractive advantages: 1) promising performance at high sparsity and 2) significant speedups on NVIDIA A100 GPUs. Recent studies require an expensive pre-training phase or a heavy dense-gradient computation. In this paper, we show that N:M learning can be naturally characterized as a combinatorial problem that searches for the best combination candidate within a finite collection. Motivated by this characteristic, we solve N:M sparsity in an efficient divide-and-conquer manner. First, we divide the weight vector into $C_{\text{M}}^{\text{N}}$ combination subsets of a fixed size N. Then, we conquer the combinatorial problem by assigning each combination a learnable score that is jointly optimized with its associated weights. We prove that the introduced scoring mechanism can model the relative importance between combination subsets well. By gradually removing low-scored subsets, N:M fine-grained sparsity can be efficiently optimized during the normal training phase. Comprehensive experiments demonstrate that our learning best combination (LBC) performs consistently better than off-the-shelf N:M sparsity methods across various networks. Our code is released at https://github.com/zyxxmu/LBC.
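The N:M constraint and the size of the combination search space can be made concrete with a short sketch. Note the assumption: the mask below keeps the N largest-magnitude weights in each group, which is a simple magnitude baseline swapped in for illustration and not the learned scoring of LBC. The names `nm_mask` and `candidate_subsets` are hypothetical.

```python
from itertools import combinations
from math import comb


def nm_mask(weights, n, m):
    """Binary mask keeping the n largest-magnitude weights in every group
    of m consecutive weights (magnitude baseline, not LBC's learned scores)."""
    if len(weights) % m != 0:
        raise ValueError("weight count must be a multiple of m")
    mask = []
    for start in range(0, len(weights), m):
        group = weights[start:start + m]
        ranked = sorted(range(m), key=lambda i: abs(group[i]), reverse=True)
        keep = set(ranked[:n])
        mask.extend(1 if i in keep else 0 for i in range(m))
    return mask


def candidate_subsets(m, n):
    """All index subsets of size n within one group of m weights."""
    return list(combinations(range(m), n))


weights = [0.1, -0.9, 0.3, 0.05, 0.7, 0.2, -0.6, 0.0]
mask = nm_mask(weights, n=2, m=4)
subsets = candidate_subsets(4, 2)
```

For 2:4 sparsity each group of four weights has `comb(4, 2)` candidate subsets, which is the finite collection that a combination-based search would choose from.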
