Heavy-Tailed Universality Predicts Trends in Test Accuracies for Very Large Pre-Trained Deep Neural Networks

Jan 24, 2019 · Charles H. Martin, Michael W. Mahoney

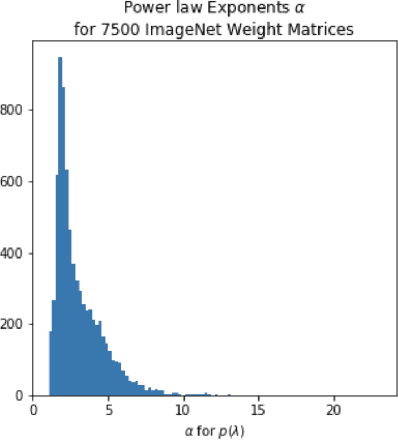

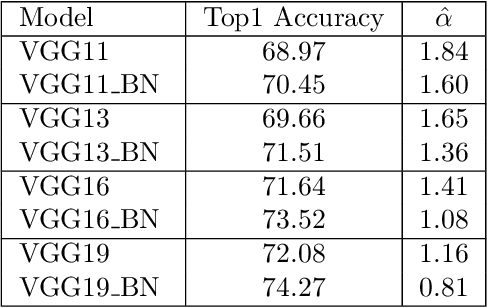

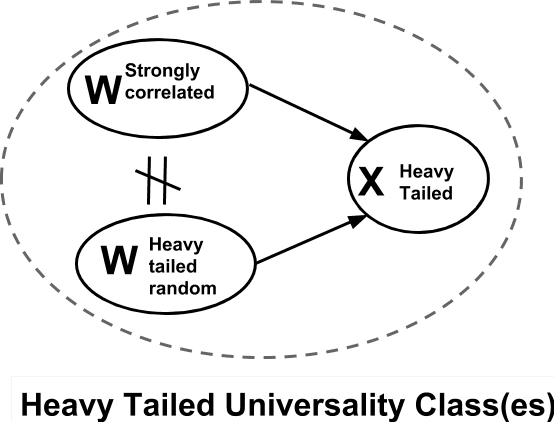

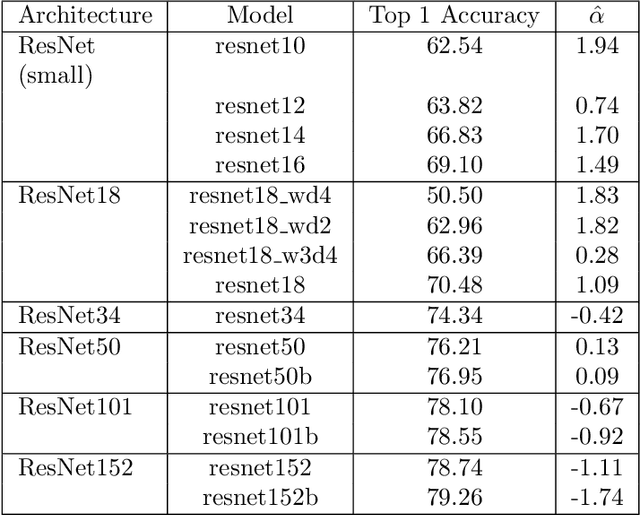

Given two or more Deep Neural Networks (DNNs) with the same or similar architectures, trained on the same dataset but with different solvers, parameters, hyper-parameters, regularization, etc., can we predict which DNN will have the best test accuracy, and can we do so without peeking at the test data? In this paper, we show how to use a new Theory of Heavy-Tailed Self-Regularization (HT-SR) to answer this. HT-SR suggests, among other things, that modern DNNs exhibit what we call Heavy-Tailed Mechanistic Universality (HT-MU), meaning that the correlations in the layer weight matrices can be fit to power laws (PLs) with exponents that lie in common Universality classes from Heavy-Tailed Random Matrix Theory (HT-RMT). From this, we develop a Universal capacity control metric that is a weighted average of these PL exponents. Rather than considering small toy NNs, we examine over 50 different, large-scale pre-trained DNNs, ranging over 15 different architectures, trained on ImageNet, each of which has been reported to have different test accuracies. We show that this new capacity metric correlates very well with the reported test accuracies of these DNNs, looking across each architecture (VGG16/.../VGG19, ResNet10/.../ResNet152, etc.). We also show how to approximate the metric by the more familiar Product Norm capacity measure, computed as the average of the log Frobenius norms of the layer weight matrices. Our approach requires no changes to the underlying DNN or its loss function, it does not require us to train a model (although it could be used to monitor training), and it does not even require access to the ImageNet data.
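A minimal sketch of the two layer-wise metrics described above, assuming each layer is a dense NumPy matrix W (N x M, oriented so N >= M) whose ESD is the set of eigenvalues of X = W^T W / N. The PL exponent is fit with the continuous power-law maximum-likelihood estimator at a fixed lower cutoff `xmin` (an assumption; cutoffs are usually chosen by minimizing a Kolmogorov-Smirnov distance), and weighting each exponent by the log of the layer's largest eigenvalue is our assumption about the weighting. All function names are hypothetical.

```python
import numpy as np

def layer_esd(W):
    """Eigenvalues of X = W^T W / N, with W oriented so that N >= M."""
    if W.shape[0] < W.shape[1]:
        W = W.T
    N = W.shape[0]
    return np.linalg.eigvalsh(W.T @ W / N)

def fit_pl_alpha(eigs, xmin_quantile=0.5):
    """Continuous power-law MLE for a density rho(x) ~ x^(-alpha) on x >= xmin.

    Fixing xmin at a quantile of the ESD is a simplifying assumption.
    """
    xmin = np.quantile(eigs, xmin_quantile)
    tail = eigs[eigs >= xmin]
    return 1.0 + tail.size / np.sum(np.log(tail / xmin))

def weighted_alpha(weights, xmin_quantile=0.5):
    """Average over layers of alpha_l * log10(lambda_max_l) (assumed weighting)."""
    scores = []
    for W in weights:
        eigs = layer_esd(W)
        scores.append(fit_pl_alpha(eigs, xmin_quantile) * np.log10(eigs.max()))
    return float(np.mean(scores))

def mean_log_frobenius(weights):
    """Average of log10 ||W_l||_F over layers (Product Norm style proxy)."""
    return float(np.mean([np.log10(np.linalg.norm(W)) for W in weights]))

# Usage on hypothetical random "layers"; real use would load pre-trained weights.
rng = np.random.default_rng(0)
layers = [rng.standard_normal((300, 100)), rng.standard_normal((200, 400))]
print(weighted_alpha(layers), mean_log_frobenius(layers))
```

Since log10 ||W||_F and log10 ||W||_F^2 differ only by a factor of 2, either version ranks a set of models identically.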

Traditional and Heavy-Tailed Self Regularization in Neural Network Models

Jan 24, 2019 · Charles H. Martin, Michael W. Mahoney

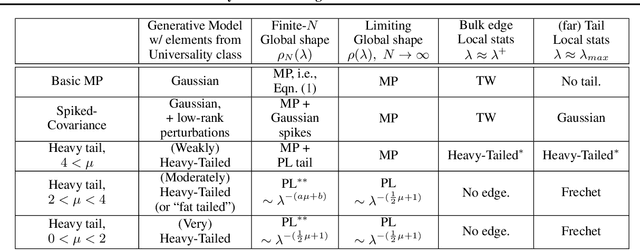

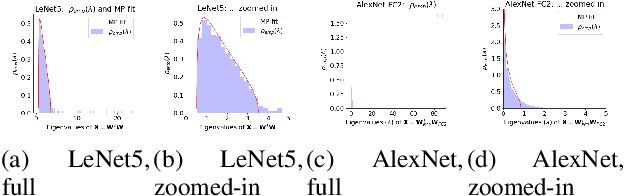

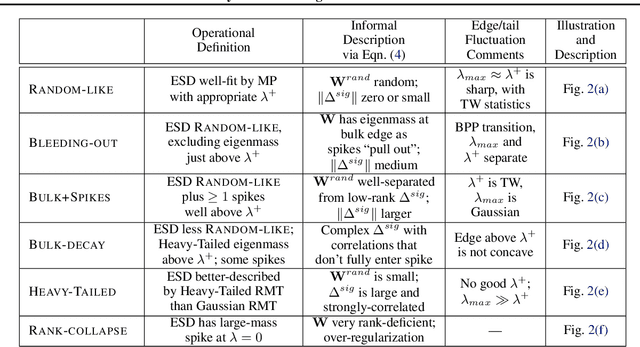

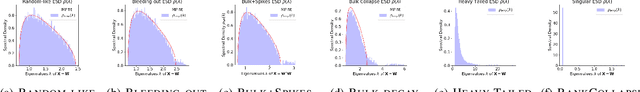

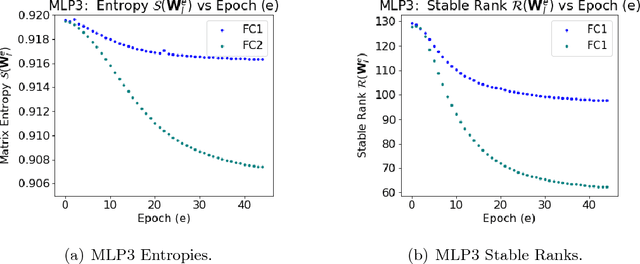

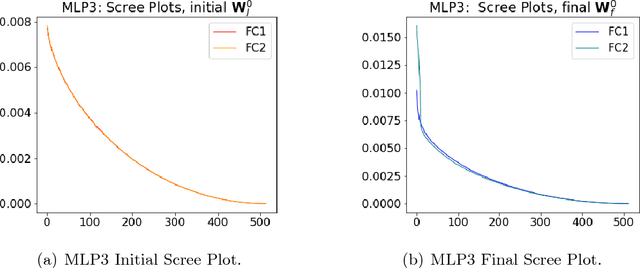

Random Matrix Theory (RMT) is applied to analyze the weight matrices of Deep Neural Networks (DNNs), including both production-quality, pre-trained models such as AlexNet and Inception, and smaller models trained from scratch, such as LeNet5 and a miniature-AlexNet. Empirical and theoretical results clearly indicate that the empirical spectral density (ESD) of DNN layer matrices displays signatures of traditionally-regularized statistical models, even in the absence of exogenously specifying traditional forms of regularization, such as Dropout or Weight Norm constraints. Building on recent results in RMT, most notably its extension to Universality classes of Heavy-Tailed matrices, we develop a theory to identify 5+1 Phases of Training, corresponding to increasing amounts of Implicit Self-Regularization. For smaller and/or older DNNs, this Implicit Self-Regularization is like traditional Tikhonov regularization, in that there is a "size scale" separating signal from noise. For state-of-the-art DNNs, however, we identify a novel form of Heavy-Tailed Self-Regularization, similar to the self-organization seen in the statistical physics of disordered systems. This implicit Self-Regularization can depend strongly on the many knobs of the training process. By exploiting the generalization gap phenomenon, we demonstrate that we can cause a small model to exhibit all 5+1 phases of training simply by changing the batch size.
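The "size scale" separating signal from noise can be illustrated by comparing a layer's ESD with the Marchenko-Pastur (MP) law for a purely random matrix of the same shape: eigenvalues of X = W^T W / N beyond the MP bulk edge sigma^2 (1 + sqrt(M/N))^2 behave like signal "spikes". This sketch assumes i.i.d. entries with variance estimated by `np.var(W)` and ignores finite-size Tracy-Widom fluctuations at the edge; the helper names are hypothetical.

```python
import numpy as np

def esd(W):
    """Eigenvalues of X = W^T W / N for an N x M matrix, oriented so N >= M."""
    if W.shape[0] < W.shape[1]:
        W = W.T
    N = W.shape[0]
    return np.linalg.eigvalsh(W.T @ W / N)

def mp_bulk_edge(W):
    """Upper MP edge sigma^2 (1 + sqrt(M/N))^2, with sigma^2 estimated from W."""
    N, M = max(W.shape), min(W.shape)
    return np.var(W) * (1.0 + np.sqrt(M / N)) ** 2

def count_spikes(W):
    """Number of eigenvalues lying above the MP bulk edge."""
    return int(np.sum(esd(W) > mp_bulk_edge(W)))

rng = np.random.default_rng(0)
N, M = 1000, 500
W_noise = rng.standard_normal((N, M))
u = rng.standard_normal(N)
u /= np.linalg.norm(u)
v = rng.standard_normal(M)
v /= np.linalg.norm(v)
# Add a strong rank-1 "signal" on top of the random bulk.
W_signal = W_noise + 3.0 * np.sqrt(N) * np.outer(u, v)
print(count_spikes(W_noise), count_spikes(W_signal))
```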

On the Computational Inefficiency of Large Batch Sizes for Stochastic Gradient Descent

Nov 30, 2018 · Noah Golmant, Nikita Vemuri, Zhewei Yao, Vladimir Feinberg, Amir Gholami, Kai Rothauge, Michael W. Mahoney, Joseph Gonzalez

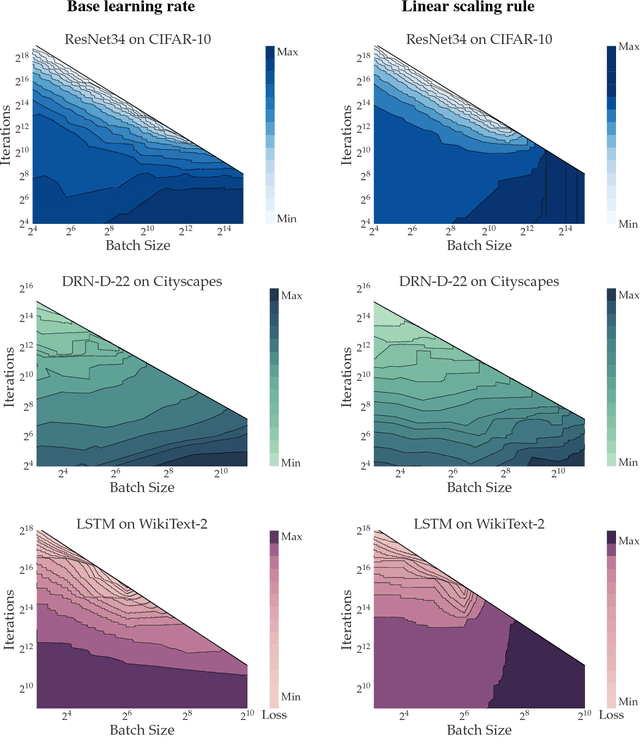

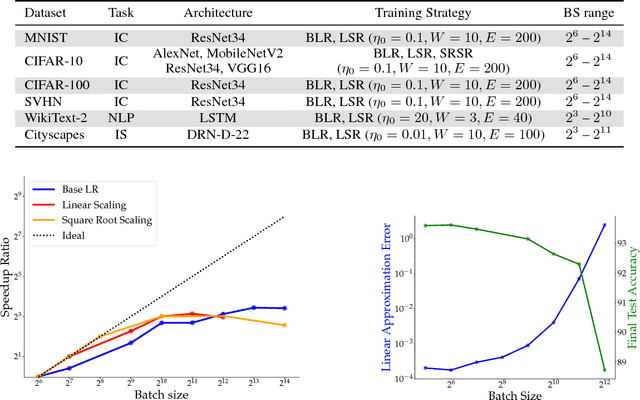

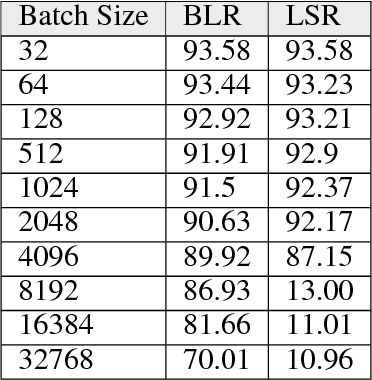

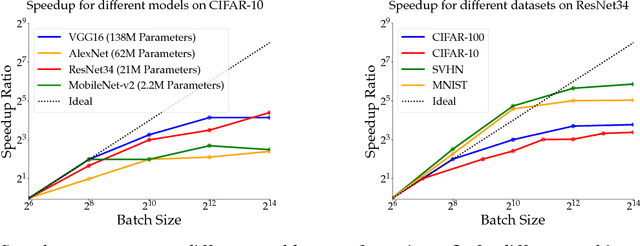

Increasing the mini-batch size for stochastic gradient descent offers significant opportunities to reduce wall-clock training time, but there are a variety of theoretical and systems challenges that impede the widespread success of this technique. We investigate these issues, with an emphasis on time to convergence and total computational cost, through an extensive empirical analysis of network training across several architectures and problem domains, including image classification, image segmentation, and language modeling. Although it is common practice to increase the batch size in order to fully exploit available computational resources, we find a substantially more nuanced picture. Our main finding is that across a wide range of network architectures and problem domains, increasing the batch size beyond a certain point yields no decrease in wall-clock time to convergence for either train or test loss. This batch size is usually substantially below the capacity of current systems. We show that popular training strategies for large batch size optimization begin to fail before we can populate all available compute resources, and we show that the point at which these methods break down depends more on attributes like model architecture and data complexity than it does directly on the size of the dataset.

Implicit Self-Regularization in Deep Neural Networks: Evidence from Random Matrix Theory and Implications for Learning

Oct 02, 2018 · Charles H. Martin, Michael W. Mahoney

Random Matrix Theory (RMT) is applied to analyze weight matrices of Deep Neural Networks (DNNs), including both production-quality, pre-trained models such as AlexNet and Inception, and smaller models trained from scratch, such as LeNet5 and a miniature-AlexNet. Empirical and theoretical results clearly indicate that the DNN training process itself implicitly implements a form of Self-Regularization. The empirical spectral density (ESD) of DNN layer matrices displays signatures of traditionally-regularized statistical models, even in the absence of exogenously specifying traditional forms of explicit regularization. Building on relatively recent results in RMT, most notably its extension to Universality classes of Heavy-Tailed matrices, we develop a theory to identify 5+1 Phases of Training, corresponding to increasing amounts of Implicit Self-Regularization. These phases can be observed during the training process as well as in the final learned DNNs. For smaller and/or older DNNs, this Implicit Self-Regularization is like traditional Tikhonov regularization, in that there is a "size scale" separating signal from noise. For state-of-the-art DNNs, however, we identify a novel form of Heavy-Tailed Self-Regularization, similar to the self-organization seen in the statistical physics of disordered systems. This results from correlations arising at all size scales, which arise implicitly from the training process itself. This implicit Self-Regularization can depend strongly on the many knobs of the training process. By exploiting the generalization gap phenomenon, we demonstrate that we can cause a small model to exhibit all 5+1 phases of training simply by changing the batch size. This demonstrates that, all else being equal, DNN optimization with larger batch sizes leads to less well implicitly regularized models, and it provides an explanation for the generalization gap phenomenon.

Newton-MR: Newton's Method Without Smoothness or Convexity

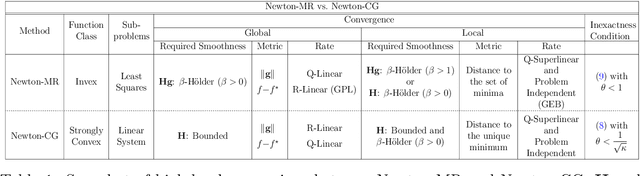

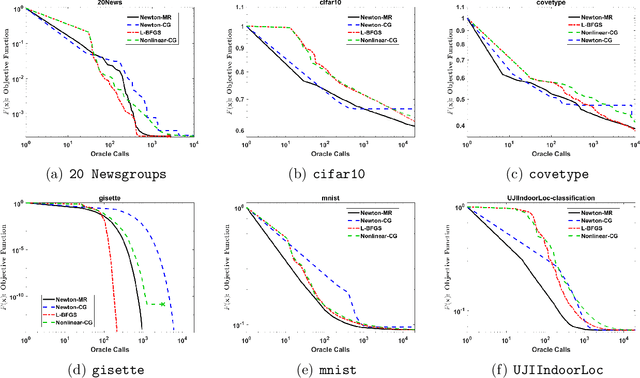

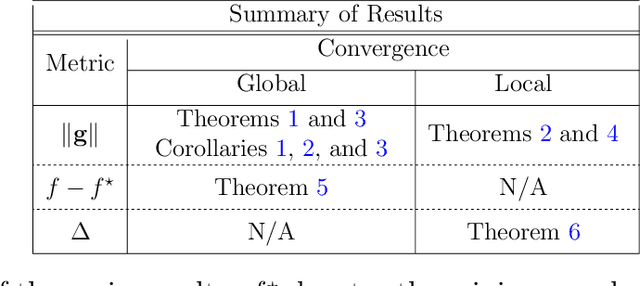

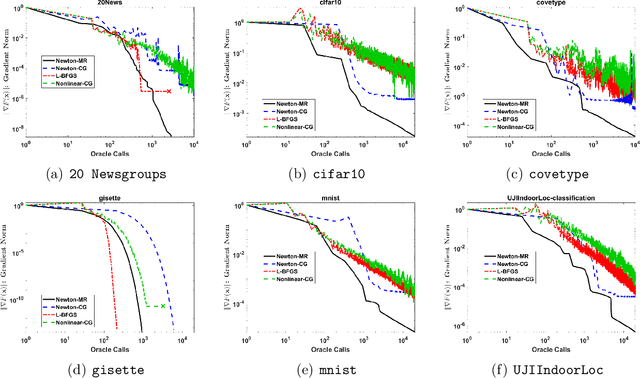

Sep 30, 2018 · Fred Roosta, Yang Liu, Peng Xu, Michael W. Mahoney

Establishing global convergence of the classical Newton's method has long required (strong) convexity assumptions, which has restricted the application range of Newton's method in its classical form. Hence, many Newton-type variants have been proposed which aim at extending the classical Newton's method beyond (strongly) convex problems. Furthermore, as a common denominator, the analysis of almost all these methods relies heavily on Lipschitz continuity assumptions on the gradient and Hessian. In fact, it is widely believed that in the absence of a well-behaved and continuous Hessian, the application of curvature can hurt more than it can help. Here, we show that two seemingly simple modifications of the classical Newton's method result in an algorithm, called Newton-MR, which can readily be applied to invex problems. Newton-MR appears almost indistinguishable from the classical Newton's method, yet it offers a diverse range of algorithmic and theoretical advantages. In particular, not only does Newton-MR's application extend far beyond convexity, but it is also more suitable than the classical Newton's method for (strongly) convex problems. Furthermore, by introducing a much weaker notion of joint regularity of Hessian and gradient, we show that the global convergence of Newton-MR can be established even in the absence of continuity assumptions on the gradient and/or Hessian. We further obtain local convergence guarantees for Newton-MR and show that our local analysis indeed generalizes that of the classical Newton's method. Specifically, our analysis does not make use of the notion of an isolated minimum, which is required for the local convergence analysis of the classical Newton's method.
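A minimal sketch of a Newton-MR-style iteration, under our reading of the abstract: the update uses the minimum-norm least-squares solution of H p = -g (so a singular Hessian does not break the step), and the step size is chosen by backtracking on the squared gradient norm ||g||^2 rather than on f. Here `np.linalg.lstsq` with a fixed cutoff `rcond=1e-10` stands in for a MINRES-type inner solver, and the line-search constants are assumptions. The usage example is a rank-deficient least-squares problem, which has a singular Hessian and no isolated minimum, so classical Newton (which needs H^{-1}) is undefined there.

```python
import numpy as np

def newton_mr(grad, hess, x0, tol=1e-8, max_iter=100, rho=1e-4):
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        g = grad(x)
        if np.linalg.norm(g) < tol:
            break
        H = hess(x)
        # Minimum-norm least-squares solution of H p = -g, i.e. p = -pinv(H) g.
        p = np.linalg.lstsq(H, -g, rcond=1e-10)[0]
        # Backtracking on ||g||^2, whose directional derivative along p is 2 <H g, p>.
        slope = 2.0 * np.dot(H @ g, p)
        g_sq = np.dot(g, g)
        alpha = 1.0
        while alpha > 1e-10:
            g_new = grad(x + alpha * p)
            if np.dot(g_new, g_new) <= g_sq + rho * alpha * slope:
                break
            alpha *= 0.5
        x = x + alpha * p
    return x

# Usage: f(x) = 0.5 * ||A x - b||^2 with a rank-1 A, so the Hessian A^T A is singular.
A = np.array([[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]])
b = np.array([1.0, 2.0, 4.0])
x = newton_mr(lambda x: A.T @ (A @ x - b), lambda x: A.T @ A, np.zeros(2))
print(x)  # minimum-norm minimizer, [17/70, 34/70]
```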

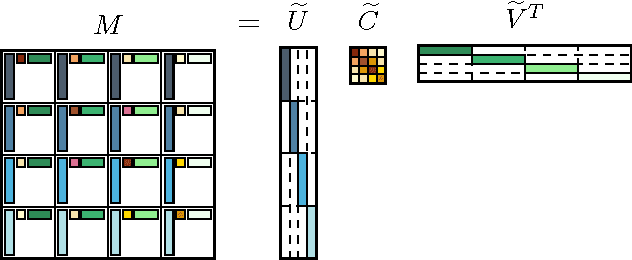

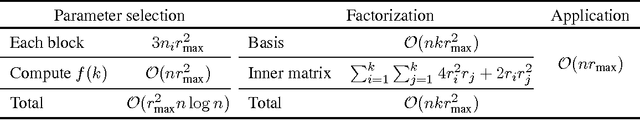

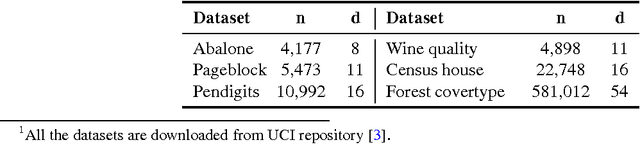

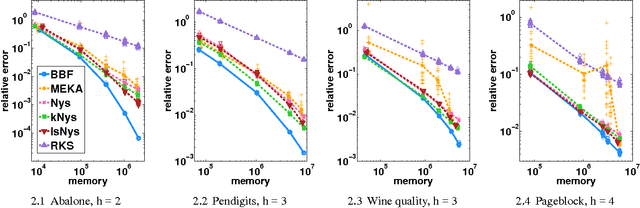

Block Basis Factorization for Scalable Kernel Matrix Evaluation

Sep 12, 2018 · Ruoxi Wang, Yingzhou Li, Michael W. Mahoney, Eric Darve

Kernel methods are widespread in machine learning; however, they are limited by the quadratic complexity of the construction, application, and storage of kernel matrices. Low-rank matrix approximation algorithms are widely used to address this problem and reduce the arithmetic and storage cost. However, we observed that for some datasets with wide intra-class variability, the optimal kernel parameter for smaller classes yields a matrix that is less well approximated by low-rank methods. In this paper, we propose an efficient structured low-rank approximation method, the Block Basis Factorization (BBF), and its fast construction algorithm to approximate radial basis function (RBF) kernel matrices. Our approach requires linear memory and a linear number of floating-point operations. BBF works for a wide range of kernel bandwidth parameters and significantly extends the domain of applicability of low-rank approximation methods. Our empirical results demonstrate its stability and its superiority over state-of-the-art kernel approximation algorithms.
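The form of the approximation can be illustrated with a dense toy version: given a partition of the points into clusters, each cluster i gets its own orthonormal basis U_i, and every kernel block is approximated as K_ij ~ U_i C_ij U_j^T with C_ij = U_i^T K_ij U_j, so that K ~ U C U^T with block-diagonal U. This sketch builds each basis from a truncated SVD of the full row block and forms K explicitly, so its cost is quadratic; it only shows the structure and is not the paper's linear-cost construction algorithm. The Gaussian kernel, the fixed per-cluster rank, and all names are assumptions.

```python
import numpy as np

def rbf_kernel(X, Y, bandwidth):
    """Gaussian RBF kernel exp(-||x - y||^2 / (2 h^2))."""
    sq = np.sum(X**2, axis=1)[:, None] + np.sum(Y**2, axis=1)[None, :] - 2.0 * X @ Y.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * bandwidth**2))

def block_basis_approx(X, labels, rank, bandwidth):
    """Return (K, K_approx) with K_approx[I, J] = U_I (U_I^T K[I, J] U_J) U_J^T."""
    clusters = [np.flatnonzero(labels == c) for c in np.unique(labels)]
    K = rbf_kernel(X, X, bandwidth)
    bases = []
    for idx in clusters:
        # Orthonormal basis for the column space of the row block K[idx, :].
        U, _, _ = np.linalg.svd(K[idx, :], full_matrices=False)
        bases.append(U[:, :rank])
    K_approx = np.empty_like(K)
    for U_i, I in zip(bases, clusters):
        for U_j, J in zip(bases, clusters):
            C_ij = U_i.T @ K[np.ix_(I, J)] @ U_j  # small "core" block
            K_approx[np.ix_(I, J)] = U_i @ C_ij @ U_j.T
    return K, K_approx

rng = np.random.default_rng(0)
X = rng.standard_normal((60, 2))
labels = np.repeat([0, 1, 2], 20)
K, K_r = block_basis_approx(X, labels, rank=5, bandwidth=1.0)
print(np.linalg.norm(K - K_r) / np.linalg.norm(K))  # relative Frobenius error
```

With a full per-cluster rank (here 20) the factorization is exact, and shrinking the rank trades accuracy for a smaller core matrix C.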

GIANT: Globally Improved Approximate Newton Method for Distributed Optimization

Sep 11, 2018 · Shusen Wang, Farbod Roosta-Khorasani, Peng Xu, Michael W. Mahoney

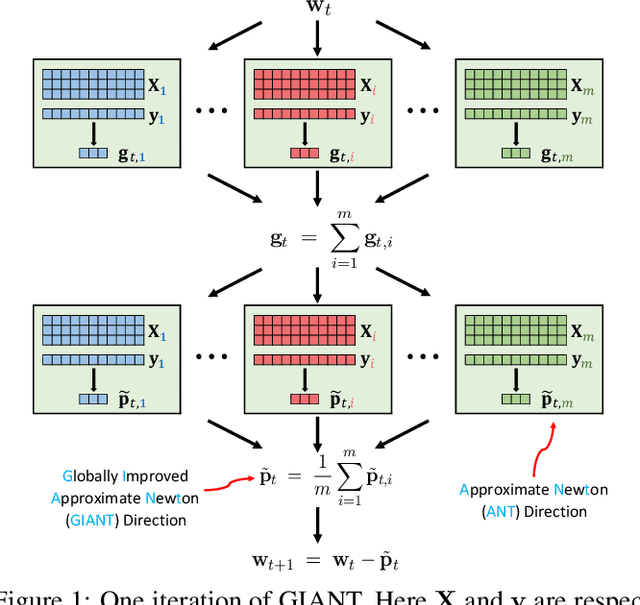

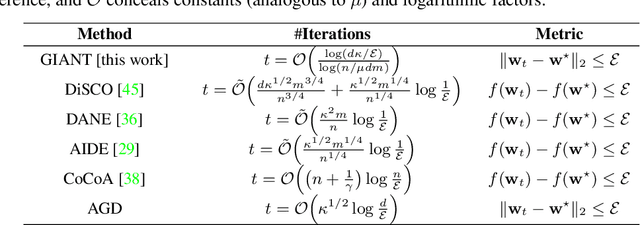

For distributed computing environments, we consider the empirical risk minimization problem and propose a distributed and communication-efficient Newton-type optimization method. At every iteration, each worker locally finds an Approximate NewTon (ANT) direction, which is sent to the main driver. The main driver then averages all the ANT directions received from workers to form a Globally Improved ANT (GIANT) direction. GIANT is highly communication efficient and naturally exploits the trade-offs between local computations and global communications, in that more local computation results in fewer overall rounds of communication. Theoretically, we show that GIANT enjoys an improved convergence rate compared with first-order methods and existing distributed Newton-type methods. Further, and in sharp contrast with many existing distributed Newton-type methods as well as popular first-order methods, a highly advantageous practical feature of GIANT is that it involves only one tuning parameter. We conduct large-scale experiments on a computer cluster and empirically demonstrate the superior performance of GIANT.
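A minimal sketch of the GIANT iteration for the special case of ridge regression, assuming the data are split evenly across simulated workers. The driver first forms the exact global gradient (one communication round), each worker then solves a Newton system with its own local Hessian to get an ANT direction, and the driver averages these into the GIANT direction. We use a direct solve and a unit step where a practical implementation may use an iterative local solver and a line search; the function name and data are hypothetical.

```python
import numpy as np

def giant_ridge(blocks, lam, iters=50):
    """Minimize (1/(2n)) ||A w - b||^2 + (lam/2) ||w||^2 with data split into blocks."""
    n = sum(A.shape[0] for A, _ in blocks)
    d = blocks[0][0].shape[1]
    w = np.zeros(d)
    for _ in range(iters):
        # Round 1: aggregate local gradient contributions into the exact global gradient.
        g = sum(A.T @ (A @ w - b) for A, b in blocks) / n + lam * w
        # Round 2: each worker applies the inverse of its local Hessian (ANT direction).
        ants = [np.linalg.solve(A.T @ A / A.shape[0] + lam * np.eye(d), g) for A, _ in blocks]
        # Driver: average the ANT directions (GIANT direction) and take a unit step.
        w = w - np.mean(ants, axis=0)
    return w

rng = np.random.default_rng(0)
A = rng.standard_normal((4000, 5))
b = A @ np.arange(1.0, 6.0) + 0.1 * rng.standard_normal(4000)
blocks = list(zip(np.array_split(A, 4), np.array_split(b, 4)))
w = giant_ridge(blocks, lam=0.1)
w_exact = np.linalg.solve(A.T @ A / 4000 + 0.1 * np.eye(5), A.T @ b / 4000)
print(np.max(np.abs(w - w_exact)))
```

The more samples each worker holds, the closer its local Hessian is to the global one, which is the local-computation versus communication trade-off described in the abstract.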

Error Estimation for Randomized Least-Squares Algorithms via the Bootstrap

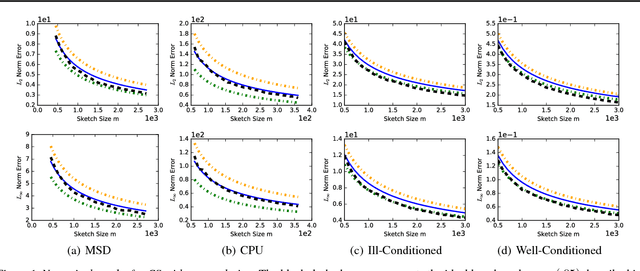

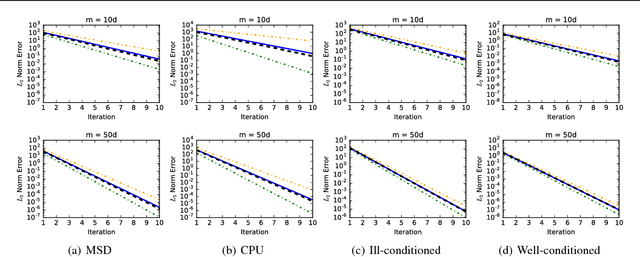

Sep 06, 2018 · Miles E. Lopes, Shusen Wang, Michael W. Mahoney

Over the course of the past decade, a variety of randomized algorithms have been proposed for computing approximate least-squares (LS) solutions in large-scale settings. A longstanding practical issue is that, for any given input, the user rarely knows the actual error of an approximate solution (relative to the exact solution). Likewise, it is difficult for the user to know precisely how much computation is needed to achieve the desired error tolerance. Consequently, the user often appeals to worst-case error bounds that tend to offer only qualitative guidance. As a more practical alternative, we propose a bootstrap method to compute a posteriori error estimates for randomized LS algorithms. These estimates permit the user to numerically assess the error of a given solution, and to predict how much work is needed to improve a "preliminary" solution. In addition, we provide theoretical consistency results for the method, which are the first such results in this context (to the best of our knowledge). From a practical standpoint, the method also has considerable flexibility, insofar as it can be applied to several popular sketching algorithms, as well as a variety of error metrics. Moreover, the extra step of error estimation does not add much cost to an underlying sketching algorithm. Finally, we demonstrate the effectiveness of the method with empirical results.
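A minimal sketch of the bootstrap idea, as we understand it: after solving a sketched problem (SA, Sb) to get x_tilde, resample the m sketched rows with replacement, re-solve, and take a high quantile of the distances ||x_star - x_tilde|| as an a posteriori estimate of the error of x_tilde relative to the exact LS solution. The Gaussian sketch, the l2 error metric, the number of bootstrap samples, and all names are assumptions; the abstract notes that several sketching algorithms and error metrics are covered.

```python
import numpy as np

def sketch_and_solve(A, b, m, rng):
    """Gaussian sketch S (m x n, scaled by 1/sqrt(m)), then solve min ||S A x - S b||."""
    S = rng.standard_normal((m, A.shape[0])) / np.sqrt(m)
    SA, Sb = S @ A, S @ b
    return SA, Sb, np.linalg.lstsq(SA, Sb, rcond=None)[0]

def bootstrap_error(SA, Sb, x_tilde, n_boot=200, alpha=0.05, rng=None):
    """(1 - alpha) quantile of ||x_star - x_tilde|| over bootstrap resamples of sketched rows."""
    rng = np.random.default_rng() if rng is None else rng
    m = SA.shape[0]
    errs = np.empty(n_boot)
    for k in range(n_boot):
        idx = rng.integers(0, m, size=m)  # sample m sketched rows with replacement
        x_star = np.linalg.lstsq(SA[idx], Sb[idx], rcond=None)[0]
        errs[k] = np.linalg.norm(x_star - x_tilde)
    return float(np.quantile(errs, 1.0 - alpha))

rng = np.random.default_rng(0)
A = rng.standard_normal((5000, 10))
b = A @ rng.standard_normal(10) + rng.standard_normal(5000)
SA, Sb, x_tilde = sketch_and_solve(A, b, m=500, rng=rng)
est = bootstrap_error(SA, Sb, x_tilde, rng=rng)
x_opt = np.linalg.lstsq(A, b, rcond=None)[0]
print(est, np.linalg.norm(x_tilde - x_opt))  # estimate vs. actual error
```

The extra cost is n_boot small least-squares solves of size m x d, which is cheap next to forming S A when m is much smaller than n.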

Distributed Second-order Convex Optimization

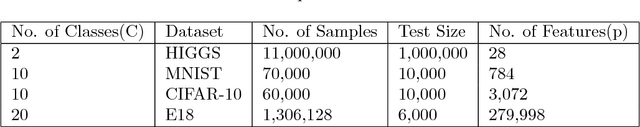

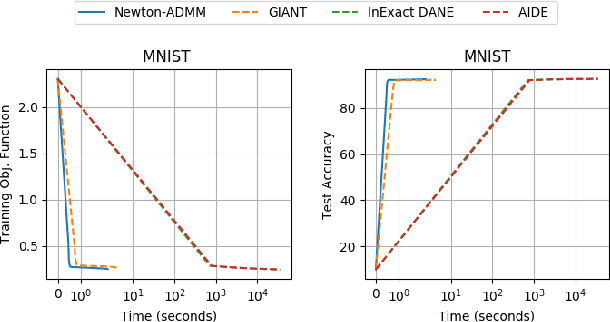

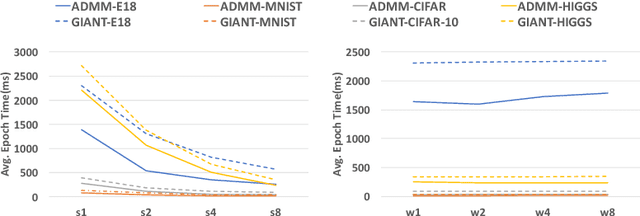

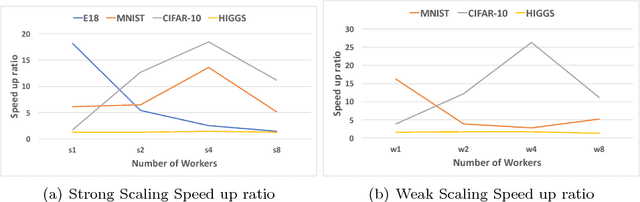

Jul 18, 2018 · Chih-Hao Fang, Sudhir B Kylasa, Farbod Roosta-Khorasani, Michael W. Mahoney, Ananth Grama

Convex optimization problems arise frequently in diverse machine learning (ML) applications. First-order methods, i.e., those that rely solely on gradient information, are most commonly used to solve these problems. This choice is motivated by their simplicity and low per-iteration cost. Second-order methods that rely on curvature information through the dense Hessian matrix have, thus far, proven to be prohibitively expensive at scale, in terms of both computational and memory requirements. We present a novel multi-GPU distributed formulation of a second-order (Newton-type) solver for convex finite-sum minimization problems for multi-class classification. Our distributed formulation relies on the Alternating Direction Method of Multipliers (ADMM), which requires only one round of communication per iteration, significantly reducing communication overheads while incurring minimal convergence overhead. By leveraging the computational capabilities of GPUs, we demonstrate that the per-iteration costs of Newton-type methods can be significantly reduced to be on par with, if not better than, those of state-of-the-art first-order alternatives. Given their significantly faster convergence rates, we demonstrate that our methods can process large datasets in much shorter time (orders of magnitude shorter in many cases) than existing first- and second-order methods, while yielding similar test accuracy.
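The one-round-per-iteration communication pattern can be illustrated with a generic consensus-ADMM sketch (not the authors' multi-GPU solver) on an L2-regularized least-squares finite sum split across simulated workers. Each worker minimizes its local augmented Lagrangian; because the local objective here is quadratic, one Newton step solves that subproblem exactly, which is our simplification of a Newton-type local solver. The only communication per iteration is averaging x_i + u_i into the consensus variable z. The penalty `rho`, the iteration count, and all names are assumptions.

```python
import numpy as np

def consensus_admm_ridge(blocks, lam, rho=0.25, iters=300):
    """Minimize sum_i f_i(x), f_i(x) = (1/(2n)) ||A_i x - b_i||^2 + (lam/(2N)) ||x||^2."""
    n = sum(A.shape[0] for A, _ in blocks)
    N = len(blocks)
    d = blocks[0][0].shape[1]
    z = np.zeros(d)
    us = [np.zeros(d) for _ in blocks]
    for _ in range(iters):
        xs = []
        for (A, b), u in zip(blocks, us):
            # Newton step on f_i(x) + (rho/2) ||x - z + u||^2; exact for this quadratic.
            H = A.T @ A / n + (lam / N + rho) * np.eye(d)
            xs.append(np.linalg.solve(H, A.T @ b / n + rho * (z - u)))
        # The single communication round: average x_i + u_i into the consensus variable.
        z = np.mean([x + u for x, u in zip(xs, us)], axis=0)
        # Scaled dual updates, computed locally on each worker.
        us = [u + x - z for x, u in zip(xs, us)]
    return z

rng = np.random.default_rng(0)
A = rng.standard_normal((2000, 5))
b = A @ np.arange(1.0, 6.0) + 0.1 * rng.standard_normal(2000)
blocks = list(zip(np.array_split(A, 4), np.array_split(b, 4)))
z = consensus_admm_ridge(blocks, lam=0.1)
x_central = np.linalg.solve(A.T @ A / 2000 + 0.1 * np.eye(5), A.T @ b / 2000)
print(np.max(np.abs(z - x_central)))
```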

Hessian-based Analysis of Large Batch Training and Robustness to Adversaries

Jun 18, 2018 · Zhewei Yao, Amir Gholami, Qi Lei, Kurt Keutzer, Michael W. Mahoney

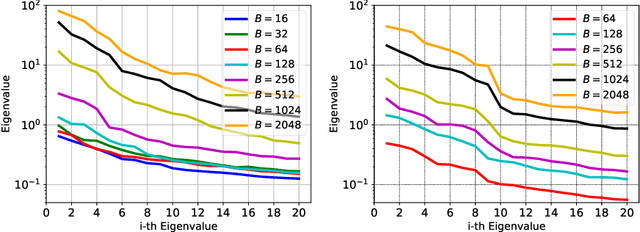

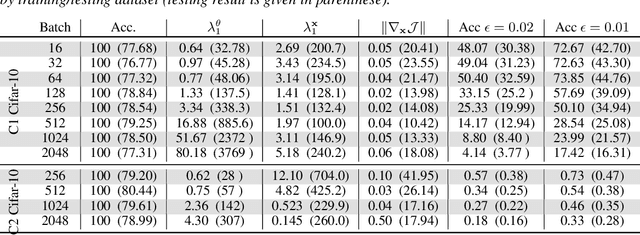

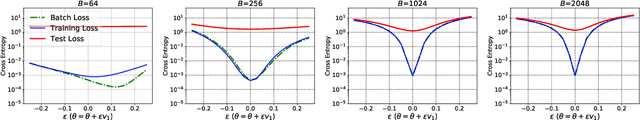

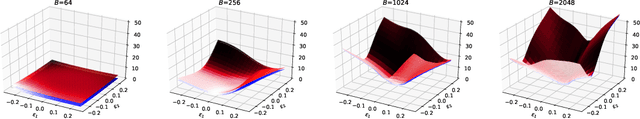

Training Neural Networks with large batch sizes has been shown to incur a loss in accuracy with current methods. The precise underlying reasons for this are still not completely understood. Here, we study large batch size training through the lens of the Hessian operator and robust optimization. In particular, we perform a Hessian-based study to analyze how the landscape of the loss functional differs for large batch size training. We compute the true Hessian spectrum, without approximation, by back-propagating the second derivative. Our results on multiple networks show that, when training at large batch sizes, one tends to stop at points in the parameter space with a noticeably larger Hessian spectrum, i.e., where the eigenvalues of the Hessian are much larger. We then study how batch size affects the robustness of the model in the face of adversarial attacks. All the results show that models trained with large batches are more susceptible to adversarial attacks than models trained with small batch sizes. Furthermore, we prove a theoretical result showing that the problem of finding an adversarial perturbation is a saddle-free optimization problem. Finally, we show empirical results demonstrating that adversarial training leads to areas with a smaller Hessian spectrum. We present detailed experiments with five different network architectures tested on the MNIST, CIFAR-10, and CIFAR-100 datasets.
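The top of the Hessian spectrum can be estimated without forming the Hessian, by power iteration on Hessian-vector products. This sketch uses an L2-regularized logistic-regression loss, whose Hessian-vector product has the closed form X^T (D (X v)) / n + lam v with D = p(1 - p); in a deep network that product would instead come from back-propagating through the gradient with automatic differentiation, which is not shown. The model, data, and names are hypothetical, and the code only shows how such an eigenvalue is measured, not the batch-size effect itself.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def logistic_hvp(w, X, v, lam):
    """Hessian-vector product of the mean logistic loss plus (lam/2) ||w||^2."""
    p = sigmoid(X @ w)
    d = p * (1.0 - p)
    return X.T @ (d * (X @ v)) / X.shape[0] + lam * v

def top_hessian_eigenvalue(hvp, dim, iters=500, rng=None):
    """Power iteration using only Hessian-vector products; returns a Rayleigh quotient."""
    rng = np.random.default_rng(0) if rng is None else rng
    v = rng.standard_normal(dim)
    v /= np.linalg.norm(v)
    eig = 0.0
    for _ in range(iters):
        hv = hvp(v)
        eig = float(v @ hv)  # Rayleigh quotient for the current unit vector
        v = hv / np.linalg.norm(hv)
    return eig

rng = np.random.default_rng(1)
X = rng.standard_normal((200, 5)) + 1.0
w = 0.1 * rng.standard_normal(5)
lam = 1e-3
lam_max = top_hessian_eigenvalue(lambda v: logistic_hvp(w, X, v, lam), dim=5)
print(lam_max)
```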