Michael C. Mozer

Aligning Machine and Human Visual Representations across Abstraction Levels

Sep 10, 2024

Abstract:Deep neural networks have achieved success across a wide range of applications, including as models of human behavior in vision tasks. However, neural network training and human learning differ in fundamental ways, and neural networks often fail to generalize as robustly as humans do, raising questions regarding the similarity of their underlying representations. What is missing for modern learning systems to exhibit more human-like behavior? We highlight a key misalignment between vision models and humans: whereas human conceptual knowledge is hierarchically organized from fine- to coarse-scale distinctions, model representations do not accurately capture all these levels of abstraction. To address this misalignment, we first train a teacher model to imitate human judgments, then transfer human-like structure from its representations into pretrained state-of-the-art vision foundation models. These human-aligned models more accurately approximate human behavior and uncertainty across a wide range of similarity tasks, including a new dataset of human judgments spanning multiple levels of semantic abstraction. They also perform better on a diverse set of machine learning tasks, increasing generalization and out-of-distribution robustness. Thus, infusing neural networks with additional human knowledge yields a best-of-both-worlds representation that is both more consistent with human cognition and more practically useful, paving the way toward more robust, interpretable, and human-like artificial intelligence systems.

Reawakening knowledge: Anticipatory recovery from catastrophic interference via structured training

Mar 14, 2024

Abstract:We explore the training dynamics of neural networks in a structured non-IID setting where documents are presented cyclically in a fixed, repeated sequence. Typically, networks suffer from catastrophic interference when training on a sequence of documents; however, we discover a curious and remarkable property of LLMs fine-tuned sequentially in this setting: they exhibit anticipatory behavior, recovering from the forgetting on documents before encountering them again. The behavior emerges and becomes more robust as the architecture scales up its number of parameters. Through comprehensive experiments and visualizations, we uncover new insights into training over-parameterized networks in structured environments.

On the Foundations of Shortcut Learning

Oct 24, 2023

Abstract:Deep-learning models can extract a rich assortment of features from data. Which features a model uses depends not only on predictivity -- how reliably a feature indicates train-set labels -- but also on availability -- how easily the feature can be extracted, or leveraged, from inputs. The literature on shortcut learning has noted examples in which models privilege one feature over another, for example texture over shape and image backgrounds over foreground objects. Here, we test hypotheses about which input properties are more available to a model, and systematically study how predictivity and availability interact to shape models' feature use. We construct a minimal, explicit generative framework for synthesizing classification datasets with two latent features that vary in predictivity and in factors we hypothesize to relate to availability, and quantify a model's shortcut bias -- its over-reliance on the shortcut (more available, less predictive) feature at the expense of the core (less available, more predictive) feature. We find that linear models are relatively unbiased, but introducing a single hidden layer with ReLU or Tanh units yields a bias. Our empirical findings are consistent with a theoretical account based on Neural Tangent Kernels. Finally, we study how models used in practice trade off predictivity and availability in naturalistic datasets, discovering availability manipulations which increase models' degree of shortcut bias. Taken together, these findings suggest that the propensity to learn shortcut features is a fundamental characteristic of deep nonlinear architectures warranting systematic study given its role in shaping how models solve tasks.

Can Neural Network Memorization Be Localized?

Jul 18, 2023

Abstract:Recent efforts at explaining the interplay of memorization and generalization in deep overparametrized networks have posited that neural networks $\textit{memorize}$ "hard" examples in the final few layers of the model. Memorization refers to the ability to correctly predict on $\textit{atypical}$ examples of the training set. In this work, we show that rather than being confined to individual layers, memorization is a phenomenon confined to a small set of neurons in various layers of the model. First, via three experimental sources of converging evidence, we find that most layers are redundant for the memorization of examples and the layers that contribute to example memorization are, in general, not the final layers. The three sources are $\textit{gradient accounting}$ (measuring the contribution to the gradient norms from memorized and clean examples), $\textit{layer rewinding}$ (replacing specific model weights of a converged model with previous training checkpoints), and $\textit{retraining}$ (training rewound layers only on clean examples). Second, we ask a more generic question: can memorization be localized $\textit{anywhere}$ in a model? We discover that memorization is often confined to a small number of neurons or channels (around 5) of the model. Based on these insights we propose a new form of dropout -- $\textit{example-tied dropout}$ -- that enables us to direct the memorization of examples to an a priori determined set of neurons. By dropping out these neurons, we are able to reduce the accuracy on memorized examples from $100\%$ to $3\%$, while also reducing the generalization gap.
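The idea of tying dropout to individual examples can be sketched as a mask over hidden units. This is only one plausible reading of the abstract, not the paper's implementation: the function name `example_tied_mask`, the split into always-on "shared" units and example-specific "private" units, and the use of the example id as a random seed are all assumptions made for illustration.

```python
import random


def example_tied_mask(example_id, n_units, n_shared, n_private, train=True):
    """Return a 0/1 mask over n_units hidden units for one example.

    Assumed layout (hypothetical, for illustration only):
      - the first n_shared units are active for every example;
      - during training, each example additionally activates a fixed,
        example-specific subset of n_private units from the remaining ones;
      - at test time the remaining units are all dropped.
    """
    mask = [1] * n_shared + [0] * (n_units - n_shared)
    if train:
        # Seeding with the example id makes the subset identical in every epoch,
        # which is what ties the units to the example.
        rng = random.Random(example_id)
        for idx in rng.sample(range(n_shared, n_units), n_private):
            mask[idx] = 1
    return mask


# Usage: the same example always receives the same training mask.
train_mask = example_tied_mask(example_id=7, n_units=16, n_shared=8, n_private=2)
test_mask = example_tied_mask(example_id=7, n_units=16, n_shared=8, n_private=2, train=False)
```

Multiplying a layer's activations by `train_mask` during training and by `test_mask` at inference would, under these assumptions, route whatever is specific to a single example into units that are switched off at test time.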

Spotlight Attention: Robust Object-Centric Learning With a Spatial Locality Prior

May 31, 2023

Abstract:The aim of object-centric vision is to construct an explicit representation of the objects in a scene. This representation is obtained via a set of interchangeable modules called \emph{slots} or \emph{object files} that compete for local patches of an image. The competition has a weak inductive bias to preserve spatial continuity; consequently, one slot may claim patches scattered diffusely throughout the image. In contrast, the inductive bias of human vision is strong, to the degree that attention has classically been described with a spotlight metaphor. We incorporate a spatial-locality prior into state-of-the-art object-centric vision models and obtain significant improvements in segmenting objects in both synthetic and real-world datasets. Similar to human visual attention, the combination of image content and spatial constraints yields robust unsupervised object-centric learning, including less sensitivity to model hyperparameters.

Layer-Stack Temperature Scaling

Nov 18, 2022

Abstract:Recent works demonstrate that early layers in a neural network contain useful information for prediction. Inspired by this, we show that extending temperature scaling across all layers improves both calibration and accuracy. We call this procedure "layer-stack temperature scaling" (LATES). Informally, LATES grants each layer a weighted vote during inference. We evaluate it on five popular convolutional neural network architectures both in- and out-of-distribution and observe a consistent improvement over temperature scaling in terms of accuracy, calibration, and AUC. All conclusions are supported by comprehensive statistical analyses. Since LATES neither retrains the architecture nor introduces many more parameters, its advantages can be reaped without requiring additional data beyond what is used in temperature scaling. Finally, we show that combining LATES with Monte Carlo Dropout matches state-of-the-art results on CIFAR10/100.
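As a rough illustration of "temperature scaling across all layers" with a "weighted vote" per layer, the sketch below divides each layer's class logits by its own temperature, weights them, and sums before the softmax. The abstract does not give the parameterization, so this is an assumption rather than the LATES procedure itself: `layer_weighted_vote`, the per-layer logits (imagined as coming from hypothetical linear heads attached to each layer), and the example temperatures and weights are all made up for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax of logits divided by a temperature (T > 1 flattens the distribution)."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def layer_weighted_vote(per_layer_logits, temperatures, weights):
    """Combine class logits from several layers into one probability vector.

    Each layer's logits are divided by that layer's temperature and multiplied
    by that layer's weight; the results are summed and passed through a softmax.
    """
    n_classes = len(per_layer_logits[0])
    combined = [0.0] * n_classes
    for logits, temp, weight in zip(per_layer_logits, temperatures, weights):
        for c in range(n_classes):
            combined[c] += weight * logits[c] / temp
    return softmax(combined)


# Usage with two layers and three classes (all numbers are illustrative).
probs = layer_weighted_vote(
    per_layer_logits=[[2.0, 0.5, 0.1], [1.0, 1.2, 0.3]],
    temperatures=[1.5, 1.0],
    weights=[0.3, 0.7],
)
```

With a single layer, weight 1, and one temperature, this reduces to ordinary temperature scaling, which is the baseline the abstract compares against. In practice the temperatures and weights would be fit on held-out data; that fitting step is omitted here.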

An Empirical Study on Clustering Pretrained Embeddings: Is Deep Strictly Better?

Nov 09, 2022

Abstract:Recent research in clustering face embeddings has found that unsupervised, shallow, heuristic-based methods -- including $k$-means and hierarchical agglomerative clustering -- underperform supervised, deep, inductive methods. While the reported improvements are indeed impressive, experiments are mostly limited to face datasets, where the clustered embeddings are highly discriminative or well-separated by class (Recall@1 above 90% and often nearing ceiling), and the experimental methodology seemingly favors the deep methods. We conduct a large-scale empirical study of 17 clustering methods across three datasets and obtain several robust findings. Notably, deep methods are surprisingly fragile for embeddings with more uncertainty, where they match or even perform worse than shallow, heuristic-based methods. When embeddings are highly discriminative, deep methods do outperform the baselines, consistent with past results, but the margin between methods is much smaller than previously reported. We believe our benchmarks broaden the scope of supervised clustering methods beyond the face domain and can serve as a foundation on which these methods could be improved. To enable reproducibility, we include all necessary details in the appendices, and plan to release the code.

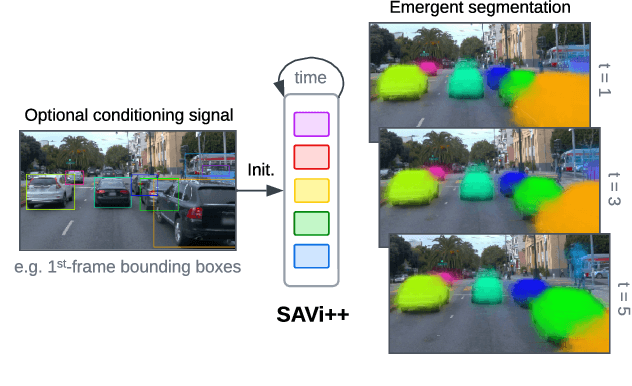

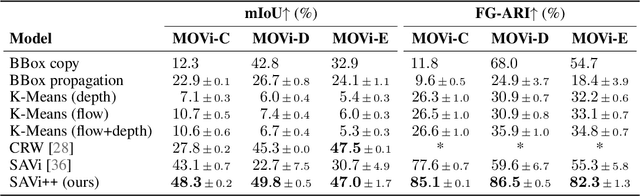

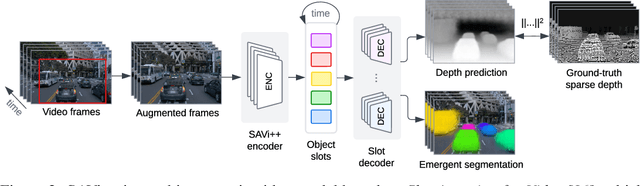

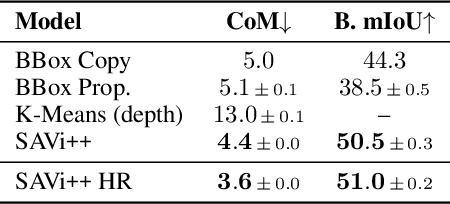

SAVi++: Towards End-to-End Object-Centric Learning from Real-World Videos

Jun 15, 2022

Abstract:The visual world can be parsimoniously characterized in terms of distinct entities with sparse interactions. Discovering this compositional structure in dynamic visual scenes has proven challenging for end-to-end computer vision approaches unless explicit instance-level supervision is provided. Slot-based models leveraging motion cues have recently shown great promise in learning to represent, segment, and track objects without direct supervision, but they still fail to scale to complex real-world multi-object videos. In an effort to bridge this gap, we take inspiration from human development and hypothesize that information about scene geometry in the form of depth signals can facilitate object-centric learning. We introduce SAVi++, an object-centric video model which is trained to predict depth signals from a slot-based video representation. By further leveraging best practices for model scaling, we are able to train SAVi++ to segment complex dynamic scenes recorded with moving cameras, containing both static and moving objects of diverse appearance on naturalistic backgrounds, without the need for segmentation supervision. Finally, we demonstrate that by using sparse depth signals obtained from LiDAR, SAVi++ is able to learn emergent object segmentation and tracking from videos in the real-world Waymo Open dataset.

Overcoming Temptation: Incentive Design For Intertemporal Choice

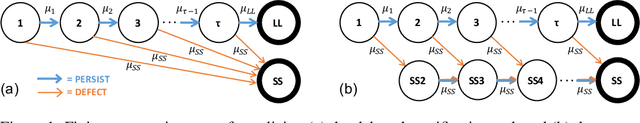

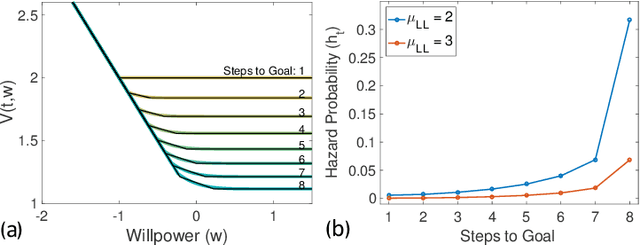

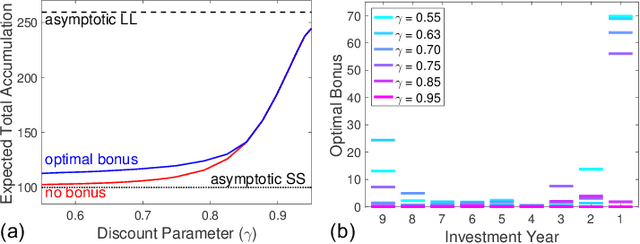

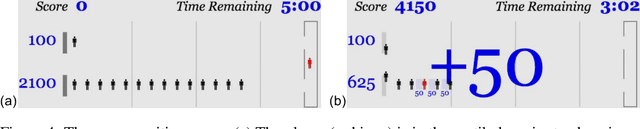

Mar 14, 2022

Abstract:Individuals are often faced with temptations that can lead them astray from long-term goals. We're interested in developing interventions that steer individuals toward making good initial decisions and then maintaining those decisions over time. In the realm of financial decision making, a particularly successful approach is the prize-linked savings account: individuals are incentivized to make deposits by tying deposits to a periodic lottery that awards bonuses to the savers. Although these lotteries have been very effective in motivating savers across the globe, they are a one-size-fits-all solution. We investigate whether customized bonuses can be more effective. We formalize a delayed-gratification task as a Markov decision problem and characterize individuals as rational agents subject to temporal discounting, a cost associated with effort, and fluctuations in willpower. Our theory is able to explain key behavioral findings in intertemporal choice. We created an online delayed-gratification game in which the player scores points by selecting a queue to wait in and then performing a series of actions to advance to the front. Data collected from the game is fit to the model, and the instantiated model is then used to optimize predicted player performance over a space of incentives. We demonstrate that customized incentive structures can improve an individual's goal-directed decision making.

Head2Toe: Utilizing Intermediate Representations for Better Transfer Learning

Jan 10, 2022

Abstract:Transfer-learning methods aim to improve performance in a data-scarce target domain using a model pretrained on a data-rich source domain. A cost-efficient strategy, linear probing, involves freezing the source model and training a new classification head for the target domain. This strategy is outperformed by a more costly but state-of-the-art method -- fine-tuning all parameters of the source model to the target domain -- possibly because fine-tuning allows the model to leverage useful information from intermediate layers which is otherwise discarded by the later pretrained layers. We explore the hypothesis that these intermediate layers might be directly exploited. We propose a method, Head-to-Toe probing (Head2Toe), that selects features from all layers of the source model to train a classification head for the target domain. In evaluations on VTAB-1k, Head2Toe matches performance obtained with fine-tuning on average while reducing training and storage cost a hundredfold or more, but critically, for out-of-distribution transfer, Head2Toe outperforms fine-tuning.
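The contrast between linear probing and probing from all layers comes down to which frozen features the new classification head sees. The sketch below is a minimal illustration under stated assumptions, not the Head2Toe implementation: activations are represented as plain nested lists, all function names are hypothetical, and the variance-based `select_top_k` is only a stand-in for whatever feature-selection criterion the paper uses.

```python
def mean_pool(activation):
    """Average one layer's activation over positions, giving one value per channel.

    `activation` is a list of positions, each a list of channel values.
    """
    n_pos = len(activation)
    n_ch = len(activation[0])
    return [sum(pos[c] for pos in activation) / n_pos for c in range(n_ch)]


def linear_probe_features(layer_activations):
    """Linear probing: the head sees only the last layer's pooled features."""
    return mean_pool(layer_activations[-1])


def all_layer_features(layer_activations):
    """All-layer probing: concatenate pooled features from every layer."""
    feats = []
    for act in layer_activations:
        feats.extend(mean_pool(act))
    return feats


def select_top_k(feature_matrix, k):
    """Return indices of the k highest-variance features across examples.

    Variance is a placeholder score chosen for simplicity (an assumption).
    """
    n = len(feature_matrix)
    d = len(feature_matrix[0])
    scores = []
    for j in range(d):
        col = [row[j] for row in feature_matrix]
        mu = sum(col) / n
        scores.append(sum((x - mu) ** 2 for x in col) / n)
    ranked = sorted(range(d), key=lambda j: -scores[j])
    return sorted(ranked[:k])


# Usage: a toy "network" with two layers (2 channels, then 1 channel).
acts = [[[1.0, 3.0], [3.0, 5.0]], [[0.0], [2.0]]]
last_only = linear_probe_features(acts)
everything = all_layer_features(acts)
```

In both cases the backbone stays frozen and only a linear head is trained on the chosen features, which is why the cost stays far below full fine-tuning.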
