Jingkuan Song

MovieFactory: Automatic Movie Creation from Text using Large Generative Models for Language and Images

Jun 12, 2023

Abstract: In this paper, we present MovieFactory, a powerful framework to generate cinematic-picture (3072$\times$1280), film-style (multi-scene), and multi-modality (sounding) movies on the demand of natural languages. As the first fully automated movie generation model to the best of our knowledge, our approach empowers users to create captivating movies with smooth transitions using simple text inputs, surpassing existing methods that produce soundless videos limited to a single scene of modest quality. To facilitate this distinctive functionality, we leverage ChatGPT to expand user-provided text into detailed sequential scripts for movie generation. Then we bring scripts to life visually and acoustically through vision generation and audio retrieval. To generate videos, we extend the capabilities of a pretrained text-to-image diffusion model through a two-stage process. Firstly, we employ spatial finetuning to bridge the gap between the pretrained image model and the new video dataset. Subsequently, we introduce temporal learning to capture object motion. In terms of audio, we leverage sophisticated retrieval models to select and align audio elements that correspond to the plot and visual content of the movie. Extensive experiments demonstrate that our MovieFactory produces movies with realistic visuals, diverse scenes, and seamlessly fitting audio, offering users a novel and immersive experience. Generated samples can be found in YouTube or Bilibili (1080P).

CageViT: Convolutional Activation Guided Efficient Vision Transformer

May 17, 2023

Abstract: Recently, Transformers have emerged as the go-to architecture for both vision and language modeling tasks, but their computational efficiency is limited by the length of the input sequence. To address this, several efficient variants of Transformers have been proposed to accelerate computation or reduce memory consumption while preserving performance. This paper presents an efficient vision Transformer, called CageViT, that is guided by convolutional activation to reduce computation. Our CageViT, unlike current Transformers, utilizes a new encoder to handle the rearranged tokens, bringing several technical contributions: 1) Convolutional activation is used to pre-process the token after patchifying the image to select and rearrange the major tokens and minor tokens, which substantially reduces the computation cost through an additional fusion layer. 2) Instead of using the class activation map of the convolutional model directly, we design a new weighted class activation to lower the model requirements. 3) To facilitate communication between major tokens and fusion tokens, Gated Linear SRA is proposed to further integrate fusion tokens into the attention mechanism. We perform a comprehensive validation of CageViT on the image classification challenge. Experimental results demonstrate that the proposed CageViT outperforms the most recent state-of-the-art backbones by a large margin in terms of efficiency, while maintaining a comparable level of accuracy (e.g., a moderate-sized 43.35M model trained solely on 224 x 224 ImageNet-1K can achieve a Top-1 accuracy of 83.4%).

Prototype-based Embedding Network for Scene Graph Generation

Mar 13, 2023

Abstract: Current Scene Graph Generation (SGG) methods explore contextual information to predict relationships among entity pairs. However, due to the diverse visual appearance of numerous possible subject-object combinations, there is a large intra-class variation within each predicate category, e.g., "man-eating-pizza, giraffe-eating-leaf", and a severe inter-class similarity between different classes, e.g., "man-holding-plate, man-eating-pizza", in the model's latent space. The above challenges prevent current SGG methods from acquiring robust features for reliable relation prediction. In this paper, we claim that the predicate's category-inherent semantics can serve as class-wise prototypes in the semantic space for relieving the challenges. To this end, we propose the Prototype-based Embedding Network (PE-Net), which models entities/predicates with prototype-aligned compact and distinctive representations and thereby establishes matching between entity pairs and predicates in a common embedding space for relation recognition. Moreover, Prototype-guided Learning (PL) is introduced to help PE-Net efficiently learn such entity-predicate matching, and Prototype Regularization (PR) is devised to relieve the ambiguous entity-predicate matching caused by the predicate's semantic overlap. Extensive experiments demonstrate that our method gains superior relation recognition capability on SGG, achieving new state-of-the-art performances on both Visual Genome and Open Images datasets.
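
The idea of matching an entity pair against class-wise predicate prototypes in a shared embedding space can be illustrated with a small sketch. This is not PE-Net itself: the function names, the two-dimensional toy vectors, and the choice of cosine similarity as the matching score are all assumptions made for illustration, and the abstract does not specify how the embeddings or the score are computed.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def match_predicate(relation_embedding, prototypes):
    """Return the predicate whose prototype is most similar to the embedding.

    `prototypes` maps a predicate name to its (hypothetical) prototype vector.
    """
    return max(prototypes, key=lambda name: cosine(relation_embedding, prototypes[name]))


# Toy prototypes for two predicates; the values are made up.
prototypes = {"eating": [1.0, 0.0], "holding": [0.0, 1.0]}
print(match_predicate([0.9, 0.2], prototypes))
```

In this toy setup, a relation embedding that lies closer in angle to the "eating" prototype is assigned that predicate regardless of which subject and object produced it.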

DETA: Denoised Task Adaptation for Few-Shot Learning

Mar 11, 2023

Abstract: Test-time task adaptation in few-shot learning aims to adapt a pre-trained task-agnostic model for capturing task-specific knowledge of the test task, relying only on a few labeled support samples. Previous approaches generally focus on developing advanced algorithms to achieve the goal, while neglecting the inherent problems of the given support samples. In fact, with only a handful of samples available, the adverse effect of either the image noise (a.k.a. X-noise) or the label noise (a.k.a. Y-noise) from support samples can be severely amplified. To address this challenge, in this work we propose DEnoised Task Adaptation (DETA), a first, unified image- and label-denoising framework orthogonal to existing task adaptation approaches. Without extra supervision, DETA filters out task-irrelevant, noisy representations by taking advantage of both global visual information and local region details of support samples. On the challenging Meta-Dataset, DETA consistently improves the performance of a broad spectrum of baseline methods applied on various pre-trained models. Notably, by tackling the overlooked image noise in Meta-Dataset, DETA establishes new state-of-the-art results. Code is released at https://github.com/nobody-1617/DETA.

A Closer Look at Few-shot Classification Again

Feb 02, 2023

Abstract: Few-shot classification consists of a training phase where a model is learned on a relatively large dataset and an adaptation phase where the learned model is adapted to previously-unseen tasks with limited labeled samples. In this paper, we empirically prove that the training algorithm and the adaptation algorithm can be completely disentangled, which allows algorithm analysis and design to be done individually for each phase. Our meta-analysis for each phase reveals several interesting insights that may help better understand key aspects of few-shot classification and connections with other fields such as visual representation learning and transfer learning. We hope the insights and research challenges revealed in this paper can inspire future work in related directions.

Hyperbolic Hierarchical Contrastive Hashing

Dec 17, 2022

Abstract: Hierarchical semantic structures, naturally existing in real-world datasets, can assist in capturing the latent distribution of data to learn robust hash codes for retrieval systems. Although hierarchical semantic structures can be simply expressed by integrating semantically relevant data into a high-level taxon with coarser-grained semantics, the construction, embedding, and exploitation of the structures remain tricky for unsupervised hash learning. To tackle these problems, we propose a novel unsupervised hashing method named Hyperbolic Hierarchical Contrastive Hashing (HHCH). We propose to embed continuous hash codes into hyperbolic space for accurate semantic expression since embedding hierarchies in hyperbolic space generates less distortion than in hyper-sphere space and Euclidean space. In addition, we extend the K-Means algorithm to hyperbolic space and perform the proposed hierarchical hyperbolic K-Means algorithm to construct hierarchical semantic structures adaptively. To exploit the hierarchical semantic structures in hyperbolic space, we design the hierarchical contrastive learning algorithm, including hierarchical instance-wise and hierarchical prototype-wise contrastive learning. Extensive experiments on four benchmark datasets demonstrate that the proposed method outperforms the state-of-the-art unsupervised hashing methods. Codes will be released.
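
To make "hyperbolic space" concrete, the sketch below computes the geodesic distance in the Poincaré ball, one common model of hyperbolic geometry. The abstract does not say which model HHCH uses, so the Poincaré ball and the function name `poincare_distance` are assumptions for illustration only. For points $u, v$ inside the unit ball the distance is $d(u, v) = \operatorname{arcosh}\left(1 + \frac{2\lVert u - v\rVert^2}{(1 - \lVert u\rVert^2)(1 - \lVert v\rVert^2)}\right)$.

```python
import math


def poincare_distance(u, v):
    """Geodesic distance between two points strictly inside the unit ball."""
    sq_u = sum(x * x for x in u)
    sq_v = sum(x * x for x in v)
    if sq_u >= 1.0 or sq_v >= 1.0:
        raise ValueError("points must lie strictly inside the unit ball")
    sq_diff = sum((x - y) ** 2 for x, y in zip(u, v))
    return math.acosh(1.0 + 2.0 * sq_diff / ((1.0 - sq_u) * (1.0 - sq_v)))


origin = [0.0, 0.0]
# Equal Euclidean steps cost more hyperbolic distance near the boundary.
print(poincare_distance(origin, [0.5, 0.0]))
print(poincare_distance(origin, [0.9, 0.0]))
```

Because distances grow rapidly towards the boundary, the ball has room for tree-like structures whose number of nodes grows exponentially with depth, which is the usual intuition for why hierarchies embed with low distortion in hyperbolic space.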

Progressive Tree-Structured Prototype Network for End-to-End Image Captioning

Nov 17, 2022

Abstract: Studies of image captioning are shifting towards a fully end-to-end paradigm by leveraging powerful visual pre-trained models and transformer-based generation architecture for more flexible model training and faster inference speed. State-of-the-art approaches simply extract isolated concepts or attributes to assist description generation. However, such approaches do not consider the hierarchical semantic structure in the textual domain, which leads to an unpredictable mapping between visual representations and concept words. To this end, we propose a novel Progressive Tree-Structured prototype Network (dubbed PTSN), which is the first attempt to narrow down the scope of prediction words with appropriate semantics by modeling the hierarchical textual semantics. Specifically, we design a novel embedding method called tree-structured prototype, producing a set of hierarchical representative embeddings which capture the hierarchical semantic structure in textual space. To integrate such tree-structured prototypes into visual cognition, we also propose a progressive aggregation module to exploit semantic relationships within the image and prototypes. By applying our PTSN to the end-to-end captioning framework, extensive experiments conducted on the MSCOCO dataset show that our method achieves a new state-of-the-art performance with 144.2% (single model) and 146.5% (ensemble of 4 models) CIDEr scores on the 'Karpathy' split and 141.4% (c5) and 143.9% (c40) CIDEr scores on the official online test server. Trained models and source code have been released at: https://github.com/NovaMind-Z/PTSN.

Learning Dual-Fused Modality-Aware Representations for RGBD Tracking

Nov 15, 2022

Abstract: With the development of depth sensors in recent years, RGBD object tracking has received significant attention. Compared with traditional RGB object tracking, the addition of the depth modality can effectively resolve target and background interference. However, some existing RGBD trackers use the two modalities separately and thus some particularly useful shared information between them is ignored. On the other hand, some methods attempt to fuse the two modalities by treating them equally, resulting in the loss of modality-specific features. To tackle these limitations, we propose a novel Dual-fused Modality-aware Tracker (termed DMTracker) which aims to learn informative and discriminative representations of the target objects for robust RGBD tracking. The first fusion module focuses on extracting the shared information between modalities based on cross-modal attention. The second aims at integrating the RGB-specific and depth-specific information to enhance the fused features. By fusing both the modality-shared and modality-specific information in a modality-aware scheme, our DMTracker can learn discriminative representations in complex tracking scenes. Experiments show that our proposed tracker achieves very promising results on challenging RGBD benchmarks.

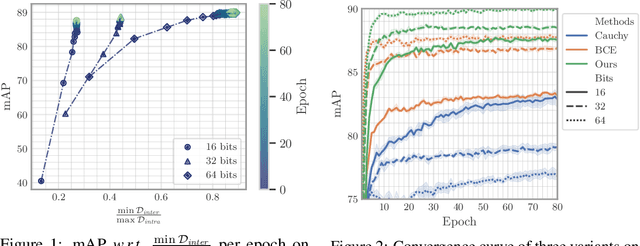

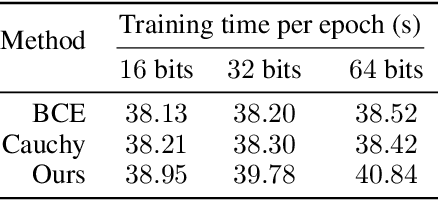

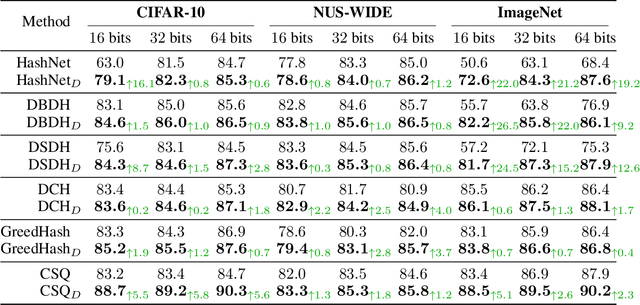

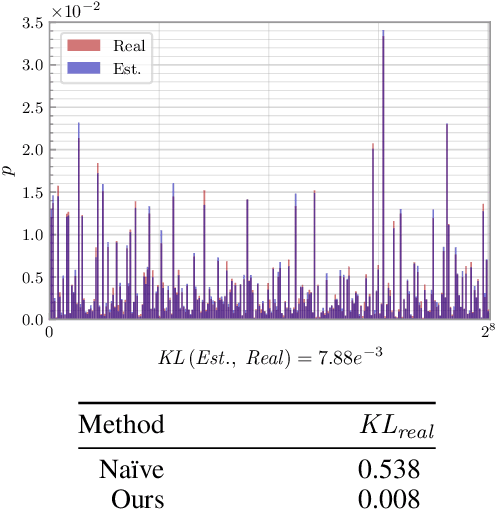

A Lower Bound of Hash Codes' Performance

Oct 12, 2022

Abstract: As a crucial approach for compact representation learning, hashing has achieved great success in effectiveness and efficiency. Numerous heuristic Hamming space metric learning objectives are designed to obtain high-quality hash codes. Nevertheless, a theoretical analysis of criteria for learning good hash codes remains largely unexploited. In this paper, we prove that inter-class distinctiveness and intra-class compactness among hash codes determine the lower bound of hash codes' performance. Promoting these two characteristics could lift the bound and improve hash learning. We then propose a surrogate model to fully exploit the above objective by estimating the posterior of hash codes and controlling it, which results in a low-bias optimization. Extensive experiments reveal the effectiveness of the proposed method. By testing on a series of hash-models, we obtain performance improvements among all of them, with an up to 26.5% increase in mean Average Precision and an up to 20.5% increase in accuracy. Our code is publicly available at https://github.com/VL-Group/LBHash.
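
The two quantities named in the abstract, intra-class compactness and inter-class distinctiveness, can be given a rough empirical reading as mean Hamming distances within and across classes. The sketch below is only such a descriptive statistic under that assumption; it is not the paper's lower bound or its surrogate model, and the function names and toy codes are hypothetical.

```python
from itertools import combinations


def hamming(a, b):
    """Number of positions at which two equal-length bit sequences differ."""
    return sum(x != y for x, y in zip(a, b))


def compactness_and_distinctiveness(codes, labels):
    """Mean Hamming distance over same-class pairs and over cross-class pairs.

    Assumes at least one same-class pair and one cross-class pair exist.
    """
    intra, inter = [], []
    for i, j in combinations(range(len(codes)), 2):
        distance = hamming(codes[i], codes[j])
        (intra if labels[i] == labels[j] else inter).append(distance)
    return sum(intra) / len(intra), sum(inter) / len(inter)


codes = [[0, 0, 0, 0], [0, 0, 0, 1], [1, 1, 1, 1], [1, 1, 1, 0]]
labels = [0, 0, 1, 1]
print(compactness_and_distinctiveness(codes, labels))  # (1.0, 3.5)
```

A small first value (codes of one class sit close together) and a large second value (codes of different classes sit far apart) correspond to the two properties that the abstract says should be promoted.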

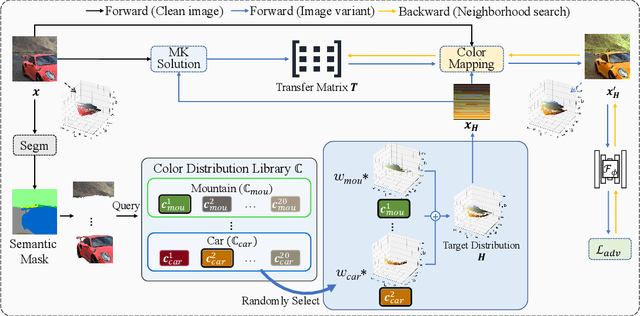

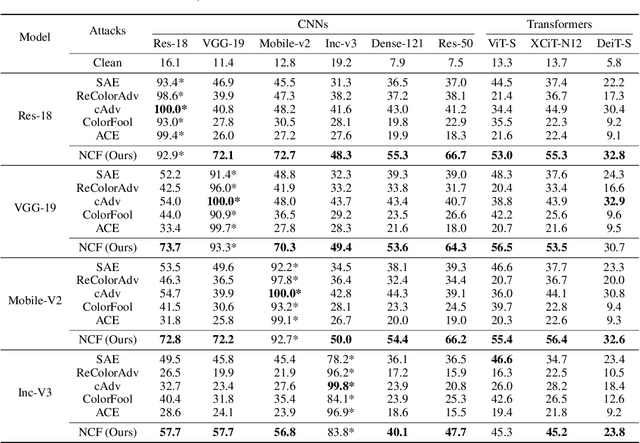

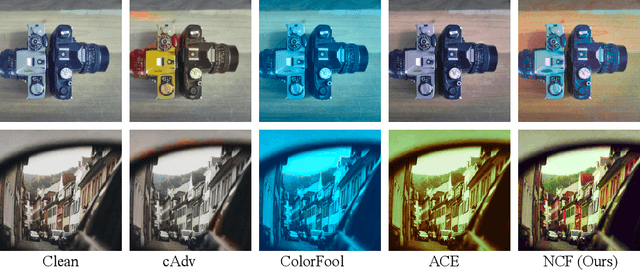

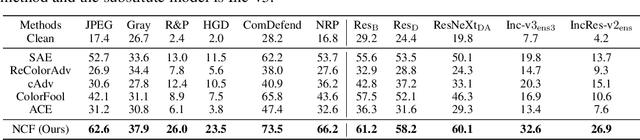

Natural Color Fool: Towards Boosting Black-box Unrestricted Attacks

Oct 05, 2022

Abstract: Unrestricted color attacks, which manipulate semantically meaningful color of an image, have shown their stealthiness and success in fooling both human eyes and deep neural networks. However, current works usually sacrifice the flexibility of the uncontrolled setting to ensure the naturalness of adversarial examples. As a result, the black-box attack performance of these methods is limited. To boost transferability of adversarial examples without damaging image quality, we propose a novel Natural Color Fool (NCF) which is guided by realistic color distributions sampled from a publicly available dataset and optimized by our neighborhood search and initialization reset. By conducting extensive experiments and visualizations, we convincingly demonstrate the effectiveness of our proposed method. Notably, on average, results show that our NCF can outperform state-of-the-art approaches by 15.0%$\sim$32.9% for fooling normally trained models and 10.0%$\sim$25.3% for evading defense methods. Our code is available at https://github.com/ylhz/Natural-Color-Fool.
