Jian Zhou

CRIBS, LTSI

Dirichlet Diffusion Score Model for Biological Sequence Generation

May 18, 2023

Abstract: Designing biological sequences is an important challenge that requires satisfying complex constraints and thus is a natural problem to address with deep generative modeling. Diffusion generative models have achieved considerable success in many applications. The score-based generative stochastic differential equation (SDE) model is a continuous-time diffusion model framework that enjoys many benefits, but the originally proposed SDEs are not naturally designed for modeling discrete data. To develop generative SDE models for discrete data such as biological sequences, here we introduce a diffusion process defined in the probability simplex space with stationary distribution being the Dirichlet distribution. This makes diffusion in continuous space natural for modeling discrete data. We refer to this approach as the Dirichlet diffusion score model. We demonstrate that this technique can generate samples that satisfy hard constraints using a Sudoku generation task. This generative model can also solve Sudoku, including hard puzzles, without additional training. Finally, we applied this approach to develop the first human promoter DNA sequence design model and showed that designed sequences share similar properties with natural promoter sequences.

Controlled physics-informed data generation for deep learning-based remaining useful life prediction under unseen operation conditions

Apr 23, 2023

Abstract: Limited availability of representative time-to-failure (TTF) trajectories either limits the performance of deep learning (DL)-based approaches on remaining useful life (RUL) prediction in practice or even precludes their application. Generating synthetic data that is physically plausible is a promising way to tackle this challenge. In this study, a novel hybrid framework combining the controlled physics-informed data generation approach with a deep learning-based prediction model for prognostics is proposed. In the proposed framework, a new controlled physics-informed generative adversarial network (CPI-GAN) is developed to generate synthetic degradation trajectories that are physically interpretable and diverse. Five basic physics constraints are proposed as the controllable settings in the generator. A physics-informed loss function with penalty is designed as the regularization term, which ensures that the changing trend of system health state recorded in the synthetic data is consistent with the underlying physical laws. Then, the generated synthetic data is used as input of the DL-based prediction model to obtain the RUL estimations. The proposed framework is evaluated based on new Commercial Modular Aero-Propulsion System Simulation (N-CMAPSS), a turbofan engine prognostics dataset where a limited availability of TTF trajectories is assumed. The experimental results demonstrate that the proposed framework is able to generate synthetic TTF trajectories that are consistent with underlying degradation trends. The generated trajectories significantly improve the accuracy of RUL predictions.

* 22 pages, 12 figures

Systematic Review on Learning-based Spectral CT

Apr 15, 2023

Abstract: Spectral computed tomography (CT) has recently emerged as an advanced version of medical CT and significantly improves conventional (single-energy) CT. Spectral CT has two main forms: dual-energy computed tomography (DECT) and photon-counting computed tomography (PCCT), which offer image improvement, material decomposition, and feature quantification relative to conventional CT. However, the inherent challenges of spectral CT, evidenced by data and image artifacts, remain a bottleneck for clinical applications. To address these problems, machine learning techniques have been widely applied to spectral CT. In this review, we present the state-of-the-art data-driven techniques for spectral CT.

Small but Mighty: Enhancing 3D Point Clouds Semantic Segmentation with U-Next Framework

Apr 03, 2023

Abstract: We study the problem of semantic segmentation of large-scale 3D point clouds. In recent years, significant research efforts have been directed toward local feature aggregation, improved loss functions, and sampling strategies. However, the fundamental framework of point cloud semantic segmentation has been largely overlooked, with most existing approaches relying on the U-Net architecture by default. In this paper, we propose U-Next, a small but mighty framework designed for point cloud semantic segmentation. The key to this framework is to learn multi-scale hierarchical representations from semantically similar feature maps. Specifically, we build our U-Next by stacking multiple U-Net $L^1$ codecs in a nested and densely arranged manner to minimize the semantic gap, while simultaneously fusing the feature maps across scales to effectively recover the fine-grained details. We also devise a multi-level deep supervision mechanism to further smooth gradient propagation and facilitate network optimization. Extensive experiments conducted on three large-scale benchmarks including S3DIS, Toronto3D, and SensatUrban demonstrate the superiority and the effectiveness of the proposed U-Next architecture. Our U-Next architecture shows consistent and visible performance improvements across different tasks and baseline models, indicating its great potential to serve as a general framework for future research.

Towards a Single Unified Model for Effective Detection, Segmentation, and Diagnosis of Eight Major Cancers Using a Large Collection of CT Scans

Jan 28, 2023

Abstract: Human readers or radiologists routinely perform full-body multi-organ multi-disease detection and diagnosis in clinical practice, while most medical AI systems are built to focus on single organs with a narrow list of a few diseases. This might severely limit AI's clinical adoption. A certain number of AI models need to be assembled non-trivially to match the diagnostic process of a human reading a CT scan. In this paper, we construct a Unified Tumor Transformer (UniT) model to detect (tumor existence and location) and diagnose (tumor characteristics) eight major cancer-prevalent organs in CT scans. UniT is a query-based Mask Transformer model with the output of multi-organ and multi-tumor semantic segmentation. We decouple the object queries into organ queries, detection queries and diagnosis queries, and further establish hierarchical relationships among the three groups. This clinically-inspired architecture effectively assists inter- and intra-organ representation learning of tumors and facilitates the resolution of these complex, anatomically related multi-organ cancer image reading tasks. UniT is trained end-to-end using a curated large-scale collection of CT images of 10,042 patients including eight major types of cancers and occurring non-cancer tumors (all are pathology-confirmed with 3D tumor masks annotated by radiologists). On the test set of 631 patients, UniT has demonstrated strong performance under a set of clinically relevant evaluation metrics, substantially outperforming both multi-organ segmentation methods and an assembly of eight single-organ expert models in tumor detection, segmentation, and diagnosis. Such a unified multi-cancer image reading model (UniT) can significantly reduce the number of false positives produced by combined multi-system models. This moves one step closer towards a universal high-performance cancer screening tool.

Combating Uncertainty and Class Imbalance in Facial Expression Recognition

Dec 15, 2022

Abstract: Facial expression recognition is a challenging task in computer vision. The primary reasons are class imbalance due to data collection and uncertainty due to inherent noise such as fuzzy facial expressions and inconsistent labels. However, current research has focused either on the problem of class imbalance or on the problem of uncertainty, ignoring how to address the two problems together. Therefore, in this paper, we propose a framework based on ResNet and attention to solve the above problems. We design a weight for each class. Through the penalty mechanism, our model pays more attention to the learning of small-sample classes during training, and the resulting decrease in model accuracy can be recovered by a Convolutional Block Attention Module (CBAM). Meanwhile, our backbone network also learns an uncertain feature for each sample. By mixing uncertain features between samples, the model can better learn those features that can be used for classification, thus suppressing uncertainty. Experiments show that our method surpasses most basic methods in terms of accuracy on facial expression data sets (e.g., AffectNet, RAF-DB), and it also solves the problem of class imbalance well.

Multi-Scale Feature Fusion Transformer Network for End-to-End Single Channel Speech Separation

Dec 14, 2022

Abstract: Recent studies on time-domain audio separation networks (TasNets) have made great strides in speech separation. One of the most representative TasNets is a network with a dual-path segmentation approach. However, the original model, called DPRNN, used a fixed feature dimension and unchanged segment size throughout all layers of the network. In this paper, we propose a multi-scale feature fusion transformer network (MSFFT-Net) based on the conventional dual-path structure for single-channel speech separation. The conventional dual-path structure has only one processing path, which adopts several iterative blocks with alternating intra-chunk and inter-chunk operations to capture local and global context information. In contrast, the proposed MSFFT-Net has multiple parallel processing paths between which feature information can be exchanged. Experiments show that our proposed networks based on the multi-scale feature fusion structure achieve better results than the original dual-path model on the benchmark dataset WSJ0-2mix, where the SI-SNRi score of MSFFT-3P is 20.7dB (1.47% improvement), and MSFFT-2P is 21.0dB (3.45% improvement), which achieves SOTA on WSJ0-2mix without any data augmentation method.

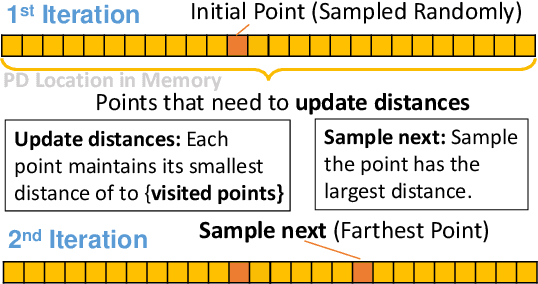

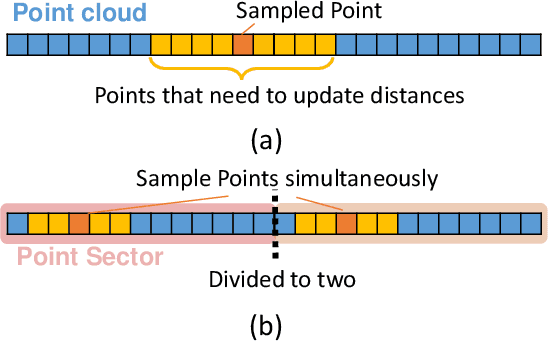

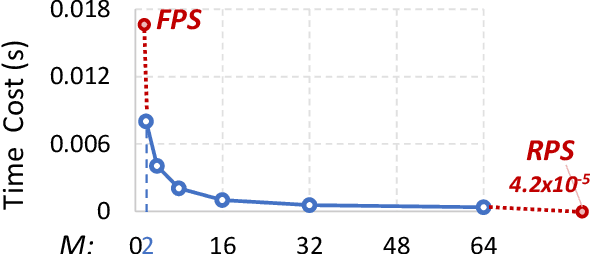

An Adjustable Farthest Point Sampling Method for Approximately-sorted Point Cloud Data

Aug 18, 2022

Abstract: Sampling is an essential part of raw point cloud data processing, such as in the popular PointNet++ scheme. Farthest Point Sampling (FPS), which iteratively samples the farthest point and performs distance updating, is one of the most popular sampling schemes. Unfortunately, it suffers from low efficiency and can become the bottleneck of point cloud applications. We propose adjustable FPS (AFPS), parameterized by M, to aggressively reduce the complexity of FPS without compromising on the sampling performance. Specifically, it divides the original point cloud into M small point clouds and samples M points simultaneously. It exploits the dimensional locality of approximately sorted point cloud data to minimize its performance degradation. The AFPS method can achieve a 22-30x speedup over the original FPS. Furthermore, we propose the nearest-point-distance-updating (NPDU) method to limit the number of distance updates to a constant number. The combined NPDU on AFPS method can achieve a 34-280x speedup on a point cloud with 2K-32K points with algorithmic performance that is comparable to the original FPS. For instance, for the ShapeNet part segmentation task, it achieves 0.8490 instance average mIoU (mean Intersection over Union), which is only a 0.0035 drop compared to the original FPS.
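For orientation, the baseline that the abstract describes (pick the farthest point, then update distances) can be sketched as follows. This is a minimal illustration of plain FPS only, not the AFPS or NPDU methods from the paper; the function name `farthest_point_sampling` and the `start` parameter are assumptions made for this example.

```python
import math


def farthest_point_sampling(points, k, start=0):
    """Return the indices of k points chosen by plain farthest point sampling.

    points: sequence of equal-length coordinate tuples.
    start:  index of the first sampled point (an assumption; implementations
            often pick it at random).
    """
    n = len(points)
    if not 0 < k <= n:
        raise ValueError("k must be between 1 and len(points)")

    selected = [start]
    # min_dist[i] holds the distance from point i to its nearest selected point.
    min_dist = [math.dist(p, points[start]) for p in points]

    while len(selected) < k:
        # Sample the point that is farthest from the current selected set.
        nxt = max(range(n), key=min_dist.__getitem__)
        selected.append(nxt)
        # Distance update: every point is compared against the new sample.
        for i, p in enumerate(points):
            d = math.dist(p, points[nxt])
            if d < min_dist[i]:
                min_dist[i] = d
    return selected


if __name__ == "__main__":
    cloud = [(0.0, 0.0), (1.0, 0.0), (10.0, 0.0), (5.0, 0.0)]
    print(farthest_point_sampling(cloud, 3))
```

Each new sample triggers a pass over all n points, so drawing k samples costs on the order of n·k distance evaluations. That full distance update is the step the abstract identifies as the efficiency bottleneck.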

CycleGAN with Dual Adversarial Loss for Bone-Conducted Speech Enhancement

Nov 02, 2021

Abstract: Compared with air-conducted speech, bone-conducted speech has the unique advantage of shielding background noise. Enhancement of bone-conducted speech helps to improve its quality and intelligibility. In this paper, a novel CycleGAN with dual adversarial loss (CycleGAN-DAL) is proposed for bone-conducted speech enhancement. The proposed method uses an adversarial loss and a cycle-consistent loss simultaneously to learn forward and cyclic mapping, in which the adversarial loss is replaced with the classification adversarial loss and the defect adversarial loss to consolidate the forward mapping. Compared with conventional baseline methods, it can learn feature mapping between bone-conducted speech and target speech without additional air-conducted speech assistance. Moreover, the proposed method also avoids the oversmoothing problem that commonly occurs in conventional statistical models. Experimental results show that the proposed method outperforms baseline methods such as CycleGAN, GMM, and BLSTM.

Keywords: Bone-conducted speech enhancement, dual adversarial loss, Parallel CycleGAN, high frequency speech reconstruction

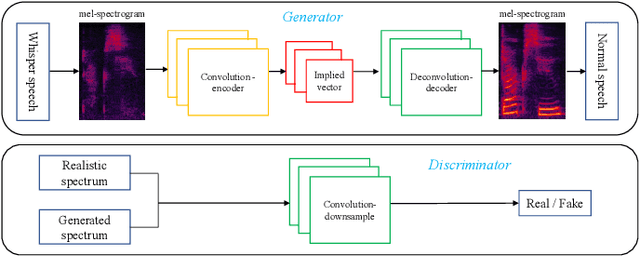

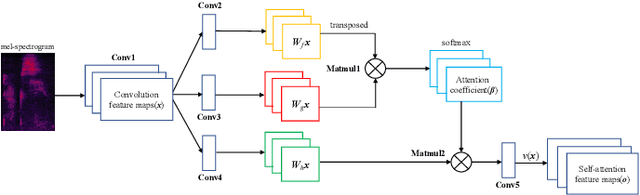

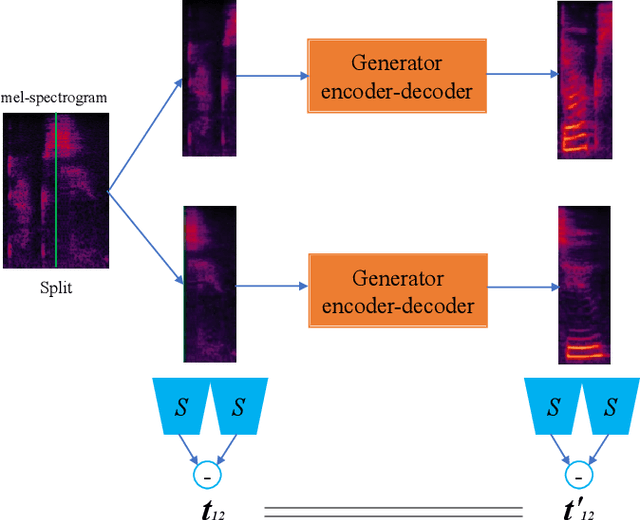

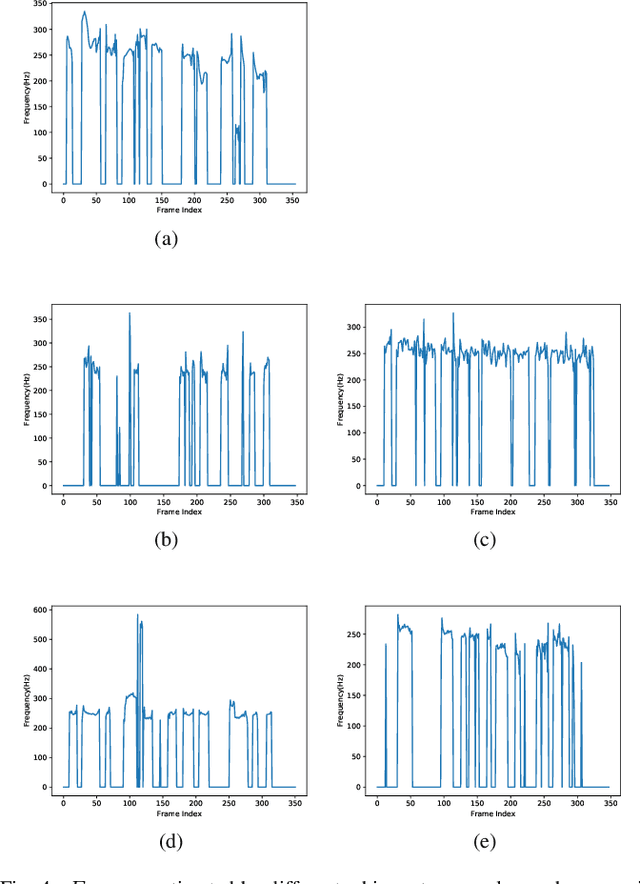

Attention-Guided Generative Adversarial Network for Whisper to Normal Speech Conversion

Nov 02, 2021

Abstract: Whispered speech is a special way of pronunciation without using vocal cord vibration. Whispered speech does not contain a fundamental frequency, and its energy is about 20dB lower than that of normal speech. Converting whispered speech into normal speech can improve speech quality and intelligibility. In this paper, a novel attention-guided generative adversarial network model incorporating an autoencoder, a Siamese neural network, and an identity mapping loss function for whisper to normal speech conversion (AGAN-W2SC) is proposed. The proposed method avoids the challenge of estimating the fundamental frequency of the normal voiced speech converted from whispered speech. Specifically, the proposed model is more amenable to practical applications because it does not need to align speech features for training. Experimental results demonstrate that the proposed AGAN-W2SC can obtain improved speech quality and intelligibility compared with dynamic-time-warping-based methods.
