EURO: ESPnet Unsupervised ASR Open-source Toolkit

Dec 01, 2022 · Dongji Gao, Jiatong Shi, Shun-Po Chuang, Leibny Paola Garcia, Hung-yi Lee, Shinji Watanabe, Sanjeev Khudanpur

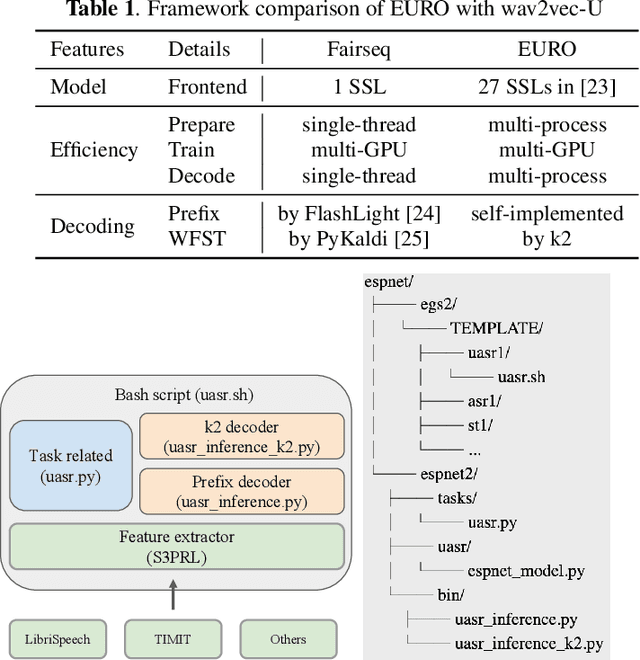

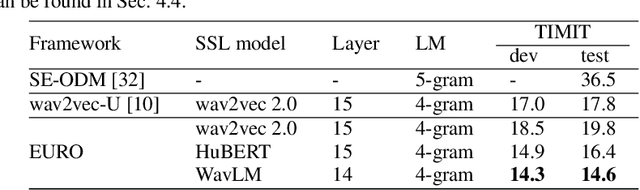

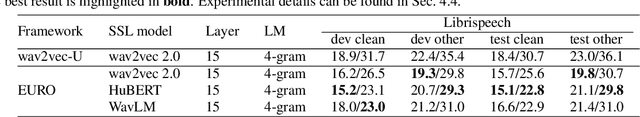

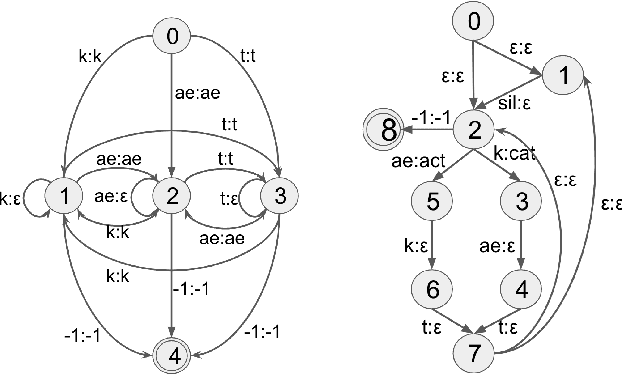

This paper describes the ESPnet Unsupervised ASR Open-source Toolkit (EURO), an end-to-end open-source toolkit for unsupervised automatic speech recognition (UASR). EURO adopts the state-of-the-art UASR learning method introduced by Wav2vec-U, originally implemented in FAIRSEQ, which leverages self-supervised speech representations and adversarial training. In addition to wav2vec2, EURO extends the functionality and promotes reproducibility for UASR tasks by integrating S3PRL and k2, resulting in flexible frontends from 27 self-supervised models and various graph-based decoding strategies. EURO is implemented in ESPnet and follows its unified pipeline to provide UASR recipes with a complete setup. This improves the pipeline's efficiency and allows EURO to be easily applied to existing datasets in ESPnet. Extensive experiments on three mainstream self-supervised models demonstrate the toolkit's effectiveness and achieve state-of-the-art UASR performance on the TIMIT and LibriSpeech datasets. EURO will be publicly available at https://github.com/espnet/espnet, aiming to promote this exciting and emerging research area based on UASR through open-source activity.

CHAPTER: Exploiting Convolutional Neural Network Adapters for Self-supervised Speech Models

Dec 01, 2022 · Zih-Ching Chen, Yu-Shun Sung, Hung-yi Lee

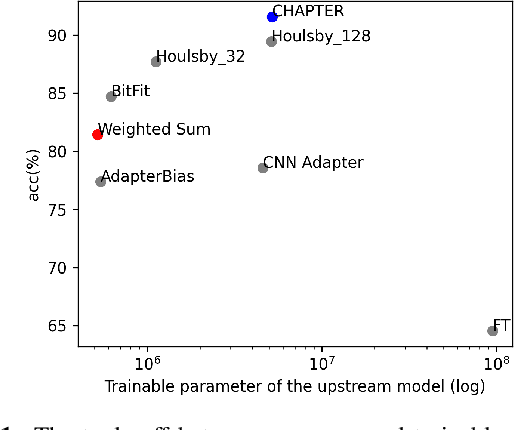

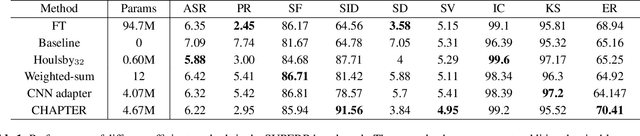

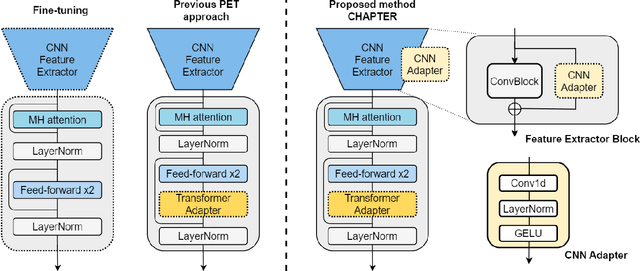

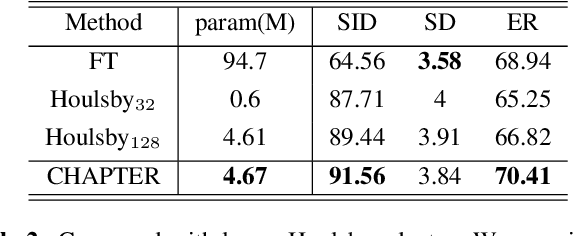

Self-supervised learning (SSL) is a powerful technique for learning representations from unlabeled data. Transformer-based models such as HuBERT, which consist of a feature extractor and transformer layers, are leading the field in the speech domain. SSL models are fine-tuned on a wide range of downstream tasks, which involves re-training the majority of the model for each task. Previous studies have introduced adapters, small lightweight modules commonly used in Natural Language Processing (NLP), to adapt pre-trained models to new tasks. However, such efficient tuning techniques only provide adaptation at the transformer layers and fail to perform adaptation at the feature extractor. In this paper, we propose CHAPTER, an efficient tuning method specifically designed for SSL speech models, which applies CNN adapters at the feature extractor. Using this method, we fine-tune fewer than 5% of the parameters per task compared to full fine-tuning, while achieving better and more stable performance. We empirically find that adding CNN adapters to the feature extractor helps adaptation on emotion and speaker tasks. For instance, the accuracy of SID is improved from 87.71 to 91.56, and the accuracy of ER is improved by 5%.

Model Extraction Attack against Self-supervised Speech Models

Nov 29, 2022 · Tsu-Yuan Hsu, Chen-An Li, Tung-Yu Wu, Hung-yi Lee

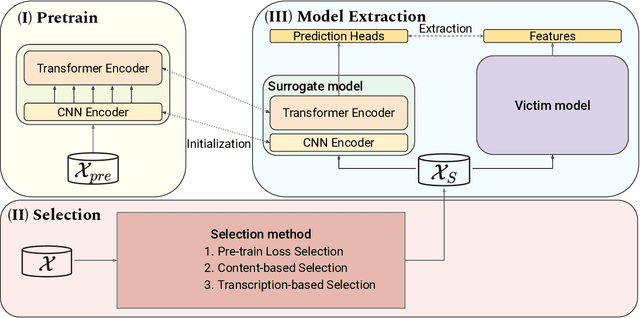

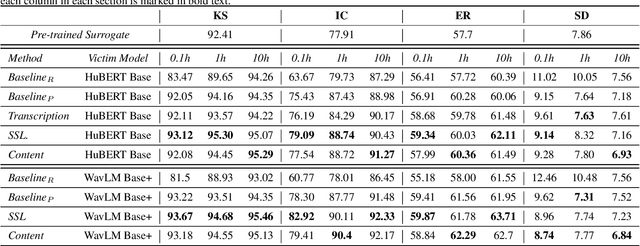

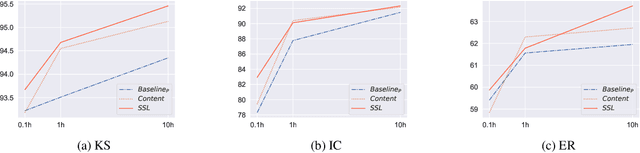

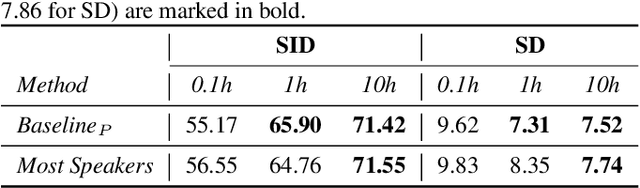

Self-supervised learning (SSL) speech models generate meaningful representations of given clips and achieve incredible performance across various downstream tasks. A model extraction attack (MEA) often refers to an adversary stealing the functionality of the victim model with only query access. In this work, we study the MEA problem against SSL speech models with a small number of queries. We propose a two-stage framework to extract the model. In the first stage, SSL is conducted on a large-scale unlabeled corpus to pre-train a small speech model. In the second stage, we actively sample a small portion of clips from the unlabeled corpus and query the target model with these clips to acquire their representations as labels for the small model's second-stage training. Experimental results show that our sampling methods can effectively extract the target model without knowing any information about its model architecture.

Compressing Transformer-based self-supervised models for speech processing

Nov 17, 2022 · Tzu-Quan Lin, Tsung-Huan Yang, Chun-Yao Chang, Kuang-Ming Chen, Tzu-hsun Feng, Hung-yi Lee, Hao Tang

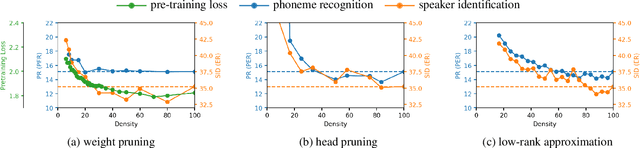

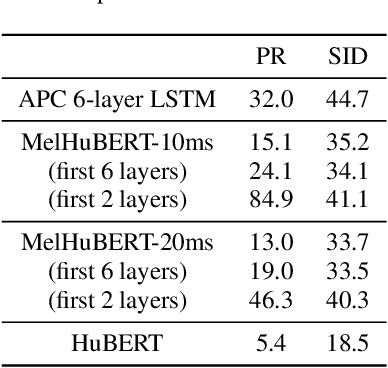

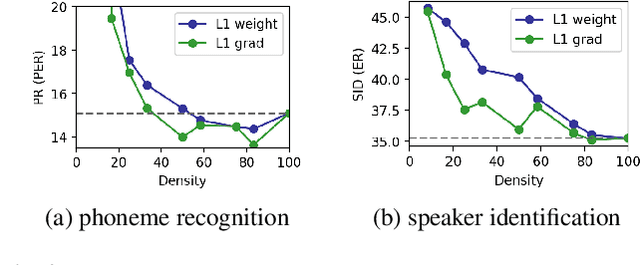

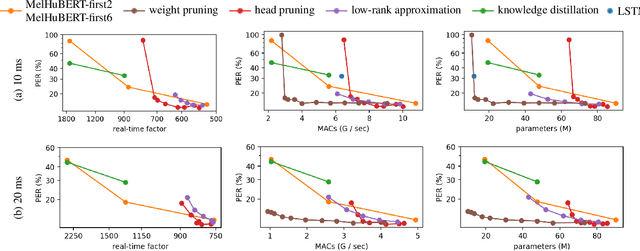

Despite the success of Transformers in self-supervised learning with applications to various downstream tasks, the computational cost of training and inference remains a major challenge for applying these models to a wide spectrum of devices. Several isolated attempts have been made to compress Transformers, prior to applying them to downstream tasks. In this work, we aim to provide context for the isolated results, studying several commonly used compression techniques, including weight pruning, head pruning, low-rank approximation, and knowledge distillation. We report wall-clock time, the number of parameters, and the number of multiply-accumulate operations for these techniques, charting the landscape of compressing Transformer-based self-supervised models.
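As a rough illustration of why low-rank approximation reduces both the parameter count and the multiply-accumulate (MAC) count reported above, the sketch below compares a dense linear layer with its rank-r factorization. This is not the paper's code; the function names and the layer sizes (768 and 3072) are assumptions chosen only for illustration, and biases are ignored.

```python
def dense_params(d_in: int, d_out: int) -> int:
    """Weights in a dense d_out x d_in linear layer (bias ignored)."""
    return d_in * d_out


def low_rank_params(d_in: int, d_out: int, rank: int) -> int:
    """Weights after factorizing W (d_out x d_in) as U (d_out x rank) times V (rank x d_in)."""
    return rank * (d_in + d_out)


def break_even_rank(d_in: int, d_out: int) -> int:
    """Largest rank at which the factorized layer is strictly smaller than the dense one."""
    return (d_in * d_out - 1) // (d_in + d_out)


# Hypothetical layer sizes, used only for illustration.
d_in, d_out, rank = 768, 3072, 64

dense = dense_params(d_in, d_out)
factored = low_rank_params(d_in, d_out, rank)

# For one input frame, a linear layer performs one MAC per weight,
# so the MAC ratio equals the parameter ratio.
ratio = factored / dense

print(dense, factored, round(ratio, 4), break_even_rank(d_in, d_out))
```

A smaller parameter or MAC count does not by itself guarantee a proportional wall-clock speedup, which is one reason to report all three measures separately.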

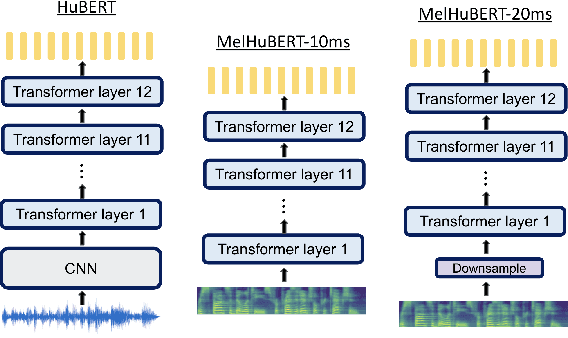

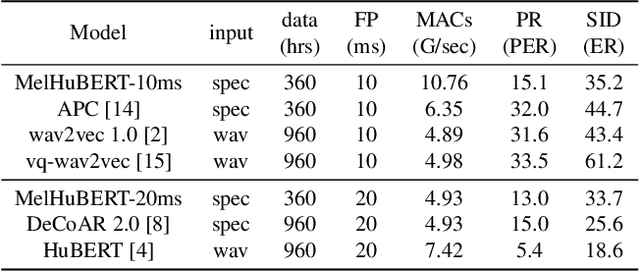

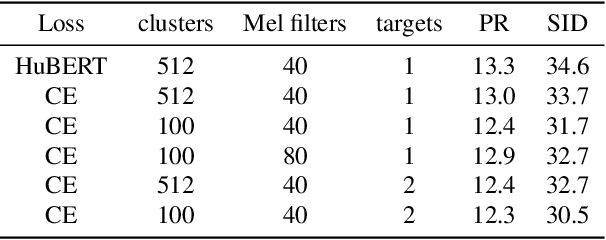

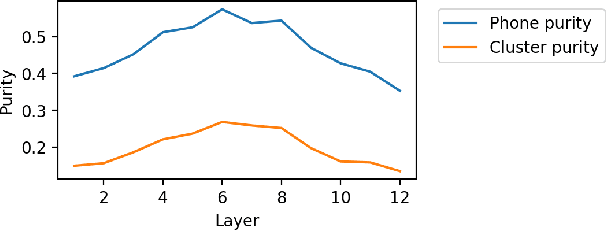

MelHuBERT: A simplified HuBERT on Mel spectrogram

Nov 17, 2022 · Tzu-Quan Lin, Hung-yi Lee, Hao Tang

Self-supervised models have had great success in learning speech representations that can generalize to various downstream tasks. HuBERT, in particular, achieves strong performance while being relatively simple to train compared to others. The original experimental setting is computationally expensive, hindering the reproducibility of the models. It is also unclear why certain design decisions are made, such as the ad-hoc loss function, and whether these decisions have an impact on the learned representations. We propose MelHuBERT, a simplified version of HuBERT that takes Mel spectrograms as input, significantly reducing computation and memory consumption. We study several aspects of training, including the loss function, multi-stage training, and streaming options. Our result is an efficient yet performant model that can be trained on a single GPU.

Introducing Semantics into Speech Encoders

Nov 15, 2022 · Derek Xu, Shuyan Dong, Changhan Wang, Suyoun Kim, Zhaojiang Lin, Akshat Shrivastava, Shang-Wen Li, Liang-Hsuan Tseng, Alexei Baevski, Guan-Ting Lin, Hung-yi Lee, Yizhou Sun, Wei Wang

Recent studies find that existing self-supervised speech encoders contain primarily acoustic rather than semantic information. As a result, pipelined systems that feed supervised automatic speech recognition (ASR) output into a large language model (LLM) achieve state-of-the-art results on semantic spoken language tasks by utilizing rich semantic representations from the LLM. These systems come at the cost of labeled audio transcriptions, which are expensive and time-consuming to obtain. We propose a task-agnostic unsupervised way of incorporating semantic information from LLMs into self-supervised speech encoders without labeled audio transcriptions. By introducing semantics, we improve the spoken language understanding performance of existing speech encoders by over 10% on intent classification, with modest gains in named entity resolution and slot filling, and the spoken question answering F1 score by over 2%. Our unsupervised approach achieves performance similar to that of supervised methods trained on over 100 hours of labeled audio transcripts, demonstrating the feasibility of unsupervised semantic augmentations to existing speech encoders.

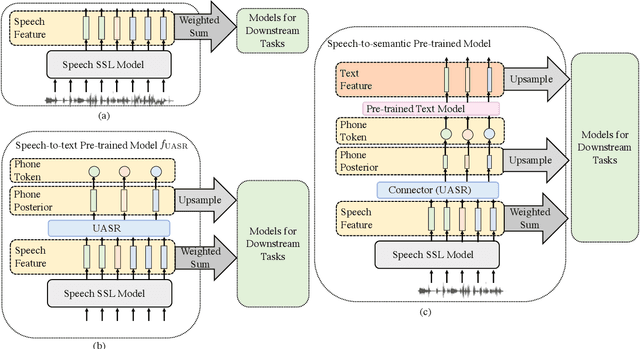

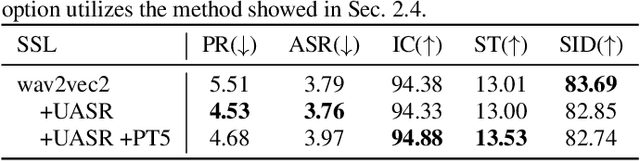

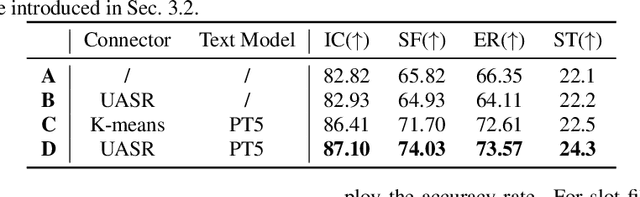

Bridging Speech and Textual Pre-trained Models with Unsupervised ASR

Nov 06, 2022 · Jiatong Shi, Chan-Jan Hsu, Holam Chung, Dongji Gao, Paola Garcia, Shinji Watanabe, Ann Lee, Hung-yi Lee

Spoken language understanding (SLU) is a task aiming to extract high-level semantics from spoken utterances. Previous works have investigated the use of speech self-supervised models and textual pre-trained models, which have shown reasonable improvements on various SLU tasks. However, because of the mismatched modalities between speech signals and text tokens, previous methods usually need complex framework designs. This work proposes a simple yet efficient unsupervised paradigm that connects speech and textual pre-trained models, resulting in an unsupervised speech-to-semantic pre-trained model for various tasks in SLU. Specifically, we propose to use unsupervised automatic speech recognition (ASR) as a connector that bridges the different modalities used in speech and textual pre-trained models. Our experiments show that unsupervised ASR itself can improve the representations from speech self-supervised models. More importantly, it proves to be an efficient connector between speech and textual pre-trained models, improving performance on five different SLU tasks. Notably, on spoken question answering, we reach the state-of-the-art result on the challenging NMSQA benchmark.

Once-for-All Sequence Compression for Self-Supervised Speech Models

Nov 04, 2022 · Hsuan-Jui Chen, Yen Meng, Hung-yi Lee

The sequence length along the time axis is often the dominant factor in the computational cost of self-supervised speech models. Prior work has proposed reducing the sequence length to lower the computational cost. However, different downstream tasks have different tolerance for sequence compression, so a model that produces a fixed compressing rate may not fit all tasks. In this work, we introduce a once-for-all (OFA) sequence compression framework for self-supervised speech models that supports a continuous range of compressing rates. The framework is evaluated on various tasks, showing marginal degradation compared to the fixed compressing rate variants with a smooth performance-efficiency trade-off. We further explore adaptive compressing rate learning, demonstrating the ability to select task-specific preferred frame periods without needing a grid search.
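To make the idea of shortening a sequence along the time axis concrete, here is a minimal sketch that uses plain average pooling with a fixed integer rate. This is a deliberately simplified stand-in, not the OFA framework described above (which supports a continuous range of rates); the function names are hypothetical. The second helper estimates the relative cost of self-attention, which grows quadratically with sequence length.

```python
import math
from typing import List

Frame = List[float]


def average_pool(frames: List[Frame], rate: int) -> List[Frame]:
    """Average every `rate` consecutive frames; the last group may be shorter."""
    if rate < 1:
        raise ValueError("rate must be a positive integer")
    pooled = []
    for start in range(0, len(frames), rate):
        chunk = frames[start:start + rate]
        dim = len(chunk[0])
        pooled.append([sum(f[d] for f in chunk) / len(chunk) for d in range(dim)])
    return pooled


def attention_cost_ratio(num_frames: int, rate: int) -> float:
    """Relative self-attention cost after pooling, assuming cost ~ (sequence length)^2."""
    compressed = math.ceil(num_frames / rate)
    return (compressed / num_frames) ** 2


# Five toy 2-dimensional frames, pooled with a rate of 2.
frames = [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0], [6.0, 7.0], [8.0, 9.0]]
pooled = average_pool(frames, rate=2)
print(len(frames), "->", len(pooled), "frames")
print(pooled)
```

With a fixed rate like this, every task gets the same frame period, which is the limitation a once-for-all model with a selectable rate is meant to remove.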

M-SpeechCLIP: Leveraging Large-Scale, Pre-Trained Models for Multilingual Speech to Image Retrieval

Nov 02, 2022 · Layne Berry, Yi-Jen Shih, Hsuan-Fu Wang, Heng-Jui Chang, Hung-yi Lee, David Harwath

This work investigates the use of large-scale, pre-trained models (CLIP and HuBERT) for multilingual speech-image retrieval. For non-English speech-image retrieval, we outperform the current state-of-the-art performance by a wide margin when training separate models for each language, and show that a single model which processes speech in all three languages still achieves retrieval scores comparable with the prior state-of-the-art. We identify key differences in model behavior and performance between English and non-English settings, presumably attributable to the English-only pre-training of CLIP and HuBERT. Finally, we show that our models can be used for mono- and cross-lingual speech-text retrieval and cross-lingual speech-speech retrieval, despite never having seen any parallel speech-text or speech-speech data during training.

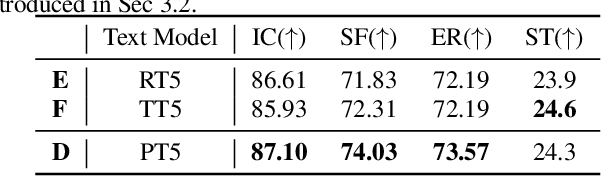

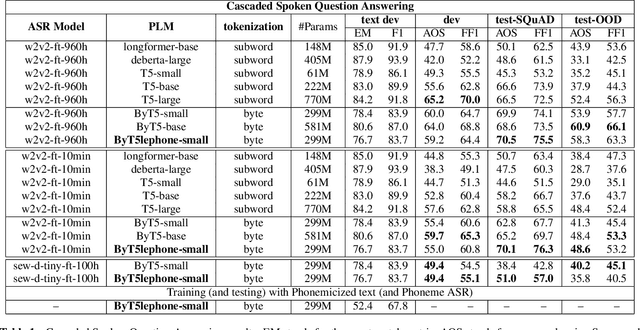

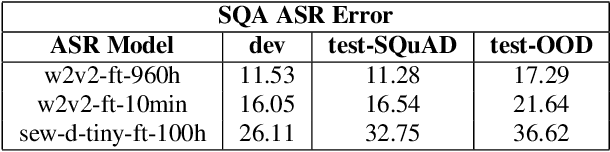

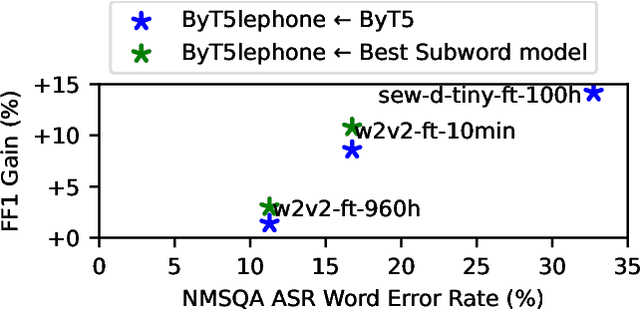

T5lephone: Bridging Speech and Text Self-supervised Models for Spoken Language Understanding via Phoneme level T5

Nov 01, 2022 · Chan-Jan Hsu, Ho-Lam Chung, Hung-yi Lee, Yu Tsao

In spoken language understanding (SLU), a natural solution is concatenating pre-trained speech models (e.g. HuBERT) and pretrained language models (PLMs, e.g. T5). Most previous works use pretrained language models with subword-based tokenization. However, the granularity of input units affects the alignment of speech model outputs and language model inputs, and PLMs with character-based tokenization are underexplored. In this work, we conduct extensive studies on how PLMs with different tokenization strategies affect spoken language understanding tasks, including spoken question answering (SQA) and speech translation (ST). We further extend the idea to create T5lephone (pronounced as telephone), a variant of T5 that is pretrained using phonemicized text. We initialize T5lephone with existing PLMs to pretrain it using relatively lightweight computational resources. We reach state-of-the-art on NMSQA, and the T5lephone model exceeds T5 with other types of units on end-to-end SQA and ST.
