Dong Zhou

Imitation learning-based spacecraft rendezvous and docking method with Expert Demonstration

Jan 19, 2026

Abstract: Existing spacecraft rendezvous and docking control methods largely rely on predefined dynamic models and often exhibit limited robustness in realistic on-orbit environments. To address this issue, this paper proposes an Imitation Learning-based spacecraft rendezvous and docking control framework (IL-SRD) that directly learns control policies from expert demonstrations, thereby reducing dependence on accurate modeling. We propose an anchored decoder target mechanism, which conditions the decoder queries on state-related anchors to explicitly constrain the control generation process. This mechanism enforces physically consistent control evolution and effectively suppresses implausible action deviations in sequential prediction, enabling reliable six-degree-of-freedom (6-DOF) rendezvous and docking control. To further enhance stability, a temporal aggregation mechanism is incorporated to mitigate error accumulation caused by the sequential prediction nature of Transformer-based models, where small inaccuracies at each time step can propagate and amplify over long horizons. Extensive simulation results demonstrate that the proposed IL-SRD framework achieves accurate and energy-efficient model-free rendezvous and docking control. Robustness evaluations further confirm its capability to maintain competitive performance under significant unknown disturbances. The source code is available at https://github.com/Dongzhou-1996/IL-SRD.
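
The abstract does not spell out how the temporal aggregation is computed. As a minimal sketch, assuming it resembles the exponentially weighted averaging of overlapping predictions that is often used with sequence-predicting policies, the actions predicted for the same time step at several earlier steps could be combined as follows. The function name `aggregate_actions` and the `decay` parameter are hypothetical and are not taken from the IL-SRD code.

```python
import math


def aggregate_actions(predictions, decay=0.1):
    """Combine several predictions made for the SAME time step.

    predictions: list of action vectors (lists of floats), oldest first.
    decay: hypothetical rate; weight of the i-th prediction is exp(-decay * i),
           so decay=0 reduces to a plain average.
    """
    if not predictions:
        raise ValueError("need at least one prediction")
    weights = [math.exp(-decay * i) for i in range(len(predictions))]
    total = sum(weights)
    dim = len(predictions[0])
    # Weighted average per action dimension (e.g. 6 values for 6-DOF control).
    return [
        sum(w * p[d] for w, p in zip(weights, predictions)) / total
        for d in range(dim)
    ]


if __name__ == "__main__":
    # Two overlapping predictions for one time step, averaged equally.
    print(aggregate_actions([[0.0, 1.0], [2.0, 3.0]], decay=0.0))
```

Averaging several predictions for one step smooths out the error of any single prediction, which is the intuition behind using aggregation to limit error accumulation.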

E-MMDiT: Revisiting Multimodal Diffusion Transformer Design for Fast Image Synthesis under Limited Resources

Oct 31, 2025

Abstract: Diffusion models have shown strong capabilities in generating high-quality images from text prompts. However, these models often require large-scale training data and significant computational resources to train, or suffer from heavy architectures with high latency. To this end, we propose Efficient Multimodal Diffusion Transformer (E-MMDiT), an efficient and lightweight multimodal diffusion model with only 304M parameters for fast image synthesis requiring low training resources. We provide an easily reproducible baseline with competitive results. Our model for 512px generation, trained with only 25M public data in 1.5 days on a single node of 8 AMD MI300X GPUs, achieves 0.66 on GenEval and reaches 0.72 with post-training techniques such as GRPO. Our design philosophy centers on token reduction, as the computational cost scales significantly with the token count. We adopt a highly compressive visual tokenizer to produce a more compact representation and propose a novel multi-path compression module for further compression of tokens. To enhance our design, we introduce Position Reinforcement, which strengthens positional information to maintain spatial coherence, and Alternating Subregion Attention (ASA), which performs attention within subregions to further reduce computational cost. In addition, we propose AdaLN-affine, an efficient lightweight module for computing modulation parameters in transformer blocks. Our code is available at https://github.com/AMD-AGI/Nitro-E and we hope E-MMDiT serves as a strong and practical baseline for future research and contributes to the democratization of generative AI models.
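
To illustrate why token reduction and subregion attention lower cost, the sketch below counts query-key pairs in self-attention, which grow quadratically with the token count. It is a back-of-the-envelope illustration only: the function name `attention_pairs` and the token counts are hypothetical and do not come from the E-MMDiT code or paper.

```python
def attention_pairs(num_tokens, num_subregions=1):
    """Number of query-key pairs scored by self-attention.

    With num_subregions=1 this is full attention (num_tokens ** 2).
    With more subregions, tokens only attend within their own subregion,
    assuming the tokens split evenly across subregions.
    """
    if num_subregions < 1 or num_tokens % num_subregions != 0:
        raise ValueError("num_tokens must split evenly into subregions")
    per_region = num_tokens // num_subregions
    return num_subregions * per_region * per_region


if __name__ == "__main__":
    print(attention_pairs(1024))      # full attention over 1024 tokens
    print(attention_pairs(256))       # after a 4x token reduction
    print(attention_pairs(1024, 4))   # 1024 tokens in 4 subregions
```

Under these assumptions, cutting the token count by 4x cuts the pair count by 16x, while restricting attention to 4 equal subregions cuts it by 4x.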

Multimodal Spiking Neural Network for Space Robotic Manipulation

Aug 10, 2025

Abstract: This paper presents a multimodal control framework based on spiking neural networks (SNNs) for robotic arms aboard space stations. It is designed to cope with the constraints of limited onboard resources while enabling autonomous manipulation and material transfer in space operations. By combining geometric states with tactile and semantic information, the framework strengthens environmental awareness and contributes to more robust control strategies. To guide the learning process progressively, a dual-channel, three-stage curriculum reinforcement learning (CRL) scheme is further integrated into the system. The framework was tested across a range of tasks including target approach, object grasping, and stable lifting with wall-mounted robotic arms, demonstrating reliable performance throughout. Experimental evaluations demonstrate that the proposed method consistently outperforms baseline approaches in both task success rate and energy efficiency. These findings highlight its suitability for real-world aerospace applications.

Language-Conditioned Open-Vocabulary Mobile Manipulation with Pretrained Models

Jul 23, 2025

Abstract: Open-vocabulary mobile manipulation (OVMM), which involves handling novel and unseen objects across different workspaces, remains a significant challenge for real-world robotic applications. In this paper, we propose a novel Language-conditioned Open-Vocabulary Mobile Manipulation framework, named LOVMM, incorporating a large language model (LLM) and a vision-language model (VLM) to tackle various mobile manipulation tasks in household environments. Our approach is capable of solving various OVMM tasks with free-form natural language instructions (e.g., "toss the food boxes on the office room desk to the trash bin in the corner" and "pack the bottles from the bed to the box in the guestroom"). Extensive experiments simulated in complex household environments show strong zero-shot generalization and multi-task learning abilities of LOVMM. Moreover, our approach can also generalize to multiple tabletop manipulation tasks and achieve better success rates compared to other state-of-the-art methods.

Megrez2 Technical Report

Jul 23, 2025

Abstract: We present Megrez2, a novel lightweight and high-performance language model architecture optimized for device-native deployment. Megrez2 introduces a novel cross-layer expert sharing mechanism, which significantly reduces total parameter count by reusing expert modules across adjacent transformer layers while maintaining most of the model's capacity. It also incorporates pre-gated routing, enabling memory-efficient expert loading and faster inference. As the first instantiation of the Megrez2 architecture, we introduce the Megrez2-Preview model, which is pre-trained on a 5-trillion-token corpus and further enhanced through supervised fine-tuning and reinforcement learning with verifiable rewards. With only 3B activated and 7.5B stored parameters, Megrez2-Preview demonstrates competitive or superior performance compared to larger models on a wide range of tasks, including language understanding, instruction following, mathematical reasoning, and code generation. These results highlight the effectiveness of the Megrez2 architecture in achieving a balance between accuracy, efficiency, and deployability, making it a strong candidate for real-world, resource-constrained applications.
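
As a rough illustration of how reusing experts across adjacent layers shrinks the stored parameter count, the sketch below counts expert parameters when every group of adjacent layers shares one set of experts. All names and numbers here (`stored_expert_params`, `share_group`, the layer and expert counts) are hypothetical; they are not the Megrez2 configuration and do not reproduce the 3B or 7.5B figures.

```python
def stored_expert_params(num_layers, experts_per_layer, params_per_expert,
                         share_group=1):
    """Expert parameters that must be stored.

    share_group: number of adjacent layers that reuse one set of experts
                 (1 means no sharing). Assumes layers divide evenly into groups.
    """
    if share_group < 1 or num_layers % share_group != 0:
        raise ValueError("num_layers must be a multiple of share_group")
    unique_expert_sets = num_layers // share_group
    return unique_expert_sets * experts_per_layer * params_per_expert


if __name__ == "__main__":
    baseline = stored_expert_params(12, 8, 1000)
    shared = stored_expert_params(12, 8, 1000, share_group=3)
    print(baseline, shared)
```

In this toy setting, sharing across groups of three layers stores one third of the expert parameters, while each layer still routes over the same number of experts.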

AMD-Hummingbird: Towards an Efficient Text-to-Video Model

Mar 25, 2025

Abstract: Text-to-Video (T2V) generation has attracted significant attention for its ability to synthesize realistic videos from textual descriptions. However, existing models struggle to balance computational efficiency and high visual quality, particularly on resource-limited devices, e.g., iGPUs and mobile phones. Most prior work prioritizes visual fidelity while overlooking the need for smaller, more efficient models suitable for real-world deployment. To address this challenge, we propose a lightweight T2V framework, termed Hummingbird, which prunes existing models and enhances visual quality through visual feedback learning. Our approach reduces the size of the U-Net from 1.4 billion to 0.7 billion parameters, significantly improving efficiency while preserving high-quality video generation. Additionally, we introduce a novel data processing pipeline that leverages Large Language Models (LLMs) and Video Quality Assessment (VQA) models to enhance the quality of both text prompts and video data. To support user-driven training and style customization, we publicly release the full training code, including data processing and model training. Extensive experiments show that our method achieves a 31X speedup compared to state-of-the-art models such as VideoCrafter2, while also attaining the highest overall score on VBench. Moreover, our method supports the generation of videos with up to 26 frames, addressing the limitations of existing U-Net-based methods in long video generation. Notably, the entire training process requires only four GPUs, yet delivers performance competitive with existing leading methods. Hummingbird presents a practical and efficient solution for T2V generation, combining high performance, scalability, and flexibility for real-world applications.

LLM-driven Effective Knowledge Tracing by Integrating Dual-channel Difficulty

Feb 27, 2025

Abstract: Knowledge Tracing (KT) is a fundamental technology in intelligent tutoring systems used to simulate changes in students' knowledge state during learning, track personalized knowledge mastery, and predict performance. However, current KT models face three major challenges: (1) when encountering new questions, models face cold-start problems due to sparse interaction records, making precise modeling difficult; (2) traditional models use only historical interaction records for student personalization modeling and cannot accurately track individual mastery levels, resulting in unclear personalized modeling; (3) the decision-making process is opaque to educators, making it challenging for them to understand model judgments. To address these challenges, we propose a novel Dual-channel Difficulty-aware Knowledge Tracing (DDKT) framework that utilizes Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) for subjective difficulty assessment, while integrating difficulty bias-aware algorithms and student mastery algorithms for precise difficulty measurement. Our framework introduces three key innovations: (1) Difficulty Balance Perception Sequence (DBPS): students' subjective perceptions combined with objective difficulty, measuring gaps between LLM-assessed difficulty, mathematical-statistical difficulty, and students' subjective perceived difficulty through attention mechanisms; (2) Difficulty Mastery Ratio (DMR): precise modeling of student mastery levels through different difficulty zones; (3) Knowledge State Update Mechanism: implementing personalized knowledge acquisition through gated networks and updating student knowledge state. Experimental results on two real-world datasets show our method consistently outperforms nine baseline models, improving AUC metrics by 2% to 10% while effectively addressing cold-start problems and enhancing model interpretability.

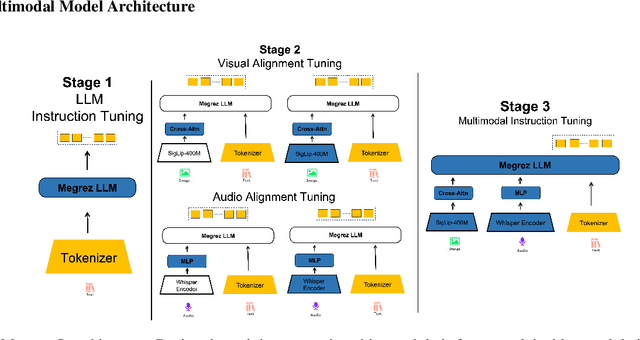

Megrez-Omni Technical Report

Feb 19, 2025

Abstract: In this work, we present the Megrez models, comprising a language model (Megrez-3B-Instruct) and a multimodal model (Megrez-3B-Omni). These models are designed to deliver fast inference, compactness, and robust edge-side intelligence through a software-hardware co-design approach. Megrez-3B-Instruct offers several advantages, including high accuracy, high speed, ease of use, and a wide range of applications. Building on Megrez-3B-Instruct, Megrez-3B-Omni is an on-device multimodal understanding LLM that supports image, text, and audio analysis. It achieves state-of-the-art accuracy across all three modalities and demonstrates strong versatility and robustness, setting a new benchmark for multimodal AI models.

Edit as You See: Image-guided Video Editing via Masked Motion Modeling

Jan 08, 2025

Abstract: Recent advancements in diffusion models have significantly facilitated text-guided video editing. However, there is a relative scarcity of research on image-guided video editing, a method that empowers users to edit videos by merely indicating a target object in the initial frame and providing an RGB image as reference, without relying on text prompts. In this paper, we propose a novel Image-guided Video Editing Diffusion model, termed IVEDiff. IVEDiff is built on top of image editing models and is equipped with learnable motion modules to maintain the temporal consistency of the edited video. Inspired by self-supervised learning concepts, we introduce a masked motion modeling fine-tuning strategy that strengthens the motion module's ability to capture inter-frame motion dynamics, while preserving the base image editing model's ability to model intra-frame semantic correlations. Moreover, an optical-flow-guided motion reference network is proposed to ensure the accurate propagation of information between edited video frames, alleviating the misleading effects of invalid information. We also construct a benchmark to facilitate further research. Comprehensive experiments demonstrate that our method is able to generate temporally smooth, high-quality edited videos while robustly handling various editing objects.

ReNeg: Learning Negative Embedding with Reward Guidance

Dec 27, 2024

Abstract: In text-to-image (T2I) generation applications, negative embeddings have proven to be a simple yet effective approach for enhancing generation quality. Typically, these negative embeddings are derived from user-defined negative prompts, which, while being functional, are not necessarily optimal. In this paper, we introduce ReNeg, an end-to-end method designed to learn improved Negative embeddings guided by a Reward model. We employ a reward feedback learning framework and integrate classifier-free guidance (CFG) into the training process, which was previously utilized only during inference, thus enabling the effective learning of negative embeddings. We also propose two strategies for learning both global and per-sample negative embeddings. Extensive experiments show that the learned negative embedding significantly outperforms null-text and handcrafted counterparts, achieving substantial improvements in human preference alignment. Additionally, the negative embedding learned within the same text embedding space exhibits strong generalization capabilities. For example, using the same CLIP text encoder, the negative embedding learned on SD1.5 can be seamlessly transferred to text-to-image or even text-to-video models such as ControlNet, ZeroScope, and VideoCrafter2, resulting in consistent performance improvements across the board.
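
For readers unfamiliar with where a negative embedding enters generation, the sketch below shows the standard classifier-free guidance combination step, with the noise prediction conditioned on the negative embedding taking the place of the usual null-text branch. It is a generic illustration, not ReNeg's training code; the function name `cfg_combine` and the toy values are hypothetical.

```python
def cfg_combine(eps_cond, eps_neg, guidance_scale):
    """Standard classifier-free guidance combination.

    eps_cond: noise prediction conditioned on the text prompt.
    eps_neg:  noise prediction conditioned on the negative (or null) embedding.
    Returns eps_neg + guidance_scale * (eps_cond - eps_neg), element-wise.
    """
    if len(eps_cond) != len(eps_neg):
        raise ValueError("predictions must have the same length")
    return [n + guidance_scale * (c - n) for c, n in zip(eps_cond, eps_neg)]


if __name__ == "__main__":
    # Toy 2-element "noise predictions"; real ones are image-shaped tensors.
    print(cfg_combine([1.0, 2.0], [0.0, 1.0], guidance_scale=2.0))
```

Because the output moves away from `eps_neg` as the scale grows, the choice of negative embedding directly shapes the guided prediction, which is why learning it during training rather than hand-writing it can matter.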
