Bei Liu

Long-Form Video-Language Pre-Training with Multimodal Temporal Contrastive Learning

Oct 12, 2022

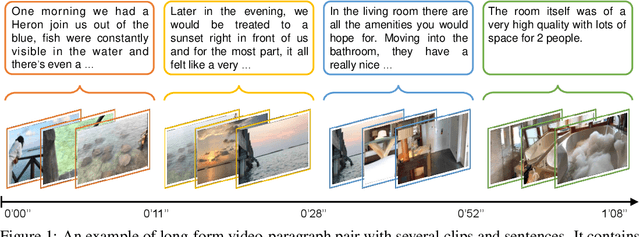

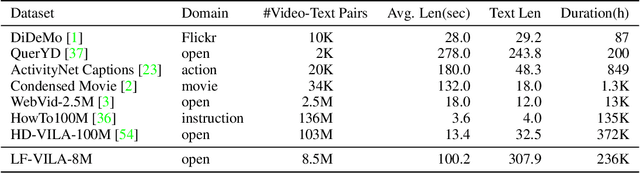

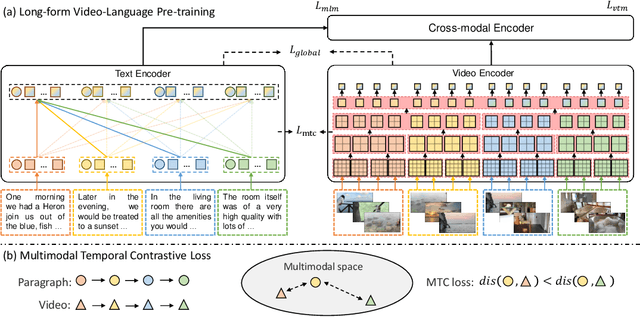

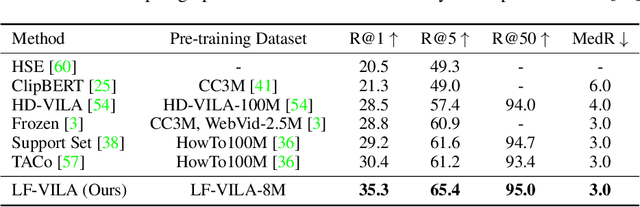

Abstract: Large-scale video-language pre-training has shown significant improvement in video-language understanding tasks. Previous studies of video-language pre-training mainly focus on short-form videos (i.e., within 30 seconds) and sentences, leaving long-form video-language pre-training rarely explored. Directly learning representations from long-form videos and language may benefit many long-form video-language understanding tasks. However, it is challenging due to the difficulty of modeling long-range relationships and the heavy computational burden caused by more frames. In this paper, we introduce a Long-Form VIdeo-LAnguage pre-training model (LF-VILA) and train it on a large-scale long-form video and paragraph dataset constructed from an existing public dataset. To effectively capture the rich temporal dynamics and to better align video and language in an efficient end-to-end manner, we introduce two novel designs in our LF-VILA model. First, we propose a Multimodal Temporal Contrastive (MTC) loss to learn the temporal relation across different modalities by encouraging fine-grained alignment between long-form videos and paragraphs. Second, we propose a Hierarchical Temporal Window Attention (HTWA) mechanism to effectively capture long-range dependency while reducing computational cost in the Transformer. We fine-tune the pre-trained LF-VILA model on seven downstream long-form video-language understanding tasks of paragraph-to-video retrieval and long-form video question-answering, and achieve new state-of-the-art performance. Specifically, our model achieves relative improvements of 16.1% on the ActivityNet paragraph-to-video retrieval task and 2.4% on the How2QA task. We release our code, dataset, and pre-trained models at https://github.com/microsoft/XPretrain.

CLIP-ViP: Adapting Pre-trained Image-Text Model to Video-Language Representation Alignment

Sep 23, 2022

Abstract: Pre-trained image-text models, like CLIP, have demonstrated the strong power of vision-language representations learned from a large scale of web-collected image-text data. In light of the well-learned visual features, some existing works transfer image representations to the video domain and achieve good results. However, how to utilize an image-language pre-trained model (e.g., CLIP) for video-language pre-training (post-pretraining) is still underexplored. In this paper, we investigate two questions: 1) what are the factors hindering post-pretraining CLIP from further improving performance on video-language tasks? and 2) how can the impact of these factors be mitigated? Through a series of comparative experiments and analyses, we find that the data scale and the domain gap between language sources have great impacts. Motivated by these findings, we propose an Omnisource Cross-modal Learning method equipped with a Video Proxy mechanism on the basis of CLIP, namely CLIP-ViP. Extensive results show that our approach improves the performance of CLIP on video-text retrieval by a large margin. Our model also achieves SOTA results on a variety of datasets, including MSR-VTT, DiDeMo, LSMDC, and ActivityNet. We will release our code and pre-trained CLIP-ViP models at https://github.com/microsoft/XPretrain/tree/main/CLIP-ViP.

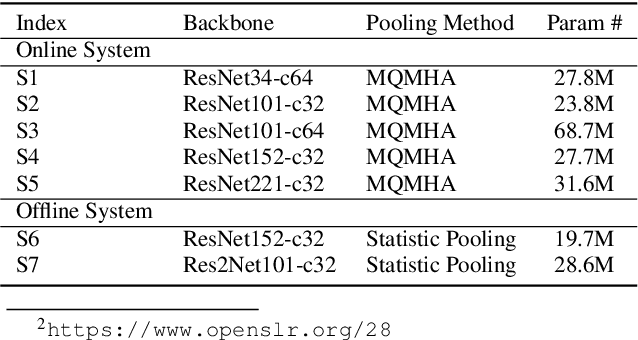

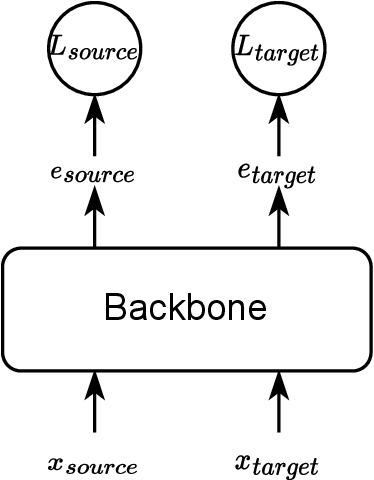

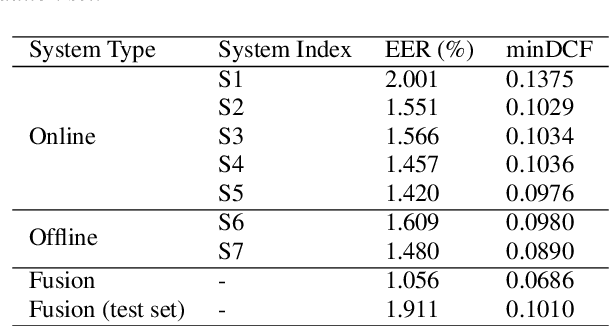

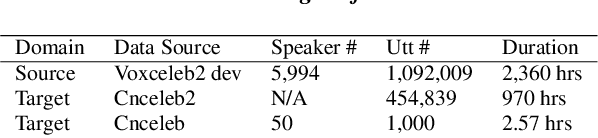

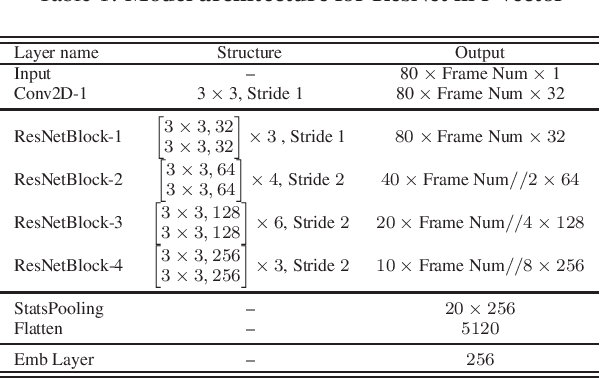

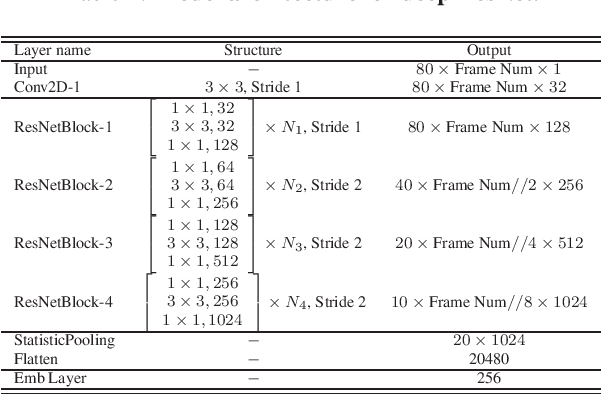

SJTU-AISPEECH System for VoxCeleb Speaker Recognition Challenge 2022

Sep 20, 2022

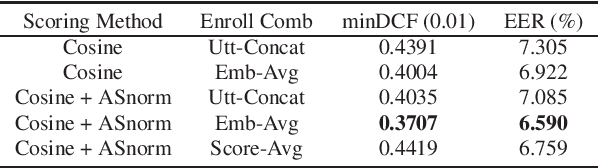

Abstract: This report describes the SJTU-AISPEECH system for the VoxCeleb Speaker Recognition Challenge 2022. For track1, we implemented two kinds of systems, the online system and the offline system. Different ResNet-based backbones and loss functions are explored. Our final fusion system achieved 3rd place in track1. For track3, we implemented statistic adaptation and joint-training-based domain adaptation. In the joint-training-based domain adaptation, we jointly trained on the source and target domain datasets with different training objectives to perform the domain adaptation. We explored two different training objectives for target domain data: a self-supervised angular prototypical loss and a semi-supervised classification loss with estimated pseudo labels. In addition, we used the dynamic loss-gate and label correction (DLG-LC) strategy to improve the quality of pseudo labels when the target domain objective is a classification loss. Our final fusion system achieved 4th place in track3 (very close to 3rd place, with a relative gap of less than 1%).

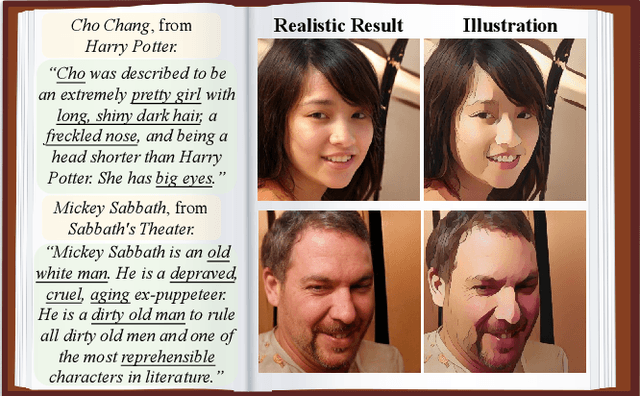

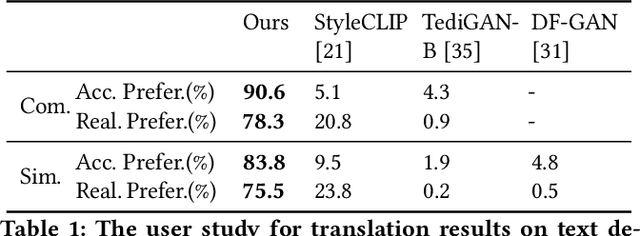

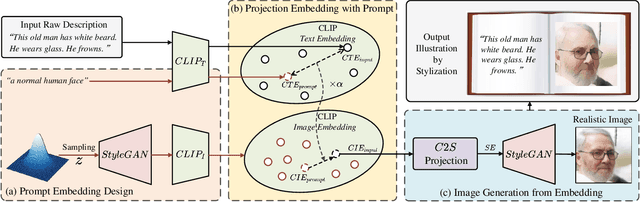

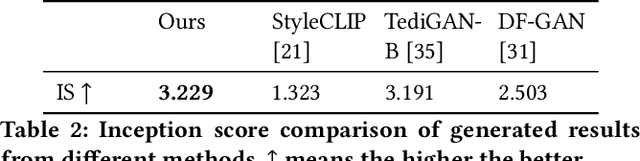

AI Illustrator: Translating Raw Descriptions into Images by Prompt-based Cross-Modal Generation

Sep 08, 2022

Abstract: AI illustrator aims to automatically design visually appealing images for books to provoke rich thoughts and emotions. To achieve this goal, we propose a framework for translating raw descriptions with complex semantics into semantically corresponding images. The main challenge lies in the complexity of the semantics of raw descriptions, which may be hard to visualize (e.g., "gloomy" or "Asian"). Existing methods usually struggle to handle such descriptions. To address this issue, we propose a Prompt-based Cross-Modal Generation Framework (PCM-Frame) to leverage two powerful pre-trained models, CLIP and StyleGAN. Our framework consists of two components: a projection module from Text Embeddings to Image Embeddings based on prompts, and an adapted image generation module built on StyleGAN which takes Image Embeddings as inputs and is trained by combined semantic consistency losses. To bridge the gap between realistic images and illustration designs, we further adopt a stylization model as post-processing in our framework for better visual effects. Benefiting from the pre-trained models, our method can handle complex descriptions and does not require external paired data for training. Furthermore, we have built a benchmark that consists of 200 raw descriptions. We conduct a user study to demonstrate our superiority over the competing methods with complicated texts. We release our code at https://github.com/researchmm/AI_Illustrator.

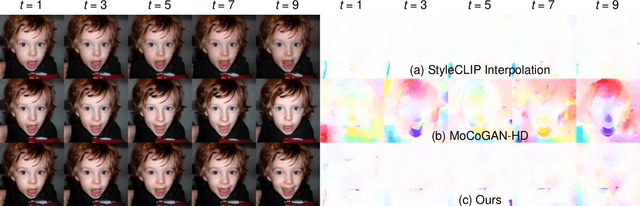

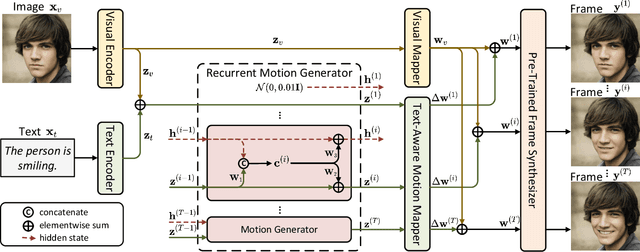

Language-Guided Face Animation by Recurrent StyleGAN-based Generator

Aug 11, 2022

Abstract: Recent works on language-guided image manipulation have shown the great power of language in providing rich semantics, especially for face images. However, the other natural information in language, motion, is less explored. In this paper, we leverage the motion information and study a novel task, language-guided face animation, that aims to animate a static face image with the help of language. To better utilize both semantics and motions from language, we propose a simple yet effective framework. Specifically, we propose a recurrent motion generator to extract a series of semantic and motion information from the language and feed it along with visual information to a pre-trained StyleGAN to generate high-quality frames. To optimize the proposed framework, three carefully designed loss functions are proposed: a regularization loss to keep the face identity, a path length regularization loss to ensure motion smoothness, and a contrastive loss to enable video synthesis with various language guidance in one single model. Extensive experiments with both qualitative and quantitative evaluations on diverse domains (e.g., human face, anime face, and dog face) demonstrate the superiority of our model in generating high-quality and realistic videos from one still image with the guidance of language. Code will be available at https://github.com/TiankaiHang/language-guided-animation.git.

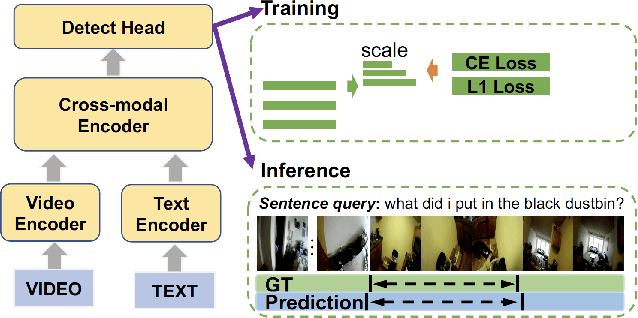

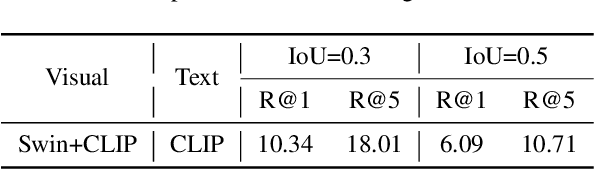

Exploring Anchor-based Detection for Ego4D Natural Language Query

Aug 10, 2022

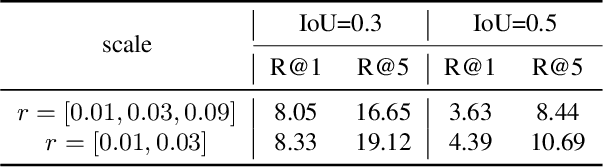

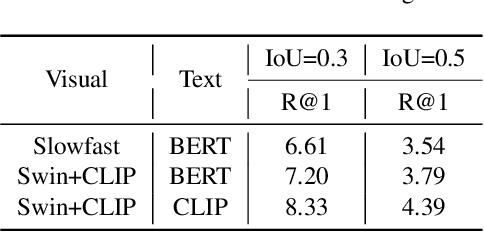

Abstract: In this paper we provide the technical report for the Ego4D natural language query challenge at CVPR 2022. The natural language query task is challenging because it requires a comprehensive understanding of video content. Most previous works address this task on third-person view datasets, while little research interest has so far been placed on the ego-centric view. Although great progress has been made, we notice that previous works cannot adapt well to ego-centric view datasets, e.g., Ego4D, mainly for two reasons: 1) most queries in Ego4D have an excessively small temporal duration (e.g., less than 5 seconds); 2) queries in Ego4D require a much more complex understanding of long-term temporal order in videos. Considering these, we propose our solution to this challenge to address the above issues.

The SJTU X-LANCE Lab System for CNSRC 2022

Jun 23, 2022

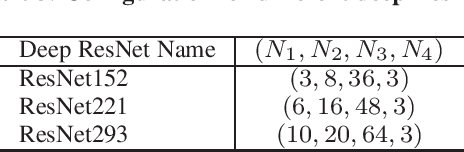

Abstract: This technical report describes the SJTU X-LANCE Lab system for the three tracks in CNSRC 2022. In this challenge, we explored the speaker embedding modeling ability of deep ResNet (Deeper r-vector). All the systems are trained only on the CN-Celeb training set and we use the same systems for the three tracks in CNSRC 2022. Our system ranks first in the fixed track of the speaker verification task. Our best single system and fusion system achieve 0.3164 and 0.2975 minDCF respectively. In addition, we submit the result of ResNet221 to the speaker retrieval track and achieve 0.4626 mAP.

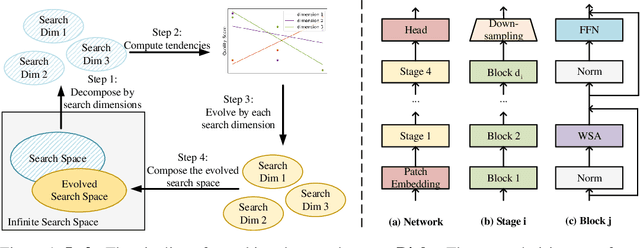

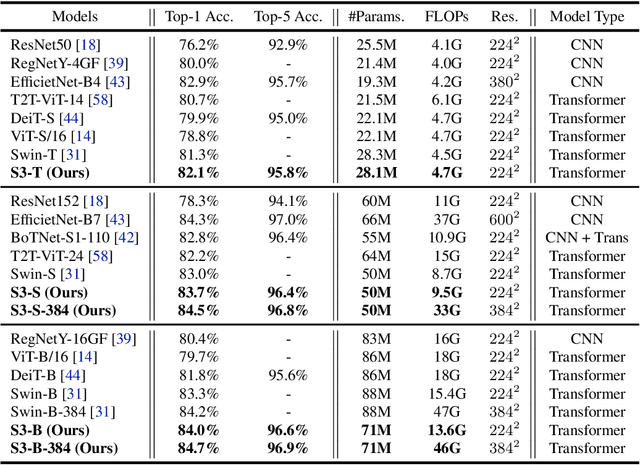

Searching the Search Space of Vision Transformer

Nov 29, 2021

Abstract: Vision Transformer has shown great visual representation power in substantial vision tasks such as recognition and detection, and has thus been attracting fast-growing efforts on manually designing more effective architectures. In this paper, we propose to use neural architecture search to automate this process, by searching not only the architecture but also the search space. The central idea is to gradually evolve different search dimensions guided by their E-T Error computed using a weight-sharing supernet. Moreover, we provide design guidelines for general vision transformers with extensive analysis according to the space searching process, which could promote the understanding of vision transformers. Remarkably, the searched models, named S3 (short for Searching the Search Space), from the searched space achieve superior performance to recently proposed models, such as Swin, DeiT and ViT, when evaluated on ImageNet. The effectiveness of S3 is also illustrated on object detection, semantic segmentation and visual question answering, demonstrating its generality to downstream vision and vision-language tasks. Code and models will be available at https://github.com/microsoft/Cream.

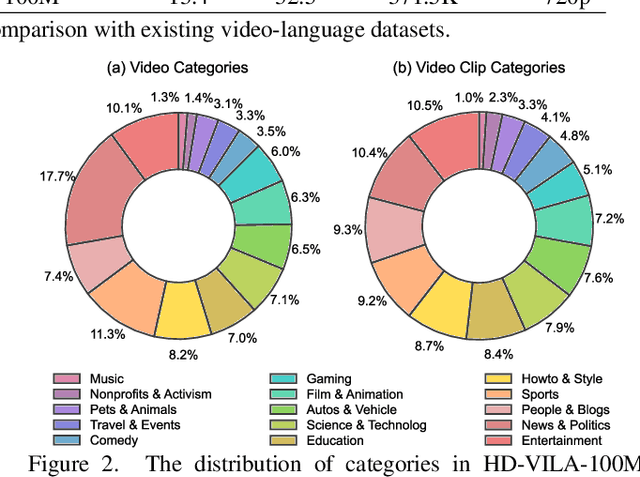

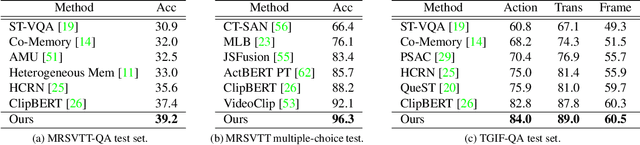

Advancing High-Resolution Video-Language Representation with Large-Scale Video Transcriptions

Nov 19, 2021

Abstract: We study joint video and language (VL) pre-training to enable cross-modality learning and benefit plentiful downstream VL tasks. Existing works either extract low-quality video features or learn limited text embeddings, while neglecting that high-resolution videos and diversified semantics can significantly improve cross-modality learning. In this paper, we propose a novel High-resolution and Diversified VIdeo-LAnguage pre-training model (HD-VILA) for many visual tasks. In particular, we collect a large dataset with two distinct properties: 1) the first high-resolution dataset including 371.5k hours of 720p videos, and 2) the most diversified dataset covering 15 popular YouTube categories. To enable VL pre-training, we jointly optimize the HD-VILA model by a hybrid Transformer that learns rich spatiotemporal features, and a multimodal Transformer that enforces interactions of the learned video features with diversified texts. Our pre-training model achieves new state-of-the-art results in 10 VL understanding tasks and 2 more novel text-to-visual generation tasks. For example, we outperform SOTA models with relative increases of 38.5% R@1 on the zero-shot MSR-VTT text-to-video retrieval task, and 53.6% on the high-resolution dataset LSMDC. The learned VL embedding is also effective in generating visually pleasing and semantically relevant results in text-to-visual manipulation and super-resolution tasks.
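For readers unfamiliar with the retrieval numbers quoted in this abstract, the following is a minimal sketch of how Recall@K (R@K) and a relative increase are commonly computed. It is not taken from the HD-VILA code: the function names `recall_at_k` and `relative_increase`, the toy similarity matrix, and the example scores are all hypothetical, and the sketch assumes text query `i` has exactly one matching video, at index `i`.

```python
from typing import List


def recall_at_k(sim: List[List[float]], k: int) -> float:
    """Fraction of text queries whose matching video ranks in the top k.

    sim[i][j] is the similarity between text query i and video j.
    Assumption: the ground-truth video for query i is video i.
    """
    hits = 0
    for i, row in enumerate(sim):
        # Zero-based rank = number of videos scored strictly higher
        # than the ground-truth video.
        rank = sum(1 for score in row if score > row[i])
        if rank < k:
            hits += 1
    return hits / len(sim)


def relative_increase(new: float, baseline: float) -> float:
    """Relative increase of `new` over `baseline`, in percent."""
    return (new - baseline) / baseline * 100.0


# Toy 3x3 text-to-video similarity matrix (hypothetical values).
sim = [
    [0.9, 0.1, 0.3],  # query 0: correct video ranked first
    [0.2, 0.4, 0.8],  # query 1: correct video ranked second
    [0.5, 0.6, 0.7],  # query 2: correct video ranked first
]
r1 = recall_at_k(sim, 1)
r2 = recall_at_k(sim, 2)

# Hypothetical scores, not the paper's: a baseline R@1 of 10.0 and a
# new R@1 of 13.85 correspond to a relative increase of about 38.5%.
gain = relative_increase(13.85, 10.0)
```

A relative increase is measured against the baseline score, so it is not the same as the absolute difference in percentage points between the two R@1 values.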

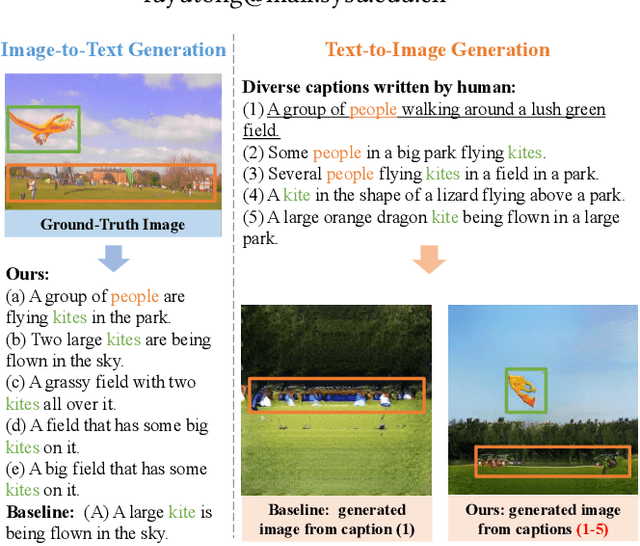

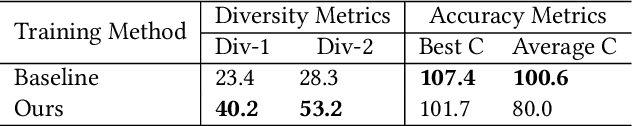

A Picture is Worth a Thousand Words: A Unified System for Diverse Captions and Rich Images Generation

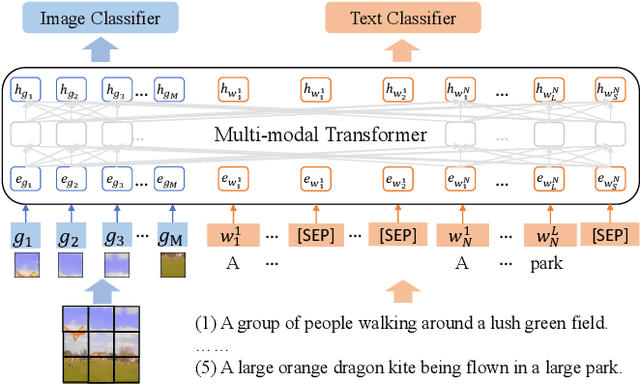

Oct 19, 2021

Abstract: A creative image-and-text generative AI system mimics humans' extraordinary abilities to provide users with diverse and comprehensive caption suggestions, as well as rich image creations. In this work, we demonstrate such an AI creation system to produce both diverse captions and rich images. When users imagine an image and associate it with multiple captions, our system paints a rich image to reflect all captions faithfully. Likewise, when users upload an image, our system depicts it with multiple diverse captions. We propose a unified multi-modal framework to achieve this goal. Specifically, our framework jointly models image-and-text representations with a Transformer network, which supports rich image creation by accepting multiple captions as input. We consider the relations among input captions to encourage diversity in training and adopt a non-autoregressive decoding strategy to enable real-time inference. Based on these, our system supports both diverse caption generation and rich image generation. Our code is available online.
