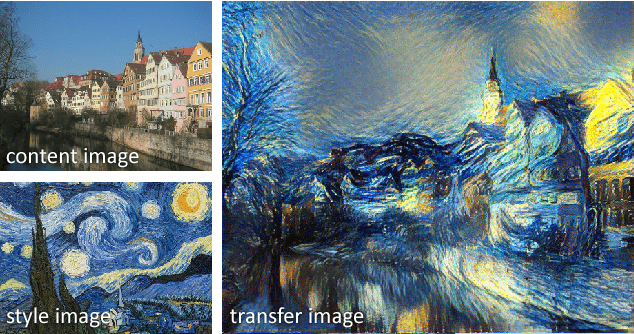

photo style transfer

Papers and Code

NTIRE 2021 Depth Guided Image Relighting Challenge

Apr 27, 2021

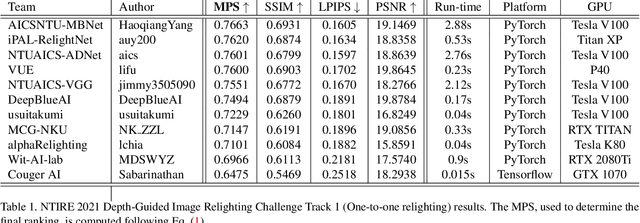

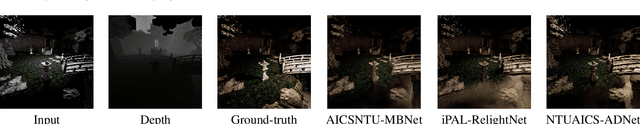

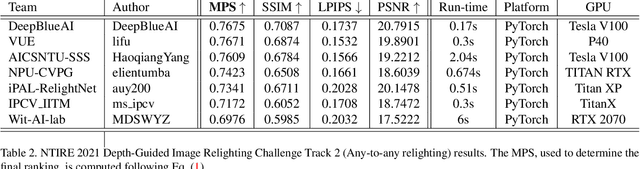

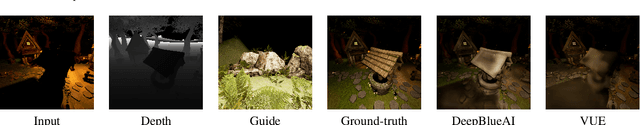

Image relighting is attracting increasing interest due to its various applications. From a research perspective, image relighting can be exploited both for image normalization in domain adaptation and for data augmentation. It also has multiple direct uses for photo montage and aesthetic enhancement. In this paper, we review the NTIRE 2021 depth guided image relighting challenge. We rely on the VIDIT dataset for each of our two challenge tracks, including depth information. The first track is on one-to-one relighting where the goal is to transform the illumination setup of an input image (color temperature and light source position) to the target illumination setup. In the second track, the any-to-any relighting challenge, the objective is to transform the illumination settings of the input image to match those of another guide image, similar to style transfer. In both tracks, participants were given depth information about the captured scenes. We had nearly 250 registered participants, leading to 18 confirmed team submissions in the final competition stage. The competitions, methods, and final results are presented in this paper.

* Code and data available on https://github.com/majedelhelou/VIDIT

AniGAN: Style-Guided Generative Adversarial Networks for Unsupervised Anime Face Generation

Feb 24, 2021

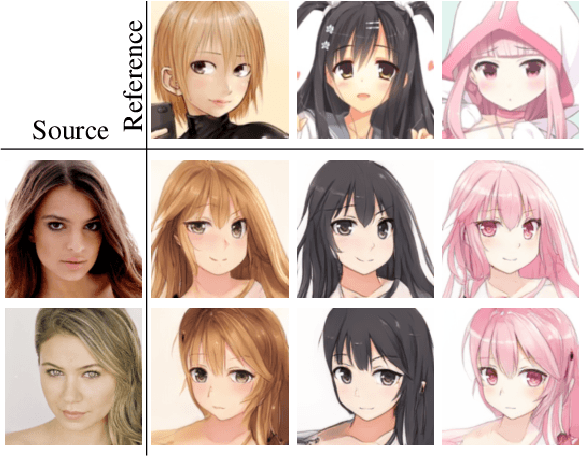

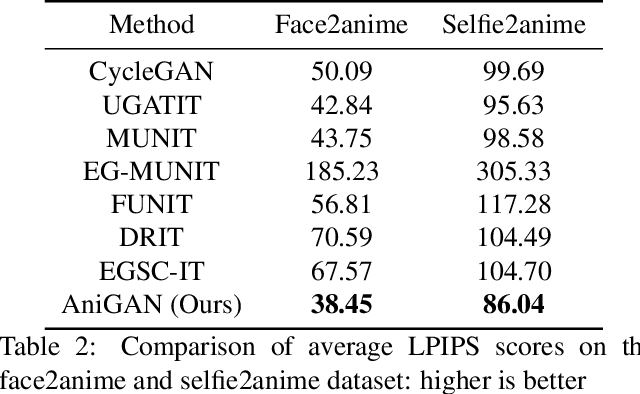

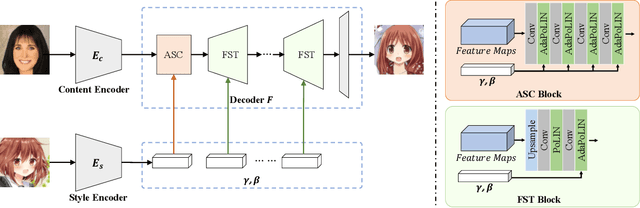

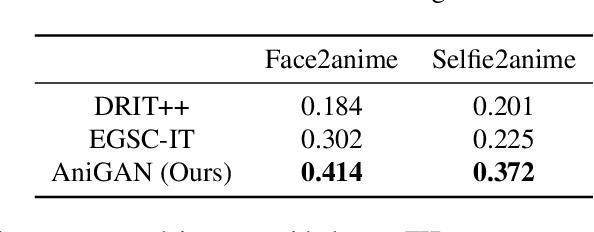

In this paper, we propose a novel framework to translate a portrait photo-face into an anime appearance. Our aim is to synthesize anime-faces which are style-consistent with a given reference anime-face. However, unlike typical translation tasks, such anime-face translation is challenging due to complex variations of appearances among anime-faces. Existing methods often fail to transfer the styles of reference anime-faces, or introduce noticeable artifacts/distortions in the local shapes of their generated faces. We propose AniGAN, a novel GAN-based translator that synthesizes high-quality anime-faces. Specifically, a new generator architecture is proposed to simultaneously transfer color/texture styles and transform local facial shapes into anime-like counterparts based on the style of a reference anime-face, while preserving the global structure of the source photo-face. We propose a double-branch discriminator to learn both domain-specific distributions and domain-shared distributions, helping generate visually pleasing anime-faces and effectively mitigate artifacts. Extensive experiments qualitatively and quantitatively demonstrate the superiority of our method over state-of-the-art methods.

TextStyleBrush: Transfer of Text Aesthetics from a Single Example

Jun 15, 2021

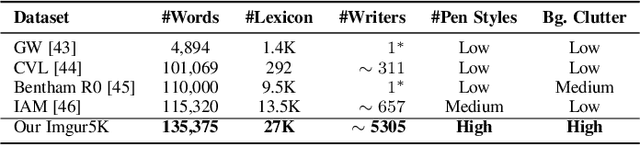

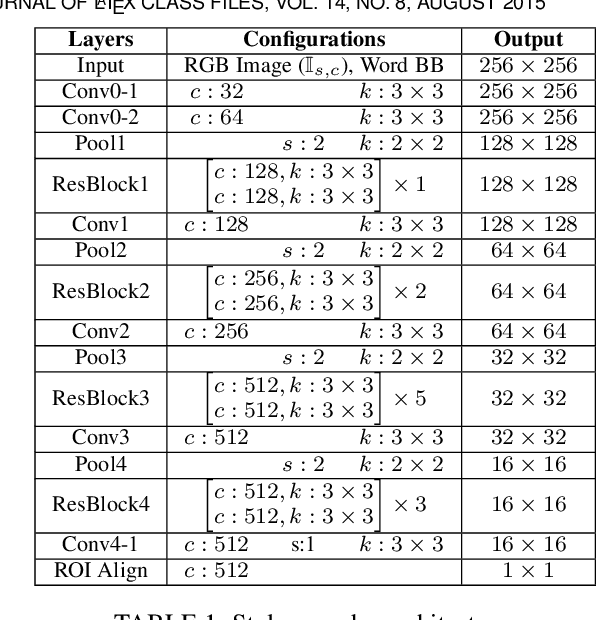

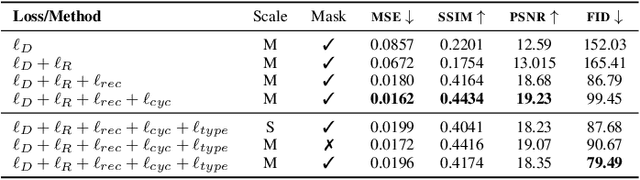

We present a novel approach for disentangling the content of a text image from all aspects of its appearance. The appearance representation we derive can then be applied to new content, for one-shot transfer of the source style to new content. We learn this disentanglement in a self-supervised manner. Our method processes entire word boxes, without requiring segmentation of text from background, per-character processing, or making assumptions on string lengths. We show results in different text domains which were previously handled by specialized methods, e.g., scene text, handwritten text. To these ends, we make a number of technical contributions: (1) We disentangle the style and content of a textual image into a non-parametric, fixed-dimensional vector. (2) We propose a novel approach inspired by StyleGAN but conditioned over the example style at different resolution and content. (3) We present novel self-supervised training criteria which preserve both source style and target content using a pre-trained font classifier and text recognizer. Finally, (4) we also introduce Imgur5K, a new challenging dataset for handwritten word images. We offer numerous qualitative photo-realistic results of our method. We further show that our method surpasses previous work in quantitative tests on scene text and handwriting datasets, as well as in a user study.

Automated Deep Photo Style Transfer

Jan 12, 2019

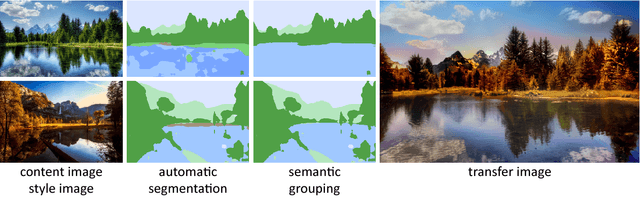

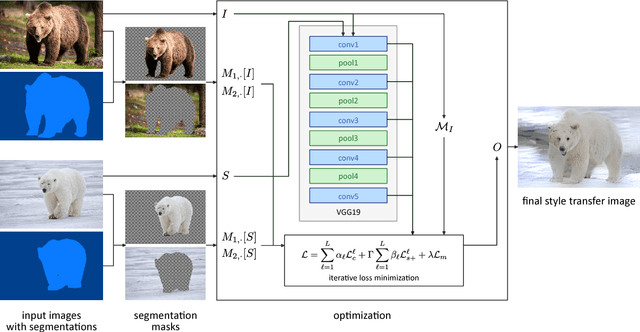

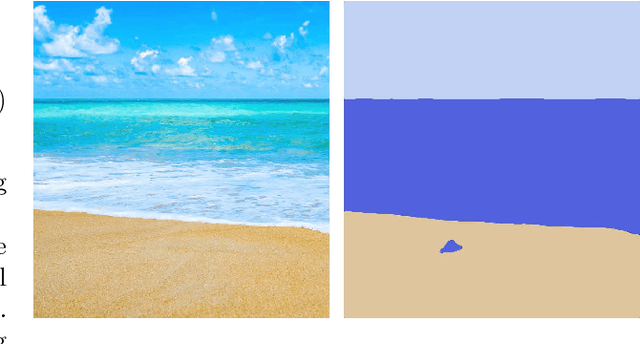

Photorealism is a complex concept that cannot easily be formulated mathematically. Deep Photo Style Transfer is an attempt to transfer the style of a reference image to a content image while preserving its photorealism. This is achieved by introducing a constraint that prevents distortions in the content image and by applying the style transfer independently for semantically different parts of the images. In addition, an automated segmentation process is presented that consists of a neural network based segmentation method followed by a semantic grouping step. To further improve the results a measure for image aesthetics is used and elaborated. If the content and the style image are sufficiently similar, the result images look very realistic. With the automation of the image segmentation the pipeline becomes completely independent from any user interaction, which allows for new applications.

Salienteye: Maximizing Engagement While Maintaining Artistic Style on Instagram Using Deep Neural Networks

Jun 13, 2020

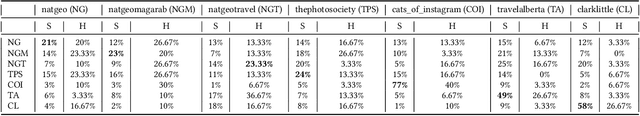

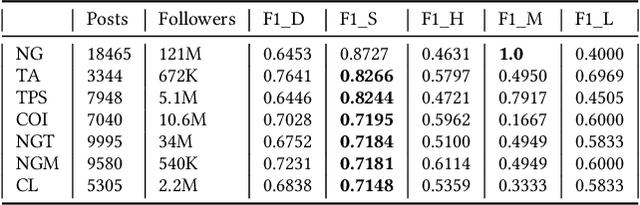

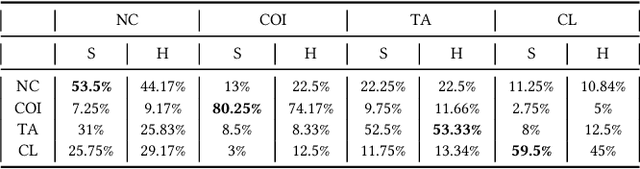

Instagram has become a great venue for amateur and professional photographers alike to showcase their work. It has, in other words, democratized photography. Generally, photographers take thousands of photos in a session, from which they pick a few to showcase their work on Instagram. Photographers trying to build a reputation on Instagram have to strike a balance between maximizing their followers' engagement with their photos, while also maintaining their artistic style. We used transfer learning to adapt Xception, which is a model for object recognition trained on the ImageNet dataset, to the task of engagement prediction and utilized Gram matrices generated from VGG19, another object recognition model trained on ImageNet, for the task of style similarity measurement on photos posted on Instagram. Our models can be trained on individual Instagram accounts to create personalized engagement prediction and style similarity models. Once trained on their accounts, users can have new photos sorted based on predicted engagement and style similarity to their previous work, thus enabling them to upload photos that not only have the potential to maximize engagement from their followers but also maintain their style of photography. We trained and validated our models on several Instagram accounts, showing it to be adept at both tasks, also outperforming several baseline models and human annotators.
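The Gram-matrix style comparison mentioned in the abstract above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the random arrays stand in for VGG19 feature maps, and the function names `gram_matrix` and `style_distance`, the normalization by spatial size, and the mean-squared-difference score are all assumptions made for the example.

```python
import numpy as np

def gram_matrix(features):
    """Return the (C, C) Gram matrix of a (C, H, W) feature map.

    Entry (i, j) is the spatially averaged product of channels i and j,
    so it records which channels co-activate, not where they activate.
    """
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (h * w)

def style_distance(feat_a, feat_b):
    """Mean squared difference between two Gram matrices (assumed metric)."""
    ga, gb = gram_matrix(feat_a), gram_matrix(feat_b)
    return float(np.mean((ga - gb) ** 2))

# Random stand-ins for feature maps of two photos (hypothetical data).
rng = np.random.default_rng(0)
photo_a = rng.standard_normal((8, 16, 16))
photo_b = rng.standard_normal((8, 16, 16))

print(style_distance(photo_a, photo_a))  # identical features give distance 0
print(style_distance(photo_a, photo_b))  # different features give a positive distance
```

Because the Gram matrix sums over spatial positions, it discards layout and keeps only channel co-occurrence statistics, which is why it is commonly used as a style descriptor; a lower distance to a user's earlier photos would then indicate a closer match in style.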

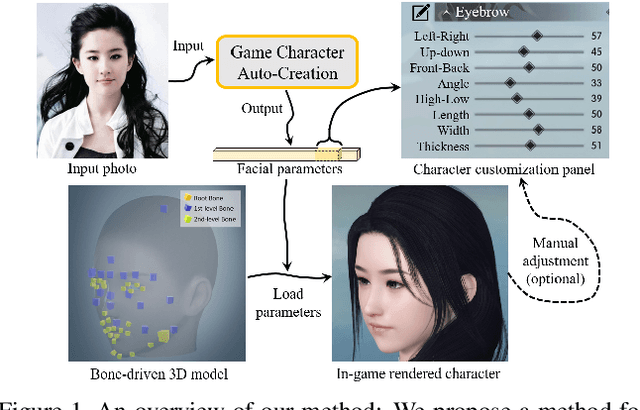

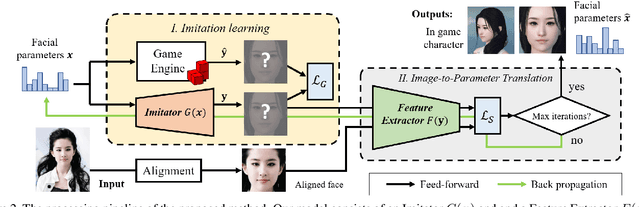

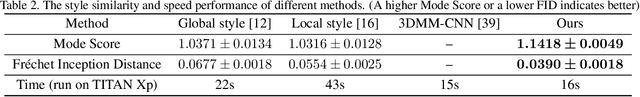

Fast and Robust Face-to-Parameter Translation for Game Character Auto-Creation

Aug 17, 2020

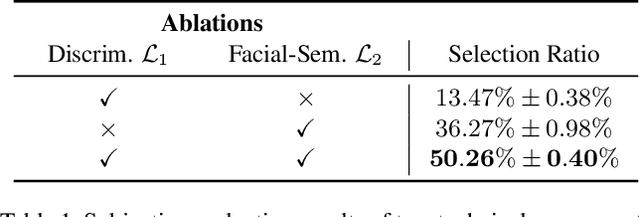

With the rapid development of Role-Playing Games (RPGs), players are now allowed to edit the facial appearance of their in-game characters according to their preferences rather than using default templates. This paper proposes a game character auto-creation framework that generates in-game characters according to a player's input face photo. Different from the previous methods that are designed based on neural style transfer or monocular 3D face reconstruction, we re-formulate the character auto-creation process from a different point of view: by predicting a large set of physically meaningful facial parameters under a self-supervised learning paradigm. Instead of updating facial parameters iteratively at the input end of the renderer as suggested by previous methods, which is time-consuming, we introduce a facial parameter translator so that the creation can be done efficiently through a single forward propagation from the face embeddings to parameters, with a considerable 1000x computational speedup. Despite its high efficiency, the interactivity is preserved in our method where users are allowed to optionally fine-tune the facial parameters on our creation according to their needs. Our approach also shows better robustness than previous methods, especially for those photos with head-pose variance. Comparison results and ablation analysis on seven public face verification datasets suggest the effectiveness of our method.

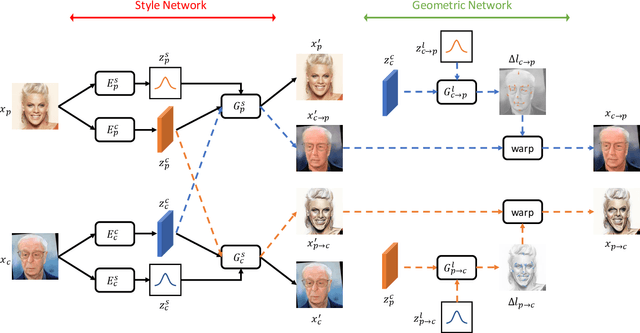

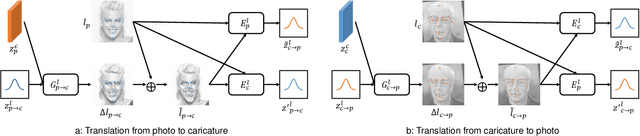

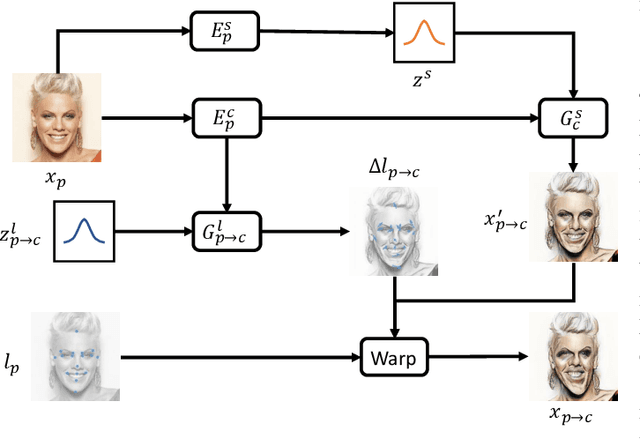

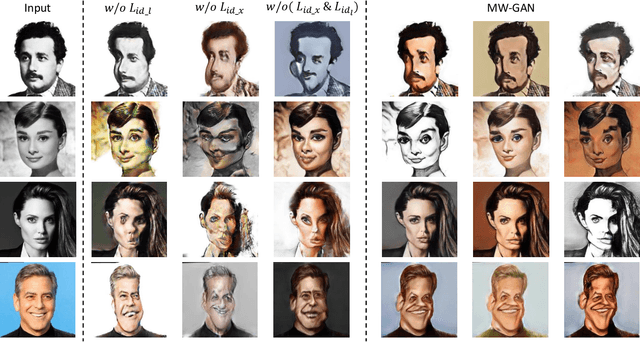

MW-GAN: Multi-Warping GAN for Caricature Generation with Multi-Style Geometric Exaggeration

Jan 07, 2020

Given an input face photo, the goal of caricature generation is to produce stylized, exaggerated caricatures that share the same identity as the photo. It requires simultaneous style transfer and shape exaggeration with rich diversity, while preserving the identity of the input. To address this challenging problem, we propose a novel framework called Multi-Warping GAN (MW-GAN), including a style network and a geometric network that are designed to conduct style transfer and geometric exaggeration respectively. We bridge the gap between the style and landmarks of an image with corresponding latent code spaces by a dual-way design, so as to generate caricatures with arbitrary styles and geometric exaggeration, which can be specified either through random sampling of latent code or from a given caricature sample. Besides, we apply identity preserving loss to both image space and landmark space, leading to a great improvement in quality of generated caricatures. Experiments show that caricatures generated by MW-GAN have better quality than existing methods.

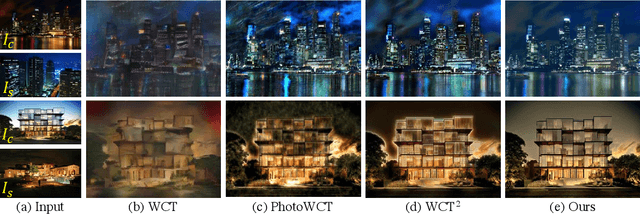

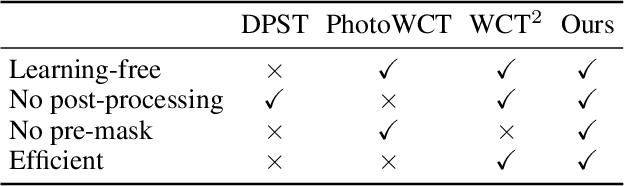

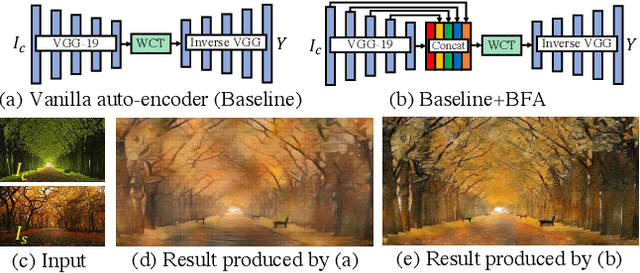

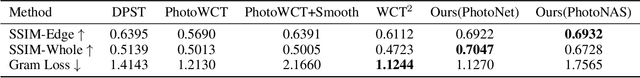

Ultrafast Photorealistic Style Transfer via Neural Architecture Search

Dec 05, 2019

The key challenge in photorealistic style transfer is that an algorithm should faithfully transfer the style of a reference photo to a content photo while the generated image should look like one captured by a camera. Although several photorealistic style transfer algorithms have been proposed, they need to rely on post- and/or pre-processing to make the generated images look photorealistic. If we disable the additional processing, these algorithms would fail to produce plausible photorealistic stylization in terms of detail preservation and photorealism. In this work, we propose an effective solution to these issues. Our method consists of a construction step (C-step) to build a photorealistic stylization network and a pruning step (P-step) for acceleration. In the C-step, we propose a dense auto-encoder named PhotoNet based on a carefully designed pre-analysis. PhotoNet integrates a feature aggregation module (BFA) and instance normalized skip links (INSL). To generate faithful stylization, we introduce multiple style transfer modules in the decoder and INSLs. PhotoNet significantly outperforms existing algorithms in terms of both efficiency and effectiveness. In the P-step, we adopt a neural architecture search method to accelerate PhotoNet. We propose an automatic network pruning framework in the manner of teacher-student learning for photorealistic stylization. The network architecture named PhotoNAS resulting from the search achieves significant acceleration over PhotoNet while keeping the stylization effects almost intact. We conduct extensive experiments on both image and video transfer. The results show that our method can produce favorable results while achieving 20-30 times acceleration in comparison with the existing state-of-the-art approaches. It is worth noting that the proposed algorithm accomplishes better performance without any pre- or post-processing.
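The abstract above does not describe how the instance normalized skip links are built, but the normalization they are named after is standard. The sketch below shows generic instance normalization applied to a feature map that a skip link might carry; the function name `instance_norm`, the `eps` value, and the toy input are assumptions for illustration and are not taken from PhotoNet.

```python
import numpy as np

def instance_norm(features, eps=1e-5):
    """Normalize each channel of a (C, H, W) feature map over its spatial
    positions to roughly zero mean and unit variance."""
    mean = features.mean(axis=(1, 2), keepdims=True)
    var = features.var(axis=(1, 2), keepdims=True)
    return (features - mean) / np.sqrt(var + eps)

# Hypothetical encoder features with a non-zero mean and non-unit scale.
rng = np.random.default_rng(0)
skip = 3.0 * rng.standard_normal((4, 8, 8)) + 5.0
normalized = instance_norm(skip)

print(normalized.mean(axis=(1, 2)))  # per-channel means, close to 0
print(normalized.std(axis=(1, 2)))   # per-channel standard deviations, close to 1
```

Removing per-channel mean and variance strips much of the image-specific contrast and color statistics from the features while leaving their spatial structure in place, which is one plausible reason to normalize what a skip connection passes from encoder to decoder.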

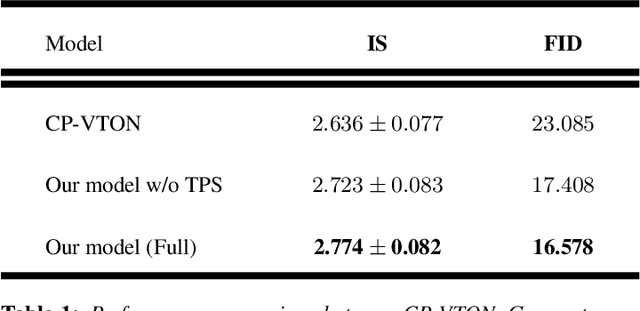

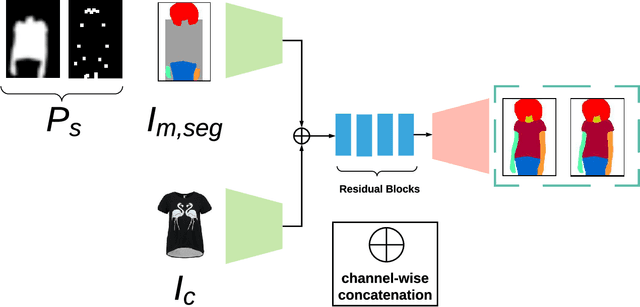

GarmentGAN: Photo-realistic Adversarial Fashion Transfer

Mar 04, 2020

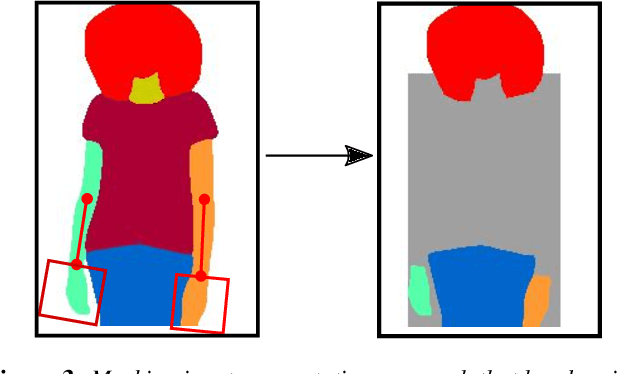

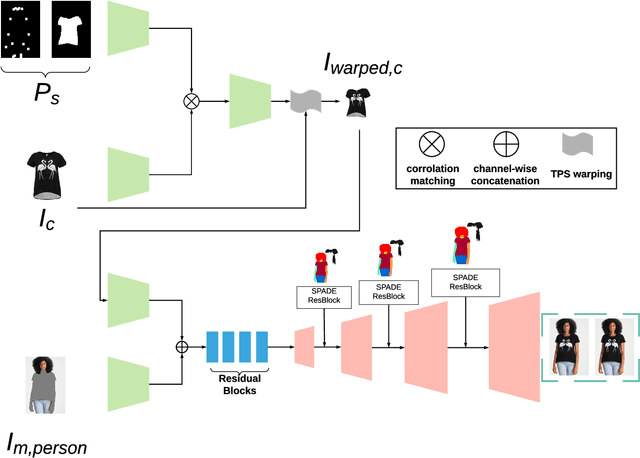

The garment transfer problem comprises two tasks: learning to separate a person's body (pose, shape, color) from their clothing (garment type, shape, style) and then generating new images of the wearer dressed in arbitrary garments. We present GarmentGAN, a new algorithm that performs image-based garment transfer through generative adversarial methods. The GarmentGAN framework allows users to virtually try-on items before purchase and generalizes to various apparel types. GarmentGAN requires as input only two images, namely, a picture of the target fashion item and an image containing the customer. The output is a synthetic image wherein the customer is wearing the target apparel. In order to make the generated image look photo-realistic, we employ the use of novel generative adversarial techniques. GarmentGAN improves on existing methods in the realism of generated imagery and solves various problems related to self-occlusions. Our proposed model incorporates additional information during training, utilizing both segmentation maps and body key-point information. We show qualitative and quantitative comparisons to several other networks to demonstrate the effectiveness of this technique.

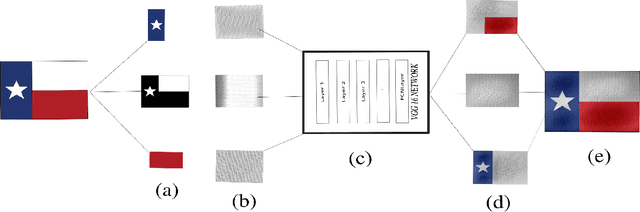

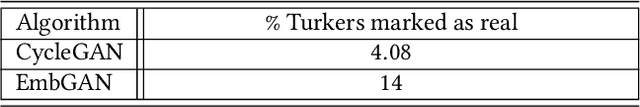

Generating Embroidery Patterns Using Image-to-Image Translation

Mar 05, 2020

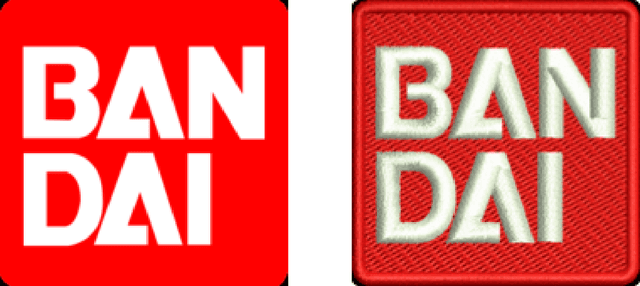

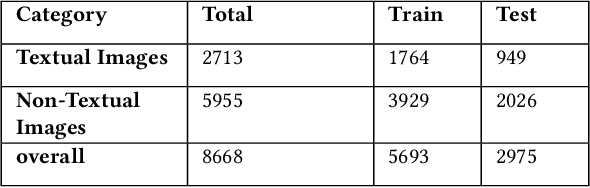

In many scenarios in computer vision, machine learning, and computer graphics, there is a requirement to learn the mapping from an image of one domain to an image of another domain, called image-to-image translation. Examples include style transfer, object transfiguration, visually altering the appearance of weather conditions in an image, changing the appearance of a day image into a night image or vice versa, and photo enhancement, to name a few. In this paper, we propose two machine learning techniques to solve the embroidery image-to-image translation. Our goal is to generate a preview image which looks similar to an embroidered image, from a user-uploaded image. Our techniques are modifications of two existing techniques, neural style transfer and the cycle-consistent generative adversarial network. Neural style transfer renders the semantic content of an image from one domain in the style of a different image in another domain, whereas a cycle-consistent generative adversarial network learns the mapping from an input image to an output image without any paired training data, and also learns a loss function to train this mapping. Furthermore, the techniques we propose are independent of any embroidery attributes, such as elevation of the image, light source, start and end points of a stitch, type of stitch used, fabric type, etc. Given the user image, our techniques can generate a preview image which looks similar to an embroidered image. We train and test our proposed techniques on an embroidery dataset which consists of simple 2D images. To do so, we prepare an unpaired embroidery dataset with more than 8000 user-uploaded images along with embroidered images. Empirical results show that these techniques successfully generate an approximate preview of an embroidered version of a user image, which can help users in decision making.
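The reason a cycle-consistent network can train without paired data is the cycle-consistency term, sketched below in its commonly used L1 form. This is an illustration of the general idea only, not the modified technique the paper proposes: the mappings `g` and `f` are toy functions standing in for the two generators, and all names are hypothetical.

```python
import numpy as np

def cycle_consistency_loss(x, y, g, f):
    """L1 cycle loss: mean|f(g(x)) - x| + mean|g(f(y)) - y|.

    g maps domain X to domain Y and f maps Y back to X. The loss is small
    when translating an image and translating it back recovers the input.
    """
    forward = np.mean(np.abs(f(g(x)) - x))
    backward = np.mean(np.abs(g(f(y)) - y))
    return float(forward + backward)

# Toy stand-ins for the generators (assumptions, not real networks).
g = lambda x: 2.0 * x + 1.0          # "photo -> embroidery"
f_good = lambda y: (y - 1.0) / 2.0   # exact inverse of g
f_bad = lambda y: y                  # ignores the structure of g

rng = np.random.default_rng(0)
photos = rng.standard_normal((4, 8, 8))       # unpaired samples from domain X
embroidery = rng.standard_normal((4, 8, 8))   # unpaired samples from domain Y

print(cycle_consistency_loss(photos, embroidery, g, f_good))
print(cycle_consistency_loss(photos, embroidery, g, f_bad))
```

Note that `photos` and `embroidery` are never compared with each other: each term only compares a sample with its own round trip, so no photo needs a matching embroidered counterpart. In a full model this term is combined with adversarial losses that push the translated images toward the target domain.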
