xBD

Papers and Code

DisasterInsight: A Multimodal Benchmark for Function-Aware and Grounded Disaster Assessment

Jan 26, 2026

Timely interpretation of satellite imagery is critical for disaster response, yet existing vision-language benchmarks for remote sensing largely focus on coarse labels and image-level recognition, overlooking the functional understanding and instruction robustness required in real humanitarian workflows. We introduce DisasterInsight, a multimodal benchmark designed to evaluate vision-language models (VLMs) on realistic disaster analysis tasks. DisasterInsight restructures the xBD dataset into approximately 112K building-centered instances and supports instruction-diverse evaluation across multiple tasks, including building-function classification, damage-level and disaster-type classification, counting, and structured report generation aligned with humanitarian assessment guidelines. To establish domain-adapted baselines, we propose DI-Chat, obtained by fine-tuning existing VLM backbones on disaster-specific instruction data using parameter-efficient Low-Rank Adaptation (LoRA). Extensive experiments on state-of-the-art generic and remote-sensing VLMs reveal substantial performance gaps across tasks, particularly in damage understanding and structured report generation. DI-Chat achieves significant improvements on damage-level and disaster-type classification as well as report generation quality, while building-function classification remains challenging for all evaluated models. DisasterInsight provides a unified benchmark for studying grounded multimodal reasoning in disaster imagery.
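As a rough illustration of why LoRA counts as parameter-efficient, the sketch below implements the standard LoRA update, y = W x + (alpha / r) · B (A x), with plain Python lists and compares trainable-parameter counts. The matrix sizes, rank, and scaling factor are illustrative assumptions; the abstract does not state the values used for DI-Chat, and the helper names are hypothetical.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha, rank):
    """Standard LoRA forward pass: frozen W plus a scaled low-rank update.

    W: d_out x d_in (frozen), A: rank x d_in, B: d_out x rank (both trainable).
    """
    base = matvec(W, x)                # frozen pretrained path
    low_rank = matvec(B, matvec(A, x))  # trainable low-rank path
    scale = alpha / rank
    return [b + scale * l for b, l in zip(base, low_rank)]


def lora_param_counts(d_out, d_in, rank):
    """Return (full fine-tuning, LoRA) trainable-parameter counts for one matrix."""
    return d_out * d_in, rank * (d_in + d_out)


# Hypothetical sizes: one 4096 x 4096 projection adapted with rank 8.
full, lora = lora_param_counts(4096, 4096, 8)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.4%}")
```

With B initialized to zeros, as is conventional for LoRA, the adapted layer starts out identical to the frozen backbone, so fine-tuning begins from the pretrained behavior.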

The Potential of Copernicus Satellites for Disaster Response: Retrieving Building Damage from Sentinel-1 and Sentinel-2

Nov 07, 2025

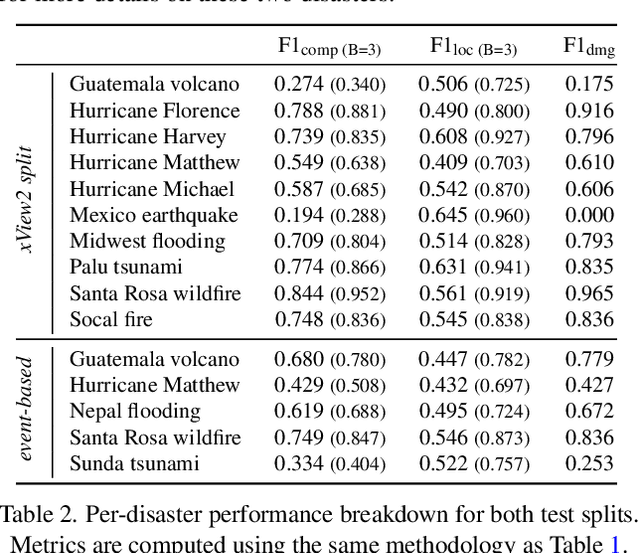

Natural disasters demand rapid damage assessment to guide humanitarian response. Here, we investigate whether medium-resolution Earth observation images from the Copernicus program can support building damage assessment, complementing very-high resolution imagery with often limited availability. We introduce xBD-S12, a dataset of 10,315 pre- and post-disaster image pairs from both Sentinel-1 and Sentinel-2, spatially and temporally aligned with the established xBD benchmark. In a series of experiments, we demonstrate that building damage can be detected and mapped rather well in many disaster scenarios, despite the moderate 10 m ground sampling distance. We also find that, for damage mapping at that resolution, architectural sophistication does not seem to bring much advantage: more complex model architectures tend to struggle with generalization to unseen disasters, and geospatial foundation models bring little practical benefit. Our results suggest that Copernicus images are a viable data source for rapid, wide-area damage assessment and could play an important role alongside VHR imagery. We release the xBD-S12 dataset, code, and trained models to support further research.

Structural Damage Detection Using AI Super Resolution and Visual Language Model

Aug 23, 2025

Natural disasters pose significant challenges to timely and accurate damage assessment due to their sudden onset and the extensive areas they affect. Traditional assessment methods are often labor-intensive, costly, and hazardous to personnel, making them impractical for rapid response, especially in resource-limited settings. This study proposes a novel, cost-effective framework that leverages aerial drone footage, an advanced AI-based video super-resolution model, Video Restoration Transformer (VRT), and Gemma3:27b, a 27 billion parameter Visual Language Model (VLM). This integrated system is designed to improve low-resolution disaster footage, identify structural damage, and classify buildings into four damage categories, ranging from no/slight damage to total destruction, along with associated risk levels. The methodology was validated using pre- and post-event drone imagery from the 2023 Turkey earthquakes (courtesy of The Guardian) and satellite data from the 2013 Moore Tornado (xBD dataset). The framework achieved a classification accuracy of 84.5%, demonstrating its ability to provide highly accurate results. Furthermore, the system's accessibility allows non-technical users to perform preliminary analyses, thereby improving the responsiveness and efficiency of disaster management efforts.

Distribution Shifts at Scale: Out-of-distribution Detection in Earth Observation

Dec 18, 2024

Training robust deep learning models is critical in Earth Observation, where globally deployed models often face distribution shifts that degrade performance, especially in low-data regions. Out-of-distribution (OOD) detection addresses this challenge by identifying inputs that differ from in-distribution (ID) data. However, existing methods either assume access to OOD data or compromise primary task performance, making them unsuitable for real-world deployment. We propose TARDIS, a post-hoc OOD detection method for scalable geospatial deployments. The core novelty lies in generating surrogate labels by integrating information from ID data and unknown distributions, enabling OOD detection at scale. Our method takes a pre-trained model, ID data, and WILD samples, disentangling the latter into surrogate ID and surrogate OOD labels based on internal activations, and fits a binary classifier as an OOD detector. We validate TARDIS on EuroSAT and xBD datasets, across 17 experimental setups covering covariate and semantic shifts, showing that it performs close to the theoretical upper bound in assigning surrogate ID and OOD samples in 13 cases. To demonstrate scalability, we deploy TARDIS on the Fields of the World dataset, offering actionable insights into pre-trained model behavior for large-scale deployments. The code is publicly available at https://github.com/microsoft/geospatial-ood-detection.

Benchmarking Attention Mechanisms and Consistency Regularization Semi-Supervised Learning for Post-Flood Building Damage Assessment in Satellite Images

Dec 04, 2024

Post-flood building damage assessment is critical for rapid response and post-disaster reconstruction planning. Current research fails to consider the distinct requirements of disaster assessment (DA) from change detection (CD) in neural network design. This paper focuses on two key differences: 1) building change features in DA satellite images are more subtle than in CD; 2) DA datasets face more severe data scarcity and label imbalance. To address these issues, in terms of model architecture, the research explores the benchmark performance of attention mechanisms in post-flood DA tasks and introduces Simple Prior Attention UNet (SPAUNet) to enhance the model's ability to recognize subtle changes. In terms of semi-supervised learning (SSL) strategies, the paper constructs four different combinations of image-level label category reference distributions for consistency training. Experimental results on flood events of the xBD dataset show that SPAUNet performs exceptionally well in supervised learning experiments, achieving a recall of 79.10% and an F1 score of 71.32% for damaged classification, outperforming CD methods. The results indicate the necessity of DA task-oriented model design. SSL experiments demonstrate the positive impact of image-level consistency regularization on the model. Using pseudo-labels to form the reference distribution for consistency training yields the best results, proving the potential of using the category distribution of a large amount of unlabeled data for SSL. This paper clarifies the differences between DA and CD tasks. It preliminarily explores model design strategies utilizing prior attention mechanisms and image-level consistency regularization, establishing new post-flood DA task benchmark methods.
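The abstract reports recall and F1 but not precision. Assuming both figures refer to the same "damaged" class and that F1 is the usual harmonic mean of precision and recall, the implied precision can be backed out by rearranging F1 = 2PR / (P + R) into P = F1 · R / (2R − F1). The sketch below does that arithmetic; the resulting value is a derived estimate under those assumptions, not a number reported by the authors, and the function name is hypothetical.

```python
def precision_from_f1_and_recall(f1, recall):
    """Solve F1 = 2PR / (P + R) for P, given F1 and recall as fractions."""
    denominator = 2 * recall - f1
    if denominator <= 0:
        raise ValueError("F1 must be smaller than 2 * recall")
    return f1 * recall / denominator


# Figures taken from the abstract above, expressed as fractions.
recall = 0.7910
f1 = 0.7132

precision = precision_from_f1_and_recall(f1, recall)
print(f"implied precision: {precision:.2%}")

# Sanity check: recomputing F1 from the implied precision recovers the input.
f1_check = 2 * precision * recall / (precision + recall)
print(f"recomputed F1: {f1_check:.2%}")
```

Under these assumptions the implied precision comes out at roughly 65%, lower than the recall, which would mean the model leans toward flagging buildings as damaged rather than missing them.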

Robust Disaster Assessment from Aerial Imagery Using Text-to-Image Synthetic Data

May 22, 2024

We present a simple and efficient method to leverage emerging text-to-image generative models in creating large-scale synthetic supervision for the task of damage assessment from aerial images. While significant recent advances have resulted in improved techniques for damage assessment using aerial or satellite imagery, they still suffer from poor robustness to domains where manually labeled data is unavailable, directly impacting post-disaster humanitarian assistance in such under-resourced geographies. Our contribution towards improving domain robustness in this scenario is two-fold. Firstly, we leverage the text-guided mask-based image editing capabilities of generative models and build an efficient and easily scalable pipeline to generate thousands of post-disaster images from low-resource domains. Secondly, we propose a simple two-stage training approach to train robust models while using manual supervision from different source domains along with the generated synthetic target domain data. We validate the strength of our proposed framework under cross-geography domain transfer setting from xBD and SKAI images in both single-source and multi-source settings, achieving significant improvements over a source-only baseline in each case.

ChangeMamba: Remote Sensing Change Detection with Spatio-Temporal State Space Model

Apr 14, 2024

Convolutional neural networks (CNN) and Transformers have made impressive progress in the field of remote sensing change detection (CD). However, both architectures have inherent shortcomings. Recently, the Mamba architecture, based on state space models, has shown remarkable performance in a series of natural language processing tasks, which can effectively compensate for the shortcomings of the above two architectures. In this paper, we explore for the first time the potential of the Mamba architecture for remote sensing CD tasks. We tailor the corresponding frameworks, called MambaBCD, MambaSCD, and MambaBDA, for binary change detection (BCD), semantic change detection (SCD), and building damage assessment (BDA), respectively. All three frameworks adopt the cutting-edge Visual Mamba architecture as the encoder, which allows full learning of global spatial contextual information from the input images. For the change decoder, which is available in all three architectures, we propose three spatio-temporal relationship modeling mechanisms, which can be naturally combined with the Mamba architecture and fully utilize its attribute to achieve spatio-temporal interaction of multi-temporal features, thereby obtaining accurate change information. On five benchmark datasets, our proposed frameworks outperform current CNN- and Transformer-based approaches without using any complex training strategies or tricks, fully demonstrating the potential of the Mamba architecture in CD tasks. Specifically, we obtained 83.11%, 88.39% and 94.19% F1 scores on the three BCD datasets SYSU, LEVIR-CD+, and WHU-CD; on the SCD dataset SECOND, we obtained 24.11% SeK; and on the BDA dataset xBD, we obtained 81.41% overall F1 score. Further experiments show that our architecture is quite robust to degraded data. The source code will be available in https://github.com/ChenHongruixuan/MambaCD

A simple, strong baseline for building damage detection on the xBD dataset

Jan 30, 2024

We construct a strong baseline method for building damage detection by starting with the highly-engineered winning solution of the xView2 competition, and gradually stripping away components. This way, we obtain a much simpler method, while retaining adequate performance. We expect the simplified solution to be more widely and easily applicable. This expectation is based on the reduced complexity, as well as the fact that we choose hyperparameters based on simple heuristics that transfer to other datasets. We then re-arrange the xView2 dataset splits such that the test locations are not seen during training, contrary to the competition setup. In this setting, we find that both the complex and the simplified model fail to generalize to unseen locations. Analyzing the dataset indicates that this failure to generalize is not only a model-based problem, but that the difficulty might also be influenced by the unequal class distributions between events. Code, including the baseline model, is available under https://github.com/PaulBorneP/Xview2_Strong_Baseline
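The re-arranged split described above amounts to a group-wise split: every tile from a held-out location goes to the test set, so no test location is seen in training. The sketch below shows the idea under the assumption that each sample carries a location or event identifier; the identifiers, tile names, and function name are hypothetical placeholders and do not reproduce the authors' actual split.

```python
def split_by_location(samples, test_locations):
    """Partition (location_id, tile_id) pairs so held-out locations are test-only."""
    held_out = set(test_locations)
    train, test = [], []
    for location_id, tile_id in samples:
        target = test if location_id in held_out else train
        target.append((location_id, tile_id))
    return train, test


# Hypothetical sample index: three locations with a few tiles each.
samples = [
    ("location-a", "tile_000"),
    ("location-a", "tile_001"),
    ("location-b", "tile_000"),
    ("location-c", "tile_000"),
    ("location-c", "tile_001"),
]

train, test = split_by_location(samples, test_locations=["location-c"])

# No location may appear on both sides of the split.
train_locations = {loc for loc, _ in train}
test_locations = {loc for loc, _ in test}
print("overlap:", train_locations & test_locations)
```

A random tile-level split, by contrast, would scatter tiles from the same location across both sets, which is the kind of leakage the re-arranged setting is meant to rule out.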

AB2CD: AI for Building Climate Damage Classification and Detection

Sep 03, 2023

We explore the implementation of deep learning techniques for precise building damage assessment in the context of natural hazards, utilizing remote sensing data. The xBD dataset, comprising diverse disaster events from across the globe, serves as the primary focus, facilitating the evaluation of deep learning models. We tackle the challenges of generalization to novel disasters and regions while accounting for the influence of low-quality and noisy labels inherent in natural hazard data. Furthermore, our investigation quantitatively establishes that the minimum satellite imagery resolution essential for effective building damage detection is 3 meters and below 1 meter for classification using symmetric and asymmetric resolution perturbation analyses. To achieve robust and accurate evaluations of building damage detection and classification, we evaluated different deep learning models with residual, squeeze and excitation, and dual path network backbones, as well as ensemble techniques. Overall, the U-Net Siamese network ensemble with F-1 score of 0.812 performed the best against the xView2 challenge benchmark. Additionally, we evaluate a Universal model trained on all hazards against a flood expert model and investigate generalization gaps across events, and out of distribution from field data in the Ahr Valley. Our research findings showcase the potential and limitations of advanced AI solutions in enhancing the impact assessment of climate change-induced extreme weather events, such as floods and hurricanes. These insights have implications for disaster impact assessment in the face of escalating climate challenges.

End-to-end Remote Sensing Change Detection of Unregistered Bi-temporal Images for Natural Disasters

Aug 16, 2023

Change detection based on remote sensing images has been a prominent area of interest in the field of remote sensing. Deep networks have demonstrated significant success in detecting changes in bi-temporal remote sensing images and have found applications in various fields. Given the degradation of natural environments and the frequent occurrence of natural disasters, accurately and swiftly identifying damaged buildings in disaster-stricken areas through remote sensing images holds immense significance. This paper aims to investigate change detection specifically for natural disasters. Considering that existing public datasets used in change detection research are registered, which does not align with the practical scenario where bi-temporal images are not matched, this paper introduces an unregistered end-to-end change detection synthetic dataset called xBD-E2ECD. Furthermore, we propose an end-to-end change detection network named E2ECDNet, which takes an unregistered bi-temporal image pair as input and simultaneously generates the flow field prediction result and the change detection prediction result. It is worth noting that our E2ECDNet also supports change detection for registered image pairs, as registration can be seen as a special case of non-registration. Additionally, this paper redefines the criteria for correctly predicting a positive case and introduces neighborhood-based change detection evaluation metrics. The experimental results have demonstrated significant improvements.
