Jan Dirk Wegner

LitePT: Lighter Yet Stronger Point Transformer

Dec 15, 2025

Abstract:Modern neural architectures for 3D point cloud processing contain both convolutional layers and attention blocks, but the best way to assemble them remains unclear. We analyse the role of different computational blocks in 3D point cloud networks and find an intuitive behaviour: convolution is adequate to extract low-level geometry at high-resolution in early layers, where attention is expensive without bringing any benefits; attention captures high-level semantics and context in low-resolution, deep layers more efficiently. Guided by this design principle, we propose a new, improved 3D point cloud backbone that employs convolutions in early stages and switches to attention for deeper layers. To avoid the loss of spatial layout information when discarding redundant convolution layers, we introduce a novel, training-free 3D positional encoding, PointROPE. The resulting LitePT model has $3.6\times$ fewer parameters, runs $2\times$ faster, and uses $2\times$ less memory than the state-of-the-art Point Transformer V3, but nonetheless matches or even outperforms it on a range of tasks and datasets. Code and models are available at: https://github.com/prs-eth/LitePT.

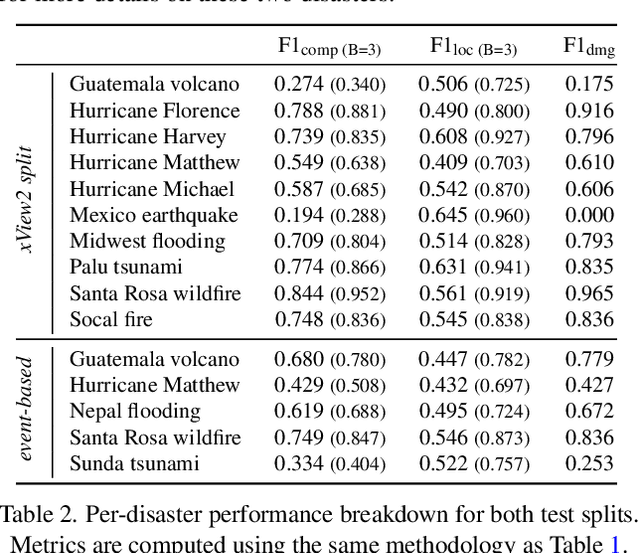

The Potential of Copernicus Satellites for Disaster Response: Retrieving Building Damage from Sentinel-1 and Sentinel-2

Nov 07, 2025

Abstract:Natural disasters demand rapid damage assessment to guide humanitarian response. Here, we investigate whether medium-resolution Earth observation images from the Copernicus program can support building damage assessment, complementing very-high resolution imagery with often limited availability. We introduce xBD-S12, a dataset of 10,315 pre- and post-disaster image pairs from both Sentinel-1 and Sentinel-2, spatially and temporally aligned with the established xBD benchmark. In a series of experiments, we demonstrate that building damage can be detected and mapped rather well in many disaster scenarios, despite the moderate 10$\,$m ground sampling distance. We also find that, for damage mapping at that resolution, architectural sophistication does not seem to bring much advantage: more complex model architectures tend to struggle with generalization to unseen disasters, and geospatial foundation models bring little practical benefit. Our results suggest that Copernicus images are a viable data source for rapid, wide-area damage assessment and could play an important role alongside VHR imagery. We release the xBD-S12 dataset, code, and trained models to support further research.

SSL4Eco: A Global Seasonal Dataset for Geospatial Foundation Models in Ecology

Apr 25, 2025

Abstract:With the exacerbation of the biodiversity and climate crises, macroecological pursuits such as global biodiversity mapping become more urgent. Remote sensing offers a wealth of Earth observation data for ecological studies, but the scarcity of labeled datasets remains a major challenge. Recently, self-supervised learning has enabled learning representations from unlabeled data, triggering the development of pretrained geospatial models with generalizable features. However, these models are often trained on datasets biased toward areas of high human activity, leaving entire ecological regions underrepresented. Additionally, while some datasets attempt to address seasonality through multi-date imagery, they typically follow calendar seasons rather than local phenological cycles. To better capture vegetation seasonality at a global scale, we propose a simple phenology-informed sampling strategy and introduce SSL4Eco, a corresponding multi-date Sentinel-2 dataset, on which we train an existing model with a season-contrastive objective. We compare representations learned from SSL4Eco against other datasets on diverse ecological downstream tasks and demonstrate that our straightforward sampling method consistently improves representation quality, highlighting the importance of dataset construction. The model pretrained on SSL4Eco reaches state-of-the-art performance on 7 out of 8 downstream tasks spanning (multi-label) classification and regression. We release our code, data, and model weights to support macroecological and computer vision research at https://github.com/PlekhanovaElena/ssl4eco.

Climplicit: Climatic Implicit Embeddings for Global Ecological Tasks

Apr 07, 2025

Abstract:Deep learning on climatic data holds potential for macroecological applications. However, its adoption remains limited among scientists outside the deep learning community due to storage, compute, and technical expertise barriers. To address this, we introduce Climplicit, a spatio-temporal geolocation encoder pretrained to generate implicit climatic representations anywhere on Earth. By bypassing the need to download raw climatic rasters and train feature extractors, our model uses $1000\times$ less disk space and significantly reduces computational needs for downstream tasks. We evaluate our Climplicit embeddings on biome classification, species distribution modeling, and plant trait regression. We find that linear probing our Climplicit embeddings consistently performs better than or on par with training a model from scratch on downstream tasks, and overall better than alternative geolocation encoding models.

GSR4B: Biomass Map Super-Resolution with Sentinel-1/2 Guidance

Apr 03, 2025

Abstract:Accurate Above-Ground Biomass (AGB) mapping at both large scale and high spatio-temporal resolution is essential for applications ranging from climate modeling to biodiversity assessment, and sustainable supply chain monitoring. At present, fine-grained AGB mapping relies on costly airborne laser scanning acquisition campaigns usually limited to regional scales. Initiatives such as the ESA CCI map attempt to generate global biomass products from diverse spaceborne sensors but at a coarser resolution. To enable global, high-resolution (HR) mapping, several works propose to regress AGB from HR satellite observations such as ESA Sentinel-1/2 images. We propose a novel way to address HR AGB estimation, by leveraging both HR satellite observations and existing low-resolution (LR) biomass products. We cast this problem as Guided Super-Resolution (GSR), aiming at upsampling LR biomass maps (sources) from $100$ to $10$ m resolution, using auxiliary HR co-registered satellite images (guides). We compare super-resolving AGB maps with and without guidance, against direct regression from satellite images, on the public BioMassters dataset. We observe that Multi-Scale Guidance (MSG) outperforms direct regression both for regression ($-780$ t/ha RMSE) and perception ($+2.0$ dB PSNR) metrics, and better captures high-biomass values, without significant computational overhead. Interestingly, unlike the RGB+Depth setting they were originally designed for, our best-performing AGB GSR approaches are those that most preserve the guide image texture. Our results make a strong case for adopting the GSR framework for accurate HR biomass mapping at scale. Our code and model weights are made publicly available (https://github.com/kaankaramanofficial/GSR4B).

Lossy Neural Compression for Geospatial Analytics: A Review

Mar 03, 2025

Abstract:Over the past decades, there has been an explosion in the amount of available Earth Observation (EO) data. The unprecedented coverage of the Earth's surface and atmosphere by satellite imagery has resulted in large volumes of data that must be transmitted to ground stations, stored in data centers, and distributed to end users. Modern Earth System Models (ESMs) face similar challenges, operating at high spatial and temporal resolutions, producing petabytes of data per simulated day. Data compression has gained relevance over the past decade, with neural compression (NC) emerging from deep learning and information theory, making EO data and ESM outputs ideal candidates due to their abundance of unlabeled data. In this review, we outline recent developments in NC applied to geospatial data. We introduce the fundamental concepts of NC, including seminal works in its traditional applications to image and video compression domains, with a focus on lossy compression. We discuss the unique characteristics of EO and ESM data, contrasting them with "natural images", and explain the additional challenges and opportunities they present. Moreover, we review current applications of NC across various EO modalities and explore the limited efforts in ESM compression to date. The advent of self-supervised learning (SSL) and foundation models (FM) has advanced methods to efficiently distill representations from vast unlabeled data. We connect these developments to NC for EO, highlighting the similarities between the two fields, and elaborate on the potential of transferring compressed feature representations for machine-to-machine communication. Based on insights drawn from this review, we devise future directions relevant to applications in EO and ESM.

Deep learning meets tree phenology modeling: PhenoFormer vs. process-based models

Oct 30, 2024

Abstract:Phenology, the timing of cyclical plant life events such as leaf emergence and coloration, is crucial in the bio-climatic system. Climate change drives shifts in these phenological events, impacting ecosystems and the climate itself. Accurate phenology models are essential to predict the occurrence of these phases under changing climatic conditions. Existing methods include hypothesis-driven process models and data-driven statistical approaches. Process models account for dormancy stages and various phenology drivers, while statistical models typically rely on linear or traditional machine learning techniques. Research shows that process models often outperform statistical methods when predicting under climate conditions outside historical ranges, especially with climate change scenarios. However, deep learning approaches remain underexplored in climate phenology modeling. We introduce PhenoFormer, a neural architecture better suited than traditional statistical methods to predicting phenology under shifts in the climate data distribution, while also bringing significant improvements over, or performing on par with, the best-performing process-based models. Our numerical experiments on a 70-year dataset of 70,000 phenological observations from 9 woody species in Switzerland show that PhenoFormer outperforms traditional machine learning methods by an average of 13% $R^2$ and 1.1 days RMSE for spring phenology, and 11% $R^2$ and 0.7 days RMSE for autumn phenology, while matching or exceeding the best process-based models. Our results demonstrate that deep learning has the potential to be a valuable methodological tool for accurate climate-phenology prediction, and our PhenoFormer is a first promising step in improving phenological predictions before a complete understanding of the underlying physiological mechanisms is available.

An Open-Source Tool for Mapping War Destruction at Scale in Ukraine using Sentinel-1 Time Series

Jun 04, 2024

Abstract:Access to detailed war impact assessments is crucial for humanitarian organizations to effectively assist populations most affected by armed conflicts. However, maintaining a comprehensive understanding of the situation on the ground is challenging, especially in conflicts that cover vast territories and extend over long periods. This study presents a scalable and transferable method for estimating war-induced damage to buildings. We first train a machine learning model to output pixel-wise probability of destruction from Synthetic Aperture Radar (SAR) satellite image time series, leveraging existing, manual damage assessments as ground truth and cloud-based geospatial analysis tools for large-scale inference. We further post-process these assessments using open building footprints to obtain a final damage estimate per building. We introduce an accessible, open-source tool that allows users to adjust the confidence interval based on their specific requirements and use cases. Our approach enables humanitarian organizations and other actors to rapidly screen large geographic regions for war impacts. We provide two publicly accessible dashboards: a Ukraine Damage Explorer to dynamically view our pre-computed estimates, and a Rapid Damage Mapping Tool to easily run our method and produce custom maps.

Point2Building: Reconstructing Buildings from Airborne LiDAR Point Clouds

Mar 04, 2024

Abstract:We present a learning-based approach to reconstruct buildings as 3D polygonal meshes from airborne LiDAR point clouds. What makes 3D building reconstruction from airborne LiDAR hard is the large diversity of building designs and especially roof shapes, the low and varying point density across the scene, and the often incomplete coverage of building facades due to occlusions by vegetation or to the viewing angle of the sensor. To cope with the diversity of shapes and inhomogeneous and incomplete object coverage, we introduce a generative model that directly predicts 3D polygonal meshes from input point clouds. Our autoregressive model, called Point2Building, iteratively builds up the mesh by generating sequences of vertices and faces. This approach enables our model to adapt flexibly to diverse geometries and building structures. Unlike many existing methods that rely heavily on pre-processing steps like exhaustive plane detection, our model learns directly from the point cloud data, thereby reducing error propagation and increasing the fidelity of the reconstruction. We experimentally validate our method on a collection of airborne LiDAR data of Zurich, Berlin and Tallinn. Our method shows good generalization to diverse urban styles.

Point2CAD: Reverse Engineering CAD Models from 3D Point Clouds

Dec 07, 2023

Abstract:Computer-Aided Design (CAD) model reconstruction from point clouds is an important problem at the intersection of computer vision, graphics, and machine learning; it saves the designer significant time when iterating on in-the-wild objects. Recent advancements in this direction achieve relatively reliable semantic segmentation but still struggle to produce an adequate topology of the CAD model. In this work, we analyze the current state of the art for that ill-posed task and identify shortcomings of existing methods. We propose a hybrid analytic-neural reconstruction scheme that bridges the gap between segmented point clouds and structured CAD models and can be readily combined with different segmentation backbones. Moreover, to power the surface fitting stage, we propose a novel implicit neural representation of freeform surfaces, driving up the performance of our overall CAD reconstruction scheme. We extensively evaluate our method on the popular ABC benchmark of CAD models and set a new state-of-the-art for that dataset. Project page: https://www.obukhov.ai/point2cad.
