Cityflow

Papers and Code

PyTSC: A Unified Platform for Multi-Agent Reinforcement Learning in Traffic Signal Control

Oct 23, 2024

Multi-Agent Reinforcement Learning (MARL) presents a promising approach for addressing the complexity of Traffic Signal Control (TSC) in urban environments. However, existing platforms for MARL-based TSC research face challenges such as slow simulation speeds and convoluted, difficult-to-maintain codebases. To address these limitations, we introduce PyTSC, a robust and flexible simulation environment that facilitates the training and evaluation of MARL algorithms for TSC. PyTSC integrates multiple simulators, such as SUMO and CityFlow, and offers a streamlined API, empowering researchers to explore a broad spectrum of MARL approaches efficiently. PyTSC accelerates experimentation and provides new opportunities for advancing intelligent traffic management systems in real-world applications.

Spatial-Temporal Multi-Cuts for Online Multiple-Camera Vehicle Tracking

Oct 03, 2024

Accurate online multiple-camera vehicle tracking is essential for intelligent transportation systems, autonomous driving, and smart city applications. Like single-camera multiple-object tracking, it is commonly formulated as a graph problem of tracking-by-detection. Within this framework, existing online methods usually consist of two-stage procedures that cluster temporally first, then spatially, or vice versa. This is computationally expensive and prone to error accumulation. We introduce a graph representation that allows spatial-temporal clustering in a single, combined step: New detections are spatially and temporally connected with existing clusters. By keeping sparse appearance and positional cues of all detections in a cluster, our method can compare clusters based on the strongest available evidence. The final tracks are obtained online using a simple multicut assignment procedure. Our method does not require any training on the target scene, pre-extraction of single-camera tracks, or additional annotations. Notably, we outperform the online state-of-the-art on the CityFlow dataset in terms of IDF1 by more than 14%, and on the Synthehicle dataset by more than 25%, respectively. The code is publicly available.
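
Several entries on this page report IDF1. As a reminder of what that score measures, here is a minimal sketch of the standard identity-based metrics. It assumes the identity-level counts (IDTP, IDFP, IDFN) have already been obtained from an optimal one-to-one matching between ground-truth and predicted trajectories; the function name `identity_scores` is illustrative and not taken from any of the listed papers.

```python
def identity_scores(idtp: int, idfp: int, idfn: int) -> dict:
    """Compute ID precision, ID recall, and IDF1 from identity-level counts.

    idtp: detections whose predicted identity matches the matched ground truth
    idfp: predicted detections left unmatched (identity false positives)
    idfn: ground-truth detections left unmatched (identity false negatives)
    """
    idp = idtp / (idtp + idfp) if (idtp + idfp) else 0.0
    idr = idtp / (idtp + idfn) if (idtp + idfn) else 0.0
    denom = 2 * idtp + idfp + idfn
    # IDF1 is the harmonic mean of IDP and IDR.
    idf1 = 2 * idtp / denom if denom else 0.0
    return {"IDP": idp, "IDR": idr, "IDF1": idf1}


# Hypothetical counts, for illustration only.
scores = identity_scores(idtp=80, idfp=10, idfn=30)
print(f"IDF1 = {scores['IDF1']:.2%}")
```

Because IDF1 depends on a global trajectory-to-identity matching, it penalizes identity switches across cameras more heavily than per-frame detection metrics do.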

MTLight: Efficient Multi-Task Reinforcement Learning for Traffic Signal Control

Apr 01, 2024

Traffic signal control has a great impact on alleviating traffic congestion in modern cities. Deep reinforcement learning (RL) has been widely used for this task in recent years, demonstrating promising performance but also facing many challenges such as limited performance and sample inefficiency. To handle these challenges, MTLight is proposed to enhance the agent observation with a latent state, which is learned from numerous traffic indicators. Meanwhile, multiple auxiliary and supervisory tasks are constructed to learn the latent state, and two types of embedded latent features, the task-specific feature and the task-shared feature, are used to enrich the latent state. Extensive experiments conducted on CityFlow demonstrate that MTLight has leading convergence speed and asymptotic performance. We further simulate a peak-hour pattern in all scenarios with increasing control difficulty, and the results indicate that MTLight is highly adaptable.

CityFlowER: An Efficient and Realistic Traffic Simulator with Embedded Machine Learning Models

Feb 09, 2024

Traffic simulation is an essential tool for transportation infrastructure planning, intelligent traffic control policy learning, and traffic flow analysis. Its effectiveness relies heavily on the realism of the simulators used. Traditional traffic simulators, such as SUMO and CityFlow, are often limited by their reliance on rule-based models with hyperparameters that oversimplify driving behaviors, resulting in unrealistic simulations. To enhance realism, some simulators have provided Application Programming Interfaces (APIs) to interact with Machine Learning (ML) models, which learn from observed data and offer more sophisticated driving behavior models. However, this approach faces challenges in scalability and time efficiency as vehicle numbers increase. Addressing these limitations, we introduce CityFlowER, an advancement over the existing CityFlow simulator, designed for efficient and realistic city-wide traffic simulation. CityFlowER innovatively pre-embeds ML models within the simulator, eliminating the need for external API interactions and enabling faster data computation. This approach allows for a blend of rule-based and ML behavior models for individual vehicles, offering unparalleled flexibility and efficiency, particularly in large-scale simulations. We provide detailed comparisons with existing simulators, implementation insights, and comprehensive experiments to demonstrate CityFlowER's superiority in terms of realism, efficiency, and adaptability.

Purpose in the Machine: Do Traffic Simulators Produce Distributionally Equivalent Outcomes for Reinforcement Learning Applications?

Nov 14, 2023

Traffic simulators are used to generate data for learning in intelligent transportation systems (ITSs). A key question is to what extent their modelling assumptions affect the capabilities of ITSs to adapt to various scenarios when deployed in the real world. This work focuses on two simulators commonly used to train reinforcement learning (RL) agents for traffic applications, CityFlow and SUMO. A controlled virtual experiment varying driver behavior and simulation scale finds evidence against distributional equivalence in RL-relevant measures from these simulators, with the root mean squared error and KL divergence being significantly greater than 0 for all assessed measures. While granular real-world validation generally remains infeasible, these findings suggest that traffic simulators are not a deus ex machina for RL training: understanding the impacts of inter-simulator differences is necessary to train and deploy RL-based ITSs.
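
To make the two comparison measures named above concrete, the following is a minimal sketch of computing RMSE and KL divergence between samples of one measure drawn from two simulators. It is not the paper's experimental procedure: the sample values, the bin edges, and the helper names are all hypothetical, and the histogram-based KL estimate is just one simple choice.

```python
import math


def rmse(a, b):
    """Root mean squared error between two equal-length sequences."""
    if len(a) != len(b):
        raise ValueError("sequences must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def normalized_histogram(samples, edges):
    """Bin samples into len(edges) - 1 bins and normalize to probabilities.

    Bins are half-open [lo, hi), except the last, which includes its upper
    edge. Samples outside the edges are ignored.
    """
    counts = [0] * (len(edges) - 1)
    for s in samples:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= s < edges[i + 1] or (is_last and s == edges[-1]):
                counts[i] += 1
                break
    total = sum(counts)
    if total == 0:
        raise ValueError("no samples fall inside the bin edges")
    return [c / total for c in counts]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) in nats; eps guards against empty bins in q."""
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical per-episode average travel times (seconds) from two simulators.
sim_a = [110.0, 120.0, 125.0, 131.0, 140.0, 152.0]
sim_b = [105.0, 118.0, 133.0, 139.0, 155.0, 170.0]
edges = [100.0, 120.0, 140.0, 160.0, 180.0]

p = normalized_histogram(sim_a, edges)
q = normalized_histogram(sim_b, edges)
print("RMSE:", rmse(sim_a, sim_b))
print("KL(A || B):", kl_divergence(p, q))
```

Both measures are zero only when the two sets of outputs agree exactly (for KL, after binning), which is why values significantly above zero count as evidence against distributional equivalence.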

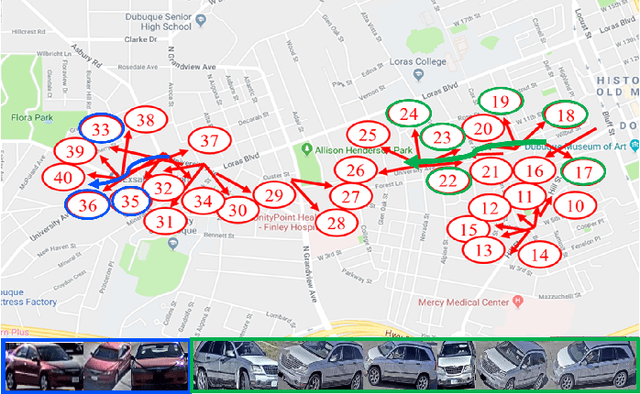

Multi-target multi-camera vehicle tracking using transformer-based camera link model and spatial-temporal information

Jan 18, 2023

Multi-target multi-camera tracking (MTMCT) of vehicles, i.e. tracking vehicles across multiple cameras, is a crucial application for the development of smart cities and intelligent traffic systems. The main challenges of vehicle MTMCT are the intra-class variability of the same vehicle, the inter-class similarity between different vehicles, and accurately associating the same vehicle across different cameras within a large search space. Previous MTMCT methods usually use hierarchical clustering of trajectories for cross-camera association. However, the search space can be large, and these methods do not take spatial and temporal information into consideration. In this paper, we propose a transformer-based camera link model with spatial and temporal filtering for cross-camera tracking. Our method achieves 73.68% IDF1 on the test set of the NVIDIA CityFlow V2 dataset, showing the effectiveness of our camera link model for multi-target multi-camera tracking.

LibSignal: An Open Library for Traffic Signal Control

Nov 19, 2022

This paper introduces a library for cross-simulator comparison of reinforcement learning models in traffic signal control tasks. This library is developed to implement recent state-of-the-art reinforcement learning models with extensible interfaces and unified cross-simulator evaluation metrics. It supports commonly-used simulators in traffic signal control tasks, including Simulation of Urban MObility (SUMO) and CityFlow, and multiple benchmark datasets for fair comparisons. We conducted experiments to validate our implementation of the models and to calibrate the simulators so that the experiments from one simulator could be referential to the other. Based on the validated models and calibrated environments, this paper compares and reports the performance of current state-of-the-art RL algorithms across different datasets and simulators. This is the first time that these methods have been compared fairly under the same datasets with different simulators.

Adaptive Affinity for Associations in Multi-Target Multi-Camera Tracking

Dec 14, 2021

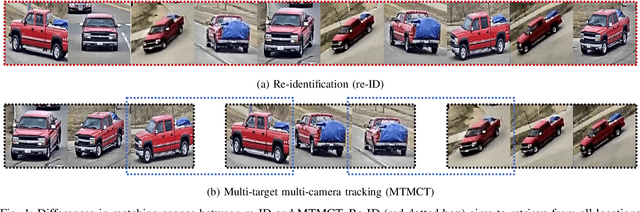

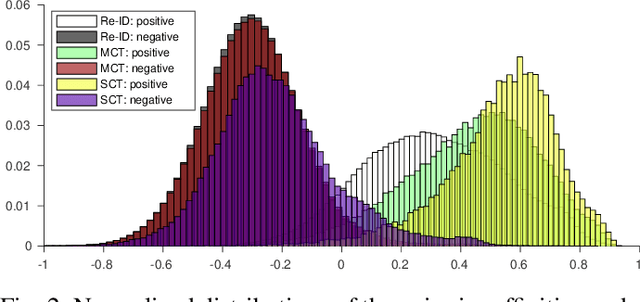

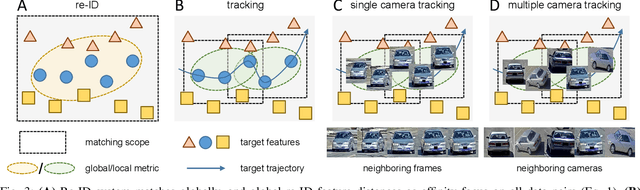

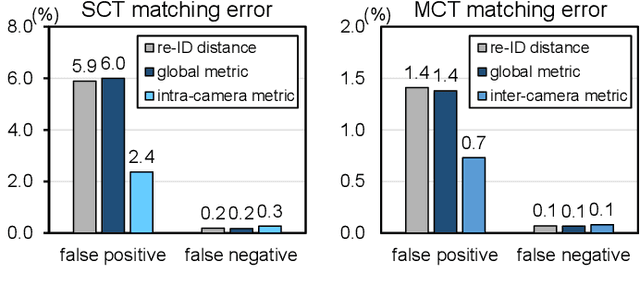

Data associations in multi-target multi-camera tracking (MTMCT) usually estimate affinity directly from re-identification (re-ID) feature distances. However, we argue that it might not be the best choice given the difference in matching scopes between re-ID and MTMCT problems. Re-ID systems focus on global matching, which retrieves targets from all cameras and all times. In contrast, data association in tracking is a local matching problem, since its candidates only come from neighboring locations and time frames. In this paper, we design experiments to verify such misfit between global re-ID feature distances and local matching in tracking, and propose a simple yet effective approach to adapt affinity estimations to corresponding matching scopes in MTMCT. Instead of trying to deal with all appearance changes, we tailor the affinity metric to specialize in ones that might emerge during data associations. To this end, we introduce a new data sampling scheme with temporal windows originally used for data associations in tracking. Minimizing the mismatch, the adaptive affinity module brings significant improvements over global re-ID distance, and produces competitive performance on CityFlow and DukeMTMC datasets.
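
The contrast between global and local matching scopes can be illustrated with a small sketch. This is not the authors' sampling scheme: it only shows, under assumed names (`Detection`, `sample_pairs`, `window`), how restricting training pairs to a temporal window changes which pairs an affinity metric gets to see.

```python
from itertools import combinations
from typing import NamedTuple, Optional


class Detection(NamedTuple):
    identity: int  # ground-truth target ID
    camera: int    # camera index
    time: float    # timestamp in seconds


def sample_pairs(detections, window: Optional[float] = None):
    """Return (a, b, same_identity) tuples for pairs of detections.

    window=None keeps every pair (global, re-ID style matching scope).
    A numeric window keeps only pairs whose time gap is at most `window`
    seconds (local, tracking style matching scope).
    """
    pairs = []
    for a, b in combinations(detections, 2):
        if window is not None and abs(a.time - b.time) > window:
            continue
        pairs.append((a, b, a.identity == b.identity))
    return pairs


# Hypothetical detections, for illustration only.
dets = [
    Detection(identity=1, camera=0, time=0.0),
    Detection(identity=1, camera=1, time=5.0),
    Detection(identity=2, camera=0, time=6.0),
    Detection(identity=1, camera=2, time=100.0),
]

print(len(sample_pairs(dets)))             # all pairs
print(len(sample_pairs(dets, window=10)))  # only temporally close pairs
```

With the window applied, the long-gap pairs drop out, so a metric trained on the remaining pairs only has to handle the appearance changes that can actually arise during data association.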

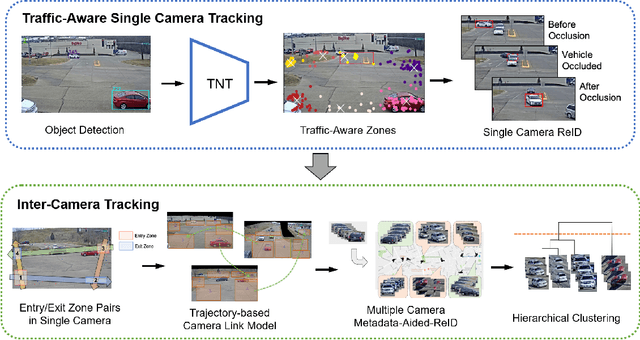

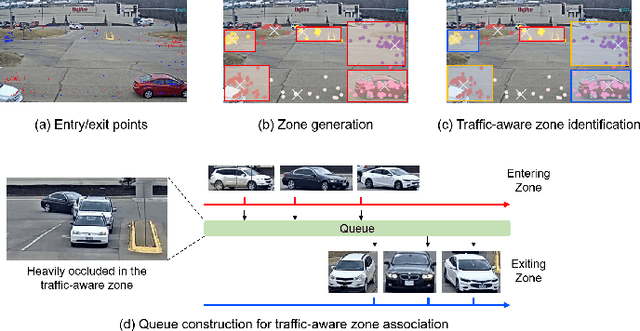

Multi-Target Multi-Camera Tracking of Vehicles using Metadata-Aided Re-ID and Trajectory-Based Camera Link Model

May 03, 2021

In this paper, we propose a novel framework for multi-target multi-camera tracking (MTMCT) of vehicles based on metadata-aided re-identification (MA-ReID) and the trajectory-based camera link model (TCLM). Given a video sequence and the corresponding frame-by-frame vehicle detections, we first address the isolated tracklets issue from single camera tracking (SCT) by the proposed traffic-aware single-camera tracking (TSCT). Then, after automatically constructing the TCLM, we solve MTMCT by the MA-ReID. The TCLM is generated from camera topological configuration to obtain the spatial and temporal information to improve the performance of MTMCT by reducing the candidate search of ReID. We also use the temporal attention model to create more discriminative embeddings of trajectories from each camera to achieve robust distance measures for vehicle ReID. Moreover, we train a metadata classifier for MTMCT to obtain the metadata feature, which is concatenated with the temporal attention based embeddings. Finally, the TCLM and hierarchical clustering are jointly applied for global ID assignment. The proposed method is evaluated on the CityFlow dataset, achieving IDF1 76.77%, which outperforms the state-of-the-art MTMCT methods.

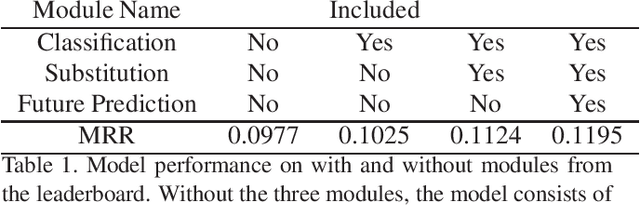

SBNet: Segmentation-based Network for Natural Language-based Vehicle Search

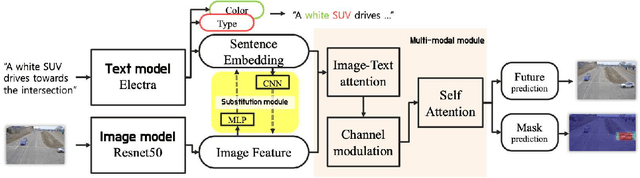

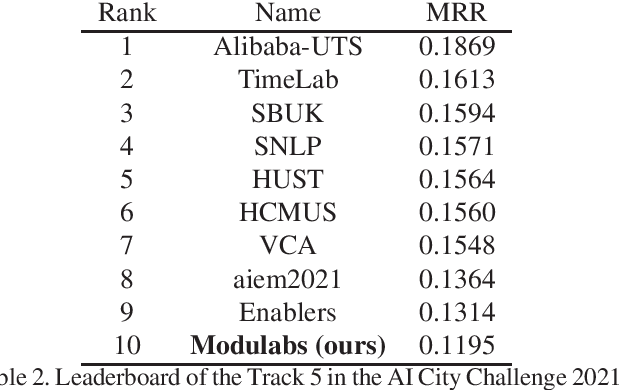

Apr 22, 2021

Natural language-based vehicle retrieval is the task of finding a target vehicle within a given image based on a natural language description as a query. This technology can be applied to various areas, including police searches for a suspect vehicle. However, it is challenging due to the ambiguity of language descriptions and the difficulty of processing multi-modal data. To tackle this problem, we propose a deep neural network called SBNet that performs natural language-based segmentation for vehicle retrieval. We also propose two task-specific modules to improve performance: a substitution module that helps features from different domains to be embedded in the same space and a future prediction module that learns temporal information. SBNet was trained on the CityFlow-NL dataset, which contains 2,498 vehicle tracks with three unique natural language descriptions each, and tested on 530 unique vehicle tracks and their corresponding query sets. SBNet achieved a significant improvement over the baseline in the natural language-based vehicle tracking track of the AI City Challenge 2021.
