3D Face Animation

3D face animation is the process of generating animated videos of a person's face based on an audio recording of their voice.

Papers and Code

Generalizable and Animatable 3D Full-Head Gaussian Avatar from a Single Image

Jan 19, 2026

Building 3D animatable head avatars from a single image is an important yet challenging problem. Existing methods generally collapse under large camera pose variations, compromising the realism of 3D avatars. In this work, we propose a new framework to tackle the novel setting of one-shot 3D full-head animatable avatar reconstruction in a single feed-forward pass, enabling real-time animation and simultaneous 360$^\circ$ rendering views. To facilitate efficient animation control, we model 3D head avatars with Gaussian primitives embedded on the surface of a parametric face model within the UV space. To obtain knowledge of full-head geometry and textures, we leverage rich 3D full-head priors within a pretrained 3D generative adversarial network (GAN) for global full-head feature extraction and multi-view supervision. To increase the fidelity of the 3D reconstruction of the input image, we take advantage of the symmetric nature of the UV space and human faces to fuse local fine-grained input image features with the global full-head textures. Extensive experiments demonstrate the effectiveness of our method, achieving high-quality 3D full-head modeling as well as real-time animation, thereby improving the realism of 3D talking avatars.

ICo3D: An Interactive Conversational 3D Virtual Human

Jan 19, 2026

This work presents Interactive Conversational 3D Virtual Human (ICo3D), a method for generating an interactive, conversational, and photorealistic 3D human avatar. Based on multi-view captures of a subject, we create an animatable 3D face model and a dynamic 3D body model, both rendered by splatting Gaussian primitives. Once merged, they represent a lifelike virtual human avatar suitable for real-time user interactions. We equip our avatar with an LLM for conversational ability. During conversation, the audio speech of the avatar is used as a driving signal to animate the face model, enabling precise synchronization. We describe improvements to our dynamic Gaussian models that enhance photorealism, SWinGS++ for body reconstruction and HeadGaS++ for face reconstruction, and also provide a solution for merging the separate face and body models without artifacts. We also present a demo of the complete system, showcasing several use cases of real-time conversation with the 3D avatar. Our approach offers a fully integrated virtual avatar experience, supporting both oral and written interactions in immersive environments. ICo3D is applicable to a wide range of fields, including gaming, virtual assistance, and personalized education, among others. Project page: https://ico3d.github.io/

LegacyAvatars: Volumetric Face Avatars For Traditional Graphics Pipelines

Jan 18, 2026

We introduce a novel representation for efficient classical rendering of photorealistic 3D face avatars. Leveraging recent advances in radiance fields anchored to parametric face models, our approach achieves controllable volumetric rendering of complex facial features, including hair, skin, and eyes. At enrollment time, we learn a set of radiance manifolds in 3D space to extract an explicit layered mesh, along with appearance and warp textures. During deployment, this allows us to control and animate the face through simple linear blending and alpha compositing of textures over a static mesh. This explicit representation also enables the generated avatar to be efficiently streamed online and then rendered using classical mesh and shader-based rendering on legacy graphics platforms, eliminating the need for any custom engineering or integration.
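The "linear blending and alpha compositing of textures" mentioned in the abstract can be illustrated with a minimal sketch. This is not the paper's code: the function names, the flat list-of-RGBA-tuples texture layout, and the use of straight (non-premultiplied) alpha are all assumptions made for illustration.

```python
# Illustrative sketch only. Names and data layout are hypothetical,
# not taken from the LegacyAvatars paper.

def blend_textures(basis, weights):
    """Linearly blend textures texel by texel: sum_k weights[k] * basis[k].

    basis:   list of textures, each a list of (r, g, b, a) tuples of equal length
    weights: one blend weight per texture (e.g. expression coefficients)
    """
    n_texels = len(basis[0])
    blended = []
    for t in range(n_texels):
        texel = [0.0, 0.0, 0.0, 0.0]
        for w, tex in zip(weights, basis):
            for c in range(4):
                texel[c] += w * tex[t][c]
        blended.append(tuple(texel))
    return blended


def composite_over(layers):
    """Front-to-back 'over' compositing of RGBA samples with straight alpha.

    layers: samples hit along one ray, ordered nearest first.
    Returns the accumulated (r, g, b) color and the total opacity.
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light still passing through
    for r, g, b, a in layers:
        for c, value in enumerate((r, g, b)):
            color[c] += transmittance * a * value
        transmittance *= 1.0 - a
    return tuple(color), 1.0 - transmittance


if __name__ == "__main__":
    tex = blend_textures([[(1.0, 0.0, 0.0, 1.0)], [(0.0, 1.0, 0.0, 1.0)]], [0.25, 0.75])
    print(tex)
    print(composite_over([(1.0, 0.0, 0.0, 0.5), (0.0, 0.0, 1.0, 1.0)]))
```

Both operations are per-texel arithmetic with no neural network evaluation, which is consistent with the abstract's claim that the result can run in a classical mesh and shader pipeline.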

Learning Domain Agnostic Latent Embeddings of 3D Faces for Zero-shot Animal Expression Transfer

Jan 10, 2026

We present a zero-shot framework for transferring human facial expressions to 3D animal face meshes. Our method combines intrinsic geometric descriptors (HKS/WKS) with a mesh-agnostic latent embedding that disentangles facial identity and expression. The ID latent space captures species-independent facial structure, while the expression latent space encodes deformation patterns that generalize across humans and animals. Trained only with human expression pairs, the model learns the embeddings, decoupling, and recoupling of cross-identity expressions, enabling expression transfer without requiring animal expression data. To enforce geometric consistency, we employ Jacobian loss together with vertex-position and Laplacian losses. Experiments show that our approach achieves plausible cross-species expression transfer, effectively narrowing the geometric gap between human and animal facial shapes.
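As a rough illustration of what a Laplacian loss on a mesh measures, the sketch below compares uniform (umbrella) Laplacian coordinates of a predicted and a target mesh. The abstract does not specify which Laplacian or weighting the authors use, so the uniform variant, the function names, and the adjacency-dict input are assumptions.

```python
# Illustrative sketch only. The uniform Laplacian and all names here are
# assumptions; the paper's exact loss formulation is not given in the abstract.

def uniform_laplacian(vertices, neighbors):
    """Differential coordinates: each vertex minus the centroid of its 1-ring.

    vertices:  list of (x, y, z) tuples
    neighbors: dict mapping vertex index -> list of adjacent vertex indices
    """
    coords = []
    for i, v in enumerate(vertices):
        ring = neighbors[i]
        centroid = [sum(vertices[j][c] for j in ring) / len(ring) for c in range(3)]
        coords.append(tuple(v[c] - centroid[c] for c in range(3)))
    return coords


def laplacian_loss(pred, target, neighbors):
    """Mean squared difference between Laplacian coordinates of two meshes
    that share the same connectivity."""
    lap_pred = uniform_laplacian(pred, neighbors)
    lap_target = uniform_laplacian(target, neighbors)
    total = 0.0
    for p, t in zip(lap_pred, lap_target):
        total += sum((a - b) ** 2 for a, b in zip(p, t))
    return total / len(pred)


if __name__ == "__main__":
    ring = {0: [1, 2], 1: [0, 2], 2: [0, 1]}  # a single triangle
    target = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
    shifted = [(x + 2.0, y + 2.0, z + 2.0) for x, y, z in target]
    print(laplacian_loss(shifted, target, ring))  # a rigid translation is not penalized
```

Because the Laplacian coordinates describe each vertex relative to its neighbors, a loss of this kind penalizes changes in local surface detail rather than global position, which is why it is commonly paired with a vertex-position term.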

Instant Expressive Gaussian Head Avatar via 3D-Aware Expression Distillation

Dec 18, 2025

Portrait animation has witnessed tremendous quality improvements thanks to recent advances in video diffusion models. However, these 2D methods often compromise 3D consistency and speed, limiting their applicability in real-world scenarios, such as digital twins or telepresence. In contrast, 3D-aware facial animation feedforward methods -- built upon explicit 3D representations, such as neural radiance fields or Gaussian splatting -- ensure 3D consistency and achieve faster inference speed, but come with inferior expression details. In this paper, we aim to combine their strengths by distilling knowledge from a 2D diffusion-based method into a feed-forward encoder, which instantly converts an in-the-wild single image into a 3D-consistent, fast yet expressive animatable representation. Our animation representation is decoupled from the face's 3D representation and learns motion implicitly from data, eliminating the dependency on pre-defined parametric models that often constrain animation capabilities. Unlike previous computationally intensive global fusion mechanisms (e.g., multiple attention layers) for fusing 3D structural and animation information, our design employs an efficient lightweight local fusion strategy to achieve high animation expressivity. As a result, our method runs at 107.31 FPS for animation and pose control while achieving comparable animation quality to the state-of-the-art, surpassing alternative designs that trade speed for quality or vice versa. Project website is https://research.nvidia.com/labs/amri/projects/instant4d

Towards Unified Co-Speech Gesture Generation via Hierarchical Implicit Periodicity Learning

Dec 15, 2025

Generating 3D body movements from speech shows great potential in extensive downstream applications, but imitating realistic human movements remains challenging. Predominant research efforts focus on end-to-end generation schemes for co-speech gestures, spanning GANs, VQ-VAE, and recent diffusion models. In this paper, we argue that, for this ill-posed problem, these prevailing learning schemes fail to model crucial inter- and intra-correlations across different motion units, i.e., head, body, and hands, thus leading to unnatural movements and poor coordination. To delve into these intrinsic correlations, we propose a unified Hierarchical Implicit Periodicity (HIP) learning approach for audio-inspired 3D gesture generation. Unlike predominant research, our approach models this multi-modal implicit relationship through two explicit technical insights: i) To disentangle the complicated gesture movements, we first explore the gesture motion phase manifolds with periodic autoencoders to imitate human nature from realistic distributions while incorporating non-periodic ones from current latent states for instance-level diversity. ii) To model the hierarchical relationship of face motions, body gestures, and hand movements, we drive the animation with cascaded guidance during learning. We demonstrate our proposed approach on 3D avatars, and extensive experiments show that our method outperforms state-of-the-art co-speech gesture generation methods in both quantitative and qualitative evaluations. Code and models will be publicly available.

Enhanced 3D Shape Analysis via Information Geometry

Dec 18, 2025

Three-dimensional point clouds provide highly accurate digital representations of objects, essential for applications in computer graphics, photogrammetry, computer vision, and robotics. However, comparing point clouds faces significant challenges due to their unstructured nature and the complex geometry of the surfaces they represent. Traditional geometric metrics such as Hausdorff and Chamfer distances often fail to capture global statistical structure and exhibit sensitivity to outliers, while existing Kullback-Leibler (KL) divergence approximations for Gaussian Mixture Models can produce unbounded or numerically unstable values. This paper introduces an information geometric framework for 3D point cloud shape analysis by representing point clouds as Gaussian Mixture Models (GMMs) on a statistical manifold. We prove that the space of GMMs forms a statistical manifold and propose the Modified Symmetric Kullback-Leibler (MSKL) divergence with theoretically guaranteed upper and lower bounds, ensuring numerical stability for all GMM comparisons. Through comprehensive experiments on human pose discrimination (MPI-FAUST dataset) and animal shape comparison (G-PCD dataset), we demonstrate that MSKL provides stable and monotonically varying values that directly reflect geometric variation, outperforming traditional distances and existing KL approximations.
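To make the baselines named in the abstract concrete, the sketch below implements one common variant of the Chamfer distance and the closed-form symmetric KL divergence between two univariate Gaussians. It does not implement MSKL, whose definition is not given in the abstract. The single-Gaussian case is used only because the KL divergence between full mixtures has no closed form; the function names are hypothetical.

```python
import math

# Illustrative sketch only. This shows the baseline quantities the abstract
# compares against, not the paper's MSKL divergence.

def chamfer_distance(a, b):
    """Symmetric Chamfer distance (one common variant): mean squared
    nearest-neighbor distance from a to b plus the same from b to a.

    a, b: non-empty lists of equal-dimension point tuples.
    """
    def one_way(src, dst):
        total = 0.0
        for s in src:
            total += min(sum((p - q) ** 2 for p, q in zip(s, d)) for d in dst)
        return total / len(src)
    return one_way(a, b) + one_way(b, a)


def kl_gauss_1d(mu1, var1, mu2, var2):
    """Closed-form KL( N(mu1, var1) || N(mu2, var2) ) for univariate Gaussians."""
    return 0.5 * (math.log(var2 / var1) + (var1 + (mu1 - mu2) ** 2) / var2 - 1.0)


def symmetric_kl_1d(mu1, var1, mu2, var2):
    """Symmetrized KL: KL(p || q) + KL(q || p)."""
    return kl_gauss_1d(mu1, var1, mu2, var2) + kl_gauss_1d(mu2, var2, mu1, var1)


if __name__ == "__main__":
    print(chamfer_distance([(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)], [(0.0, 0.0, 0.0)]))
    # With equal variances the symmetric KL equals (mu1 - mu2)^2 / var,
    # so it grows without bound as the means move apart.
    for gap in (1.0, 10.0, 100.0):
        print(gap, symmetric_kl_1d(0.0, 1.0, gap, 1.0))
```

The quadratic growth in the last loop is one way to see the unboundedness that the abstract attributes to existing KL-based comparisons.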

FastAvatar: Towards Unified Fast High-Fidelity 3D Avatar Reconstruction with Large Gaussian Reconstruction Transformers

Aug 27, 2025

Despite significant progress in 3D avatar reconstruction, it still faces challenges such as high time complexity, sensitivity to data quality, and low data utilization. We propose FastAvatar, a feedforward 3D avatar framework capable of flexibly leveraging diverse daily recordings (e.g., a single image, multi-view observations, or monocular video) to reconstruct a high-quality 3D Gaussian Splatting (3DGS) model within seconds, using only a single unified model. FastAvatar's core is a Large Gaussian Reconstruction Transformer featuring three key designs: first, a variant VGGT-style transformer architecture that aggregates multi-frame cues while injecting an initial 3D prompt to predict an aggregatable canonical 3DGS representation; second, multi-granular guidance encoding (camera pose, FLAME expression, head pose) that mitigates animation-induced misalignment for variable-length inputs; third, incremental Gaussian aggregation via landmark tracking and sliced fusion losses. Integrating these features, FastAvatar enables incremental reconstruction, i.e., improving quality with more observations, unlike prior work that wastes input data. This yields a quality-speed-tunable paradigm for highly usable avatar modeling. Extensive experiments show that FastAvatar has higher quality and highly competitive speed compared to existing methods.

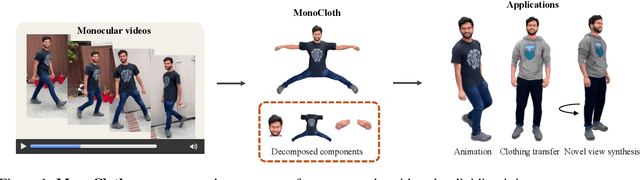

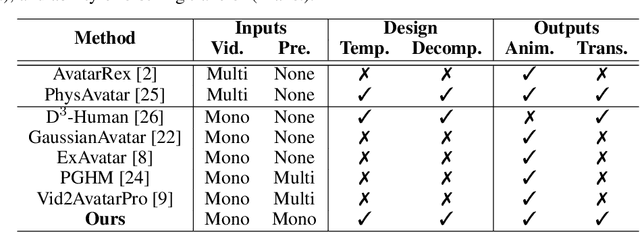

MonoCloth: Reconstruction and Animation of Cloth-Decoupled Human Avatars from Monocular Videos

Aug 06, 2025

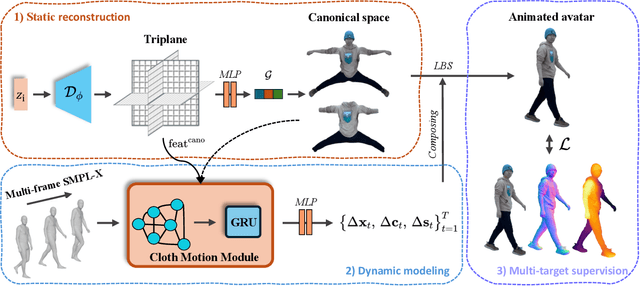

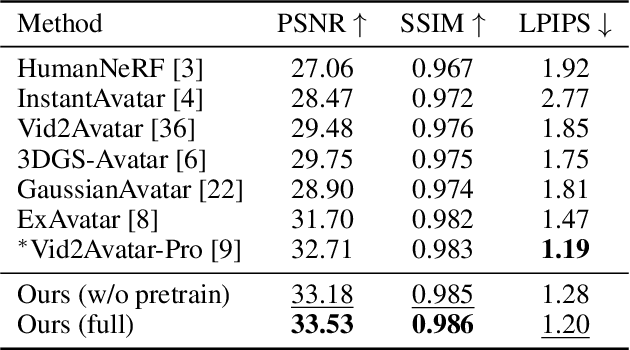

Reconstructing realistic 3D human avatars from monocular videos is a challenging task due to the limited geometric information and complex non-rigid motion involved. We present MonoCloth, a new method for reconstructing and animating clothed human avatars from monocular videos. To overcome the limitations of monocular input, we introduce a part-based decomposition strategy that separates the avatar into body, face, hands, and clothing. This design reflects the varying levels of reconstruction difficulty and deformation complexity across these components. Specifically, we focus on detailed geometry recovery for the face and hands. For clothing, we propose a dedicated cloth simulation module that captures garment deformation using temporal motion cues and geometric constraints. Experimental results demonstrate that MonoCloth improves both visual reconstruction quality and animation realism compared to existing methods. Furthermore, thanks to its part-based design, MonoCloth also supports additional tasks such as clothing transfer, underscoring its versatility and practical utility.

Speak2Sign3D: A Multi-modal Pipeline for English Speech to American Sign Language Animation

Jul 09, 2025

Helping deaf and hard-of-hearing people communicate more easily is the main goal of Automatic Sign Language Translation. Although most past research has focused on turning sign language into text, doing the reverse, turning spoken English into sign language animations, has been largely overlooked. That's because it involves multiple steps, such as understanding speech, translating it into sign-friendly grammar, and generating natural human motion. In this work, we introduce a complete pipeline that converts English speech into smooth, realistic 3D sign language animations. Our system starts with Whisper to transcribe spoken English into text. Then, we use a MarianMT machine translation model to translate that text into American Sign Language (ASL) gloss, a simplified version of sign language that captures meaning without grammar. This model performs well, reaching BLEU scores of 0.7714 and 0.8923. To make the gloss translation more accurate, we also use word embeddings such as Word2Vec and FastText to understand word meanings. Finally, we animate the translated gloss using a 3D keypoint-based motion system trained on Sign3D-WLASL, a dataset we created by extracting body, hand, and face key points from real ASL videos in the WLASL dataset. To support the gloss translation stage, we also built a new dataset called BookGlossCorpus-CG, which turns everyday English sentences from the BookCorpus dataset into ASL gloss using grammar rules. Our system stitches everything together by smoothly interpolating between signs to create natural, continuous animations. Unlike previous works like How2Sign and Phoenix-2014T that focus on recognition or use only one type of data, our pipeline brings together audio, text, and motion in a single framework that goes all the way from spoken English to lifelike 3D sign language animation.
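The final stitching step, "smoothly interpolating between signs", might look roughly like the sketch below. The abstract does not say which interpolation scheme is used, so linear interpolation is an assumption here, as are the function names and the representation of a pose as a list of (x, y, z) keypoints.

```python
# Illustrative sketch only. Linear interpolation, the pose layout, and all
# names are assumptions; the paper's actual stitching method is not specified.

def interpolate_poses(pose_a, pose_b, n_frames):
    """Return n_frames in-between poses from pose_a to pose_b (endpoints excluded).

    A pose is a list of (x, y, z) keypoints; both poses must list the same
    keypoints in the same order.
    """
    frames = []
    for i in range(1, n_frames + 1):
        t = i / (n_frames + 1)  # interpolation weight strictly between 0 and 1
        frame = [
            tuple(a + t * (b - a) for a, b in zip(key_a, key_b))
            for key_a, key_b in zip(pose_a, pose_b)
        ]
        frames.append(frame)
    return frames


def stitch_signs(clips, n_transition):
    """Concatenate per-gloss clips, inserting interpolated transition frames
    between the last pose of one clip and the first pose of the next.

    clips: non-empty list of non-empty clips; a clip is a list of poses.
    """
    sequence = list(clips[0])
    for clip in clips[1:]:
        sequence.extend(interpolate_poses(sequence[-1], clip[0], n_transition))
        sequence.extend(clip)
    return sequence


if __name__ == "__main__":
    hello = [[(0.0, 0.0, 0.0)]]   # one-frame clip with a single keypoint
    world = [[(4.0, 0.0, 0.0)]]
    for frame in stitch_signs([hello, world], n_transition=3):
        print(frame)
```

Linear blending keeps positions continuous across clip boundaries but not velocities; a smoother variant could swap in an ease-in/ease-out weight for `t` without changing the rest of the structure.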
