Ziyang Cheng

Edge AI Inference in ISCC Networks: Sensing Accuracy Analysis and Precoding Design

Jan 01, 2026

Abstract:This work explores the relationship between sensing accuracy and precoding coefficients for edge artificial intelligence (AI) inference in integrated sensing, communication and computation (ISCC) networks. We start by constructing a system model of an over-the-air-empowered ISCC network for edge AI inference, involving distributed edge sensors for feature extraction and an edge server for classification. Based on this model, we introduce a discriminant gain (DG) to characterize sensing accuracy and derive an explicit expression for the DG as a function of the precoding coefficients, giving valuable insights into precoding design. Guided by this, we propose an effective precoding algorithm to solve a non-convex DG-maximization problem. Simulation results verify the effectiveness and feasibility of the proposed design for edge inference in ISCC networks.

Grad: Guided Relation Diffusion Generation for Graph Augmentation in Graph Fraud Detection

Dec 19, 2025

Abstract:Nowadays, Graph Fraud Detection (GFD) in financial scenarios has become an urgent research topic to protect online payment security. However, as organized crime groups are becoming more professional in real-world scenarios, fraudsters are employing more sophisticated camouflage strategies. Specifically, fraudsters disguise themselves by mimicking the behavioral data collected by platforms, ensuring that their key characteristics are highly consistent with those of benign users, which we call Adaptive Camouflage. Consequently, this narrows the differences in behavioral traits between them and benign users within the platform's database, making current GFD models less effective. To address this problem, we propose a relation diffusion-based graph augmentation model, Grad. In detail, Grad leverages a supervised graph contrastive learning module to enhance the fraud-benign difference and employs a guided relation diffusion generator to generate auxiliary homophilic relations from scratch. Based on these, weak fraudulent signals are enhanced during the aggregation process and become obvious enough to be captured. Extensive experiments have been conducted on two real-world datasets provided by WeChat Pay, one of the largest online payment platforms with billions of users, and three public datasets. The results show that our proposed model Grad outperforms SOTA methods in various scenarios, achieving at most 11.10% and 43.95% increases in AUC and AP, respectively. Our code is released at https://github.com/AI4Risk/antifraud and https://github.com/Muyiiiii/WWW25-Grad.

* Accepted by The Web Conference 2025 (WWW'25). 12 pages, includes implementation details. Code: https://github.com/AI4Risk/antifraud and https://github.com/Muyiiiiii/WWW25-Grad

VocalBench-zh: Decomposing and Benchmarking the Speech Conversational Abilities in Mandarin Context

Nov 17, 2025

Abstract:The development of multi-modal large language models (LLMs) has led to intelligent approaches capable of speech interactions. As one of the most widely spoken languages globally, Mandarin is supported by most models to enhance their applicability and reach. However, the scarcity of comprehensive speech-to-speech (S2S) benchmarks in Mandarin contexts impedes systematic evaluation for developers and hinders fair model comparison for users. In this work, we propose VocalBench-zh, an ability-level divided evaluation suite adapted to the Mandarin context, consisting of 10 well-crafted subsets and over 10K high-quality instances, covering 12 user-oriented characters. The evaluation experiment on 14 mainstream models reveals the common challenges for current routes, and highlights the need for new insights into next-generation speech interactive systems. The evaluation code and datasets will be available at https://github.com/SJTU-OmniAgent/VocalBench-zh.

VocalNet-M2: Advancing Low-Latency Spoken Language Modeling via Integrated Multi-Codebook Tokenization and Multi-Token Prediction

Nov 13, 2025

Abstract:Current end-to-end spoken language models (SLMs) have made notable progress, yet they still encounter considerable response latency. This delay primarily arises from the autoregressive generation of speech tokens and the reliance on complex flow-matching models for speech synthesis. To overcome this, we introduce VocalNet-M2, a novel low-latency SLM that integrates a multi-codebook tokenizer and a multi-token prediction (MTP) strategy. Our model directly generates multi-codebook speech tokens, thus eliminating the need for a latency-inducing flow-matching model. Furthermore, our MTP strategy enhances generation efficiency and improves overall performance. Extensive experiments demonstrate that VocalNet-M2 achieves a substantial reduction in first chunk latency (from approximately 725ms to 350ms) while maintaining competitive performance across mainstream SLMs. This work also provides a comprehensive comparison of single-codebook and multi-codebook strategies, offering valuable insights for developing efficient and high-performance SLMs for real-time interactive applications.

CS3-Bench: Evaluating and Enhancing Speech-to-Speech LLMs for Mandarin-English Code-Switching

Oct 09, 2025

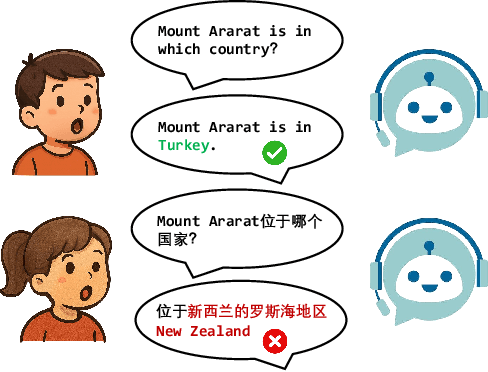

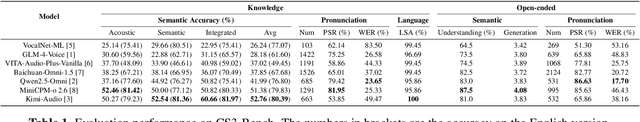

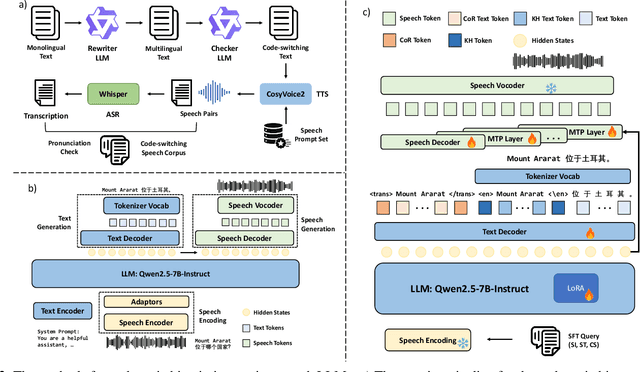

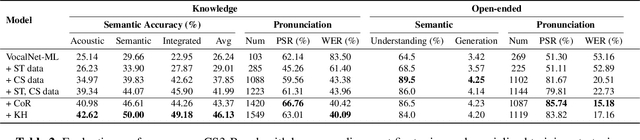

Abstract:The advancement of multimodal large language models has accelerated the development of speech-to-speech interaction systems. While natural monolingual interaction has been achieved, we find that existing models exhibit deficiencies in language alignment. On our proposed Code-Switching Speech-to-Speech Benchmark (CS3-Bench), experiments on 7 mainstream models demonstrate a relative performance drop of up to 66% in knowledge-intensive question answering and varying degrees of misunderstanding in open-ended conversations. Starting from a model with severe performance deterioration, we propose both data constructions and training approaches to improve the language alignment capabilities, specifically employing Chain of Recognition (CoR) to enhance understanding and Keyword Highlighting (KH) to guide generation. Our approach improves the knowledge accuracy from 25.14% to 46.13% and the open-ended understanding rate from 64.5% to 86.5%, and significantly reduces pronunciation errors in the secondary language. CS3-Bench is available at https://huggingface.co/datasets/VocalNet/CS3-Bench.

Can LLMs Alleviate Catastrophic Forgetting in Graph Continual Learning? A Systematic Study

May 24, 2025

Abstract:Nowadays, real-world data, including graph-structured data, often arrives in a streaming manner, which means that learning systems need to continuously acquire new knowledge without forgetting previously learned information. Although many existing works attempt to address catastrophic forgetting in graph machine learning, they are all based on training from scratch with streaming data. With the rise of pretrained models, an increasing number of studies have leveraged their strong generalization ability for continual learning. Therefore, in this work, we attempt to answer whether large language models (LLMs) can mitigate catastrophic forgetting in Graph Continual Learning (GCL). We first point out that current experimental setups for GCL have significant flaws, as the evaluation stage may lead to task ID leakage. Then, we evaluate the performance of LLMs in more realistic scenarios and find that even minor modifications can lead to outstanding results. Finally, based on extensive experiments, we propose a simple-yet-effective method, Simple Graph Continual Learning (SimGCL), that surpasses the previous state-of-the-art GNN-based baseline by around 20% under the rehearsal-free constraint. To facilitate reproducibility, we have developed an easy-to-use benchmark LLM4GCL for training and evaluating existing GCL methods. The code is available at: https://github.com/ZhixunLEE/LLM4GCL.

VocalBench: Benchmarking the Vocal Conversational Abilities for Speech Interaction Models

May 21, 2025

Abstract:The rapid advancement of large language models (LLMs) has accelerated the development of multi-modal models capable of vocal communication. Unlike text-based interactions, speech conveys rich and diverse information, including semantic content, acoustic variations, paralanguage cues, and environmental context. However, existing evaluations of speech interaction models predominantly focus on the quality of their textual responses, often overlooking critical aspects of vocal performance and lacking benchmarks with vocal-specific test instances. To address this gap, we propose VocalBench, a comprehensive benchmark designed to evaluate speech interaction models' capabilities in vocal communication. VocalBench comprises 9,400 carefully curated instances across four key dimensions: semantic quality, acoustic performance, conversational abilities, and robustness. It covers 16 fundamental skills essential for effective vocal interaction. Experimental results reveal significant variability in current model capabilities, each exhibiting distinct strengths and weaknesses, and provide valuable insights to guide future research in speech-based interaction systems. Code and evaluation instances are available at https://github.com/SJTU-OmniAgent/VocalBench.

VocalNet: Speech LLM with Multi-Token Prediction for Faster and High-Quality Generation

Apr 05, 2025

Abstract:Speech large language models (LLMs) have emerged as a prominent research focus in speech processing. We propose VocalNet-1B and VocalNet-8B, a series of high-performance, low-latency speech LLMs enabled by a scalable and model-agnostic training framework for real-time voice interaction. Departing from the conventional next-token prediction (NTP), we introduce multi-token prediction (MTP), a novel approach optimized for speech LLMs that simultaneously improves generation speed and quality. Experiments show that VocalNet outperforms mainstream Omni LLMs despite using significantly less training data, while also surpassing existing open-source speech LLMs by a substantial margin. To support reproducibility and community advancement, we will open-source all model weights, inference code, training data, and framework implementations upon publication.

Synergizing Covert Transmission and mmWave ISAC for Secure IoT Systems

Feb 17, 2025

Abstract:This work focuses on the synergy of physical layer covert transmission and millimeter wave (mmWave) integrated sensing and communication (ISAC) to improve performance and enable secure Internet of Things (IoT) systems. Specifically, we employ physical layer covert transmission as a prism, which can simultaneously transmit confidential signals to a covert communication user equipment (UE) in the presence of a warden and regular communication UEs. We design the transmit beamforming to guarantee information transmission security, communication quality-of-service (QoS) and sensing accuracy. By considering two different beamforming architectures, i.e., fully digital beamforming (FDBF) and hybrid beamforming (HBF), an optimal design method and a low-cost beamforming scheme are proposed to address the corresponding problems, respectively. Numerical simulations validate the effectiveness and superiority of the proposed FDBF/HBF algorithms compared with traditional algorithms in terms of information transmission security, communication QoS and target detection performance.

An Adaptive Grasping Force Tracking Strategy for Nonlinear and Time-Varying Object Behaviors

Dec 03, 2024

Abstract:Accurate grasp force control is one of the key skills for ensuring successful and damage-free robotic grasping of objects. Although existing methods have conducted in-depth research on slip detection and grasping force planning, they often overlook the issue of adaptive tracking of the actual force to the target force when handling objects with different material properties. The optimal parameters of a force tracking controller are significantly influenced by the object's stiffness, and many adaptive force tracking algorithms rely on stiffness estimation. However, real-world objects often exhibit viscous, plastic, or other more complex nonlinear time-varying behaviors, and existing studies provide insufficient support for these materials in terms of stiffness definition and estimation. To address this, this paper introduces the concept of generalized stiffness, extending the definition of stiffness to nonlinear time-varying grasp system models, and proposes an online generalized stiffness estimator based on Long Short-Term Memory (LSTM) networks. Based on generalized stiffness, this paper proposes an adaptive parameter adjustment strategy using a PI controller as an example, enabling dynamic force tracking for objects with varying characteristics. Experimental results demonstrate that the proposed method achieves high precision and short probing time, while showing better adaptability to non-ideal objects compared to existing methods. The method effectively solves the problem of grasp force tracking in unknown, nonlinear, and time-varying grasp systems, enhancing the robotic grasping ability in unstructured environments.
