Zhuo Wang

Singing Voice Conversion with Accompaniment Using Self-Supervised Representation-Based Melody Features

Feb 07, 2025

Abstract:Melody preservation is crucial in singing voice conversion (SVC). However, in many scenarios, audio is accompanied by background music (BGM), which can cause audio distortion and interfere with the extraction of melody and other key features, significantly degrading SVC performance. Previous methods have attempted to address this by using more robust neural network-based melody extractors, but their performance drops sharply in the presence of complex accompaniment. Other approaches involve performing source separation before conversion, but this often introduces noticeable artifacts, leading to a significant drop in conversion quality and increasing the user's operational costs. To address these issues, we introduce a novel SVC method that uses self-supervised representation-based melody features to improve melody modeling accuracy in the presence of BGM. In our experiments, we compare the effectiveness of different self-supervised learning (SSL) models for melody extraction and explore for the first time how SSL benefits the task of melody extraction. The experimental results demonstrate that our proposed SVC model significantly outperforms existing baseline methods in terms of melody accuracy and shows higher similarity and naturalness in both subjective and objective evaluations across noisy and clean audio environments.

AnyEnhance: A Unified Generative Model with Prompt-Guidance and Self-Critic for Voice Enhancement

Jan 26, 2025

Abstract:We introduce AnyEnhance, a unified generative model for voice enhancement that processes both speech and singing voices. Based on a masked generative model, AnyEnhance supports a wide range of enhancement tasks including denoising, dereverberation, declipping, super-resolution, and target speaker extraction, all simultaneously and without fine-tuning. AnyEnhance introduces a prompt-guidance mechanism for in-context learning, which allows the model to natively accept a reference speaker's timbre. In this way, it can boost enhancement performance when a reference audio is available and enable the target speaker extraction task without altering the underlying architecture. Moreover, we also introduce a self-critic mechanism into the generative process for masked generative models, yielding higher-quality outputs through iterative self-assessment and refinement. Extensive experiments on various enhancement tasks demonstrate that AnyEnhance outperforms existing methods in terms of both objective metrics and subjective listening tests. Demo audios are publicly available at https://amphionspace.github.io/anyenhance/.

Diverse Generation while Maintaining Semantic Coordination: A Diffusion-Based Data Augmentation Method for Object Detection

Aug 06, 2024

Abstract:Recent studies emphasize the crucial role of data augmentation in enhancing the performance of object detection models. However, existing methodologies often struggle to effectively harmonize dataset diversity with semantic coordination. To bridge this gap, we introduce an innovative augmentation technique leveraging pre-trained conditional diffusion models to mediate this balance. Our approach encompasses the development of a Category Affinity Matrix, meticulously designed to enhance dataset diversity, and a Surrounding Region Alignment strategy, which ensures the preservation of semantic coordination in the augmented images. Extensive experimental evaluations confirm the efficacy of our method in enriching dataset diversity while seamlessly maintaining semantic coordination. Our method yields substantial average improvements of +1.4AP, +0.9AP, and +3.4AP over existing alternatives on three distinct object detection models, respectively.

68-Channel Highly-Integrated Neural Signal Processing PSoC with On-Chip Feature Extraction, Compression, and Hardware Accelerators for Neuroprosthetics in 22nm FDSOI

Jul 12, 2024

Abstract:Multi-channel electrophysiology systems for recording of neuronal activity face significant data throughput limitations, hampering real-time, data-informed experiments. These limitations impact both experimental neurobiology research and next-generation neuroprosthetics. We present a novel solution that leverages the high integration density of 22nm FDSOI CMOS technology to address these challenges. The proposed highly integrated programmable System-on-Chip comprises 68-channel 0.41 µW/Ch recording frontends, spike detectors, 16-channel 0.87-4.39 µW/Ch action potential and 8-channel 0.32 µW/Ch local field potential codecs, as well as a MAC-assisted power-efficient processor operating at 25 MHz (5.19 µW/MHz). The system supports on-chip training processes for compression, training and inference for neural spike sorting. The spike sorting achieves an average accuracy of 91.48% or 94.12% depending on the utilized features. The proposed PSoC is optimized for reduced area (9 mm²) and power. On-chip processing and compression capabilities free up the data bottlenecks in data transmission (up to 91% space saving ratio), and moreover enable a fully autonomous yet flexible processor-driven operation. Combined, these design considerations overcome data-bottlenecks by allowing on-chip feature extraction and subsequent compression.

Validating Climate Models with Spherical Convolutional Wasserstein Distance

Jan 26, 2024

Abstract:The validation of global climate models is crucial to ensure the accuracy and efficacy of model output. We introduce the spherical convolutional Wasserstein distance to more comprehensively measure differences between climate models and reanalysis data. This new similarity measure accounts for spatial variability using convolutional projections and quantifies local differences in the distribution of climate variables. We apply this method to evaluate the historical model outputs of the Coupled Model Intercomparison Project (CMIP) members by comparing them to observational and reanalysis data products. Additionally, we investigate the progression from CMIP phase 5 to phase 6 and find modest improvements in the phase 6 models regarding their ability to produce realistic climatologies.

Learning Interpretable Rules for Scalable Data Representation and Classification

Oct 30, 2023

Abstract:Rule-based models, e.g., decision trees, are widely used in scenarios demanding high model interpretability for their transparent inner structures and good model expressivity. However, rule-based models are hard to optimize, especially on large data sets, due to their discrete parameters and structures. Ensemble methods and fuzzy/soft rules are commonly used to improve performance, but they sacrifice the model interpretability. To obtain both good scalability and interpretability, we propose a new classifier, named Rule-based Representation Learner (RRL), that automatically learns interpretable non-fuzzy rules for data representation and classification. To train the non-differentiable RRL effectively, we project it to a continuous space and propose a novel training method, called Gradient Grafting, that can directly optimize the discrete model using gradient descent. A novel design of logical activation functions is also devised to increase the scalability of RRL and enable it to discretize the continuous features end-to-end. Exhaustive experiments on ten small and four large data sets show that RRL outperforms the competitive interpretable approaches and can be easily adjusted to obtain a trade-off between classification accuracy and model complexity for different scenarios. Our code is available at: https://github.com/12wang3/rrl.

Can LLMs like GPT-4 outperform traditional AI tools in dementia diagnosis? Maybe, but not today

Jun 02, 2023

Abstract:Recent investigations show that large language models (LLMs), specifically GPT-4, not only have remarkable capabilities in common Natural Language Processing (NLP) tasks but also exhibit human-level performance on various professional and academic benchmarks. However, whether GPT-4 can be directly used in practical applications and replace traditional artificial intelligence (AI) tools in specialized domains requires further experimental validation. In this paper, we explore the potential of LLMs such as GPT-4 to outperform traditional AI tools in dementia diagnosis. Comprehensive comparisons between GPT-4 and traditional AI tools are conducted to examine their diagnostic accuracy in a clinical setting. Experimental results on two real clinical datasets show that, although LLMs like GPT-4 demonstrate potential for future advancements in dementia diagnosis, they currently do not surpass the performance of traditional AI tools. The interpretability and faithfulness of GPT-4 are also evaluated by comparison with real doctors. We discuss the limitations of GPT-4 in its current state and propose future research directions to enhance GPT-4 in dementia diagnosis.

Not Just Plain Text! Fuel Document-Level Relation Extraction with Explicit Syntax Refinement and Subsentence Modeling

Nov 10, 2022

Abstract:Document-level relation extraction (DocRE) aims to identify semantic labels among entities within a single document. One major challenge of DocRE is to dig decisive details regarding a specific entity pair from long text. However, in many cases, only a fraction of text carries required information, even in the manually labeled supporting evidence. To better capture and exploit instructive information, we propose a novel expLicit syntAx Refinement and Subsentence mOdeliNg based framework (LARSON). By introducing extra syntactic information, LARSON can model subsentences of arbitrary granularity and efficiently screen instructive ones. Moreover, we incorporate refined syntax into text representations which further improves the performance of LARSON. Experimental results on three benchmark datasets (DocRED, CDR, and GDA) demonstrate that LARSON significantly outperforms existing methods.

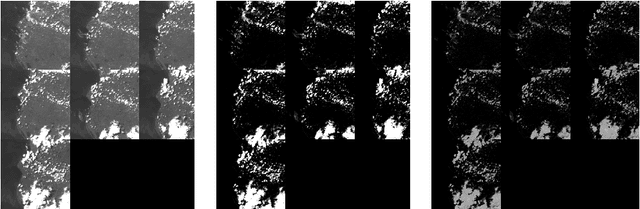

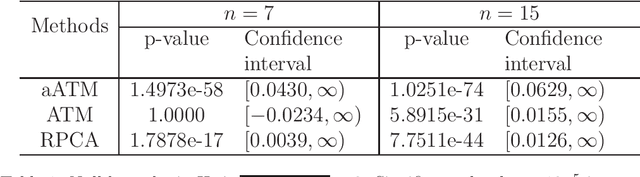

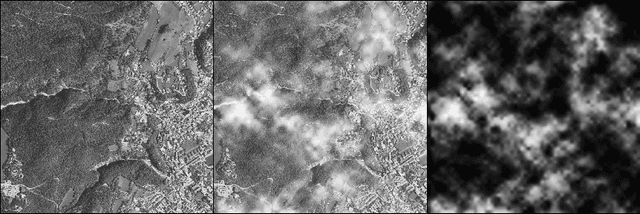

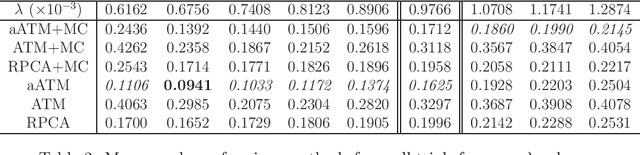

Cloud removal Using Atmosphere Model

Oct 05, 2022

Abstract:Cloud removal is an essential task in remote sensing data analysis. As the image sensors are distant from the earth's surface, it is likely that part of the area of interest is covered by cloud. Moreover, the atmosphere in between creates a constant haze layer upon the acquired images. To recover the ground image, we propose to use a scattering model for a temporal sequence of images of any scene in the framework of low rank and sparse models. We further develop its variant, which is much faster and yet more accurate. To measure the performance of different methods objectively, we develop a semi-realistic simulation method to produce cloud cover so that various methods can be quantitatively analysed. This enables detailed study of many aspects of cloud removal algorithms, including verifying the effectiveness of the proposed models in comparison with the state of the art, including deep learning models, and addressing the long-standing problem of the determination of regularisation parameters. The latter is accompanied by theoretical analysis of the range of the sparsity regularisation parameter and verified numerically.

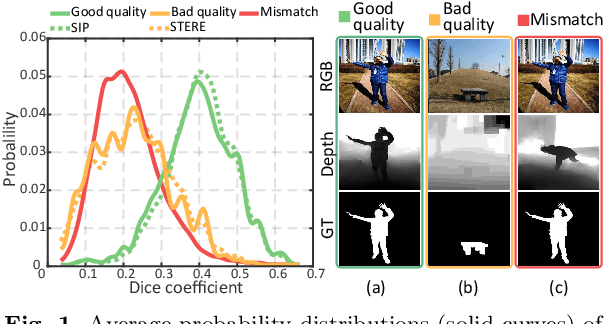

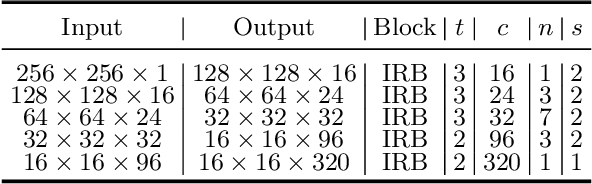

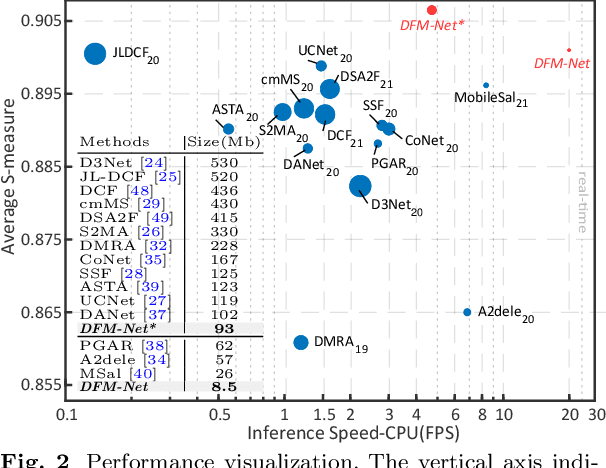

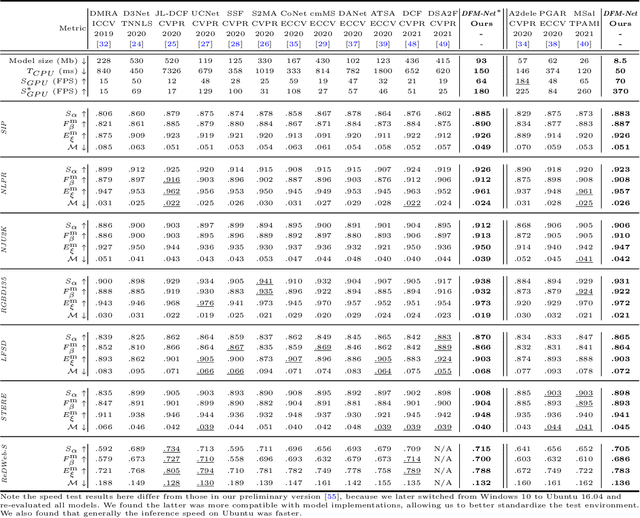

Depth Quality-Inspired Feature Manipulation for Efficient RGB-D and Video Salient Object Detection

Aug 08, 2022

Abstract:Recently, CNN-based RGB-D salient object detection (SOD) has achieved significant improvements in detection accuracy. However, existing models often fail to perform well in terms of efficiency and accuracy simultaneously. This hinders their potential applications on mobile devices as well as many real-world problems. To bridge the accuracy gap between lightweight and large models for RGB-D SOD, in this paper, an efficient module that can greatly improve the accuracy but adds little computation is proposed. Inspired by the fact that depth quality is a key factor influencing the accuracy, we propose an efficient depth quality-inspired feature manipulation (DQFM) process, which can dynamically filter depth features according to depth quality. The proposed DQFM resorts to the alignment of low-level RGB and depth features, as well as holistic attention of the depth stream to explicitly control and enhance cross-modal fusion. We embed DQFM to obtain an efficient lightweight RGB-D SOD model called DFM-Net, where we in addition design a tailored depth backbone and a two-stage decoder as basic parts. Extensive experimental results on nine RGB-D datasets demonstrate that our DFM-Net outperforms recent efficient models, running at about 20 FPS on CPU with only 8.5Mb model size, and meanwhile being 2.9/2.4 times faster and 6.7/3.1 times smaller than the latest best models A2dele and MobileSal. It also maintains state-of-the-art accuracy even when compared to non-efficient models. Interestingly, further statistics and analyses verify the ability of DQFM in distinguishing depth maps of various qualities without any quality labels. Last but not least, we further apply DFM-Net to deal with video SOD (VSOD), achieving comparable performance against recent efficient models while being 3/2.3 times faster/smaller than the prior best in this field. Our code is available at https://github.com/zwbx/DFM-Net.
