Zhongyu Li

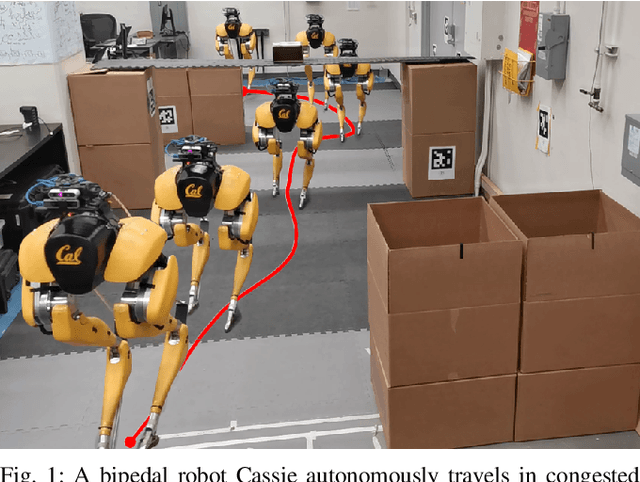

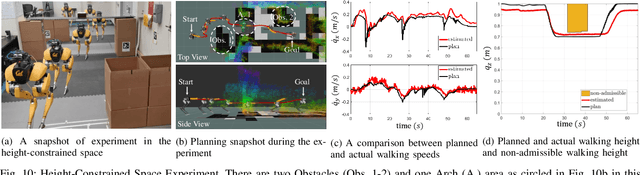

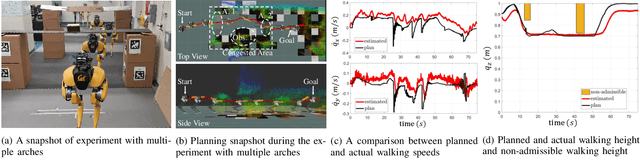

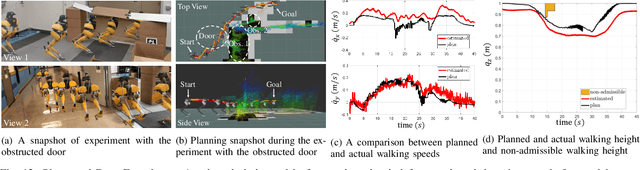

Vision-Aided Autonomous Navigation of Bipedal Robots in Height-Constrained Environments

Sep 13, 2021

Abstract: Navigating a large-scale robot in unknown and cluttered height-constrained environments is challenging. Not only is a fast and reliable planning algorithm required to go around obstacles, but the robot should also be able to change its intrinsic dimension by crouching in order to travel underneath height-constrained regions. There are few mobile robots that are capable of handling such a challenge, and bipedal robots provide a solution. However, as bipedal robots have nonlinear and hybrid dynamics, trajectory planning while ensuring dynamic feasibility and safety on these robots is challenging. This paper presents an end-to-end vision-aided autonomous navigation framework which leverages three layers of planners and a variable walking height controller to enable bipedal robots to safely explore height-constrained environments. A vertically actuated Spring-Loaded Inverted Pendulum (vSLIP) model is introduced to capture the robot's coupled dynamics of planar walking and vertical walking height. This reduced-order model is utilized to optimize for long-term and short-term safe trajectory plans. A variable walking height controller is leveraged to enable the bipedal robot to maintain stable periodic walking gaits while following the planned trajectory. The entire framework is tested and experimentally validated using the bipedal robot Cassie. This demonstrates reliable autonomy to drive the robot to safely avoid obstacles while walking to the goal location in various kinds of height-constrained cluttered environments.

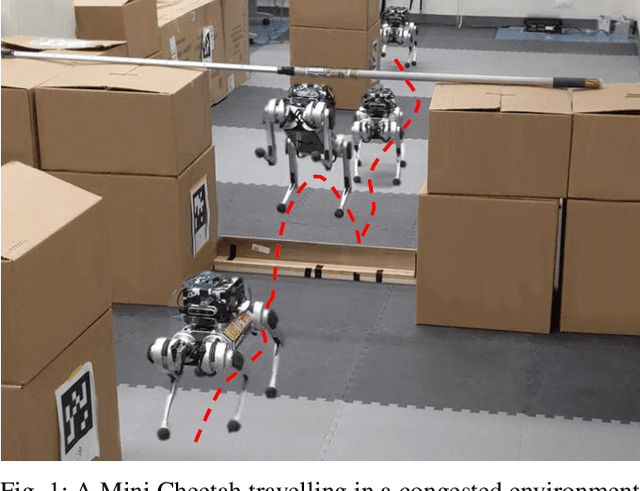

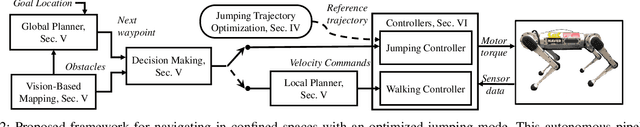

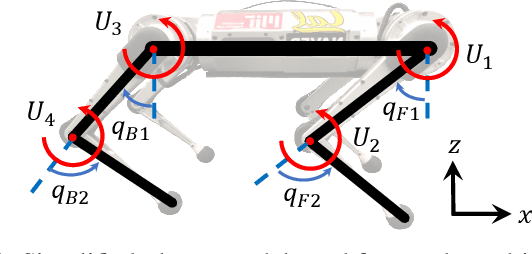

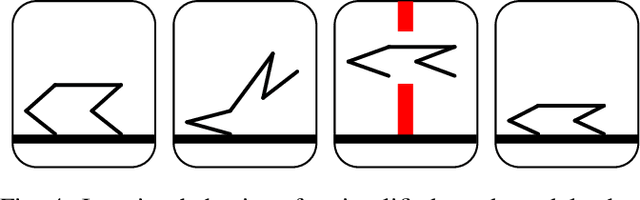

Autonomous Navigation for Quadrupedal Robots with Optimized Jumping through Constrained Obstacles

Jul 01, 2021

Abstract: Quadrupeds are strong candidates for navigating challenging environments because of their agile and dynamic designs. This paper presents a methodology that extends the range of exploration for quadrupedal robots by creating an end-to-end navigation framework that exploits walking and jumping modes. To obtain a dynamic jumping maneuver while avoiding obstacles, dynamically feasible trajectories are optimized offline through collocation-based optimization where safety constraints are imposed. This optimization scheme allows the robot to jump through window-shaped obstacles by considering obstacles both in the air and on the ground. The resulting jumping mode is utilized in an autonomous navigation pipeline that leverages a search-based global planner and a local planner to enable the robot to reach the goal location by walking. A state machine together with a decision-making strategy allows the system to switch behaviors between walking around obstacles and jumping through them. The proposed framework is experimentally deployed and validated on a quadrupedal robot, a Mini Cheetah, to enable the robot to autonomously navigate through an environment while avoiding obstacles and jumping over a maximum height of 13 cm to pass through a window-shaped opening in order to reach its goal.

CRT-Net: A Generalized and Scalable Framework for the Computer-Aided Diagnosis of Electrocardiogram Signals

May 28, 2021

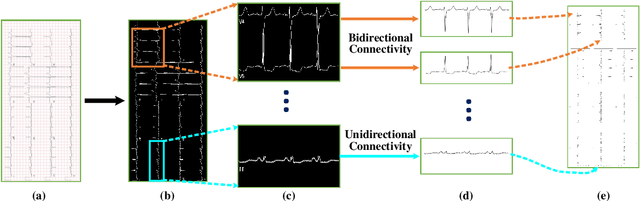

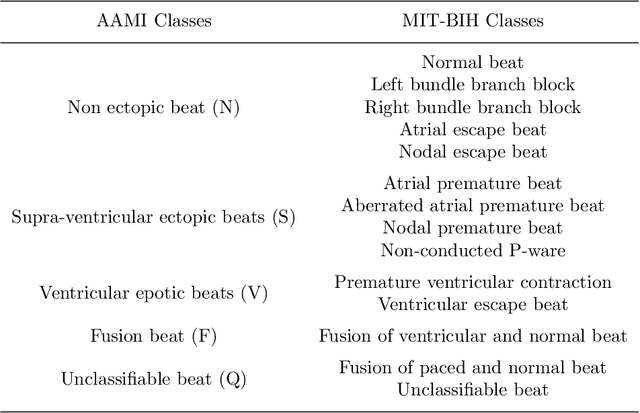

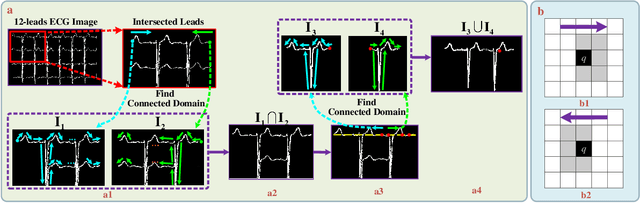

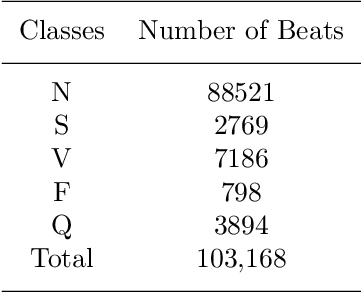

Abstract: Electrocardiogram (ECG) signals play critical roles in the clinical screening and diagnosis of many types of cardiovascular diseases. Although deep neural networks have greatly facilitated computer-aided diagnosis (CAD) in many clinical tasks, the variability and complexity of ECG in the clinic still pose significant challenges in both diagnostic performance and clinical applications. In this paper, we develop a robust and scalable framework for the clinical recognition of ECG. Considering the fact that hospitals generally record ECG signals in the form of graphic waves in 2-D images, we first convert the graphic waves of 12-lead images into numerical 1-D ECG signals by a proposed bi-directional connectivity method. Subsequently, a novel deep neural network, namely CRT-Net, is designed for the fine-grained and comprehensive representation and recognition of 1-D ECG signals. CRT-Net explores waveform features, morphological characteristics, and time-domain features of ECG by embedding a convolutional neural network (CNN), a recurrent neural network (RNN), and a transformer module in a scalable deep model, which is especially suitable in clinical scenarios with different lengths of ECG signals captured from different devices. The proposed framework is first evaluated on two widely investigated public repositories, demonstrating superior ECG recognition performance in comparison with the state of the art. Moreover, we validate the effectiveness of our proposed bi-directional connectivity method and CRT-Net on clinical ECG images collected from a local hospital, including 258 patients with chronic kidney disease (CKD), 351 patients with Type-2 Diabetes (T2DM), and around 300 patients in the control group. In the experiments, our methods achieve excellent performance in the recognition of these two types of disease.

Enhancing Feasibility and Safety of Nonlinear Model Predictive Control with Discrete-Time Control Barrier Functions

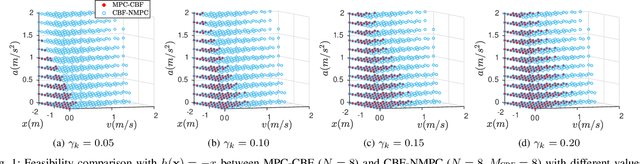

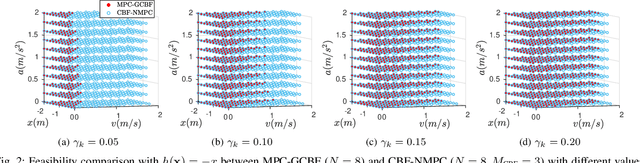

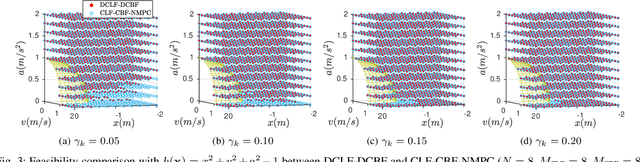

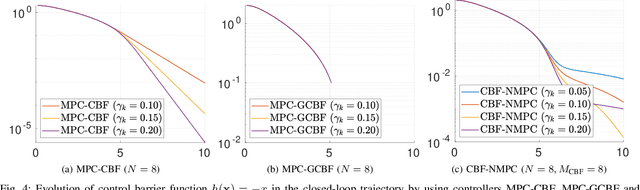

May 21, 2021

Abstract: Safety is one of the fundamental problems in robotics. Recently, one-step or multi-step optimal control problems for discrete-time nonlinear dynamical systems have been formulated to offer tracking stability using control Lyapunov functions (CLFs) while subject to input constraints as well as safety-critical constraints using control barrier functions (CBFs). The limitations of these existing approaches are mainly about feasibility and safety: theoretically, the optimization feasibility and the system safety cannot be enhanced at the same time. In this paper, we propose two formulations that unify CLFs and CBFs under the framework of nonlinear model predictive control (NMPC). In the proposed formulations, safety criteria are commonly formulated as CBF constraints, and stability performance is ensured with either a terminal cost function or CLF constraints. Relaxing variables are introduced on the CBF constraints to resolve the tradeoff between feasibility and safety so that they can be enhanced at the same time. The advantages in feasibility and safety of the proposed formulations compared with existing methods are analyzed theoretically and validated with numerical results.
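To make the idea of a relaxed CBF constraint concrete, here is a minimal sketch of a commonly used discrete-time form, h(x_{k+1}) >= omega * (1 - gamma) * h(x_k), where h is the barrier function, gamma in (0, 1] is a decay rate, and omega >= 0 is a relaxing variable. The abstract does not spell out the constraint, so this form, the scalar single-integrator dynamics, the barrier `x_max - x`, and all function names are assumptions chosen for illustration; the paper's exact formulation may differ.

```python
def dcbf_constraint_holds(h_now, h_next, gamma, omega=1.0):
    """Check the (assumed) relaxed discrete-time CBF condition
    h(x_{k+1}) >= omega * (1 - gamma) * h(x_k)."""
    if not 0.0 < gamma <= 1.0:
        raise ValueError("gamma must lie in (0, 1]")
    if omega < 0.0:
        raise ValueError("omega must be non-negative")
    return h_next >= omega * (1.0 - gamma) * h_now


def barrier(x, x_max=1.0):
    # Hypothetical safe set: positions with x <= x_max, so h(x) >= 0 means safe.
    return x_max - x


def step(x, u):
    # Hypothetical single-integrator dynamics: x_{k+1} = x_k + u.
    return x + u


x0 = 0.0
cautious = step(x0, 0.4)    # moves 40% of the way to the boundary
aggressive = step(x0, 0.8)  # moves 80% of the way to the boundary

# With omega = 1 the barrier may shrink by at most a factor (1 - gamma) per step.
ok_cautious = dcbf_constraint_holds(barrier(x0), barrier(cautious), gamma=0.5)
ok_aggressive = dcbf_constraint_holds(barrier(x0), barrier(aggressive), gamma=0.5)

# A smaller omega relaxes the decay bound, so the aggressive step becomes admissible
# while the next state still satisfies h >= 0.
ok_relaxed = dcbf_constraint_holds(
    barrier(x0), barrier(aggressive), gamma=0.5, omega=0.2
)
```

In an NMPC setting, omega would typically be a decision variable that is penalized in the cost, so the optimizer relaxes the decay rate only when needed to keep the problem feasible; here it is fixed by hand to keep the sketch short.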

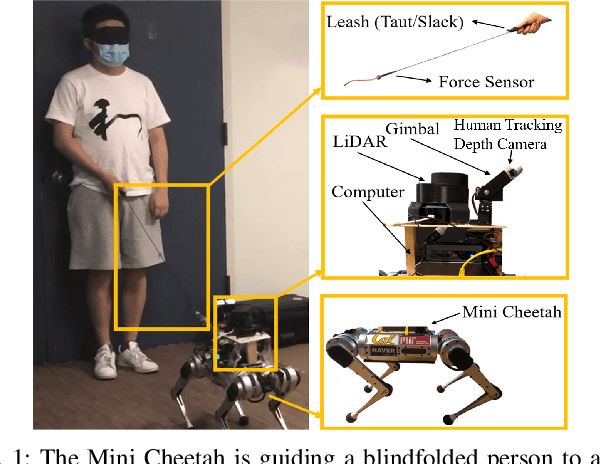

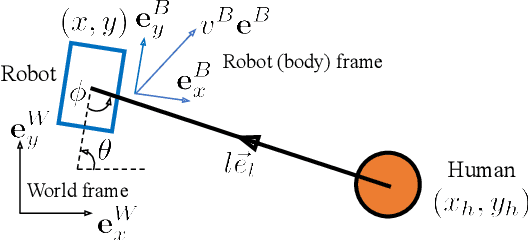

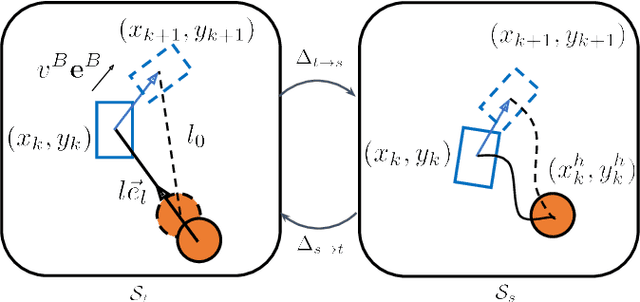

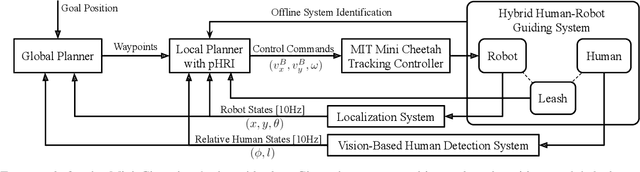

Robotic Guide Dog: Leading a Human with Leash-Guided Hybrid Physical Interaction

Mar 26, 2021

Abstract: An autonomous robot that is able to physically guide humans through narrow and cluttered spaces could be a big boon to the visually impaired. Most prior robotic guiding systems are based on wheeled platforms with large bases and actuated rigid guiding canes. The large bases and the actuated arms limit these prior approaches from operating in narrow and cluttered environments. We propose a method that introduces a quadrupedal robot with a leash to enable the robot-guiding human system to change its intrinsic dimension (by letting the leash go slack) in order to fit into narrow spaces. We propose a hybrid physical Human-Robot Interaction model that involves leash tension to describe the dynamical relationship in the robot-guiding human system. This hybrid model is utilized in a mixed-integer programming problem to develop a reactive planner that is able to utilize slack-taut switching to guide a blindfolded person to safely travel in a confined space. The proposed leash-guided robot framework is deployed on a Mini Cheetah quadrupedal robot and validated in experiments.

Reinforcement Learning for Robust Parameterized Locomotion Control of Bipedal Robots

Mar 26, 2021

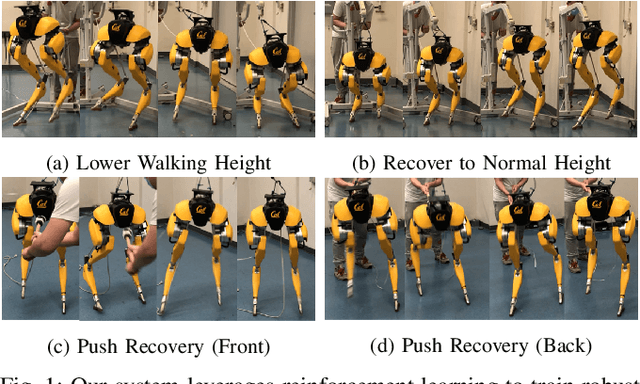

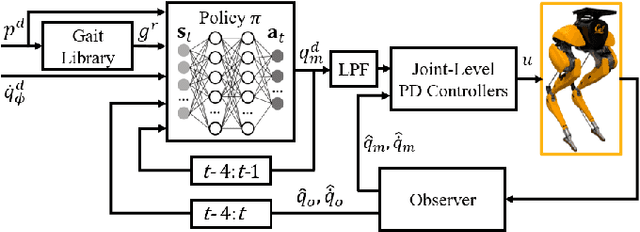

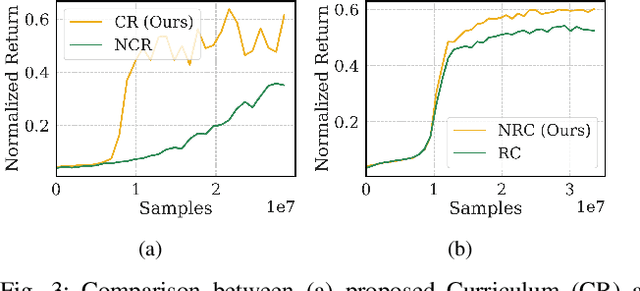

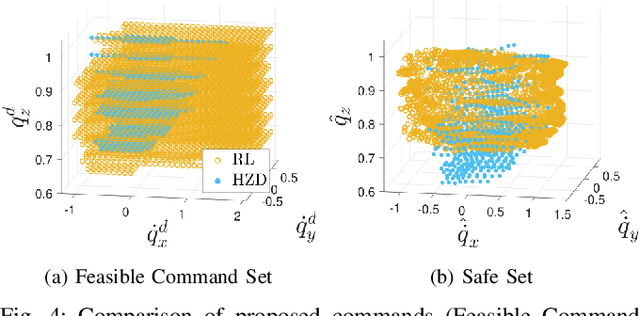

Abstract: Developing robust walking controllers for bipedal robots is a challenging endeavor. Traditional model-based locomotion controllers require simplifying assumptions and careful modelling; any small errors can result in unstable control. To address these challenges for bipedal locomotion, we present a model-free reinforcement learning framework for training robust locomotion policies in simulation, which can then be transferred to a real bipedal Cassie robot. To facilitate sim-to-real transfer, domain randomization is used to encourage the policies to learn behaviors that are robust across variations in system dynamics. The learned policies enable Cassie to perform a set of diverse and dynamic behaviors, while also being more robust than traditional controllers and prior learning-based methods that use residual control. We demonstrate this on versatile walking behaviors such as tracking a target walking velocity, walking height, and turning yaw.

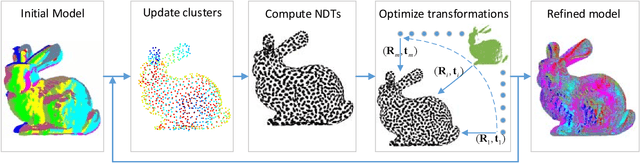

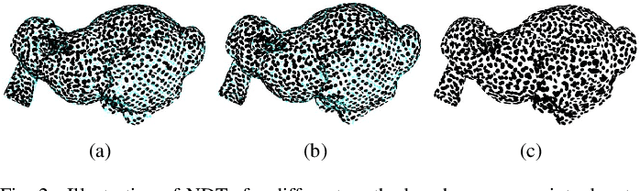

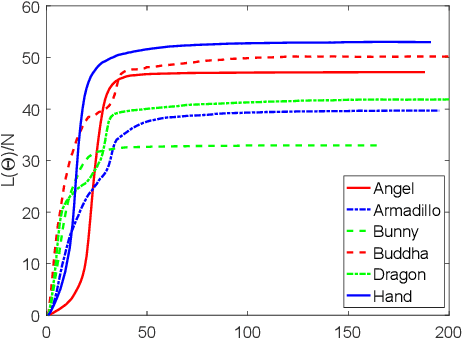

3DMNDT: 3D multi-view registration method based on the normal distributions transform

Mar 20, 2021

Abstract: The normal distributions transform (NDT) is an effective paradigm for point set registration. This method was originally designed for pair-wise registration, and it faces great challenges when applied to multi-view registration. Under the NDT framework, this paper proposes a novel multi-view registration method, named 3D multi-view registration based on the normal distributions transform (3DMNDT), which integrates K-means clustering and a Lie algebra solver to achieve multi-view registration. More specifically, multi-view registration is cast as a maximum likelihood estimation problem. Then, the K-means algorithm is utilized to divide all data points into different clusters, where a normal distribution is computed to locally model the probability of measuring a data point in each cluster. Subsequently, the registration problem is formulated by the NDT-based likelihood function. To maximize this likelihood function, the Lie algebra solver is developed to sequentially optimize each rigid transformation. The proposed method alternately implements data point clustering, NDT computing, and likelihood maximization until the desired registration results are obtained. Experimental results on benchmark data sets illustrate that the proposed method can achieve state-of-the-art performance for multi-view registration.
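The clustering and NDT-computing steps described above can be sketched roughly as follows: points are grouped with K-means, and one Gaussian (mean and covariance) is fitted per cluster so that the log-density of any point can be evaluated. This is only an illustration of those two steps under stated assumptions: the farthest-point initialization, the covariance regularizer `eps`, and all function names are hypothetical, and the Lie algebra solver that optimizes the rigid transformations is not shown.

```python
import numpy as np


def farthest_point_init(points, k):
    # Deterministic seeding (an assumption): start at the first point, then
    # repeatedly pick the point farthest from all centers chosen so far.
    centers = [points[0]]
    for _ in range(1, k):
        dists = np.min([np.linalg.norm(points - c, axis=1) for c in centers], axis=0)
        centers.append(points[dists.argmax()])
    return np.array(centers, dtype=float)


def assign(points, centers):
    # Index of the nearest center for every point.
    dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
    return dists.argmin(axis=1)


def kmeans(points, k, iters=20):
    centers = farthest_point_init(points, k)
    for _ in range(iters):
        labels = assign(points, centers)
        for j in range(k):
            members = points[labels == j]
            if len(members) > 0:  # keep the old center if a cluster empties
                centers[j] = members.mean(axis=0)
    return assign(points, centers), centers


def fit_cluster_gaussians(points, labels, k, eps=1e-6):
    # One (mean, covariance) pair per cluster; assumes each cluster holds at
    # least two points. eps * I keeps the covariance invertible.
    dim = points.shape[1]
    cells = []
    for j in range(k):
        members = points[labels == j]
        cov = np.cov(members, rowvar=False) + eps * np.eye(dim)
        cells.append((members.mean(axis=0), cov))
    return cells


def gaussian_logpdf(x, mean, cov):
    diff = x - mean
    _, logdet = np.linalg.slogdet(cov)
    maha = diff @ np.linalg.solve(cov, diff)
    return -0.5 * (len(mean) * np.log(2.0 * np.pi) + logdet + maha)


# Synthetic data: two well-separated 3D blobs.
rng = np.random.default_rng(0)
cloud = np.vstack([rng.normal(0.0, 0.5, (50, 3)), rng.normal(10.0, 0.5, (50, 3))])
labels, _ = kmeans(cloud, k=2)
cells = fit_cluster_gaussians(cloud, labels, k=2)
```

A registration method in this style would sum such log-densities over transformed points and maximize the total with respect to the rigid transformations; only the density model is illustrated here.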

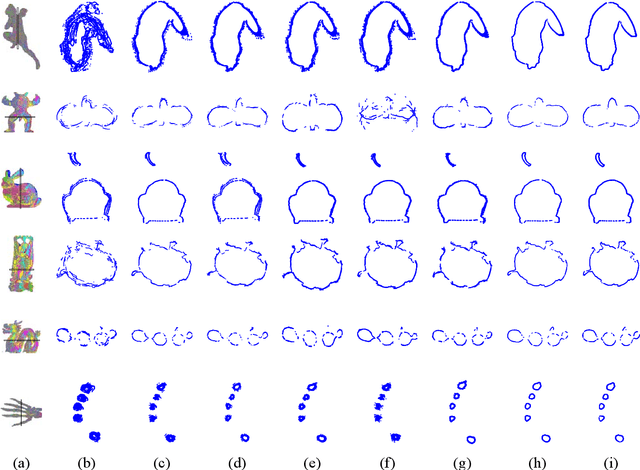

Effective multi-view registration of point sets based on Student's t mixture model

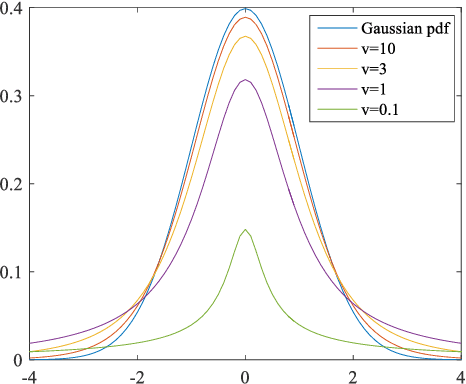

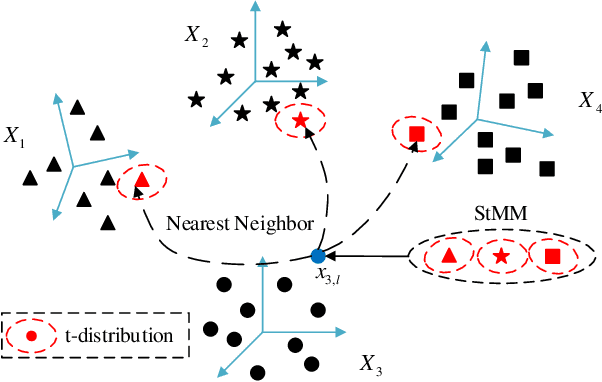

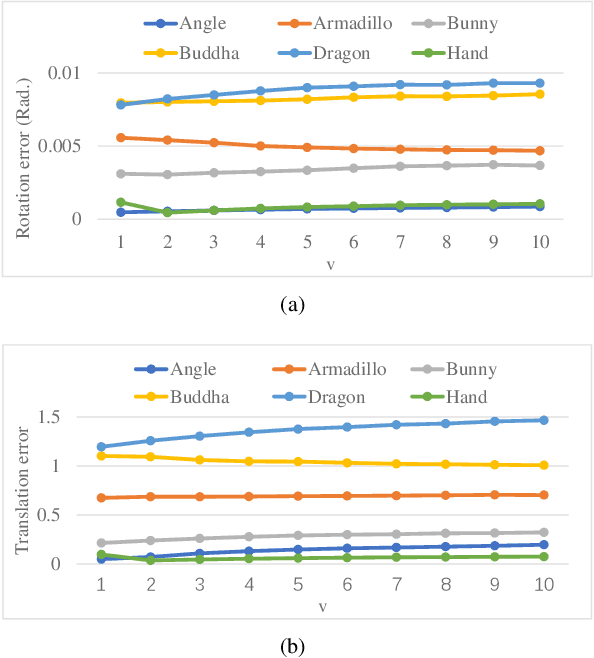

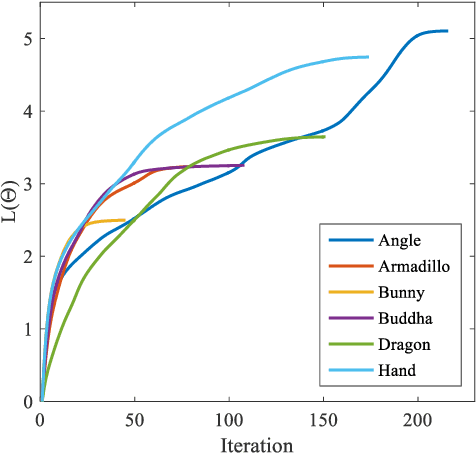

Dec 13, 2020

Abstract: Recently, the Expectation-Maximization (EM) algorithm has been introduced as an effective means to solve the multi-view registration problem. Most of the previous methods assume that each data point is drawn from a Gaussian Mixture Model (GMM), which has difficulty handling heavy-tailed noise or outliers. Accordingly, this paper proposes an effective registration method based on the Student's t Mixture Model (StMM). More specifically, we assume that each data point is drawn from one unique StMM, where its nearest neighbors (NNs) in other point sets are regarded as the t-distribution centroids with equal covariances, membership probabilities, and fixed degrees of freedom. Based on this assumption, the multi-view registration problem is formulated as the maximization of a likelihood function that includes all rigid transformations. Subsequently, the EM algorithm is utilized to optimize the rigid transformations as well as the single t-distribution covariance for multi-view registration. Since only a few model parameters need to be optimized, the proposed method is more likely to obtain the desired registration results. Moreover, since all t-distribution centroids can be obtained by the NN search method, multi-view registration is achieved very efficiently. Furthermore, the t-distribution takes heavy-tailed noise into consideration, which makes the proposed method inherently robust to noise and outliers. Experimental results on benchmark data sets illustrate its superior robustness and accuracy over state-of-the-art methods.
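The robustness claim can be illustrated with the standard latent-scale weight that appears in the E-step of EM for a multivariate Student's t distribution: u = (nu + d) / (nu + delta^2), where d is the dimension, nu the degrees of freedom, and delta^2 the squared Mahalanobis distance to the centroid. The sketch below assumes an isotropic covariance `sigma2 * I` and uses hypothetical names and data; it shows only this weighting, not the paper's full registration algorithm.

```python
import numpy as np


def student_t_weights(points, centroid, sigma2, nu):
    """Latent-scale weights u_i = (nu + d) / (nu + delta_i^2) for an
    isotropic t-distribution with covariance sigma2 * I (an assumption)."""
    points = np.asarray(points, dtype=float)
    dim = points.shape[1]
    sq_maha = np.sum((points - centroid) ** 2, axis=1) / sigma2
    return (nu + dim) / (nu + sq_maha)


# Hypothetical residuals: two inliers close to the centroid and one far outlier.
pts = np.array([[0.0, 0.0, 0.0],
                [0.1, 0.0, 0.0],
                [5.0, 0.0, 0.0]])
centroid = np.zeros(3)
weights = student_t_weights(pts, centroid, sigma2=0.01, nu=3.0)

# The weighted mean is pulled far less by the outlier than the plain mean.
plain_mean = pts.mean(axis=0)
robust_mean = (weights[:, None] * pts).sum(axis=0) / weights.sum()
```

Because the weight decays as delta^2 grows, distant points contribute little to the parameter updates, whereas a Gaussian model would weight every point equally for a fixed membership probability.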

Tensor-based Intrinsic Subspace Representation Learning for Multi-view Clustering

Nov 12, 2020

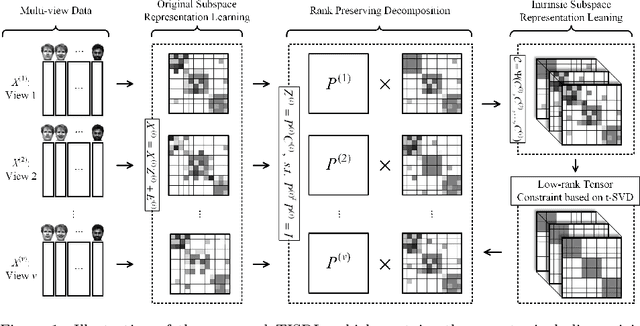

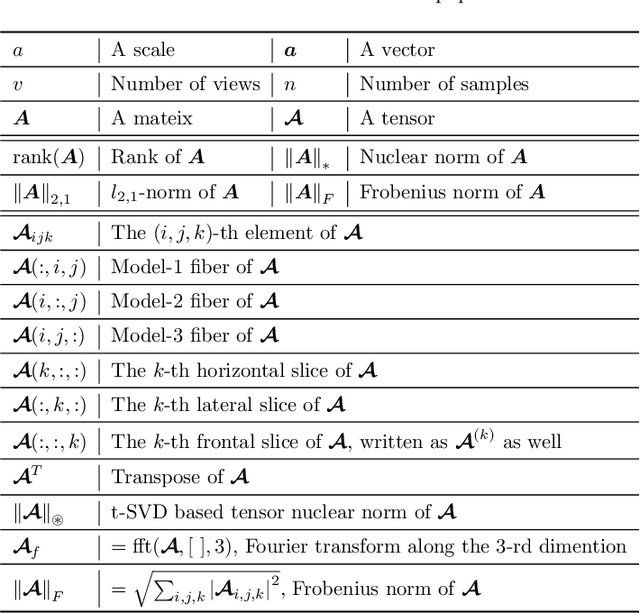

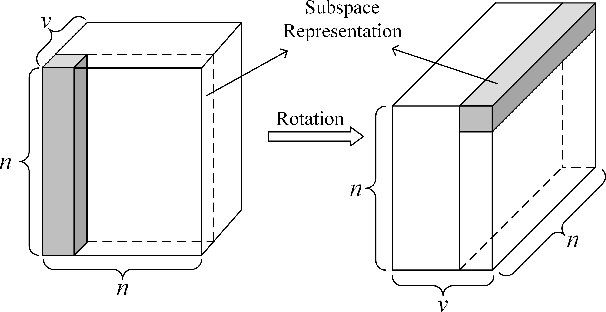

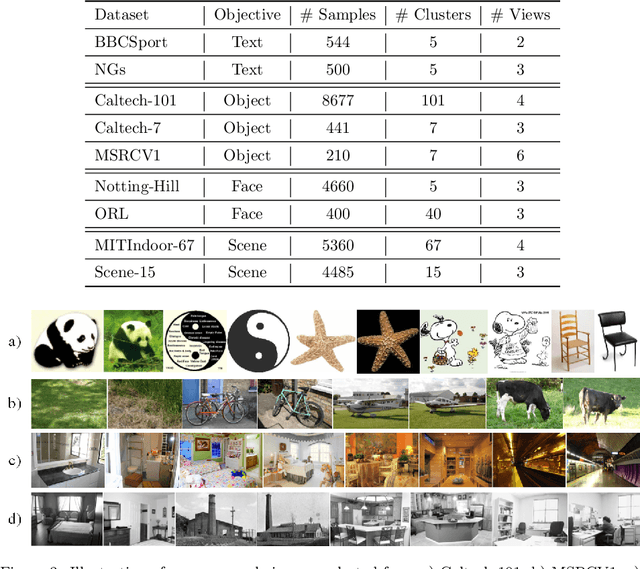

Abstract: Multi-view clustering is a hot research topic, and many approaches have been proposed over the past few years. Nevertheless, most existing algorithms merely take the consensus information among different views into consideration for clustering. This may hinder multi-view clustering performance in real-life applications, since different views usually have diverse statistical properties. To address this problem, we propose a novel Tensor-based Intrinsic Subspace Representation Learning (TISRL) method for multi-view clustering in this paper. Concretely, the rank-preserving decomposition is first proposed to effectively deal with the diverse statistical information contained in different views. Then, to achieve the intrinsic subspace representation, a low-rank tensor constraint based on the tensor singular value decomposition is also utilized in our method. The specific information contained in different views is fully investigated by the rank-preserving decomposition, and the high-order correlations of multi-view data are also mined by the low-rank tensor constraint. The objective function can be optimized by an augmented Lagrangian multiplier based alternating direction minimization algorithm. Experimental results on nine commonly used real-world multi-view datasets illustrate the superiority of TISRL.

Multi-view Subspace Clustering Networks with Local and Global Graph Information

Oct 24, 2020

Abstract: This study investigates the problem of multi-view subspace clustering, the goal of which is to explore the underlying grouping structure of data collected from different fields or measurements. Since data do not always comply with linear subspace models in many real-world applications, most existing multi-view subspace clustering methods that are based on shallow linear subspace models may fail in practice. Furthermore, the underlying graph information of multi-view data is ignored in most existing multi-view subspace clustering methods. To address the aforementioned limitations, we propose novel multi-view subspace clustering networks with local and global graph information, termed MSCNLG, in this paper. Specifically, autoencoder networks are employed on multiple views to achieve latent smooth representations that are suitable for the linear assumption. Simultaneously, by integrating fused multi-view graph information into self-expressive layers, the proposed MSCNLG obtains the common shared multi-view subspace representation, which can be used to get clustering results by employing the standard spectral clustering algorithm. As an end-to-end trainable framework, the proposed method fully investigates the valuable information of multiple views. Comprehensive experiments on six benchmark datasets validate the effectiveness and superiority of the proposed MSCNLG.
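The final step, turning a self-expressive coefficient matrix into clusters with spectral clustering, can be sketched as below. The sketch assumes the common construction W = (|C| + |C|^T) / 2 for the affinity and, to stay short, handles only two clusters by splitting on the sign of the second eigenvector of the normalized Laplacian; general spectral clustering would instead run K-means on several eigenvectors. The coefficient matrix here is synthetic, and the function name is hypothetical.

```python
import numpy as np


def two_way_spectral_split(coeffs):
    """Split samples into two groups from a self-expressive matrix.

    Assumes every sample has a non-zero total affinity to the others.
    """
    c = np.abs(np.asarray(coeffs, dtype=float))
    affinity = 0.5 * (c + c.T)          # symmetrize the coefficients
    np.fill_diagonal(affinity, 0.0)     # ignore self-representation
    degree = affinity.sum(axis=1)
    inv_sqrt = 1.0 / np.sqrt(degree)
    # Symmetric normalized Laplacian: I - D^{-1/2} W D^{-1/2}.
    laplacian = np.eye(len(affinity)) - inv_sqrt[:, None] * affinity * inv_sqrt[None, :]
    _, eigvecs = np.linalg.eigh(laplacian)   # eigenvalues in ascending order
    fiedler = eigvecs[:, 1]                  # second-smallest eigenvector
    return (fiedler > 0).astype(int)


# Synthetic coefficients: two groups of three samples with strong within-group
# links (1.0) and weak cross-group links (0.01) so the graph stays connected.
coeffs = np.full((6, 6), 0.01)
coeffs[:3, :3] = 1.0
coeffs[3:, 3:] = 1.0
labels = two_way_spectral_split(coeffs)
```

Which group receives label 0 or 1 depends on the arbitrary sign of the eigenvector, so only the grouping itself is meaningful.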
