Yuge Huang

Evaluation-oriented Knowledge Distillation for Deep Face Recognition

Jun 06, 2022

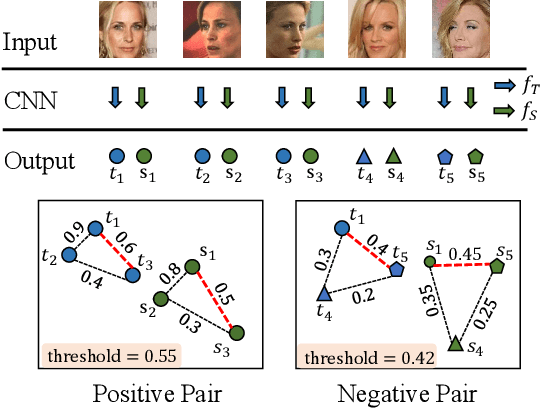

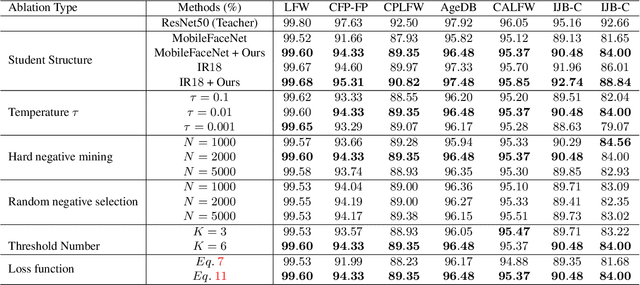

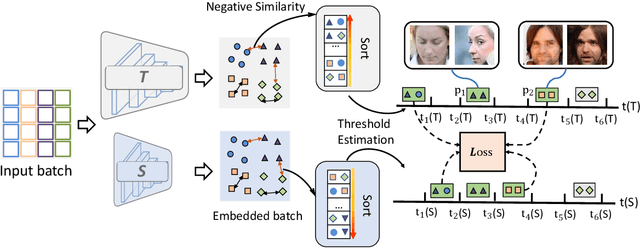

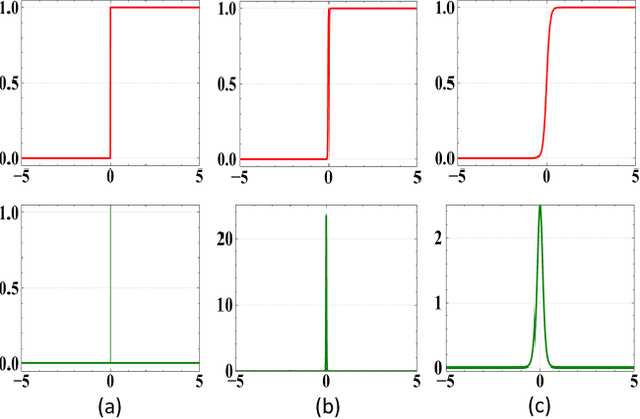

Abstract: Knowledge distillation (KD) is a widely used technique that utilizes large networks to improve the performance of compact models. Previous KD approaches usually aim to guide the student to mimic the teacher's behavior completely in the representation space. However, such one-to-one constraints may lead to inflexible knowledge transfer from the teacher to the student, especially for students with low model capacity. Inspired by the ultimate goal of KD methods, we propose a novel Evaluation-oriented KD method (EKD) for deep face recognition that directly reduces the performance gap between the teacher and student models during training. Specifically, we adopt the commonly used evaluation metrics in face recognition, i.e., False Positive Rate (FPR) and True Positive Rate (TPR), as the performance indicators. According to the evaluation protocol, the critical pair relations that cause the TPR and FPR differences between the teacher and student models are selected. Then, the critical relations in the student are constrained to approximate the corresponding ones in the teacher by a novel rank-based loss function, giving more flexibility to a student with low capacity. Extensive experimental results on popular benchmarks demonstrate the superiority of our EKD over state-of-the-art competitors.
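The TPR and FPR metrics named in this abstract can be illustrated with a small sketch. It is not the EKD loss itself: it only shows, under assumptions, how a verification threshold might be picked for a target FPR and how the resulting TPR is measured. The function name `tpr_at_fpr` and the toy similarity scores are hypothetical.

```python
def tpr_at_fpr(pos_scores, neg_scores, target_fpr):
    """Pick a threshold so that at most `target_fpr` of the negative pairs
    score strictly above it, then report the TPR at that threshold.

    pos_scores: similarities of same-identity pairs (assumed input).
    neg_scores: similarities of different-identity pairs (assumed input).
    """
    neg_sorted = sorted(neg_scores, reverse=True)
    # Number of negative pairs allowed to exceed the threshold.
    k = int(target_fpr * len(neg_sorted))
    # The (k+1)-th largest negative score becomes the threshold;
    # only scores strictly above it count as accepted.
    threshold = neg_sorted[k] if k < len(neg_sorted) else float("-inf")
    tpr = sum(s > threshold for s in pos_scores) / len(pos_scores)
    return threshold, tpr


# Toy scores, invented for illustration only.
pos = [0.9, 0.8, 0.4, 0.7]
neg = [0.1, 0.2, 0.3, 0.5, 0.6, 0.05, 0.15, 0.25, 0.35, 0.45]
threshold, tpr = tpr_at_fpr(pos, neg, target_fpr=0.1)
```

Running the same function on teacher and student similarity scores would give the TPR gap at a fixed FPR, which is the kind of performance difference the abstract describes reducing.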

Adaptive Feature Alignment for Adversarial Training

Jun 16, 2021

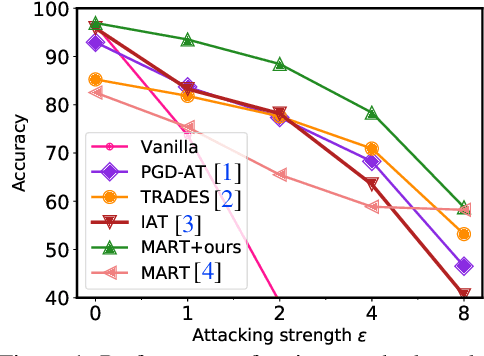

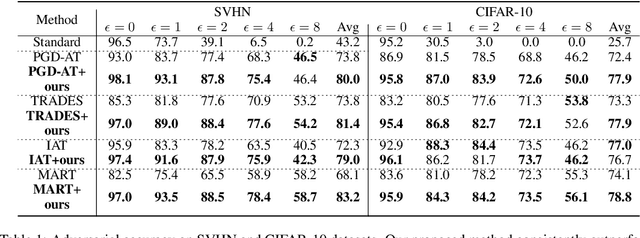

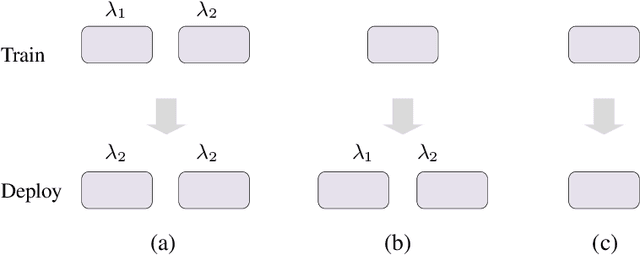

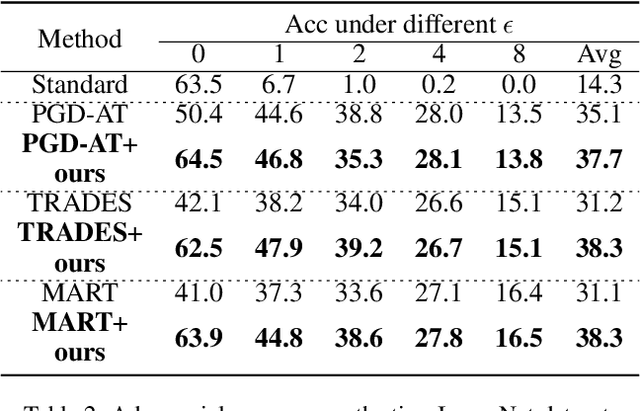

Abstract: Recent studies reveal that Convolutional Neural Networks (CNNs) are typically vulnerable to adversarial attacks, which pose a threat to security-sensitive applications. Many adversarial defense methods improve robustness at the cost of accuracy, which exposes a tension between standard and adversarial accuracy. In this paper, we observe an interesting phenomenon: feature statistics change monotonically and smoothly as the attack strength increases. Based on this observation, we propose adaptive feature alignment (AFA) to generate features of arbitrary attack strengths. Our method is trained to automatically align features of arbitrary attack strength. This is done by predicting a fusing weight in a dual-BN architecture. Unlike previous works that need to either retrain the model or manually tune hyper-parameters for different attack strengths, our method can deal with arbitrary attack strengths with a single model without introducing any hyper-parameter. Importantly, our method improves model robustness against adversarial samples without incurring much loss in standard accuracy. Experiments on the CIFAR-10, SVHN, and tiny-ImageNet datasets demonstrate that our method outperforms the state of the art under a wide range of attack strengths.

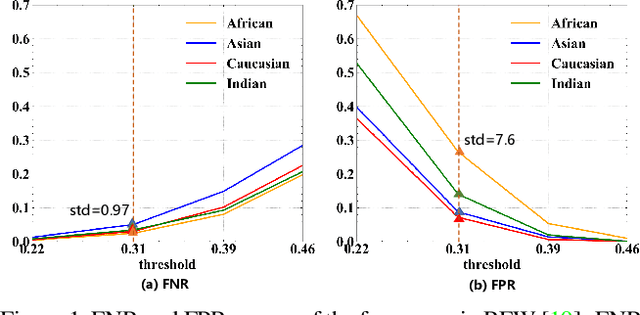

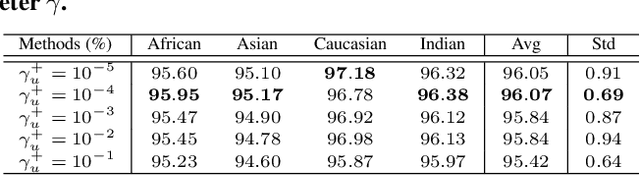

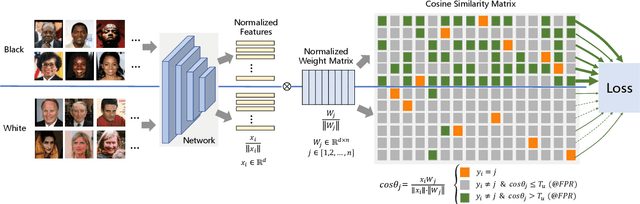

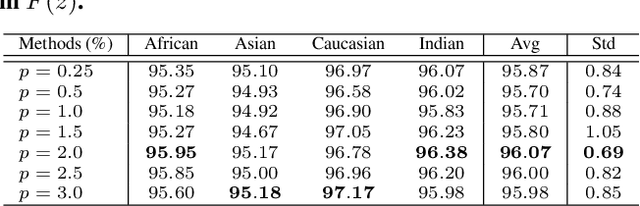

Consistent Instance False Positive Improves Fairness in Face Recognition

Jun 10, 2021

Abstract: Demographic bias is a significant challenge in practical face recognition systems. Existing methods rely heavily on accurate demographic annotations. However, such annotations are usually unavailable in real scenarios. Moreover, these methods are typically designed for a specific demographic group and are not general enough. In this paper, we propose a false positive rate penalty loss, which mitigates face recognition bias by increasing the consistency of the instance False Positive Rate (FPR). Specifically, we first define the instance FPR as the ratio between the number of non-target similarities above a unified threshold and the total number of non-target similarities. The unified threshold is estimated for a given total FPR. Then, an additional penalty term, which is proportional to the ratio of the instance FPR to the overall FPR, is introduced into the denominator of the softmax-based loss. The larger the instance FPR, the larger the penalty. With such unequal penalties, the instance FPRs are expected to become consistent. Compared with previous debiasing methods, our method requires no demographic annotations. Thus, it can mitigate the bias among demographic groups divided by various attributes, and these attributes need not be predefined during training. Extensive experimental results on popular benchmarks demonstrate the superiority of our method over state-of-the-art competitors. Code and trained models are available at https://github.com/Tencent/TFace.
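The instance FPR definition in this abstract can be sketched in a few lines. This is a minimal illustration under assumptions, not the code released in TFace: the helper names, the quantile-style threshold estimate, and the toy similarities are hypothetical, and the way the penalty is scaled and inserted into the softmax denominator is not shown because the abstract does not specify it.

```python
def unified_threshold(non_target_sims, total_fpr):
    """Estimate one threshold shared by all instances such that roughly
    `total_fpr` of all non-target similarities lie strictly above it.
    (A simple quantile estimate, assumed for illustration.)"""
    flat = sorted((s for row in non_target_sims for s in row), reverse=True)
    k = min(int(total_fpr * len(flat)), len(flat) - 1)
    return flat[k]


def instance_fpr(row, threshold):
    """Fraction of one instance's non-target similarities above the threshold."""
    return sum(s > threshold for s in row) / len(row)


# Toy non-target similarities for two instances (invented values).
sims = [
    [0.1, 0.2, 0.7, 0.3],    # instance 0: confusable with other identities
    [0.05, 0.1, 0.15, 0.2],  # instance 1: well separated
]
thr = unified_threshold(sims, total_fpr=0.25)
overall = sum(s > thr for row in sims for s in row) / sum(len(r) for r in sims)
# Ratio of instance FPR to overall FPR; the penalty term is described
# as proportional to this ratio.
ratios = [instance_fpr(row, thr) / overall for row in sims]
```

In this toy case the first instance receives a ratio of 2.0 and the second a ratio of 0.0, so only the first would be penalized, which matches the statement that a larger instance FPR leads to a larger penalty.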

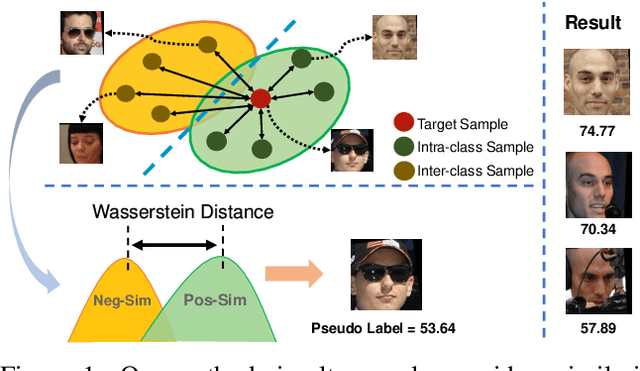

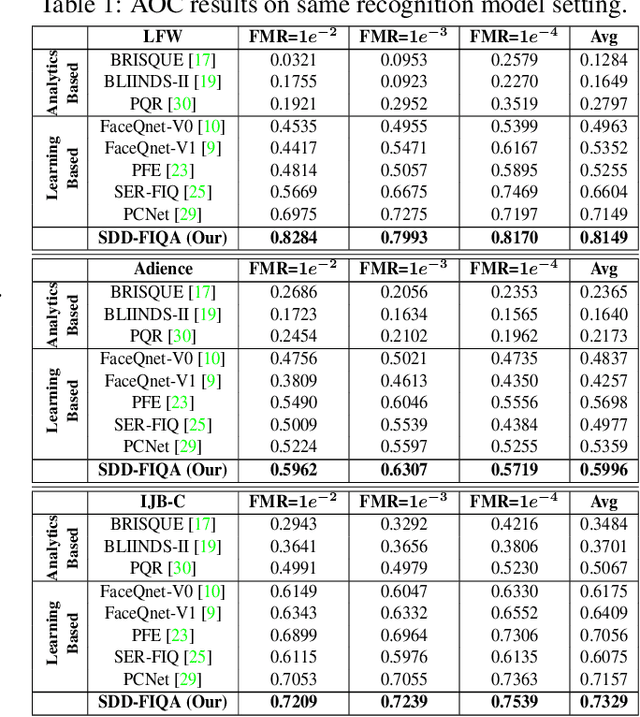

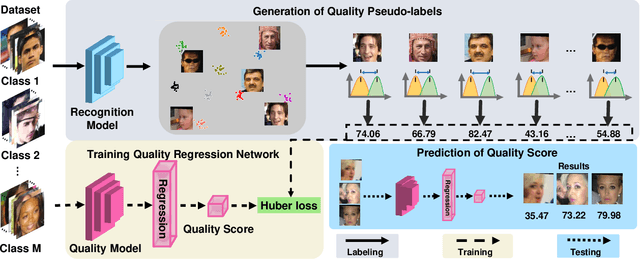

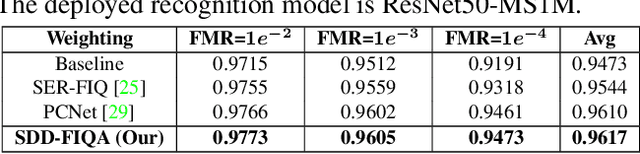

SDD-FIQA: Unsupervised Face Image Quality Assessment with Similarity Distribution Distance

Mar 10, 2021

Abstract: In recent years, Face Image Quality Assessment (FIQA) has become an indispensable part of face recognition systems, guaranteeing the stability and reliability of recognition performance in unconstrained scenarios. For this purpose, an FIQA method should consider both the intrinsic properties and the recognizability of the face image. Most previous works estimate the sample-wise embedding uncertainty or pair-wise similarity as the quality score, which considers only partial intra-class information. These methods ignore the valuable inter-class information, which is useful for estimating the recognizability of a face image. In this work, we argue that a high-quality face image should be similar to its intra-class samples and dissimilar to its inter-class samples. Thus, we propose a novel unsupervised FIQA method that incorporates Similarity Distribution Distance for Face Image Quality Assessment (SDD-FIQA). Our method generates quality pseudo-labels by calculating the Wasserstein Distance (WD) between the intra-class similarity distributions and the inter-class similarity distributions. With these quality pseudo-labels, we can train a regression network for quality prediction. Extensive experiments on benchmark datasets demonstrate that the proposed SDD-FIQA surpasses the state of the art by an impressive margin. Meanwhile, our method shows good generalization across different recognition systems.
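As a rough illustration of the pseudo-label idea, the sketch below computes the 1-D Wasserstein distance between two empirical similarity samples by integrating the absolute difference of their empirical CDFs. The function name `wasserstein_1d` and the toy similarity values are hypothetical, and any sampling or normalization steps the authors apply are not described in the abstract and are not reproduced here.

```python
from bisect import bisect_right


def wasserstein_1d(a, b):
    """1-D Wasserstein-1 distance between two empirical samples,
    computed as the integral of |CDF_a(x) - CDF_b(x)| over x."""
    a, b = sorted(a), sorted(b)
    points = sorted(set(a) | set(b))
    dist = 0.0
    for left, right in zip(points, points[1:]):
        # Both empirical CDFs are constant on [left, right).
        cdf_a = bisect_right(a, left) / len(a)
        cdf_b = bisect_right(b, left) / len(b)
        dist += abs(cdf_a - cdf_b) * (right - left)
    return dist


# Toy similarities of one image to same-identity (intra) and
# different-identity (inter) samples; values invented for illustration.
clear_face = wasserstein_1d([0.8, 0.9], [0.1, 0.2])
blurry_face = wasserstein_1d([0.4, 0.5], [0.3, 0.4])
```

The well-separated image yields the larger distance, consistent with the argument that a high-quality face should be similar to its intra-class samples and dissimilar to its inter-class samples.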

CurricularFace: Adaptive Curriculum Learning Loss for Deep Face Recognition

Apr 01, 2020

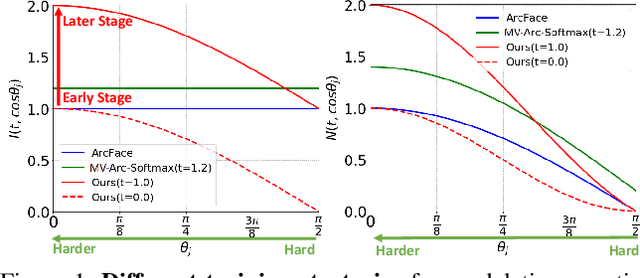

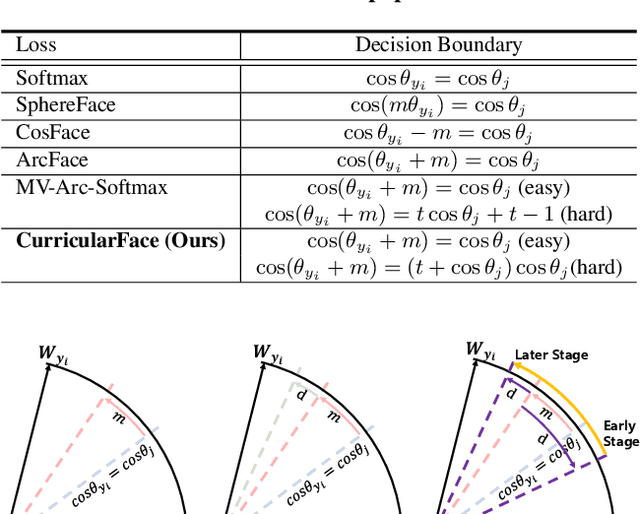

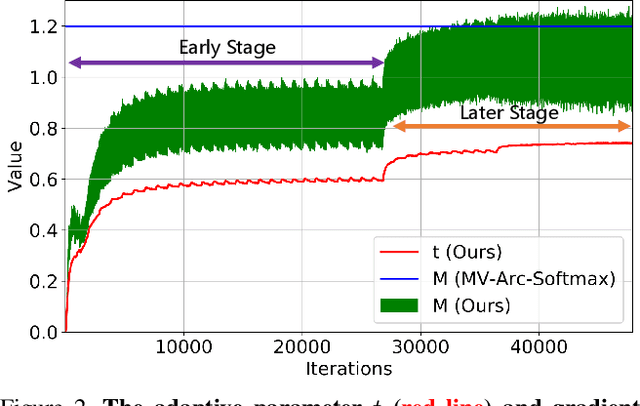

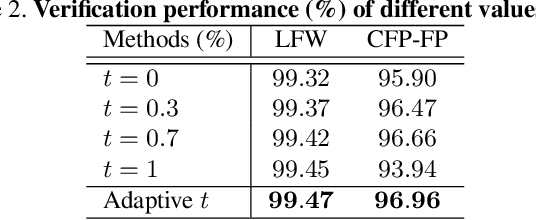

Abstract: As an emerging topic in face recognition, designing margin-based loss functions can increase the feature margin between different classes for enhanced discriminability. More recently, mining-based strategies have been adopted to emphasize misclassified samples, achieving promising results. However, during the entire training process, prior methods either do not explicitly emphasize samples based on their importance, which leaves hard samples not fully exploited, or explicitly emphasize semi-hard/hard samples even at the early training stage, which may lead to convergence issues. In this work, we propose a novel Adaptive Curriculum Learning loss (CurricularFace) that embeds the idea of curriculum learning into the loss function to achieve a novel training strategy for deep face recognition, which mainly addresses easy samples in the early training stage and hard ones in the later stage. Specifically, our CurricularFace adaptively adjusts the relative importance of easy and hard samples during different training stages. In each stage, different samples are assigned different importance according to their difficulty. Extensive experimental results on popular benchmarks demonstrate the superiority of our CurricularFace over state-of-the-art competitors.

Distribution Distillation Loss: Generic Approach for Improving Face Recognition from Hard Samples

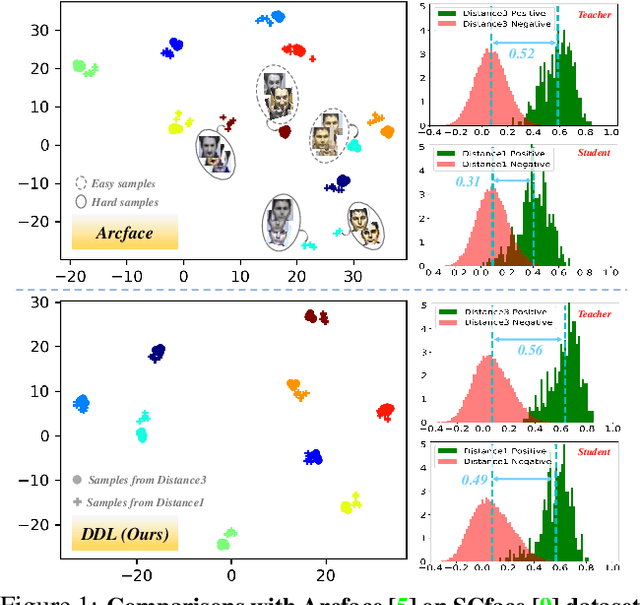

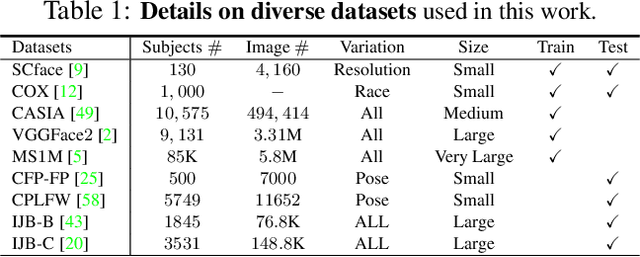

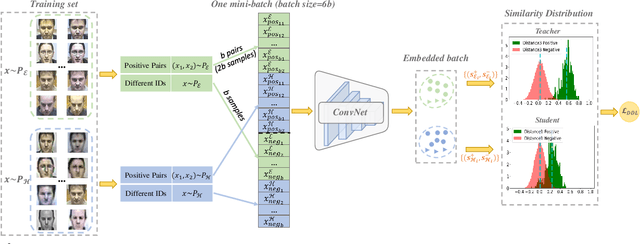

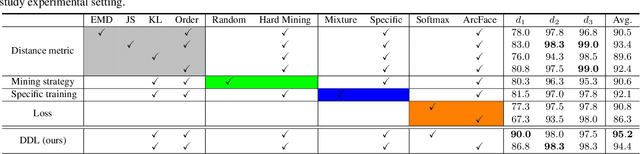

Feb 10, 2020

Abstract: Large facial variations are the main challenge in face recognition. To this end, previous variation-specific methods make full use of task-related priors to design special network losses, which typically do not generalize across tasks and scenarios. In contrast, existing generic methods focus on improving feature discriminability by minimizing the intra-class distance while maximizing the inter-class distance; they perform well on easy samples but fail on hard samples. To improve the performance on those hard samples for general tasks, we propose a novel Distribution Distillation Loss to narrow the performance gap between easy and hard samples, which is simple, effective, and generic across various types of facial variations. Specifically, we first adopt state-of-the-art classifiers such as ArcFace to construct two similarity distributions: a teacher distribution from easy samples and a student distribution from hard samples. Then, we propose a novel distribution-driven loss to constrain the student distribution to approximate the teacher distribution, which leads to a smaller overlap between the positive and negative pairs in the student distribution. We have conducted extensive experiments on both generic large-scale face benchmarks and benchmarks with diverse variations in race, resolution, and pose. The quantitative results demonstrate the superiority of our method over strong baselines, e.g., ArcFace and CosFace.

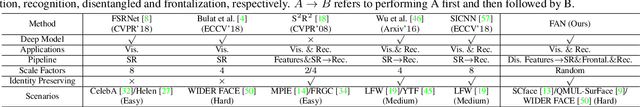

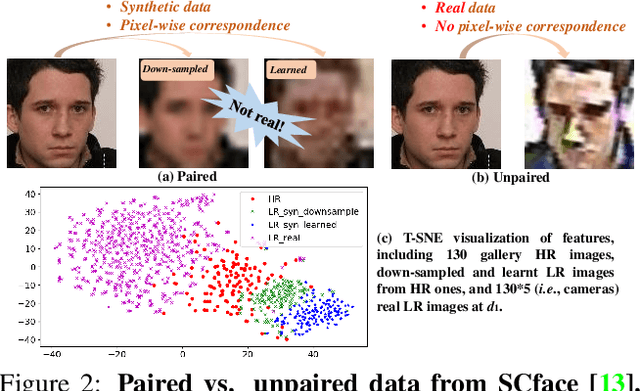

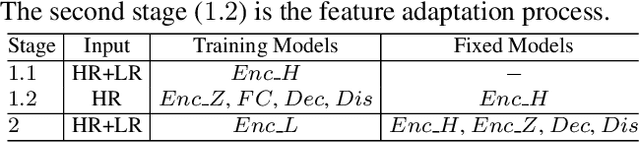

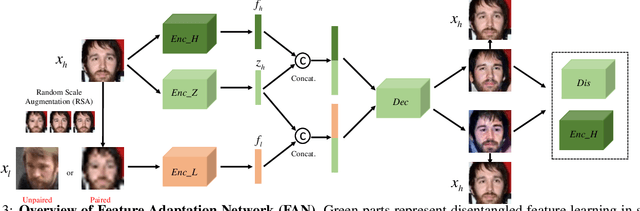

FAN: Feature Adaptation Network for Surveillance Face Recognition and Normalization

Nov 26, 2019

Abstract: This paper studies face recognition (FR) and normalization in surveillance imagery. Surveillance FR is a challenging problem of great value to law enforcement. Despite recent progress in conventional FR, less effort has been devoted to surveillance FR. To bridge this gap, we propose a Feature Adaptation Network (FAN) to jointly perform surveillance FR and normalization. Our face normalization mainly acts on image resolution and is closely related to face super-resolution. However, previous face super-resolution methods require paired training data with pixel-to-pixel correspondence, which is typically unavailable between real low- and high-resolution faces. Our FAN can leverage both paired and unpaired data because we disentangle the features into identity and non-identity components and adapt the distribution of the identity features, which overcomes this limitation of current face super-resolution methods. We further propose a random scale augmentation scheme to learn resolution-robust identity features, with advantages over previous fixed-scale augmentation. Extensive experiments on the LFW, WIDER FACE, QMUL-SurvFace, and SCface datasets demonstrate the superiority of our proposed method over the state of the art in surveillance face recognition and normalization.
