Wenbin Zhang

Variance-Preserving-Based Interpolation Diffusion Models for Speech Enhancement

Jun 14, 2023

Abstract:The goal of this study is to implement diffusion models for speech enhancement (SE). The first step is to emphasize the theoretical foundation of variance-preserving (VP)-based interpolation diffusion under continuous conditions. Subsequently, we present a more concise framework that encapsulates both the VP- and variance-exploding (VE)-based interpolation diffusion methods. We demonstrate that these two methods are special cases of the proposed framework. Additionally, we provide a practical example of VP-based interpolation diffusion for the SE task. To improve performance and ease model training, we analyze the common difficulties encountered in diffusion models and suggest amenable hyper-parameters. Finally, we evaluate our model against several methods using a public benchmark to showcase the effectiveness of our approach.

Towards Fair Machine Learning Software: Understanding and Addressing Model Bias Through Counterfactual Thinking

Feb 16, 2023

Abstract:The increasing use of Machine Learning (ML) software can lead to unfair and unethical decisions, thus fairness bugs in software are becoming a growing concern. Addressing these fairness bugs often involves sacrificing ML performance, such as accuracy. To address this issue, we present a novel counterfactual approach that uses counterfactual thinking to tackle the root causes of bias in ML software. In addition, our approach combines models optimized for both performance and fairness, resulting in an optimal solution in both aspects. We conducted a thorough evaluation of our approach on 10 benchmark tasks using a combination of 5 performance metrics, 3 fairness metrics, and 15 measurement scenarios, all applied to 8 real-world datasets. The conducted extensive evaluations show that the proposed method significantly improves the fairness of ML software while maintaining competitive performance, outperforming state-of-the-art solutions in 84.6% of overall cases based on a recent benchmarking tool.

Individual Fairness Guarantee in Learning with Censorship

Feb 16, 2023

Abstract:Algorithmic fairness, studying how to make machine learning (ML) algorithms fair, is an established area of ML. As ML technologies expand their application domains, including ones with high societal impact, it becomes essential to take fairness into consideration when building ML systems. Yet, despite its wide range of socially sensitive applications, most work treats the issue of algorithmic bias as an intrinsic property of supervised learning, i.e., the class label is given as a precondition. Unlike prior fairness work, we study individual fairness in learning with censorship where the assumption of availability of the class label does not hold, while still requiring that similar individuals are treated similarly. We argue that this perspective represents a more realistic model of fairness research for real-world application deployment, and show how learning with such a relaxed precondition draws new insights that better explain algorithmic fairness. We also thoroughly evaluate the performance of the proposed methodology on three real-world datasets, and validate its superior performance in minimizing discrimination while maintaining predictive performance.

Preventing Discriminatory Decision-making in Evolving Data Streams

Feb 16, 2023

Abstract:Bias in machine learning has rightly received significant attention over the last decade. However, most fair machine learning (fair-ML) work to address bias in decision-making systems has focused solely on the offline setting. Despite the wide prevalence of online systems in the real world, work on identifying and correcting bias in the online setting is severely lacking. The unique challenges of the online environment make addressing bias more difficult than in the offline setting. First, Streaming Machine Learning (SML) algorithms must deal with the constantly evolving real-time data stream. Second, they need to adapt to changing data distributions (concept drift) to make accurate predictions on new incoming data. Adding fairness constraints to this already complicated task is not straightforward. In this work, we focus on the challenges of achieving fairness in biased data streams while accounting for the presence of concept drift, accessing one sample at a time. We present Fair Sampling over Stream ($FS^2$), a novel fair rebalancing approach capable of being integrated with SML classification algorithms. Furthermore, we devise the first unified performance-fairness metric, Fairness Bonded Utility (FBU), to evaluate and compare the trade-off between performance and fairness of different bias mitigation methods efficiently. FBU simplifies the comparison of fairness-performance trade-offs of multiple techniques through one unified and intuitive evaluation, allowing model designers to easily choose a technique. Overall, extensive evaluations show our measures surpass those of other fair online techniques previously reported in the literature.

Fair Decision-making Under Uncertainty

Jan 29, 2023

Abstract:There has been concern within the artificial intelligence (AI) community and the broader society regarding the potential lack of fairness of AI-based decision-making systems. Surprisingly, there is little work quantifying and guaranteeing fairness in the presence of uncertainty which is prevalent in many socially sensitive applications, ranging from marketing analytics to actuarial analysis and recidivism prediction instruments. To this end, we study a longitudinal censored learning problem subject to fairness constraints, where we require that algorithmic decisions made do not affect certain individuals or social groups negatively in the presence of uncertainty on class label due to censorship. We argue that this formulation has a broader applicability to practical scenarios concerning fairness. We show how the newly devised fairness notions involving censored information and the general framework for fair predictions in the presence of censorship allow us to measure and mitigate discrimination under uncertainty that bridges the gap with real-world applications. Empirical evaluations on real-world discriminated datasets with censorship demonstrate the practicality of our approach.

Attention Mechanism based Cognition-level Scene Understanding

Apr 19, 2022

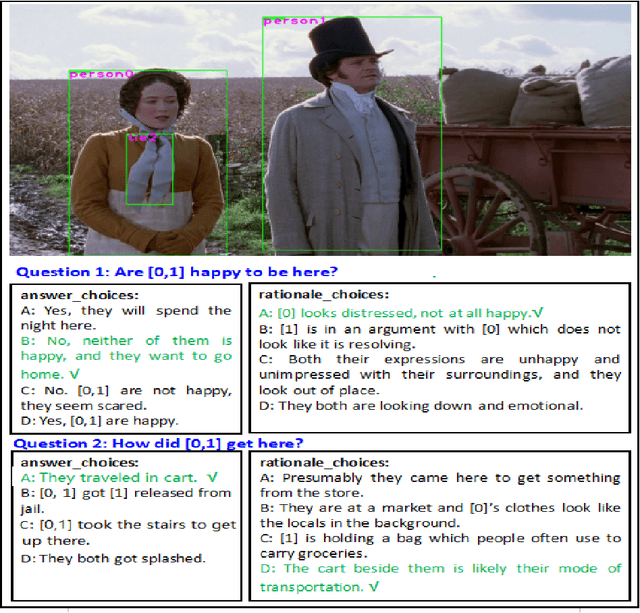

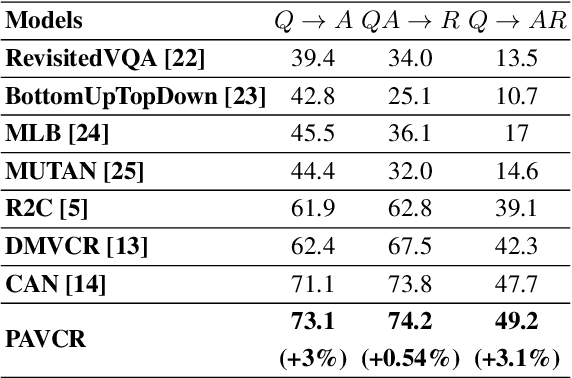

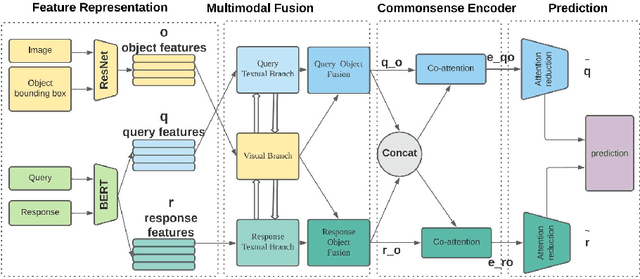

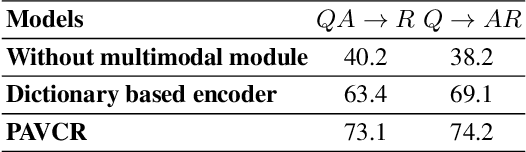

Abstract:Given a question-image input, the Visual Commonsense Reasoning (VCR) model can predict an answer with the corresponding rationale, which requires inference ability from the real world. The VCR task, which calls for exploiting the multi-source information as well as learning different levels of understanding and extensive commonsense knowledge, is a cognition-level scene understanding task. The VCR task has aroused researchers' interest due to its wide range of applications, including visual question answering, automated vehicle systems, and clinical decision support. Previous approaches to solving the VCR task generally rely on pre-training or exploiting memory with long dependency relationship encoded models. However, these approaches suffer from a lack of generalizability and losing information in long sequences. In this paper, we propose a parallel attention-based cognitive VCR network PAVCR, which fuses visual-textual information efficiently and encodes semantic information in parallel to enable the model to capture rich information for cognition-level inference. Extensive experiments show that the proposed model yields significant improvements over existing methods on the benchmark VCR dataset. Moreover, the proposed model provides intuitive interpretation into visual commonsense reasoning.

Longitudinal Fairness with Censorship

Mar 31, 2022

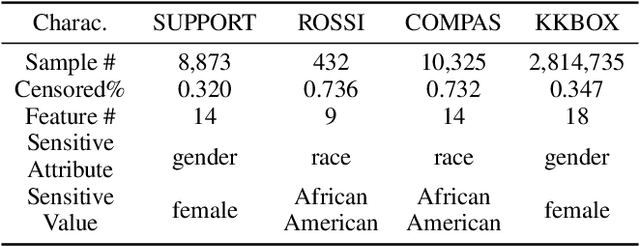

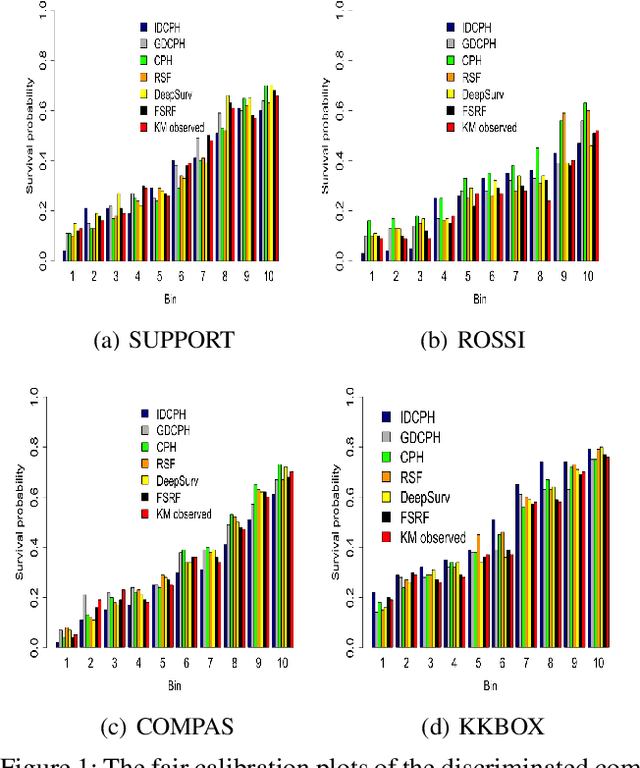

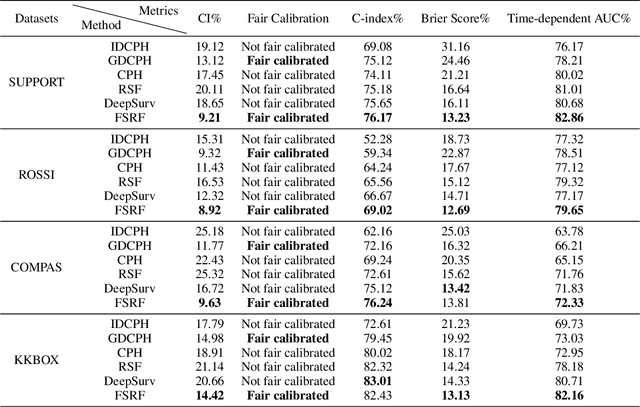

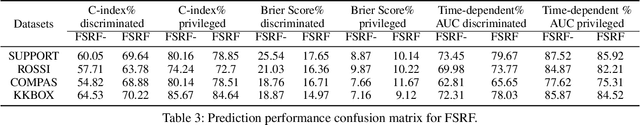

Abstract:Recent works in artificial intelligence fairness attempt to mitigate discrimination by proposing constrained optimization programs that achieve parity for some fairness statistic. Most assume availability of the class label, which is impractical in many real-world applications such as precision medicine, actuarial analysis and recidivism prediction. Here we consider fairness in longitudinal right-censored environments, where the time to event might be unknown, resulting in censorship of the class label and inapplicability of existing fairness studies. We devise applicable fairness measures, propose a debiasing algorithm, and provide necessary theoretical constructs to bridge fairness with and without censorship for these important and socially-sensitive tasks. Our experiments on four censored datasets confirm the utility of our approach.

Fairness Amidst Non-IID Graph Data: A Literature Review

Feb 16, 2022

Abstract:Fairness in machine learning (ML), the process of understanding and correcting algorithmic bias, has gained increasing attention, with numerous studies being carried out that commonly assume the underlying data is independent and identically distributed (IID). On the other hand, graphs are a ubiquitous data structure for capturing connections among individual units and are non-IID by nature. It is therefore of great importance to bridge the traditional fairness literature designed for IID data and ubiquitous non-IID graph representations to tackle bias in ML systems. In this survey, we review such recent advances in fairness amidst non-IID graph data and identify datasets and evaluation metrics available for future research. We also point out the limitations of existing work as well as promising future directions.

Incremental Mining of Frequent Serial Episodes Considering Multiple Occurrence

Feb 03, 2022

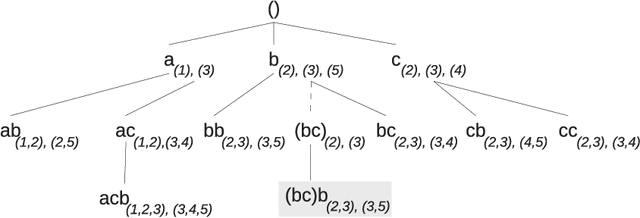

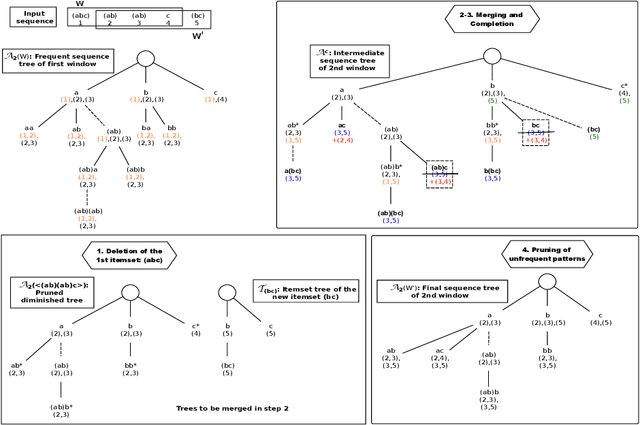

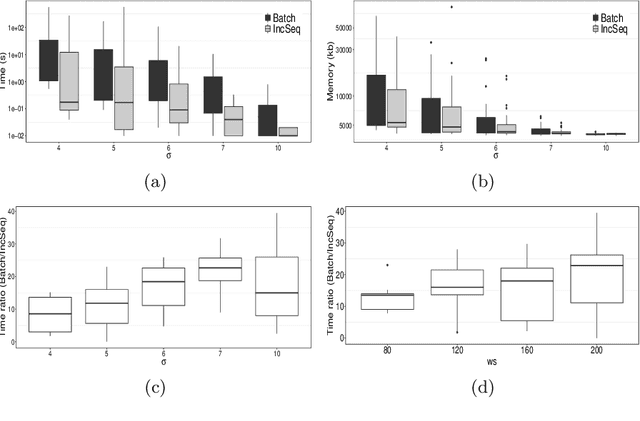

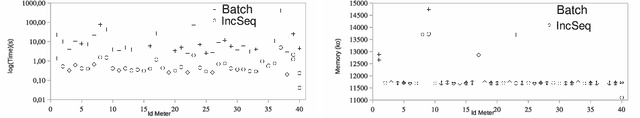

Abstract:The need to analyze information from streams arises in a variety of applications. One of the fundamental research directions is to mine sequential patterns over data streams. Current studies mine series of items based on the existence of the pattern in transactions but pay no attention to the series of itemsets and their multiple occurrences. The pattern over a window of itemsets stream and their multiple occurrences, however, provides additional capability to recognize the essential characteristics of the patterns and the inter-relationships among them that are unidentifiable by the existing items and existence based studies. In this paper, we study such a new sequential pattern mining problem and propose a corresponding efficient sequential miner with novel strategies to prune search space efficiently. Experiments on both real and synthetic data show the utility of our approach.

A Generic Knowledge Based Medical Diagnosis Expert System

Oct 26, 2021

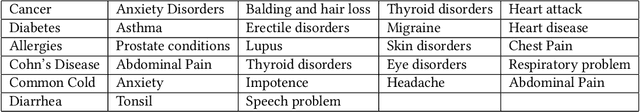

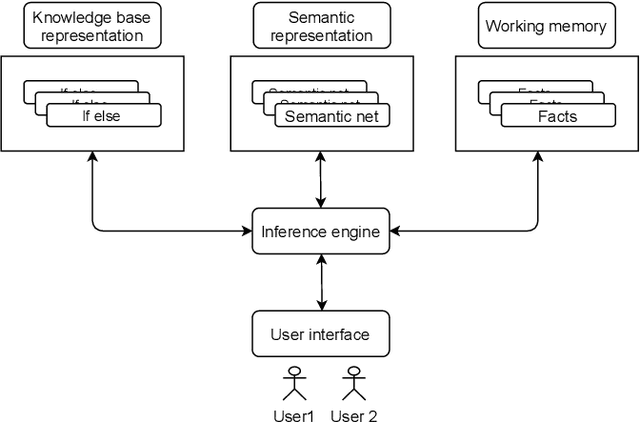

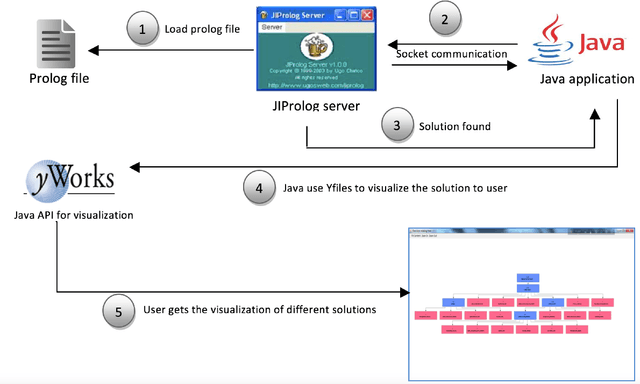

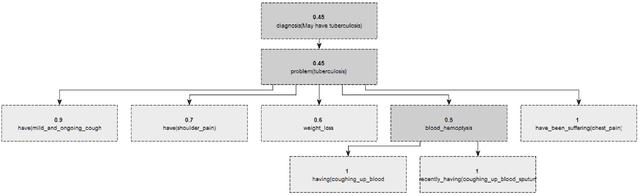

Abstract:In this paper, we design and implement a generic medical knowledge-based system (MKBS) for identifying diseases from several symptoms. The system addresses important aspects such as knowledge-based systems, knowledge representation, and the inference engine. The system asks users different questions, and the inference engine uses the certainty factor to prune out unlikely solutions. The proposed disease diagnosis system also provides a graphical user interface (GUI) to help users interact with the expert system. Our expert system is generic and flexible, and can be integrated with any rule-based system for disease diagnosis.
