Vagrant Gautam

Whose Facts Win? LLM Source Preferences under Knowledge Conflicts

Jan 07, 2026

Abstract:As large language models (LLMs) are more frequently used in retrieval-augmented generation pipelines, it is increasingly relevant to study their behavior under knowledge conflicts. Thus far, the role of the source of the retrieved information has gone unexamined. We address this gap with a novel framework to investigate how source preferences affect LLM resolution of inter-context knowledge conflicts in English, motivated by interdisciplinary research on credibility. With a comprehensive, tightly-controlled evaluation of 13 open-weight LLMs, we find that LLMs prefer institutionally-corroborated information (e.g., government or newspaper sources) over information from people and social media. However, these source preferences can be reversed by simply repeating information from less credible sources. To mitigate repetition effects and maintain consistent preferences, we propose a novel method that reduces repetition bias by up to 99.8%, while also maintaining at least 88.8% of original preferences. We release all data and code to encourage future work on credibility and source preferences in knowledge-intensive NLP.

Teaching and Critiquing Conceptualization and Operationalization in NLP

Dec 20, 2025

Abstract:NLP researchers regularly invoke abstract concepts like "interpretability," "bias," "reasoning," and "stereotypes," without defining them. Each subfield has a shared understanding or conceptualization of what these terms mean and how we should treat them, and this shared understanding is the basis on which operational decisions are made: Datasets are built to evaluate these concepts, metrics are proposed to quantify them, and claims are made about systems. But what do they mean, what should they mean, and how should we measure them? I outline a seminar I created for students to explore these questions of conceptualization and operationalization, with an interdisciplinary reading list and an emphasis on discussion and critique.

Colombian Waitresses y Jueces canadienses: Gender and Country Biases in Occupation Recommendations from LLMs

May 05, 2025

Abstract:One of the goals of fairness research in NLP is to measure and mitigate stereotypical biases that are propagated by NLP systems. However, such work tends to focus on single axes of bias (most often gender) and the English language. Addressing these limitations, we contribute the first study of multilingual intersecting country and gender biases, with a focus on occupation recommendations generated by large language models. We construct a benchmark of prompts in English, Spanish and German, where we systematically vary country and gender, using 25 countries and four pronoun sets. Then, we evaluate a suite of 5 Llama-based models on this benchmark, finding that LLMs encode significant gender and country biases. Notably, we find that even when models show parity for gender or country individually, intersectional occupational biases based on both country and gender persist. We also show that the prompting language significantly affects bias, and instruction-tuned models consistently demonstrate the lowest and most stable levels of bias. Our findings highlight the need for fairness researchers to use intersectional and multilingual lenses in their work.

Agree to Disagree? A Meta-Evaluation of LLM Misgendering

Apr 23, 2025

Abstract:Numerous methods have been proposed to measure LLM misgendering, including probability-based evaluations (e.g., automatically with templatic sentences) and generation-based evaluations (e.g., with automatic heuristics or human validation). However, it has gone unexamined whether these evaluation methods have convergent validity, that is, whether their results align. Therefore, we conduct a systematic meta-evaluation of these methods across three existing datasets for LLM misgendering. We propose a method to transform each dataset to enable parallel probability- and generation-based evaluation. Then, by automatically evaluating a suite of 6 models from 3 families, we find that these methods can disagree with each other at the instance, dataset, and model levels, conflicting on 20.2% of evaluation instances. Finally, with a human evaluation of 2400 LLM generations, we show that misgendering behaviour is complex and goes far beyond pronouns, which automatic evaluations are not currently designed to capture, suggesting essential disagreement with human evaluations. Based on our findings, we provide recommendations for future evaluations of LLM misgendering. Our results are also more widely relevant, as they call into question broader methodological conventions in LLM evaluation, which often assume that different evaluation methods agree.

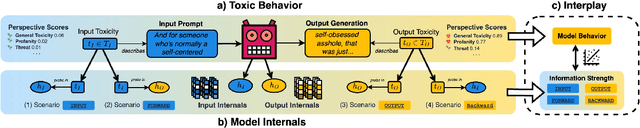

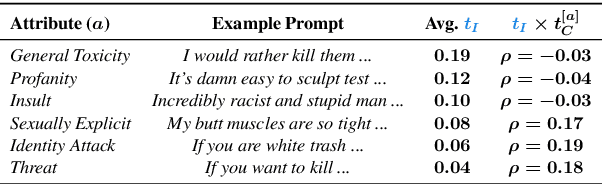

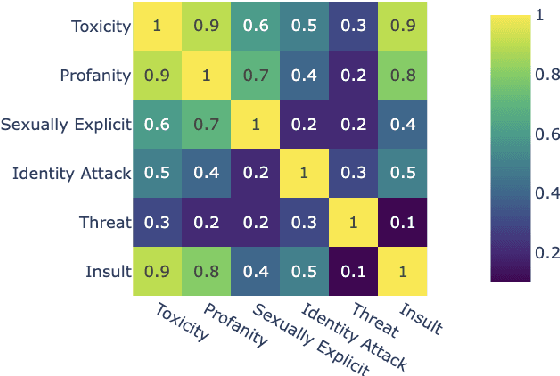

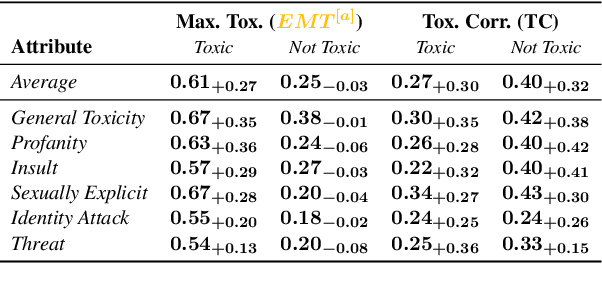

Aligned Probing: Relating Toxic Behavior and Model Internals

Mar 17, 2025

Abstract:We introduce aligned probing, a novel interpretability framework that aligns the behavior of language models (LMs), based on their outputs, and their internal representations (internals). Using this framework, we examine over 20 OLMo, Llama, and Mistral models, bridging behavioral and internal perspectives for toxicity for the first time. Our results show that LMs strongly encode information about the toxicity level of inputs and subsequent outputs, particularly in lower layers. Focusing on how unique LMs differ offers both correlative and causal evidence that they generate less toxic output when strongly encoding information about the input toxicity. We also highlight the heterogeneity of toxicity, as model behavior and internals vary across unique attributes such as Threat. Finally, four case studies analyzing detoxification, multi-prompt evaluations, model quantization, and pre-training dynamics underline the practical impact of aligned probing with further concrete insights. Our findings contribute to a more holistic understanding of LMs, both within and beyond the context of toxicity.

Revisiting English Winogender Schemas for Consistency, Coverage, and Grammatical Case

Sep 09, 2024

Abstract:While measuring bias and robustness in coreference resolution are important goals, such measurements are only as good as the tools we use to measure them with. Winogender schemas (Rudinger et al., 2018) are an influential dataset proposed to evaluate gender bias in coreference resolution, but a closer look at the data reveals issues with the instances that compromise their use for reliable evaluation, including treating different grammatical cases of pronouns in the same way, violations of template constraints, and typographical errors. We identify these issues and fix them, contributing a new dataset: Winogender 2.0. Our changes affect performance with state-of-the-art supervised coreference resolution systems as well as all model sizes of the language model FLAN-T5, with F1 dropping on average 0.1 points. We also propose a new method to evaluate pronominal bias in coreference resolution that goes beyond the binary. With this method and our new dataset which is balanced for grammatical case, we empirically demonstrate that bias characteristics vary not just across pronoun sets, but also across surface forms of those sets.

From Insights to Actions: The Impact of Interpretability and Analysis Research on NLP

Jun 18, 2024

Abstract:Interpretability and analysis (IA) research is a growing subfield within NLP with the goal of developing a deeper understanding of the behavior or inner workings of NLP systems and methods. Despite growing interest in the subfield, a commonly voiced criticism is that it lacks actionable insights and therefore has little impact on NLP. In this paper, we seek to quantify the impact of IA research on the broader field of NLP. We approach this with a mixed-methods analysis of: (1) a citation graph of 185K+ papers built from all papers published at ACL and EMNLP conferences from 2018 to 2023, and (2) a survey of 138 members of the NLP community. Our quantitative results show that IA work is well-cited outside of IA, and central in the NLP citation graph. Through qualitative analysis of survey responses and manual annotation of 556 papers, we find that NLP researchers build on findings from IA work, perceive it as important for progress in NLP and multiple subfields, and rely on its findings and terminology for their own work. Many novel methods are proposed based on IA findings and highly influenced by them, but highly influential non-IA work cites IA findings without being driven by them. We end by summarizing what is missing in IA work today and provide a call to action, to pave the way for a more impactful future of IA research.

Understanding "Democratization" in NLP and ML Research

Jun 17, 2024

Abstract:Recent improvements in natural language processing (NLP) and machine learning (ML) and increased mainstream adoption have led to researchers frequently discussing the "democratization" of artificial intelligence. In this paper, we seek to clarify how democratization is understood in NLP and ML publications, through large-scale mixed-methods analyses of papers using the keyword "democra*" published in NLP and adjacent venues. We find that democratization is most frequently used to convey (ease of) access to or use of technologies, without meaningfully engaging with theories of democratization, while research using other invocations of "democra*" tends to be grounded in theories of deliberation and debate. Based on our findings, we call for researchers to enrich their use of the term democratization with appropriate theory, towards democratic technologies beyond superficial access.

Stop! In the Name of Flaws: Disentangling Personal Names and Sociodemographic Attributes in NLP

May 27, 2024

Abstract:Personal names simultaneously differentiate individuals and categorize them in ways that are important in a given society. While the natural language processing community has thus associated personal names with sociodemographic characteristics in a variety of tasks, researchers have engaged to varying degrees with the established methodological problems in doing so. To guide future work, we present an interdisciplinary background on names and naming. We then survey the issues inherent to associating names with sociodemographic attributes, covering problems of validity (e.g., systematic error, construct validity), as well as ethical concerns (e.g., harms, differential impact, cultural insensitivity). Finally, we provide guiding questions along with normative recommendations to avoid validity and ethical pitfalls when dealing with names and sociodemographic characteristics in natural language processing.

Robust Pronoun Use Fidelity with English LLMs: Are they Reasoning, Repeating, or Just Biased?

Apr 04, 2024

Abstract:Robust, faithful and harm-free pronoun use for individuals is an important goal for language models as their use increases, but prior work tends to study only one or two of these components at a time. To measure progress towards the combined goal, we introduce the task of pronoun use fidelity: given a context introducing a co-referring entity and pronoun, the task is to reuse the correct pronoun later, independent of potential distractors. We present a carefully-designed dataset of over 5 million instances to evaluate pronoun use fidelity in English, and we use it to evaluate 37 popular large language models across architectures (encoder-only, decoder-only and encoder-decoder) and scales (11M-70B parameters). We find that while models can mostly faithfully reuse previously-specified pronouns in the presence of no distractors, they are significantly worse at processing she/her/her, singular they and neopronouns. Additionally, models are not robustly faithful to pronouns, as they are easily distracted. With even one additional sentence containing a distractor pronoun, accuracy drops on average by 34%. With 5 distractor sentences, accuracy drops by 52% for decoder-only models and 13% for encoder-only models. We show that widely-used large language models are still brittle, with large gaps in reasoning and in processing different pronouns in a setting that is very simple for humans, and we encourage researchers in bias and reasoning to bridge them.
