Tianhang Yu

SoulX-FlashTalk: Real-Time Infinite Streaming of Audio-Driven Avatars via Self-Correcting Bidirectional Distillation

Jan 06, 2026

Abstract: Deploying massive diffusion models for real-time, infinite-duration, audio-driven avatar generation presents a significant engineering challenge, primarily due to the conflict between computational load and strict latency constraints. Existing approaches often compromise visual fidelity by enforcing strictly unidirectional attention mechanisms or reducing model capacity. To address this problem, we introduce SoulX-FlashTalk, a 14B-parameter framework optimized for high-fidelity real-time streaming. Diverging from conventional unidirectional paradigms, we use a Self-correcting Bidirectional Distillation strategy that retains bidirectional attention within video chunks. This design preserves critical spatiotemporal correlations, significantly enhancing motion coherence and visual detail. To ensure stability during infinite generation, we incorporate a Multi-step Retrospective Self-Correction Mechanism, enabling the model to autonomously recover from accumulated errors and preventing collapse. Furthermore, we engineered a full-stack inference acceleration suite incorporating hybrid sequence parallelism, Parallel VAE, and kernel-level optimizations. Extensive evaluations confirm that SoulX-FlashTalk is the first 14B-scale system to achieve a sub-second start-up latency (0.87 s) while reaching a real-time throughput of 32 FPS, setting a new standard for high-fidelity interactive digital human synthesis.
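The difference between strictly unidirectional attention and attention that stays bidirectional within a video chunk can be illustrated with attention masks. This is a minimal sketch, assuming frames are grouped into fixed-size chunks and that each frame may also attend to all earlier chunks; that cross-chunk rule is an assumption, since the abstract only states that attention is bidirectional within chunks. The function names are hypothetical.

```python
def chunk_attention_mask(num_frames: int, chunk_size: int) -> list[list[bool]]:
    """mask[q][k] is True when query frame q may attend to key frame k.

    Within a chunk, attention is bidirectional. ASSUMPTION (not stated in the
    abstract): a frame may also attend to every frame of earlier chunks, but
    never to later chunks, so generation can stream chunk by chunk.
    """
    mask = []
    for q in range(num_frames):
        q_chunk = q // chunk_size
        mask.append([k // chunk_size <= q_chunk for k in range(num_frames)])
    return mask


def strictly_causal_mask(num_frames: int) -> list[list[bool]]:
    """Frame-level unidirectional mask, shown for comparison."""
    return [[k <= q for k in range(num_frames)] for q in range(num_frames)]


if __name__ == "__main__":
    # 6 frames in chunks of 3: frame 0 can see frames 1 and 2 (future frames
    # in its own chunk), which a strictly causal mask would forbid.
    for row in chunk_attention_mask(6, 3):
        print("".join("1" if allowed else "." for allowed in row))
    # 111...
    # 111...
    # 111...
    # 111111
    # 111111
    # 111111
```

Under these assumptions, the block structure keeps full spatiotemporal context inside each chunk while still allowing the stream to be produced one chunk at a time.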

SoulX-LiveTalk: Real-Time Infinite Streaming of Audio-Driven Avatars via Self-Correcting Bidirectional Distillation

Dec 31, 2025

Abstract: Deploying massive diffusion models for real-time, infinite-duration, audio-driven avatar generation presents a significant engineering challenge, primarily due to the conflict between computational load and strict latency constraints. Existing approaches often compromise visual fidelity by enforcing strictly unidirectional attention mechanisms or reducing model capacity. To address this problem, we introduce SoulX-LiveTalk, a 14B-parameter framework optimized for high-fidelity real-time streaming. Diverging from conventional unidirectional paradigms, we use a Self-correcting Bidirectional Distillation strategy that retains bidirectional attention within video chunks. This design preserves critical spatiotemporal correlations, significantly enhancing motion coherence and visual detail. To ensure stability during infinite generation, we incorporate a Multi-step Retrospective Self-Correction Mechanism, enabling the model to autonomously recover from accumulated errors and preventing collapse. Furthermore, we engineered a full-stack inference acceleration suite incorporating hybrid sequence parallelism, Parallel VAE, and kernel-level optimizations. Extensive evaluations confirm that SoulX-LiveTalk is the first 14B-scale system to achieve a sub-second start-up latency (0.87 s) while reaching a real-time throughput of 32 FPS, setting a new standard for high-fidelity interactive digital human synthesis.

Radiomap Inpainting for Restricted Areas based on Propagation Priority and Depth Map

May 24, 2023

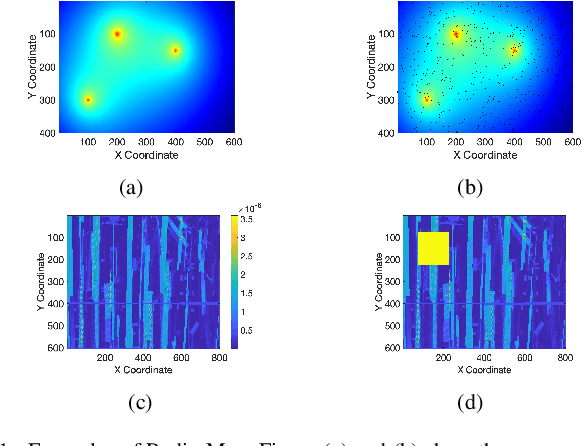

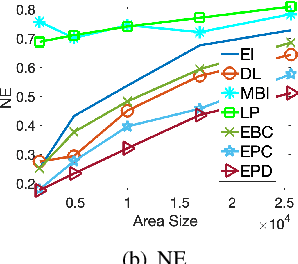

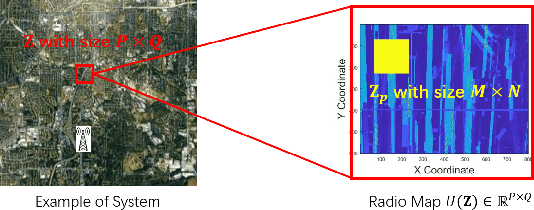

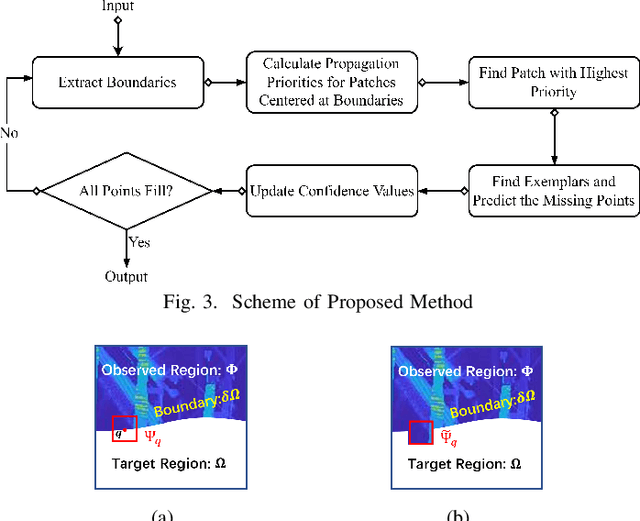

Abstract: Providing rich and useful information about spectrum activity and propagation channels, radiomaps characterize the detailed distribution of power spectral density (PSD) and are important tools for network planning in modern wireless systems. Generally, radiomaps are constructed from radio strength measurements collected by deployed sensors and user devices. However, not all areas are accessible for radio measurements due to physical constraints and security considerations, leading to non-uniformly spaced measurements and blank regions on a radiomap. In this work, we explore the distribution of radio spectrum strength in view of the surrounding environment and propose two radiomap inpainting approaches for reconstructing radiomaps over missing areas. Specifically, we first define a propagation-based priority and integrate exemplar-based inpainting with a radio propagation model for fine-resolution reconstruction of small missing areas on a radiomap. Then, we introduce a novel radio depth map and propose a two-step template-perturbation approach for inpainting large restricted regions. Our experimental results demonstrate the power of the proposed propagation priority and radio depth map in capturing the PSD distribution, as well as the efficacy of the proposed methods for radiomap reconstruction.
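The exemplar-based step mentioned above can be sketched in its classic form: find the fully observed patch that best matches the known cells around a missing cell, then copy its values into the gaps. This is a generic sketch, assuming missing cells are marked with `None` and similarity is the sum of squared differences; the propagation-based priority that decides which missing cell to fill next is not specified in the abstract and is not modeled here. Function names are hypothetical.

```python
import math


def best_exemplar(radiomap, tr, tc, half):
    """Return the centre (r, c) of the fully observed patch most similar to the
    patch centred at (tr, tc), or None if no fully observed patch exists.

    radiomap: 2D list of PSD values (e.g. in dBm); None marks a missing cell.
    Similarity: sum of squared differences over cells known in the target patch.
    The target patch is assumed to lie entirely inside the map.
    """
    rows, cols = len(radiomap), len(radiomap[0])

    def patch(r, c):
        return [radiomap[r + dr][c + dc]
                for dr in range(-half, half + 1)
                for dc in range(-half, half + 1)]

    target = patch(tr, tc)
    best, best_cost = None, math.inf
    for r in range(half, rows - half):
        for c in range(half, cols - half):
            cand = patch(r, c)
            if any(v is None for v in cand):
                continue  # exemplars must be fully observed
            cost = sum((t - v) ** 2 for t, v in zip(target, cand) if t is not None)
            if cost < best_cost:
                best, best_cost = (r, c), cost
    return best


def fill_from_exemplar(radiomap, tr, tc, half):
    """Copy values from the best exemplar into the missing cells of the target patch."""
    src = best_exemplar(radiomap, tr, tc, half)
    if src is None:
        return
    sr, sc = src
    for dr in range(-half, half + 1):
        for dc in range(-half, half + 1):
            if radiomap[tr + dr][tc + dc] is None:
                radiomap[tr + dr][tc + dc] = radiomap[sr + dr][sc + dc]
```

Pure patch copying ignores how power decays with distance from a transmitter, which is one reason to bring a propagation model into the fill order and the value estimates.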

Exemplar-Based Radio Map Reconstruction of Missing Areas Using Propagation Priority

Sep 10, 2022

Abstract: A radio map describes network coverage and is a practically important tool for network planning in modern wireless systems. Generally, radio strength measurements are collected to construct fine-resolution radio maps for analysis. However, certain protected areas are not accessible for measurement due to physical constraints and security considerations, leading to blank spaces on a radio map. Non-uniformly spaced measurements and uneven observation resolution make radio map estimation and spectrum planning in protected areas more difficult. This work explores the distribution of radio spectrum strength and proposes an exemplar-based approach to reconstruct missing areas on a radio map. Instead of taking generic image processing approaches, we leverage radio propagation models to determine the directions of region filling and develop two different schemes to estimate the missing radio signal power. Our test results based on high-fidelity simulation demonstrate the efficacy of the proposed methods for radio map reconstruction.
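As an illustration of how a radio propagation model can estimate missing signal power, the sketch below uses the common log-distance path loss model, P(d) = P0 - 10 n log10(d / d0): it fits the path loss exponent n from measured cells by least squares and predicts power at an unmeasured distance. This is an assumption for illustration only; the abstract does not say which propagation models or estimation schemes the paper uses, and the function names are hypothetical.

```python
import math


def fit_path_loss_exponent(samples, p0_dbm, d0=1.0):
    """Least-squares fit of n in P(d) = P0 - 10 * n * log10(d / d0).

    samples: (distance_m, measured_power_dbm) pairs at known cells, with at
    least one distance different from d0. P0 is the power at reference distance d0.
    """
    xs = [10 * math.log10(d / d0) for d, _ in samples]
    ys = [p0_dbm - p for _, p in samples]  # observed loss relative to P0 (dB)
    # ys = n * xs is a line through the origin, so n = sum(x*y) / sum(x*x).
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


def predict_power(distance_m, p0_dbm, n, d0=1.0):
    """Estimated received power (dBm) at a missing cell distance_m from the transmitter."""
    return p0_dbm - 10 * n * math.log10(distance_m / d0)
```

For example, measurements of -60 dBm at 10 m and -90 dBm at 100 m with P0 = -30 dBm give n = 3, and the predicted power at 1000 m is about -120 dBm.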

INT8 Winograd Acceleration for Conv1D Equipped ASR Models Deployed on Mobile Devices

Oct 28, 2020

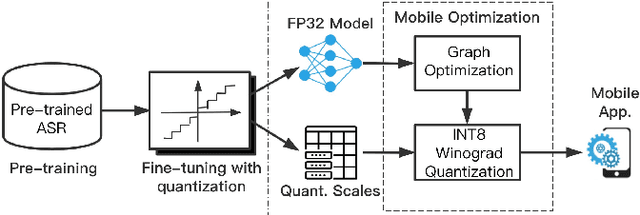

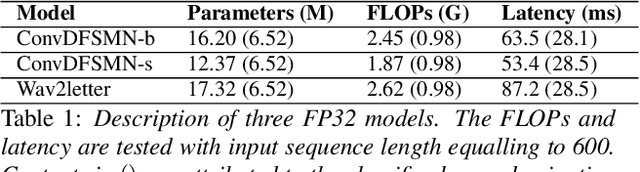

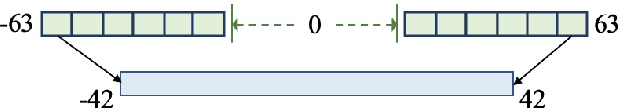

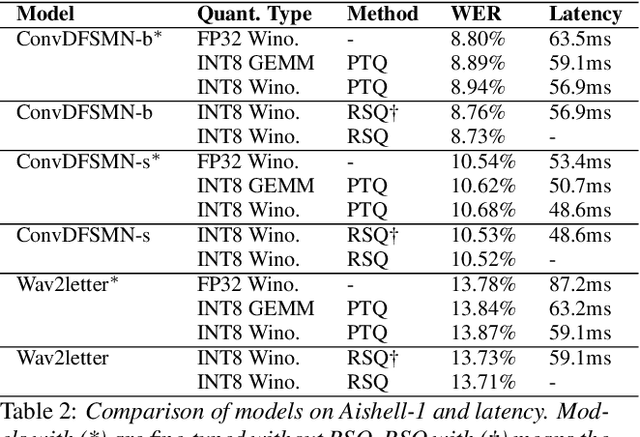

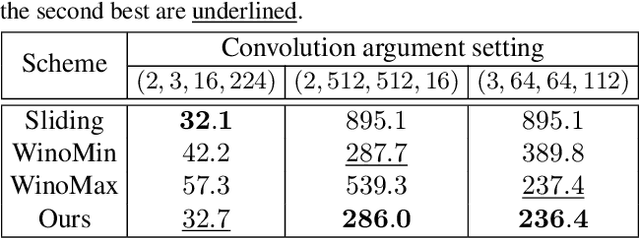

Abstract: The intensive computation of Automatic Speech Recognition (ASR) models hinders their deployment on mobile devices. In this paper, we present a novel quantized Winograd optimization pipeline, which combines quantization and fast convolution to achieve efficient inference acceleration of ASR models on mobile devices. To avoid the information loss caused by combining quantization with Winograd convolution, a Range-Scaled Quantization (RSQ) training method is proposed to expand the quantized numerical range and to distill knowledge from high-precision values. Moreover, an improved Conv1D-equipped DFSMN (ConvDFSMN) model is designed for mobile deployment. We conduct extensive experiments on both ConvDFSMN and Wav2letter models. Results demonstrate that the models can be effectively optimized with the proposed pipeline. In particular, Wav2letter achieves a 1.48× speedup with an approximately 0.07% WER decrease on ARMv7-based mobile devices.
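Winograd minimal filtering is the fast convolution the abstract combines with quantization. For a 3-tap Conv1D, the standard F(2,3) algorithm produces two outputs from four inputs with four multiplications instead of six. The floating-point sketch below checks it against direct convolution; it does not model INT8 quantization or the RSQ training method. Note that the input transform forms sums and differences of inputs, which can roughly double their dynamic range; this is one plausible source of the information loss the abstract mentions when Winograd is combined with low-bit quantization.

```python
def direct_conv1d(x, g):
    """Valid 1D correlation (what deep learning frameworks call convolution)."""
    k = len(g)
    return [sum(x[i + j] * g[j] for j in range(k)) for i in range(len(x) - k + 1)]


def winograd_f23_conv1d(x, g):
    """Valid 1D correlation with a 3-tap filter using Winograd F(2,3).

    Each tile of 4 inputs yields 2 outputs with 4 multiplications
    instead of the 6 needed by the direct method.
    """
    assert len(g) == 3, "F(2,3) requires a 3-tap filter"
    g0, g1, g2 = g
    # Filter transform: computed once per filter and reusable across tiles.
    u0, u1, u2, u3 = g0, (g0 + g1 + g2) / 2, (g0 - g1 + g2) / 2, g2
    n_out = len(x) - 2
    y = []
    for i in range(0, n_out - 1, 2):
        d0, d1, d2, d3 = x[i:i + 4]
        # Input transform (adds/subtracts only) followed by 4 multiplications.
        m1 = (d0 - d2) * u0
        m2 = (d1 + d2) * u1
        m3 = (d2 - d1) * u2
        m4 = (d1 - d3) * u3
        # Output transform.
        y += [m1 + m2 + m3, m2 - m3 - m4]
    if n_out > 0 and n_out % 2:  # one leftover output: compute it directly
        i = n_out - 1
        y.append(x[i] * g0 + x[i + 1] * g1 + x[i + 2] * g2)
    return y
```

In a quantized pipeline the transformed filter values u0..u3 would be precomputed and stored, so the per-tile cost is dominated by the four multiplications.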

MNN: A Universal and Efficient Inference Engine

Feb 27, 2020

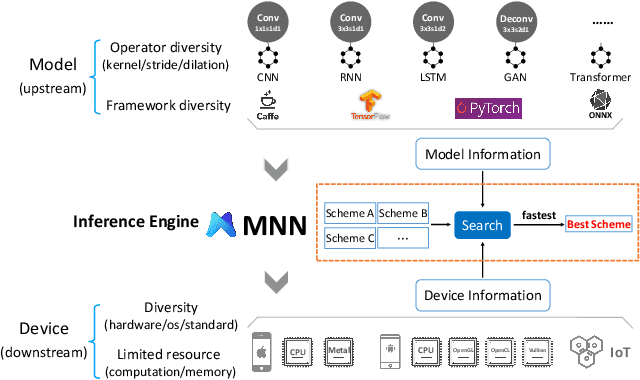

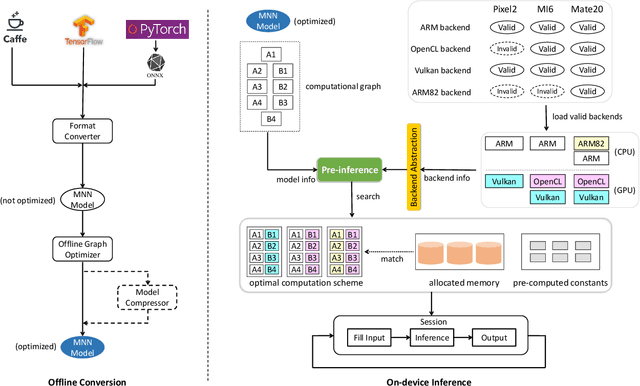

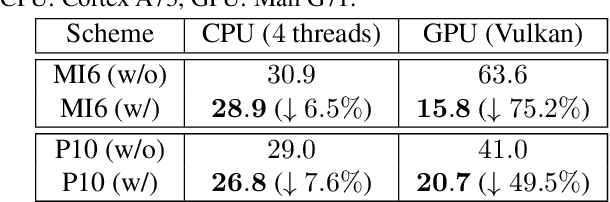

Abstract: Deploying deep learning models on mobile devices has drawn increasing attention recently. However, designing an efficient on-device inference engine faces great challenges in model compatibility, device diversity, and resource limitations. To deal with these challenges, we propose Mobile Neural Network (MNN), a universal and efficient inference engine tailored to mobile applications. In this paper, the contributions of MNN include: (1) presenting a mechanism called pre-inference that performs runtime optimization; (2) delivering thorough kernel optimization on operators to achieve optimal computation performance; (3) introducing a backend abstraction module that enables hybrid scheduling and keeps the engine lightweight. Extensive benchmark experiments demonstrate that MNN performs favorably against other popular lightweight deep learning frameworks. MNN is publicly available at: https://github.com/alibaba/MNN.
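The idea behind contribution (3), a backend abstraction that enables hybrid scheduling, can be illustrated with a toy Python sketch: each operator is assigned to a preferred backend when that backend supports it and falls back to the CPU otherwise, and the assignment is computed once before execution. All class names, operator names, and the fallback rule are hypothetical; this is not MNN's API (MNN itself is a C++ engine).

```python
from abc import ABC, abstractmethod


class Backend(ABC):
    """Hypothetical common interface every backend implements."""
    name: str

    @abstractmethod
    def supports(self, op: str) -> bool: ...

    @abstractmethod
    def run(self, op: str, x: list[float]) -> list[float]: ...


class CPUBackend(Backend):
    """Toy reference backend that implements every operator."""
    name = "cpu"
    _ops = {"relu": lambda v: max(v, 0.0), "neg": lambda v: -v}

    def supports(self, op: str) -> bool:
        return op in self._ops

    def run(self, op: str, x: list[float]) -> list[float]:
        return [self._ops[op](v) for v in x]


class GPUBackend(Backend):
    """Toy stand-in for an accelerator that implements only some operators."""
    name = "gpu"

    def supports(self, op: str) -> bool:
        return op == "relu"

    def run(self, op: str, x: list[float]) -> list[float]:
        return [max(v, 0.0) for v in x]


def schedule(ops, preferred: Backend, fallback: Backend):
    """Decide each op's backend up front: preferred if supported, else fallback."""
    return [(op, preferred if preferred.supports(op) else fallback) for op in ops]


def execute(plan, x: list[float]) -> list[float]:
    """Run the precomputed plan; no scheduling decisions happen here."""
    for op, backend in plan:
        x = backend.run(op, x)
    return x
```

Keeping operator implementations behind a single interface means the scheduler never needs backend-specific code, so adding a backend only requires implementing `supports` and `run`.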

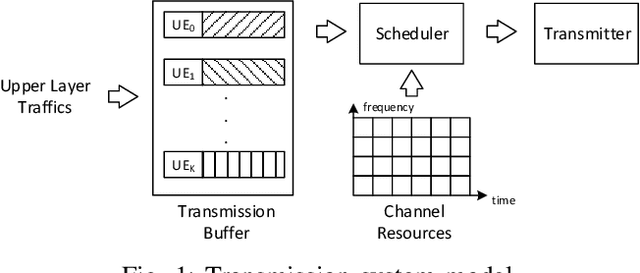

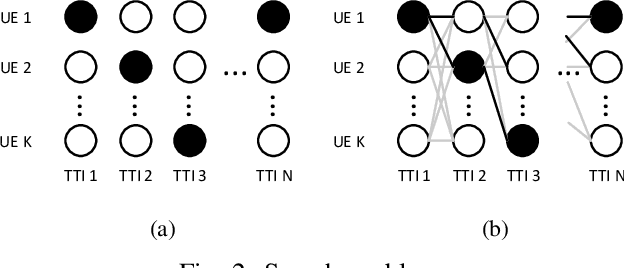

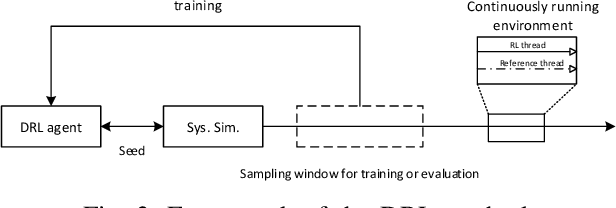

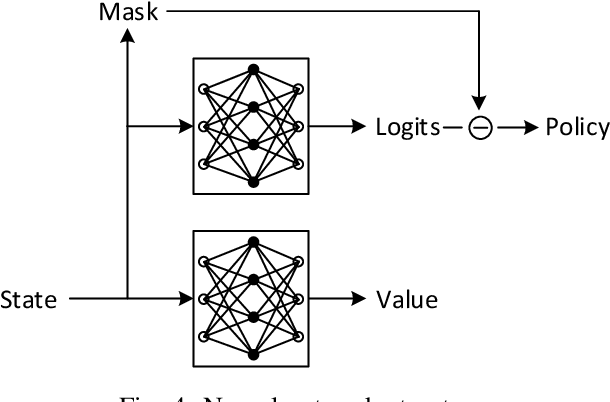

Buffer-aware Wireless Scheduling based on Deep Reinforcement Learning

Nov 13, 2019

Abstract: In this paper, we model the downlink packet scheduling problem for cellular networks, jointly optimizing throughput, fairness, and packet drop rate. Two genie-aided heuristic search methods are employed to explore the solution space. A deep reinforcement learning (DRL) framework with the A2C algorithm is proposed for the optimization problem. Several methods are utilized in the framework to improve sampling and training efficiency and to adapt the algorithm to the specific scheduling problem. Numerical results show that DRL outperforms the baseline algorithm and achieves performance similar to that of the genie-aided methods without using future information.
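For reference, the core computation of A2C (advantage actor-critic) for a single rollout can be sketched as bootstrapped discounted returns, advantages A_t = R_t - V(s_t), and the resulting actor and critic losses. This is the generic algorithm, not the paper's framework; the per-step reward would encode the throughput, fairness, and packet-drop objectives in a way the abstract does not specify, and the function names are illustrative.

```python
def discounted_returns(rewards, bootstrap_value, gamma):
    """n-step returns R_t = r_t + gamma * R_{t+1}, bootstrapped from V(s_T)."""
    returns = []
    running = bootstrap_value
    for r in reversed(rewards):
        running = r + gamma * running
        returns.append(running)
    return returns[::-1]


def a2c_losses(log_probs, values, rewards, bootstrap_value, gamma=0.99):
    """Actor and critic losses for one rollout of advantage actor-critic.

    log_probs: log pi(a_t | s_t) of the actions taken (e.g. which user is scheduled)
    values:    critic estimates V(s_t) for the same steps
    """
    returns = discounted_returns(rewards, bootstrap_value, gamma)
    advantages = [R - v for R, v in zip(returns, values)]
    # Policy gradient term: raise the log-probability of actions with positive
    # advantage. In an autodiff framework the advantage is treated as a constant.
    actor_loss = -sum(lp * a for lp, a in zip(log_probs, advantages)) / len(rewards)
    # Critic regresses V(s_t) toward the bootstrapped return.
    critic_loss = sum(a * a for a in advantages) / len(rewards)
    return actor_loss, critic_loss
```

Using the critic's estimate as a baseline reduces the variance of the policy gradient compared with using raw returns, which is the main reason A2C trains more stably than plain REINFORCE.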
