Houjiang Chen

Serving Large Language Models on Huawei CloudMatrix384

Jun 15, 2025

Abstract: The rapid evolution of large language models (LLMs), driven by growing parameter scales, adoption of mixture-of-experts (MoE) architectures, and expanding context lengths, imposes unprecedented demands on AI infrastructure. Traditional AI clusters face limitations in compute intensity, memory bandwidth, inter-chip communication, and latency, compounded by variable workloads and strict service-level objectives. Addressing these issues requires fundamentally redesigned hardware-software integration. This paper introduces Huawei CloudMatrix, a next-generation AI datacenter architecture, realized in the production-grade CloudMatrix384 supernode. It integrates 384 Ascend 910C NPUs and 192 Kunpeng CPUs interconnected via an ultra-high-bandwidth Unified Bus (UB) network, enabling direct all-to-all communication and dynamic pooling of resources. These features optimize performance for communication-intensive operations, such as large-scale MoE expert parallelism and distributed key-value cache access. To fully leverage CloudMatrix384, we propose CloudMatrix-Infer, an advanced LLM serving solution incorporating three core innovations: a peer-to-peer serving architecture that independently scales prefill, decode, and caching; a large-scale expert parallelism strategy supporting EP320 via efficient UB-based token dispatch; and hardware-aware optimizations including specialized operators, microbatch-based pipelining, and INT8 quantization. Evaluation with the DeepSeek-R1 model shows CloudMatrix-Infer achieves state-of-the-art efficiency: prefill throughput of 6,688 tokens/s per NPU and decode throughput of 1,943 tokens/s per NPU (<50 ms TPOT). It effectively balances throughput and latency, sustaining 538 tokens/s even under stringent 15 ms latency constraints, while INT8 quantization maintains model accuracy across benchmarks.

INT8 Winograd Acceleration for Conv1D Equipped ASR Models Deployed on Mobile Devices

Oct 28, 2020

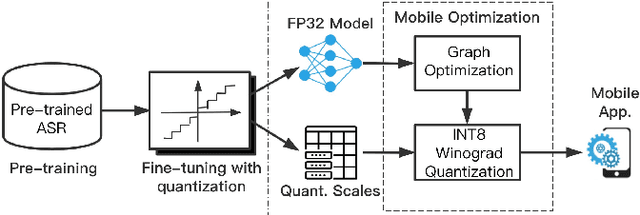

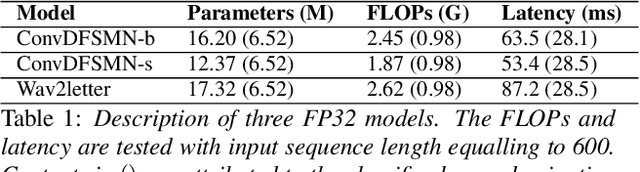

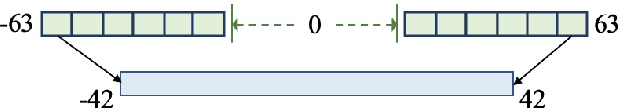

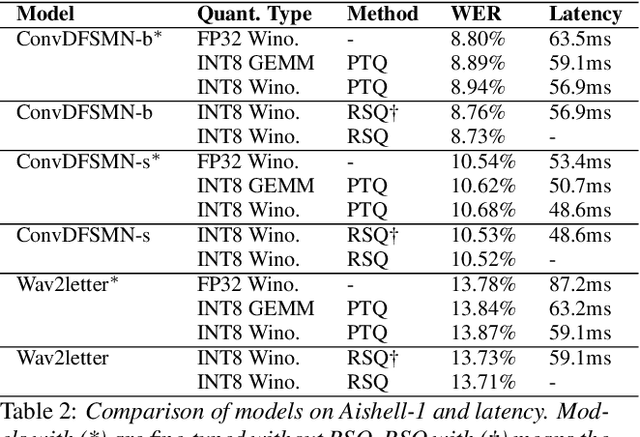

Abstract: The intensive computation of Automatic Speech Recognition (ASR) models hinders their deployment on mobile devices. In this paper, we present a novel quantized Winograd optimization pipeline, which combines quantization and fast convolution to achieve efficient inference acceleration on mobile devices for ASR models. To avoid the information loss caused by combining quantization with Winograd convolution, a Range-Scaled Quantization (RSQ) training method is proposed to expand the quantized numerical range and to distill knowledge from high-precision values. Moreover, an improved Conv1D-equipped DFSMN (ConvDFSMN) model is designed for mobile deployment. We conduct extensive experiments on both ConvDFSMN and Wav2letter models. Results demonstrate that the models can be effectively optimized with the proposed pipeline. In particular, Wav2letter achieves a 1.48× speedup with an approximate 0.07% WER decrease on ARMv7-based mobile devices.
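For readers unfamiliar with the fast convolution mentioned in the abstract, the following is a minimal floating-point sketch of the standard Winograd F(2,3) algorithm for a 3-tap Conv1D. It is not the paper's quantized pipeline and does not implement RSQ; the function names (`direct_conv1d`, `winograd_f23`, `winograd_conv1d`) are hypothetical and chosen only for illustration. It shows how two outputs are produced with four multiplications instead of six.

```python
def direct_conv1d(x, g):
    """Reference: valid-mode sliding dot product of x with a 3-tap filter g."""
    return [sum(x[i + k] * g[k] for k in range(3)) for i in range(len(x) - 2)]


def winograd_f23(d, g):
    """Winograd F(2,3): two outputs from a 4-sample tile using 4 multiplies."""
    d0, d1, d2, d3 = d
    g0, g1, g2 = g
    # Filter transform (in practice computed once per filter, not per tile).
    u0 = g0
    u1 = (g0 + g1 + g2) / 2
    u2 = (g0 - g1 + g2) / 2
    u3 = g2
    # Input transform followed by the four element-wise multiplications.
    m1 = (d0 - d2) * u0
    m2 = (d1 + d2) * u1
    m3 = (d2 - d1) * u2
    m4 = (d1 - d3) * u3
    # Output transform.
    return [m1 + m2 + m3, m2 - m3 - m4]


def winograd_conv1d(x, g):
    """Tile the input with stride 2 and apply F(2,3) to each 4-sample tile."""
    n_out = len(x) - 2
    out = []
    for i in range(0, n_out - 1, 2):
        out.extend(winograd_f23(x[i:i + 4], g))
    if n_out > 0 and n_out % 2 == 1:
        # Odd number of outputs: compute the last one directly.
        i = n_out - 1
        out.append(sum(x[i + k] * g[k] for k in range(3)))
    return out


signal = [1.0, 2.0, -1.0, 0.5, 3.0, -2.0, 4.0]
taps = [0.25, -0.5, 1.0]
fast = winograd_conv1d(signal, taps)
ref = direct_conv1d(signal, taps)
print(all(abs(a - b) < 1e-9 for a, b in zip(fast, ref)))
```

Note that the input transform adds and subtracts neighbouring samples, so transformed values can fall outside the range of the original inputs. This is one plausible reading of why naive INT8 quantization and Winograd convolution combine poorly, though the sketch itself makes no claim about how the paper addresses it.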
