Bridging the Gap between Pre-Training and Fine-Tuning for End-to-End Speech Translation

Sep 19, 2019 · Chengyi Wang, Yu Wu, Shujie Liu, Zhenglu Yang, Ming Zhou

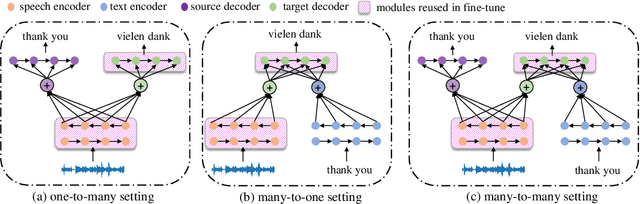

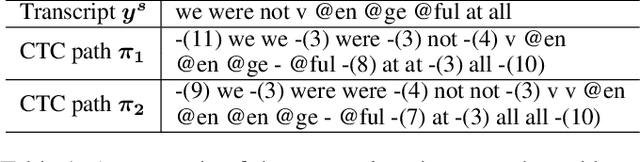

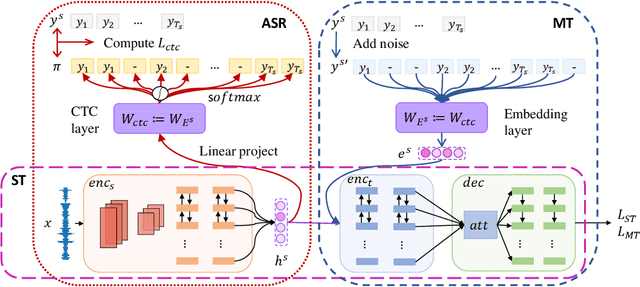

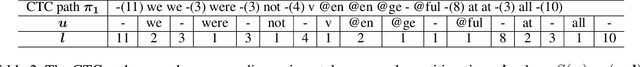

End-to-end speech translation, a hot topic in recent years, aims to translate a segment of audio into a specific language with an end-to-end model. Conventional approaches employ multi-task learning and pre-training methods for this task, but they suffer from the huge gap between pre-training and fine-tuning. To address this issue, we propose a Tandem Connectionist Encoding Network (TCEN), which bridges the gap by reusing all subnets in fine-tuning, keeping the roles of subnets consistent, and pre-training the attention module. Furthermore, we propose two simple but effective methods to guarantee that the speech encoder outputs and the MT encoder inputs are consistent in terms of semantic representation and sequence length. Experimental results show that our model outperforms baselines by 2.2 BLEU on a large benchmark dataset.

Accelerating Transformer Decoding via a Hybrid of Self-attention and Recurrent Neural Network

Sep 05, 2019 · Chengyi Wang, Shuangzhi Wu, Shujie Liu

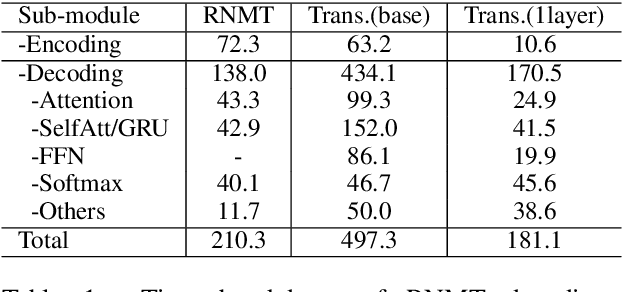

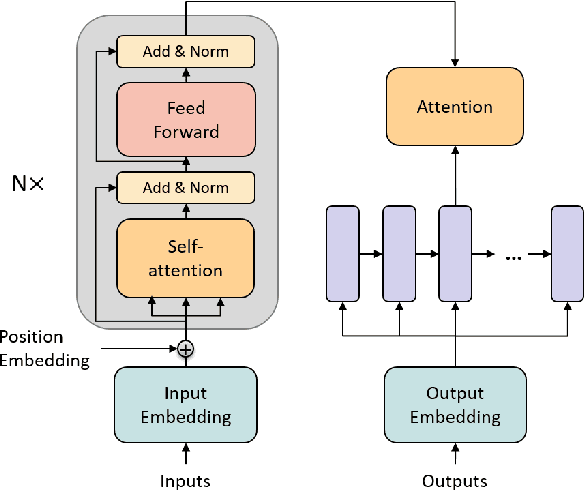

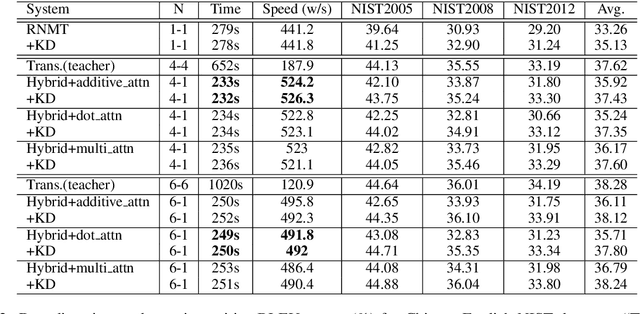

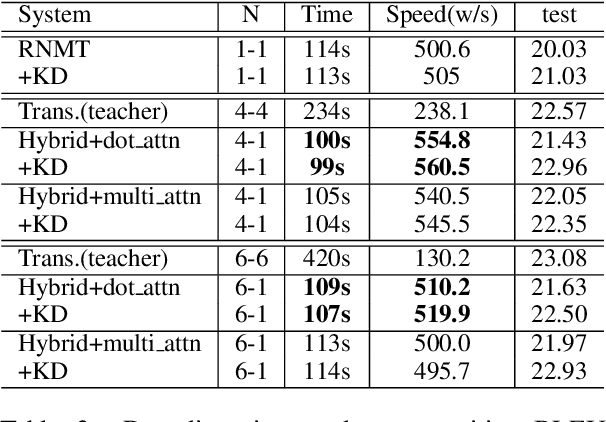

Due to its highly parallelizable architecture, the Transformer is faster to train than RNN-based models and is widely used in machine translation tasks. However, at inference time, each output word requires all the hidden states of the previously generated words, which limits parallelization and makes it much slower than RNN-based models. In this paper, we systematically analyze the time cost of different components of both the Transformer and RNN-based models. Based on this analysis, we propose a hybrid network of self-attention and RNN structures, in which the highly parallelizable self-attention is used as the encoder and the simpler RNN structure is used as the decoder. Our hybrid network can decode four times faster than the Transformer. In addition, with the help of knowledge distillation, our hybrid network achieves translation quality comparable to the original Transformer.

Source Dependency-Aware Transformer with Supervised Self-Attention

Sep 05, 2019 · Chengyi Wang, Shuangzhi Wu, Shujie Liu

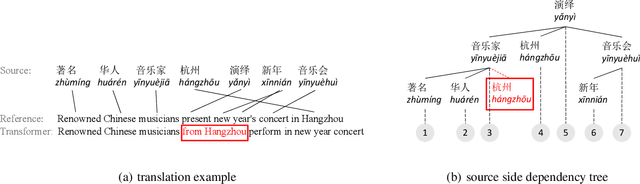

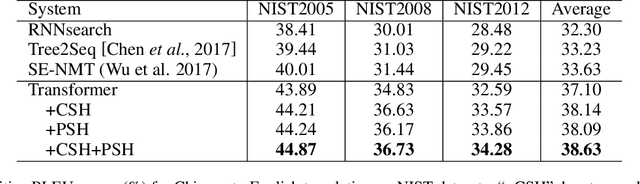

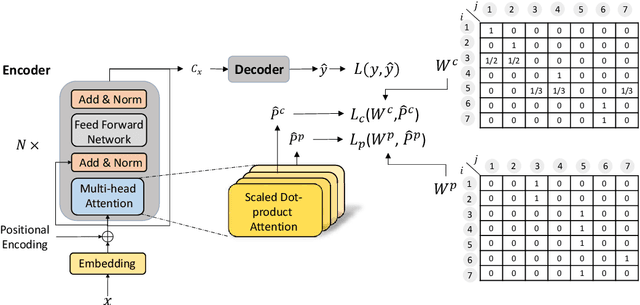

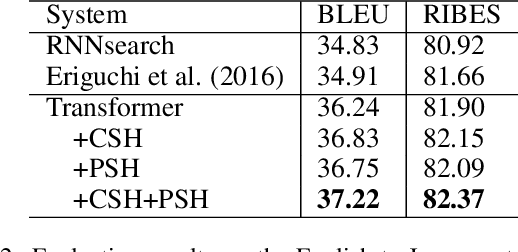

Recently, the Transformer has achieved state-of-the-art performance on many machine translation tasks. However, without syntax knowledge explicitly considered in the encoder, incorrect context information that violates the syntax structure may be integrated into source hidden states, leading to erroneous translations. In this paper, we propose a novel method to incorporate source dependencies into the Transformer. Specifically, we adopt the source dependency tree and define two matrices to represent the dependency relations. Based on these matrices, two heads in the multi-head self-attention module are trained in a supervised manner, and two extra cross-entropy losses are introduced into the training objective function. Under this training objective, the model is trained to learn the source dependency relations directly. Without requiring pre-parsed input during inference, our model can generate better translations with the dependency-aware context information. Experiments on bi-directional Chinese-to-English, English-to-Japanese and English-to-German translation tasks show that our proposed method can significantly improve the Transformer baseline.
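The idea of supervising an attention head with a cross-entropy loss can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name, the toy sentence, and the assumption that the target for each token is the position of its syntactic head are all hypothetical, since the abstract does not specify how the two matrices are defined.

```python
import math

def supervised_head_loss(attention, head_index):
    """Mean cross entropy between attention rows and one-hot dependency targets.

    attention[i][j] is the weight token i puts on token j (each row sums to 1).
    head_index[i] is the position of token i's syntactic head (hypothetical labels).
    """
    eps = 1e-12  # avoids log(0)
    losses = [-math.log(row[h] + eps) for row, h in zip(attention, head_index)]
    return sum(losses) / len(losses)

# Toy 3-token sentence "she reads books"; "reads" is the root and, as an
# assumption made for this sketch, points to itself.
head_index = [1, 1, 1]
uniform = [[1 / 3] * 3 for _ in range(3)]        # attention with no syntactic preference
focused = [[0.05, 0.9, 0.05] for _ in range(3)]  # attention concentrated on the head word

loss_uniform = supervised_head_loss(uniform, head_index)
loss_focused = supervised_head_loss(focused, head_index)
```

Under these assumptions, the head whose attention concentrates on the labeled head word gets the lower loss, which is the signal that would push a supervised head toward the dependency structure during training.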

Explicit Cross-lingual Pre-training for Unsupervised Machine Translation

Aug 31, 2019 · Shuo Ren, Yu Wu, Shujie Liu, Ming Zhou, Shuai Ma

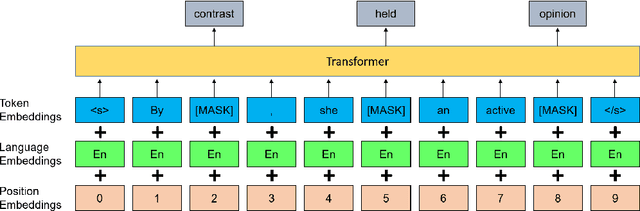

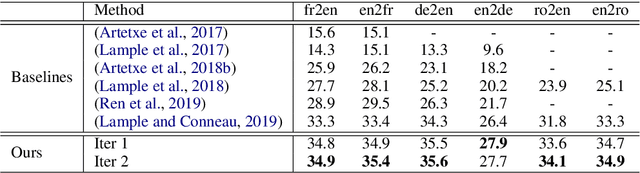

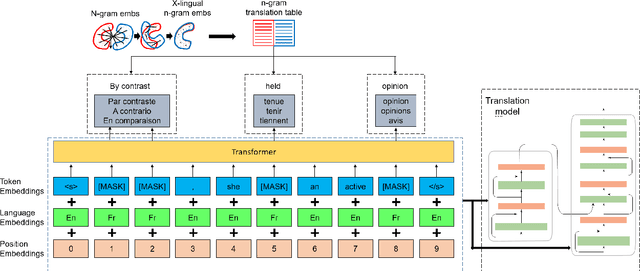

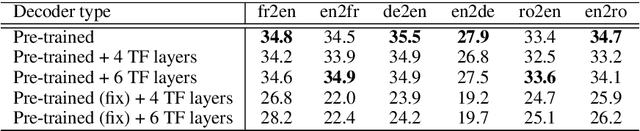

Pre-training has proven to be effective in unsupervised machine translation due to its ability to model deep context information in cross-lingual scenarios. However, the cross-lingual information obtained from shared BPE spaces is inexplicit and limited. In this paper, we propose a novel cross-lingual pre-training method for unsupervised machine translation by incorporating explicit cross-lingual training signals. Specifically, we first calculate cross-lingual n-gram embeddings and infer an n-gram translation table from them. With those n-gram translation pairs, we propose a new pre-training model called Cross-lingual Masked Language Model (CMLM), which randomly chooses source n-grams in the input text stream and predicts their translation candidates at each time step. Experiments show that our method can incorporate beneficial cross-lingual information into pre-trained models. Taking pre-trained CMLM models as the encoder and decoder, we significantly improve the performance of unsupervised machine translation.
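The n-gram selection step can be sketched roughly as below. This is an illustration under stated assumptions rather than the CMLM procedure itself: the function `mask_ngrams`, the `[MASK]` symbol, the `mask_prob` and `max_n` parameters, and the tiny English-to-French table are all hypothetical, and the real model infers its translation table from cross-lingual n-gram embeddings.

```python
import random

def mask_ngrams(tokens, table, rng, mask_prob=0.5, max_n=3):
    """Replace source n-grams found in `table` with [MASK] and record their translations."""
    masked, targets = [], []
    i = 0
    while i < len(tokens):
        match = None
        # Prefer the longest n-gram starting at position i that has a table entry.
        for n in range(max_n, 0, -1):
            ngram = tuple(tokens[i:i + n])
            if len(ngram) == n and ngram in table:
                match = ngram
                break
        if match is not None and rng.random() < mask_prob:
            # One [MASK] per source token keeps positions aligned with the input.
            masked.extend(["[MASK]"] * len(match))
            targets.append((i, table[match]))
            i += len(match)
        else:
            masked.append(tokens[i])
            i += 1
    return masked, targets

# Hypothetical n-gram translation table and input sentence.
table = {("thank", "you"): ["merci"], ("very", "much"): ["beaucoup"]}
tokens = ["thank", "you", "very", "much"]

masked_all, targets_all = mask_ngrams(tokens, table, random.Random(0), mask_prob=1.0)
masked_none, targets_none = mask_ngrams(tokens, table, random.Random(0), mask_prob=0.0)
```

With `mask_prob=1.0` every matching n-gram is masked and paired with its translation candidates; with `mask_prob=0.0` the sentence passes through unchanged. A model trained on such pairs would be asked to predict the recorded translations at the masked positions.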

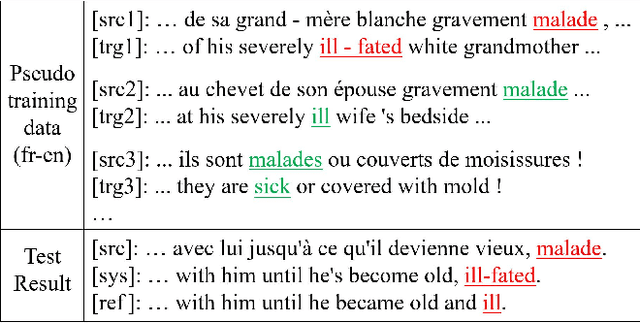

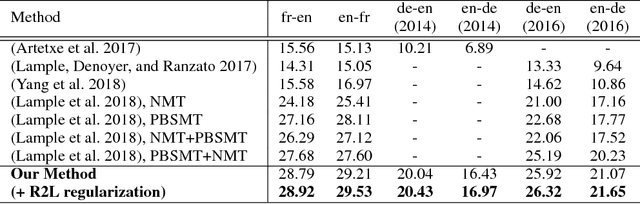

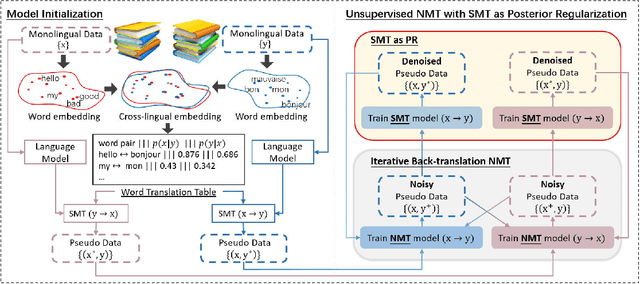

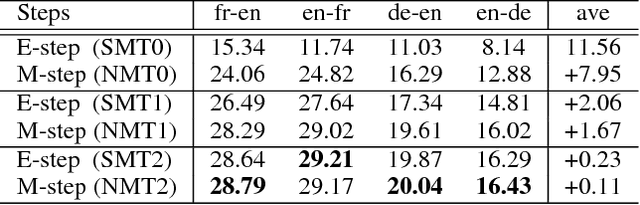

Unsupervised Neural Machine Translation with SMT as Posterior Regularization

Jan 14, 2019 · Shuo Ren, Zhirui Zhang, Shujie Liu, Ming Zhou, Shuai Ma

Without a real bilingual corpus available, unsupervised Neural Machine Translation (NMT) typically requires pseudo-parallel data generated with the back-translation method for model training. However, due to weak supervision, the pseudo data inevitably contain noise and errors that are accumulated and reinforced in the subsequent training process, leading to poor translation performance. To address this issue, we introduce phrase-based Statistical Machine Translation (SMT) models, which are robust to noisy data, as posterior regularizations to guide the training of unsupervised NMT models in the iterative back-translation process. Our method starts from SMT models built with pre-trained language models and word-level translation tables inferred from cross-lingual embeddings. Then SMT and NMT models are optimized jointly and boost each other incrementally in a unified EM framework. In this way, (1) the negative effect caused by errors in the iterative back-translation process can be alleviated in a timely manner by SMT filtering noise from its phrase tables; meanwhile, (2) NMT can compensate for the deficiency of fluency inherent in SMT. Experiments conducted on en-fr and en-de translation tasks show that our method outperforms the strong baseline and achieves new state-of-the-art unsupervised machine translation performance.

Close to Human Quality TTS with Transformer

Sep 19, 2018 · Naihan Li, Shujie Liu, Yanqing Liu, Sheng Zhao, Ming Liu, Ming Zhou

Although end-to-end neural text-to-speech (TTS) methods (such as Tacotron2) have been proposed and achieve state-of-the-art performance, they still suffer from two problems: 1) low efficiency during training and inference; 2) difficulty modeling long-range dependencies with current recurrent neural networks (RNNs). Inspired by the success of the Transformer network in neural machine translation (NMT), in this paper we introduce and adapt the multi-head attention mechanism to replace the RNN structures and also the original attention mechanism in Tacotron2. With the help of multi-head self-attention, the hidden states in the encoder and decoder are constructed in parallel, which improves training efficiency. Meanwhile, any two inputs at different times are connected directly by a self-attention mechanism, which solves the long-range dependency problem effectively. Using phoneme sequences as input, our Transformer TTS network generates mel spectrograms, followed by a WaveNet vocoder to output the final audio results. Experiments are conducted to test the efficiency and performance of our new network. In terms of efficiency, our Transformer TTS network speeds up training by about 4.25 times compared with Tacotron2. In terms of performance, rigorous human tests show that our proposed model achieves state-of-the-art performance (outperforming Tacotron2 by a gap of 0.048) and is very close to human quality (4.39 vs 4.44 in MOS).

Approximate Distribution Matching for Sequence-to-Sequence Learning

Sep 02, 2018 · Wenhu Chen, Guanlin Li, Shujie Liu, Zhirui Zhang, Mu Li, Ming Zhou

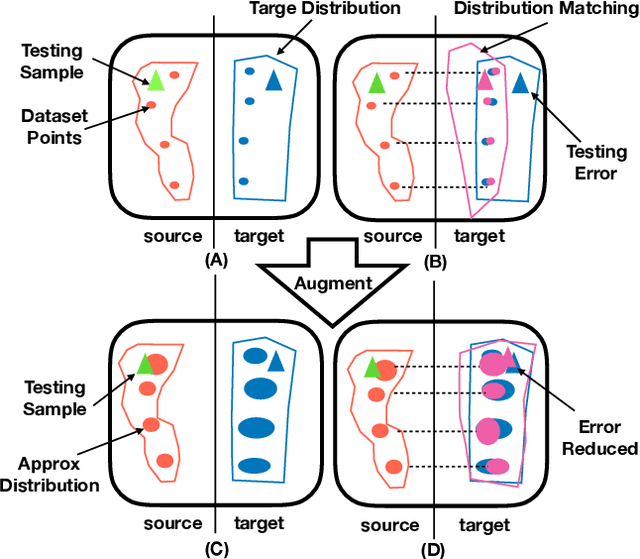

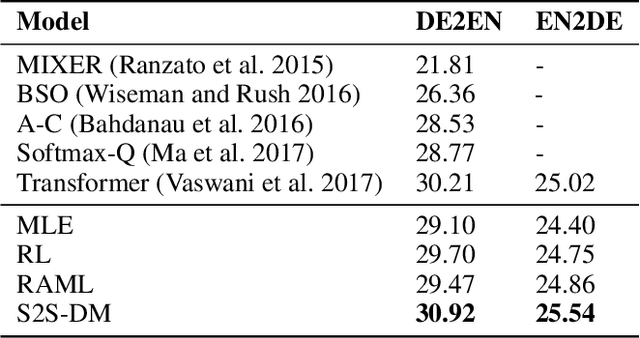

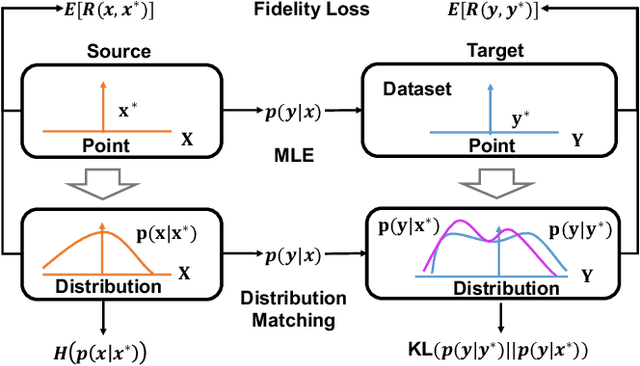

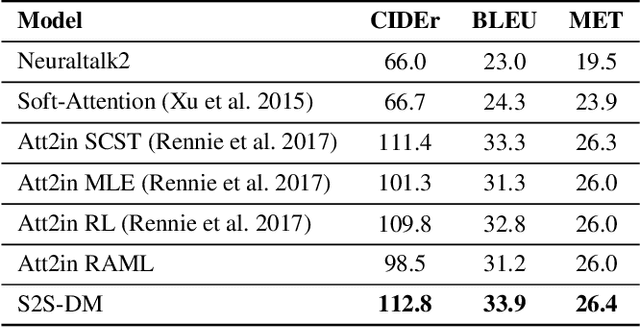

Sequence-to-sequence models were introduced to tackle many real-life problems such as machine translation, summarization, and image captioning. The standard optimization algorithms are mainly based on example-to-example matching, such as maximum likelihood estimation, which is known to suffer from the data sparsity problem. Here we present an alternate view that explains sequence-to-sequence learning as a distribution matching problem, where each source or target example is viewed as representing a local latent distribution in the source or target domain. We then interpret sequence-to-sequence learning as learning a transductive model that transforms the source local latent distributions to match their corresponding target distributions. In our framework, we approximate both the source and target latent distributions with recurrent neural networks (augmenters). During training, the parallel augmenters learn to better approximate the local latent distributions, while the sequence prediction model learns to minimize the KL-divergence between the transformed source distributions and the approximated target distributions. This algorithm can alleviate the data sparsity issues in sequence learning by locally augmenting more unseen data pairs and increasing the model's robustness. Experiments conducted on machine translation and image captioning consistently demonstrate the superiority of our proposed algorithm over the other competing algorithms.

Style Transfer as Unsupervised Machine Translation

Aug 23, 2018 · Zhirui Zhang, Shuo Ren, Shujie Liu, Jianyong Wang, Peng Chen, Mu Li, Ming Zhou, Enhong Chen

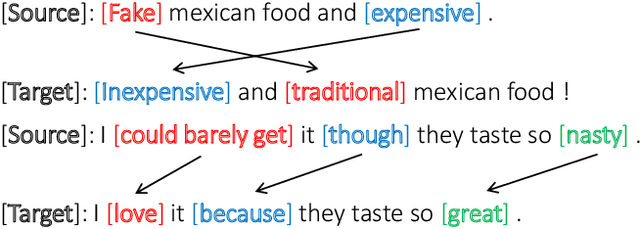

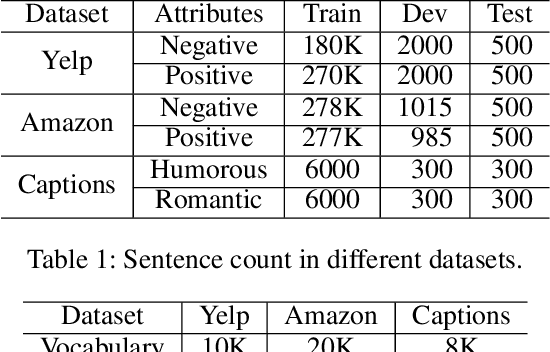

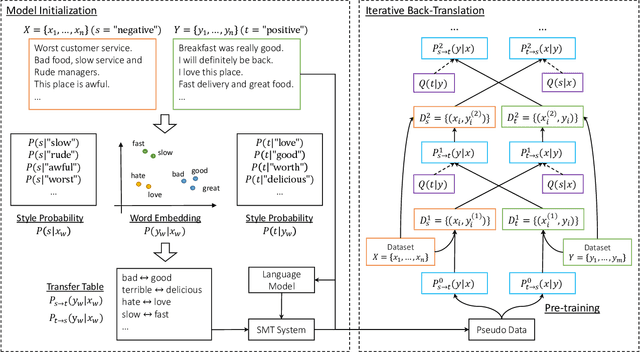

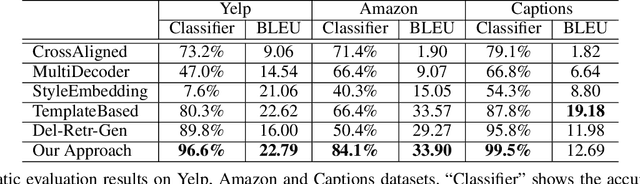

Language style transfer rephrases text with specific stylistic attributes while preserving the original attribute-independent content. One main challenge in learning a style transfer system is the lack of parallel data in which the source sentence is in one style and the target sentence is in another. Under this constraint, in this paper we adapt unsupervised machine translation methods to the task of automatic style transfer. We first take advantage of style-preference information and word embedding similarity to produce pseudo-parallel data with a statistical machine translation (SMT) framework. Then the iterative back-translation approach is employed to jointly train two neural machine translation (NMT) based transfer systems. To control the noise generated during joint training, a style classifier is introduced to guarantee the accuracy of style transfer and penalize bad candidates in the generated pseudo data. Experiments on benchmark datasets show that our proposed method outperforms previous state-of-the-art models in terms of both accuracy of style transfer and quality of input-output correspondence.

Regularizing Neural Machine Translation by Target-bidirectional Agreement

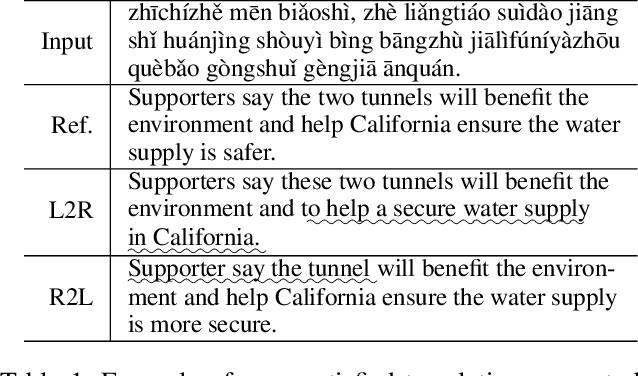

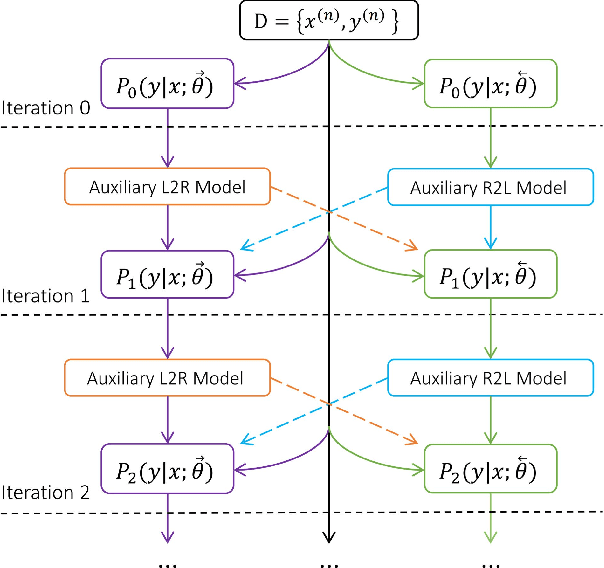

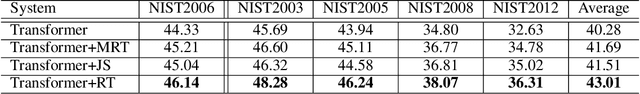

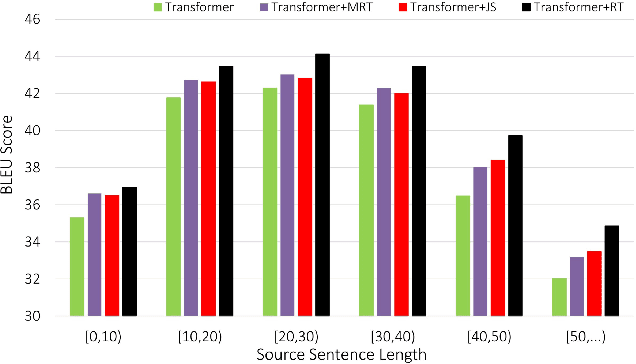

Aug 13, 2018 · Zhirui Zhang, Shuangzhi Wu, Shujie Liu, Mu Li, Ming Zhou, Enhong Chen

Although Neural Machine Translation (NMT) has achieved remarkable progress in the past several years, most NMT systems still suffer from a fundamental shortcoming shared with other sequence generation tasks: errors made early in the generation process are fed as inputs to the model and can be quickly amplified, harming subsequent sequence generation. To address this issue, we propose a novel model regularization method for NMT training, which aims to improve the agreement between translations generated by left-to-right (L2R) and right-to-left (R2L) NMT decoders. This goal is achieved by introducing two Kullback-Leibler divergence regularization terms into the NMT training objective to reduce the mismatch between the output probabilities of the L2R and R2L models. In addition, we employ a joint training strategy to allow the L2R and R2L models to improve each other in an interactive update process. Experimental results show that our proposed method significantly outperforms state-of-the-art baselines on Chinese-English and English-German translation tasks.
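The agreement idea can be illustrated with a small sketch. It is not the authors' training objective: the two distributions below are made-up probabilities over three hypothetical candidate translations, and the function names are illustrative. It only shows how two KL terms, one in each direction, penalize disagreement between an L2R and an R2L model.

```python
import math

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions given as lists of probabilities."""
    # Terms with p_i == 0 contribute nothing; q_i is assumed to be positive.
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def agreement_penalty(p_l2r, p_r2l):
    """Sum of the two directed KL terms; zero only when both models agree."""
    return kl_divergence(p_l2r, p_r2l) + kl_divergence(p_r2l, p_l2r)

# Hypothetical probabilities that each decoder assigns to the same three candidates.
p_l2r = [0.7, 0.2, 0.1]
p_r2l = [0.5, 0.3, 0.2]

penalty = agreement_penalty(p_l2r, p_r2l)
no_penalty = agreement_penalty(p_l2r, p_l2r)
```

In this toy setting the penalty is positive when the two decoders disagree and vanishes when they assign identical probabilities, so adding it to a training loss would pull the two models toward each other.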

Triangular Architecture for Rare Language Translation

Jul 11, 2018 · Shuo Ren, Wenhu Chen, Shujie Liu, Mu Li, Ming Zhou, Shuai Ma

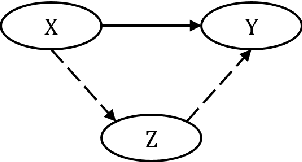

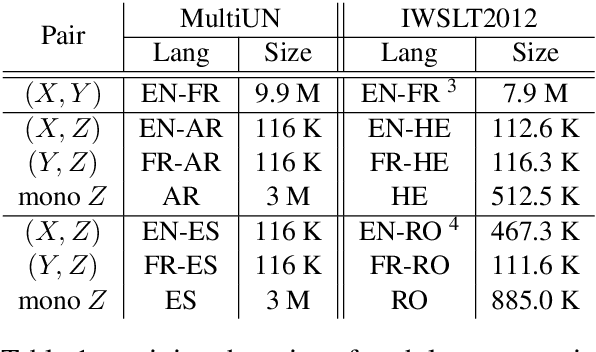

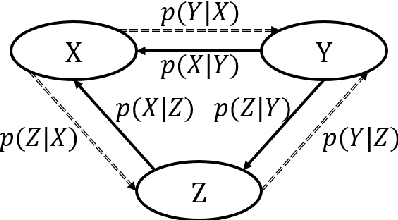

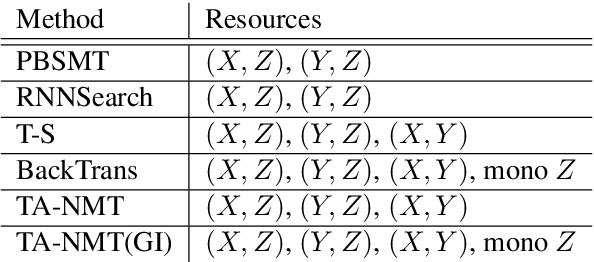

Neural Machine Translation (NMT) performs poorly on the low-resource language pair $(X,Z)$, especially when $Z$ is a rare language. By introducing another rich language $Y$, we propose a novel triangular training architecture (TA-NMT) that leverages bilingual data $(Y,Z)$ (which may be small) and $(X,Y)$ (which can be rich) to improve the translation performance of low-resource pairs. In this triangular architecture, $Z$ is taken as the intermediate latent variable, and translation models of $Z$ are jointly optimized with a unified bidirectional EM algorithm under the goal of maximizing the translation likelihood of $(X,Y)$. Empirical results demonstrate that our method significantly improves the translation quality of rare languages on the MultiUN and IWSLT2012 datasets, and achieves even better performance when combined with back-translation methods.
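Treating $Z$ as a latent variable suggests a standard variational lower bound, sketched here under assumptions that the abstract does not state: lowercase $x$, $y$, $z$ denote sentences in languages $X$, $Y$, $Z$; $y$ is assumed to be conditionally independent of $x$ given $z$; and $q(z)$ is any auxiliary distribution over $z$. By Jensen's inequality,

$$
\log p(y \mid x) = \log \sum_{z} p(z \mid x)\, p(y \mid z) \;\ge\; \mathbb{E}_{q(z)}\big[\log p(y \mid z)\big] - \mathrm{KL}\big(q(z) \,\|\, p(z \mid x)\big)
$$

An EM-style procedure would alternate between tightening this bound through the choice of $q(z)$ and improving the translation models $p(z \mid x)$ and $p(y \mid z)$. Whether this matches the exact objective used in TA-NMT is not confirmed by the abstract.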
