Meng Chen


OTF: Optimal Transport based Fusion of Supervised and Self-Supervised Learning Models for Automatic Speech Recognition

Jun 05, 2023

Abstract:Self-Supervised Learning (SSL) Automatic Speech Recognition (ASR) models have shown great promise over Supervised Learning (SL) ones in low-resource settings. However, the advantages of SSL are gradually weakened when the amount of labeled data increases in many industrial applications. To further improve the ASR performance when abundant labels are available, we first explore the potential of combining SL and SSL ASR models via analyzing their complementarity in recognition accuracy and optimization property. Then, we propose a novel Optimal Transport based Fusion (OTF) method for SL and SSL models without incurring extra computation cost in inference. Specifically, optimal transport is adopted to softly align the layer-wise weights to unify the two different networks into a single one. Experimental results on the public 1k-hour English LibriSpeech dataset and our in-house 2.6k-hour Chinese dataset show that OTF largely outperforms the individual models with lower error rates.
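As a rough illustration of what "softly aligning layer-wise weights" could look like, the sketch below computes an entropic optimal transport plan (Sinkhorn iterations) between the neurons of two layers and then interpolates the aligned weights. It is a minimal sketch under stated assumptions, not the method from the paper: the function names, the squared-distance cost, the uniform marginals, and the `reg` and `alpha` parameters are all hypothetical, and a real fusion would also have to propagate each alignment to the next layer's input dimension.

```python
import math


def sinkhorn(cost, reg=0.1, n_iters=200):
    """Entropic OT plan between uniform marginals (illustrative assumption)."""
    n, m = len(cost), len(cost[0])
    a = [1.0 / n] * n  # uniform mass over neurons of model A
    b = [1.0 / m] * m  # uniform mass over neurons of model B
    kernel = [[math.exp(-c / reg) for c in row] for row in cost]
    u, v = [1.0] * n, [1.0] * m
    for _ in range(n_iters):
        u = [a[i] / sum(kernel[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b[j] / sum(kernel[i][j] * u[i] for i in range(n)) for j in range(m)]
    return [[u[i] * kernel[i][j] * v[j] for j in range(m)] for i in range(n)]


def sq_dist(x, y):
    """Squared Euclidean distance between two weight rows."""
    return sum((p - q) ** 2 for p, q in zip(x, y))


def fuse_layer(w_a, w_b, reg=0.1, alpha=0.5):
    """Softly align the rows of w_b to w_a, then interpolate the two layers."""
    cost = [[sq_dist(ra, rb) for rb in w_b] for ra in w_a]
    plan = sinkhorn(cost, reg)
    n, m, d = len(w_a), len(w_b), len(w_b[0])
    # Barycentric projection: each aligned row is a plan-weighted mix of w_b rows.
    aligned = [
        [n * sum(plan[i][j] * w_b[j][k] for j in range(m)) for k in range(d)]
        for i in range(n)
    ]
    return [
        [alpha * x + (1 - alpha) * y for x, y in zip(ra, rb)]
        for ra, rb in zip(w_a, aligned)
    ]


# Toy case: model B holds the same two neurons as model A in swapped order.
w_a = [[1.0, 0.0], [0.0, 1.0]]
w_b = [[0.0, 1.0], [1.0, 0.0]]
fused = fuse_layer(w_a, w_b)
```

In this toy case the transport plan undoes the swap, so the fused layer stays close to `w_a` instead of collapsing to the naive average of mismatched neurons. With large costs and a small `reg`, the plain exponential kernel can underflow; a log-domain Sinkhorn would be the usual remedy.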

DiffusEmp: A Diffusion Model-Based Framework with Multi-Grained Control for Empathetic Response Generation

Jun 02, 2023

Abstract:Empathy is a crucial factor in open-domain conversations, which naturally shows one's care and understanding for others. Though several methods have been proposed to generate empathetic responses, existing works often lead to monotonous empathy, that is, generic and safe expressions. In this paper, we propose to use explicit control to guide the empathy expression and design a framework, DiffusEmp, based on a conditional diffusion language model to unify the utilization of dialogue context and attribute-oriented control signals. Specifically, communication mechanism, intent, and semantic frame are imported as multi-grained signals that control the empathy realization from coarse to fine levels. We then design a specific masking strategy to reflect the relationship between multi-grained signals and response tokens, and integrate it into the diffusion model to influence the generative process. Experimental results on the benchmark dataset EmpatheticDialogue show that our framework outperforms competitive baselines in terms of controllability, informativeness, and diversity without loss of context-relatedness.

Learning to Generate Poetic Chinese Landscape Painting with Calligraphy

May 08, 2023

Abstract:In this paper, we present a novel system (denoted as Polaca) to generate poetic Chinese landscape painting with calligraphy. Unlike previous single image-to-image painting generation, Polaca takes the classic poetry as input and outputs the artistic landscape painting image with the corresponding calligraphy. It is equipped with three different modules to complete the whole piece of landscape painting artwork: the first one is a text-to-image module to generate landscape painting image, the second one is an image-to-image module to generate stylistic calligraphy image, and the third one is an image fusion module to fuse the two images into a whole piece of aesthetic artwork.

Latent Evolution Model for Change Point Detection in Time-varying Networks

Dec 17, 2022

Abstract:Graph-based change point detection (CPD) plays an irreplaceable role in discovering anomalous graphs in time-varying networks. While several techniques have been proposed to detect change points by identifying whether there is a significant difference between the target network and its immediate predecessors, they neglect the natural evolution of the network. In practice, real-world graphs such as social networks, traffic networks, and rating networks are constantly evolving over time. To address this, we treat CPD as a prediction task and propose a novel CPD method for dynamic graphs via a latent evolution model. Our method focuses on learning the low-dimensional representations of networks and capturing the evolving patterns of these learned latent representations simultaneously. Once the evolving patterns are learned, a prediction of the target network can be obtained. Then, we detect the change points by comparing the prediction with the actual network using a trade-off strategy, which balances the importance of the predicted network against the normal graph pattern extracted from previous networks. Intensive experiments conducted on both synthetic and real-world datasets show the effectiveness and superiority of our model.
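The trade-off strategy described above can be sketched as a weighted anomaly score over latent network representations. This is a minimal sketch under stated assumptions rather than the paper's model: the linear extrapolation stands in for the learned latent evolution model, the window mean stands in for the "normal graph pattern", and the names `change_scores`, `lam`, and `window` are hypothetical.

```python
import math


def l2(x, y):
    """Euclidean distance between two latent vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def change_scores(latents, lam=0.5, window=3):
    """Score each snapshot against a prediction and a normal pattern."""
    scores = []
    for t in range(window, len(latents)):
        prev = latents[t - window:t]
        # Stand-in evolution model: extrapolate linearly from the last two snapshots.
        predicted = [2 * b - a for a, b in zip(prev[-2], prev[-1])]
        # Stand-in normal pattern: mean latent vector over the window.
        normal = [sum(col) / window for col in zip(*prev)]
        scores.append(
            lam * l2(latents[t], predicted) + (1 - lam) * l2(latents[t], normal)
        )
    return scores


# One-dimensional toy sequence with a jump at the fifth snapshot (index 4).
latents = [[0.0], [1.0], [2.0], [3.0], [10.0], [11.0]]
scores = change_scores(latents)
```

The first score corresponds to snapshot index `window`, so the largest score here lands on the snapshot where the jump occurs. A snapshot would be flagged as a change point when its score exceeds a threshold, which this sketch leaves to the caller.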

MNER-QG: An End-to-End MRC framework for Multimodal Named Entity Recognition with Query Grounding

Nov 27, 2022

Abstract:Multimodal named entity recognition (MNER) is a critical step in information extraction, which aims to detect entity spans and classify them into the corresponding entity types given a sentence-image pair. Existing methods either (1) obtain named entities with coarse-grained visual clues from attention mechanisms, or (2) first detect fine-grained visual regions with toolkits and then recognize named entities. However, they suffer from improper alignment between entity types and visual regions or from error propagation in the two-stage pipeline, which ultimately introduces irrelevant visual information into texts. In this paper, we propose a novel end-to-end framework named MNER-QG that can simultaneously perform MRC-based multimodal named entity recognition and query grounding. Specifically, with the assistance of queries, MNER-QG can provide prior knowledge of entity types and visual regions, and further enhance representations of both texts and images. To conduct the query grounding task, we provide manual annotations and weak supervision obtained by training a highly flexible visual grounding model with transfer learning. We conduct extensive experiments on two public MNER datasets, Twitter2015 and Twitter2017. Experimental results show that MNER-QG outperforms the current state-of-the-art models on the MNER task, and also improves the query grounding performance.

Privacy-Utility Balanced Voice De-Identification Using Adversarial Examples

Nov 10, 2022

Abstract:Faced with the threat of identity leakage during voice data publishing, users face a privacy-utility dilemma when enjoying convenient voice services. Existing studies employ direct modification or text-based re-synthesis to de-identify users' voices, but this results in inconsistent audibility in the presence of human participants. In this paper, we propose a voice de-identification system, which uses adversarial examples to balance the privacy and utility of voice services. Instead of typical additive examples that induce perceivable distortions, we design a novel convolutional adversarial example that modulates perturbations into real-world room impulse responses. Benefiting from this, our system can protect user identity from exposure by Automatic Speaker Identification (ASI) while retaining the perceptual quality of the voice for non-intrusive de-identification. Moreover, our system learns a compact speaker distribution through a conditional variational auto-encoder to sample diverse target embeddings on demand. Combining diverse target generation and input-specific perturbation construction, our system enables any-to-any identity transformation for adaptive de-identification. Experimental results show that our system achieves 98% and 79% successful de-identification on mainstream ASIs and commercial systems, respectively, with an objective Mel cepstral distortion of 4.31dB and a subjective mean opinion score of 4.48.

UFO2: A unified pre-training framework for online and offline speech recognition

Oct 26, 2022

Abstract:In this paper, we propose a Unified pre-training Framework for Online and Offline (UFO2) Automatic Speech Recognition (ASR), which 1) simplifies the two separate training workflows for online and offline modes into one process, and 2) improves the Word Error Rate (WER) performance with limited utterance annotation. Specifically, we extend the conventional offline-mode Self-Supervised Learning (SSL)-based ASR approach to a unified manner, where the model training is conditioned on both the full-context and dynamic-chunked inputs. To enhance the pre-trained representation model, a stop-gradient operation is applied to decouple the online-mode objectives from the quantizer. Moreover, in both the pre-training and the downstream fine-tuning stages, joint losses are proposed to train the unified model with full weight sharing for the two modes. Experimental results on the LibriSpeech dataset show that UFO2 outperforms the SSL-based baseline method by 29.7% and 18.2% relative WER reduction in offline and online modes, respectively.
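For readers unfamiliar with the metric, a relative WER reduction is the drop in WER expressed as a fraction of the baseline WER. The helper below is a small illustration of that arithmetic; the function name and the WER values in the usage line are hypothetical and are not results from the paper.

```python
def relative_wer_reduction(baseline_wer, new_wer):
    """Return the relative WER reduction in percent."""
    if baseline_wer <= 0:
        raise ValueError("baseline WER must be positive")
    return (baseline_wer - new_wer) / baseline_wer * 100.0


# Hypothetical numbers: going from 10.0% WER to 8.0% WER.
reduction = relative_wer_reduction(10.0, 8.0)
```

With these made-up values, an absolute drop of 2 WER points corresponds to a 20% relative reduction, which is why relative figures look larger than absolute ones when the baseline WER is already low.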

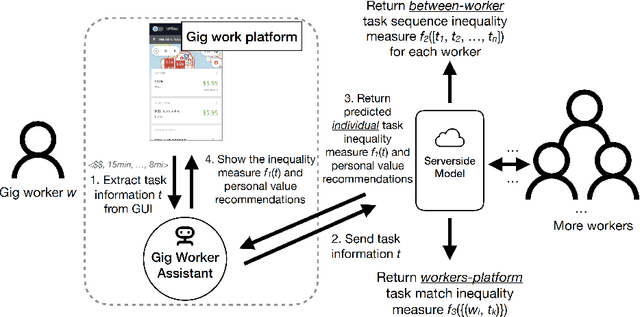

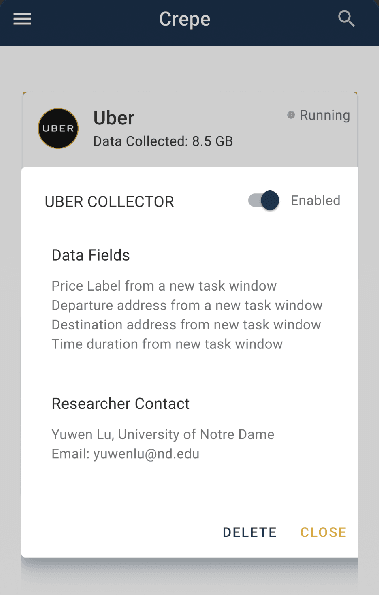

A Bottom-Up End-User Intelligent Assistant Approach to Empower Gig Workers against AI Inequality

Apr 29, 2022

Abstract:The growing inequality in gig work between workers and platforms has become a critical social issue as gig work plays an increasingly prominent role in the future of work. The AI inequality, caused by (1) the technology divide in who has access to AI technologies in gig work and (2) the data divide in who owns the data in gig work, leads to unfair working conditions, a growing pay gap, neglect of workers' diverse preferences, and workers' lack of trust in the platforms. In this position paper, we argue that a bottom-up approach that empowers individual workers to access AI-enabled work planning support and share data among a group of workers through a network of end-user-programmable intelligent assistants is a practical way to bridge AI inequality in gig work under the current paradigm of privately owned platforms. This position paper articulates a set of research challenges, potential approaches, and community engagement opportunities, seeking to start a dialogue on this important research topic in the interdisciplinary CHIWORK community.

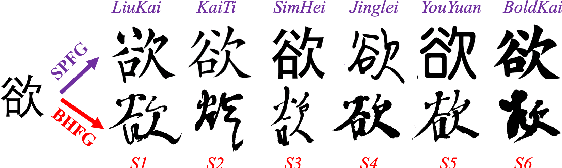

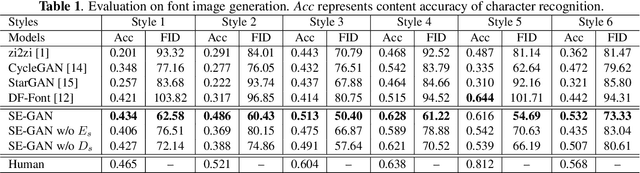

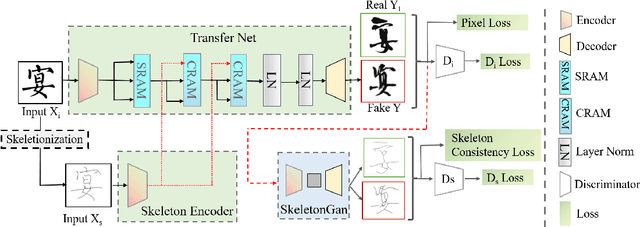

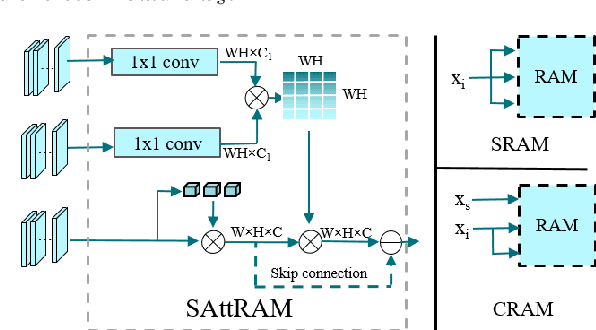

SE-GAN: Skeleton Enhanced GAN-based Model for Brush Handwriting Font Generation

Apr 22, 2022

Abstract:Previous works on font generation mainly focus on standard print fonts, where the character shape is stable and strokes are clearly separated. There is little research on brush handwriting font generation, which involves holistic structure changes and complex stroke transfer. To address this issue, we propose a novel GAN-based image translation model that integrates skeleton information. We first extract the skeleton from training images, then design an image encoder and a skeleton encoder to extract the corresponding features. A self-attentive refined attention module is devised to guide the model to learn distinctive features between different domains. A skeleton discriminator is used to first synthesize the skeleton image from the generated image with a pre-trained generator, then to judge its realness against the target one. We also contribute a large-scale brush handwriting font image dataset with six styles and 15,000 high-resolution images. Both quantitative and qualitative experimental results demonstrate the competitiveness of our proposed model.

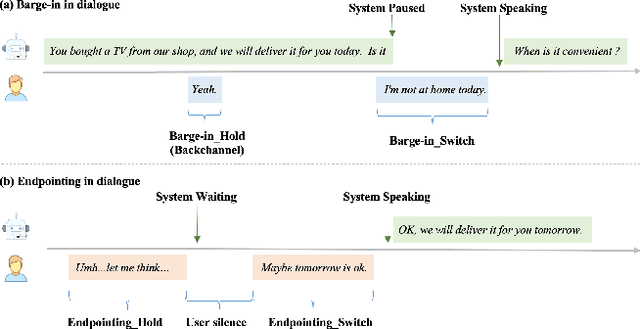

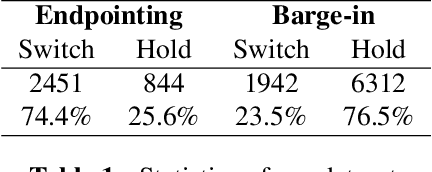

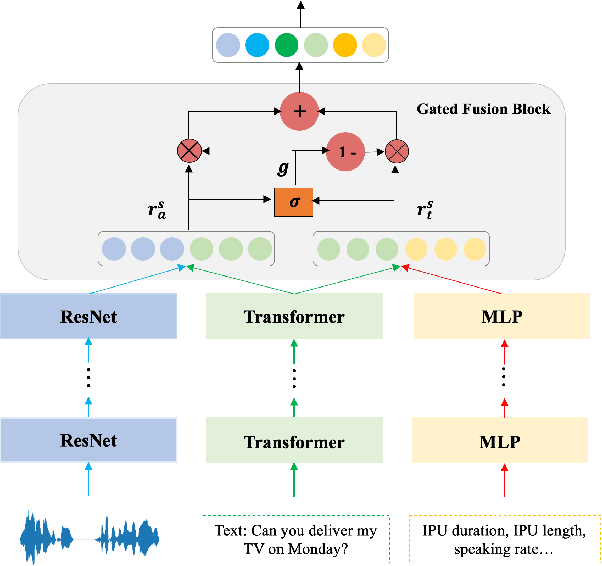

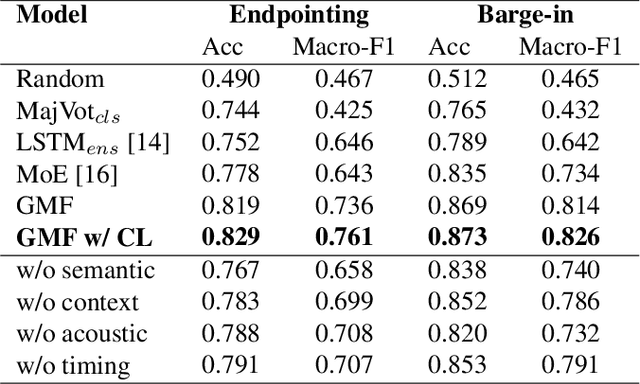

Gated Multimodal Fusion with Contrastive Learning for Turn-taking Prediction in Human-robot Dialogue

Apr 18, 2022

Abstract:Turn-taking, aiming to decide when the next speaker can start talking, is an essential component in building human-robot spoken dialogue systems. Previous studies indicate that multimodal cues can facilitate this challenging task. However, due to the paucity of public multimodal datasets, current methods are mostly limited to either utilizing unimodal features or simplistic multimodal ensemble models. Besides, the inherent class imbalance in real scenarios, e.g., a sentence ending with a short pause will mostly be regarded as the end of a turn, also poses a great challenge to the turn-taking decision. In this paper, we first collect a large-scale annotated corpus for turn-taking with over 5,000 real human-robot dialogues in speech and text modalities. Then, a novel gated multimodal fusion mechanism is devised to utilize various information seamlessly for turn-taking prediction. More importantly, to tackle the data imbalance issue, we design a simple yet effective data augmentation method to construct negative instances without supervision and apply contrastive learning to obtain better feature representations. Extensive experiments are conducted and the results demonstrate the superiority and competitiveness of our model over several state-of-the-art baselines.
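A gated fusion of two modalities is commonly written as a learned sigmoid gate that mixes the two feature vectors elementwise. The sketch below shows that general pattern only; it is an assumption-laden illustration, not the mechanism from the paper, and the names `gated_fusion`, `w_speech`, `w_text`, and `bias` are hypothetical stand-ins for learned parameters.

```python
import math


def sigmoid(x):
    """Logistic function mapping a real number to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(speech, text, w_speech, w_text, bias):
    """Mix speech and text features with a per-dimension sigmoid gate."""
    fused = []
    for s, t, ws, wt, b in zip(speech, text, w_speech, w_text, bias):
        gate = sigmoid(ws * s + wt * t + b)  # how much to trust the speech feature
        fused.append(gate * s + (1.0 - gate) * t)
    return fused


# With all-zero gate parameters the gate is 0.5, so fusion reduces to averaging.
fused = gated_fusion([2.0, 0.0], [4.0, 1.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0])
```

In a trained model the gate parameters would be learned so that, for each dimension, the more reliable modality receives the larger weight.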
